---
title: "Detection and coverage"
---

In Elementary, you can detect data issues by combining data validations (such as dbt tests and custom SQL tests) and anomaly detection monitors.

As you expand your coverage, it's crucial to balance coverage against meaningful detections. While it may seem attractive to implement extensive monitoring throughout your data infrastructure, this approach is often suboptimal. Excessive failures can lead to alert fatigue, which may cause your team to overlook significant issues. Such an approach also incurs unnecessary compute costs.

In this section, we cover the available tests in Elementary, recommended tests for common use cases, and how to use the data quality dimensions framework to improve coverage.

## Supported data tests and monitors

Elementary detection includes:

- Data tests - Validate an explicit expectation and fail if it is not met.
    - Example: validate there are no null values in a column.
- Anomaly detection monitors - Track a data quality metric over time and fail if the current value is anomalous compared to previous values and the trend.
    - Example: track the rate of null values in a column over time, and fail if there is a spike.
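
A minimal Python sketch can illustrate the difference between the two approaches. Everything in it is hypothetical and is not Elementary's implementation. That includes the function names, the made-up daily null rates, and the simplified z-score rule with a threshold of 3 standard deviations. The data test fails on any null value. The monitor fails only when the current null rate deviates sharply from its own history.

```python
from statistics import mean, stdev


def not_null_test(values):
    """Data test: pass (True) only if no value is None."""
    return all(v is not None for v in values)


def null_rate(values):
    """Fraction of values that are None."""
    return sum(v is None for v in values) / len(values)


def null_rate_monitor(history, current, sensitivity=3.0):
    """Anomaly monitor (hypothetical, simplified z-score rule): pass (True)
    unless the current null rate is more than `sensitivity` standard
    deviations away from the mean of the historical null rates."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        # Perfectly flat history: treat any change as anomalous.
        return current == mu
    return abs(current - mu) / sigma <= sensitivity


# Daily null rates from previous runs (made-up numbers).
history = [0.02, 0.03, 0.02, 0.025, 0.03, 0.02, 0.028]
today = [1, None, 3, 4, 5, 6, 7, 8, 9, 10]  # 1 null out of 10 rows

print(not_null_test(today))                          # False: a single null fails the test
print(null_rate_monitor(history, null_rate(today)))  # False: 0.10 is far above the ~0.025 baseline
```

Raising `sensitivity` makes the sketched monitor fail less often. This illustrates the trade-off between coverage and alert fatigue described at the start of this section.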

### Data tests

- dbt tests - Built-in dbt tests (`not_null`, `unique`, `accepted_values`, `relationships`).
- dbt packages - Any test from a dbt package. We recommend installing `dbt-utils` and `dbt-expectations`.
- Custom SQL tests - A custom query that passes if it returns no results and fails if it returns any rows.
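
A custom SQL test selects the rows that violate an expectation, so an empty result means the test passes. The following sketch emulates that convention with Python's built-in `sqlite3` module. The `orders` table, its data, and the `run_sql_test` helper are hypothetical.

```python
import sqlite3


def run_sql_test(conn, query):
    """Pass (True) if the query returns no rows, fail (False) otherwise."""
    failing_rows = conn.execute(query).fetchall()
    return len(failing_rows) == 0


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 50.0), (2, -10.0), (3, 20.0)],
)

# Hypothetical expectation: order amounts are never negative.
negative_amounts = "SELECT order_id, amount FROM orders WHERE amount < 0"
print(run_sql_test(conn, negative_amounts))  # False: order 2 violates the expectation
```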

### Anomaly detection monitors

Elementary offers two types of anomaly detection monitors:

- **Automated Monitors** - Out-of-the-box volume and freshness monitors that are activated automatically and query metadata only.
- **Opt-in anomaly detection tests** - Monitors that query raw data and require configuration.

<Check>
### Recommendations

- Install the `dbt-utils` and `dbt-expectations` packages in your dbt projects to expand the available tests.
- Refer to the [dbt test hub](https://www.elementary-data.com/dbt-test-hub) by Elementary to explore available tests by use case.
</Check>

## Fine-tuning automated monitors

As soon as you connect Elementary Cloud Platform to your data warehouse, a backfill process begins to collect historical metadata. On average, your automated monitors are operational within a few hours. By default, Elementary collects at least 21 days of historical metadata.

You can fine-tune the [**configuration**](https://docs.elementary-data.com/features/anomaly-detection/monitors-configuration) and [**provide feedback**](https://docs.elementary-data.com/features/anomaly-detection/monitors-feedback) to adjust the detection to your needs.

To learn how to interpret the results and which settings are available for each monitor, see:

- [Automated Freshness](https://docs.elementary-data.com/features/anomaly-detection/automated-freshness)
- [Automated Volume](https://docs.elementary-data.com/features/anomaly-detection/automated-volume)

## Common testing use cases

We have the following recommendations for testing different data assets:

### Data sources

To detect issues in source updates, you should monitor volume, freshness, and schema:

- Volume and freshness
    - Data updates - Elementary Cloud provides automated monitors for freshness and volume. **These are metadata monitors.**
    - Updates freshness vs. data freshness - The automated freshness monitor detects delays in **updates**. However, a table can be updated on time while the data itself is outdated.
    - Data freshness (advanced) - To validate the freshness of the data itself by relying on its actual timestamps, you can use:
        - Explicit threshold [freshness dbt tests](https://www.elementary-data.com/dbt-test-hub) such as `dbt_utils.recency`, or [dbt source freshness](https://docs.getdbt.com/docs/deploy/source-freshness).
        - Elementary `event_freshness_anomalies` to detect anomalies.
    - Data volume (advanced) - Although a table can be updated as expected, the data itself might still be imbalanced in terms of volume per specific segment. Several tests can monitor this:
        - Explicit [volume expectations](https://www.elementary-data.com/dbt-test-hub) such as `expect_table_row_count_to_be_between`.
        - Elementary `dimension_anomalies`, which counts rows grouped by a column or combination of columns and can detect drops or spikes in volume in specific subsets of the data.
- Schema changes
    - Automated schema monitors are coming soon:
        - These monitors will detect breaking changes to the schema only for columns being consumed based on lineage.
    - For now, we recommend defining schema tests on the sources consumed by downstream staging models.
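
Here is a minimal Python sketch illustrating the difference between updates freshness and data freshness. The function names, timestamps, and two-hour threshold are hypothetical. Neither function reflects how Elementary or dbt actually compute freshness. The sketch shows a table that passes an update-time check while its newest event is days old, which only a check on the data itself catches.

```python
from datetime import datetime, timedelta


def update_is_fresh(last_updated_at, now, max_delay):
    """Metadata-style check: was the table written to recently?"""
    return now - last_updated_at <= max_delay


def data_is_fresh(event_timestamps, now, max_delay):
    """Data-style check: is the newest event timestamp in the table recent?"""
    return now - max(event_timestamps) <= max_delay


now = datetime(2024, 6, 1, 12, 0)
last_updated_at = datetime(2024, 6, 1, 11, 30)  # the load ran 30 minutes ago...
event_timestamps = [                            # ...but it loaded old events
    datetime(2024, 5, 28, 9, 0),
    datetime(2024, 5, 29, 17, 0),
]

print(update_is_fresh(last_updated_at, now, timedelta(hours=2)))  # True
print(data_is_fresh(event_timestamps, now, timedelta(hours=2)))   # False
```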

Some validations on the data itself should be added to the source tables, to test early in the pipeline and detect when data arrives from the source with an issue.

- Low cardinality columns / strict set of values - If there are fields with a specific set of expected values, use `accepted_values`. If you also expect a consistent ratio between these values, use `dimension_anomalies` and group by this column.
- Business requirements - If you are aware of expectations specific to your business, try to enforce them early to detect issues at the source. Some examples: `expect_column_values_to_be_between`, `expect_column_values_to_be_increasing`, `expect_column_values_to_have_consistent_casing`.
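
The sketch below illustrates the two checks for a low cardinality column. The first is an `accepted_values`-style check for unexpected values. The second compares each value's share of rows between two periods. The platform-like values, the data, and the fixed 0.1 threshold are all hypothetical. The threshold is a simplification standing in for the anomaly detection that `dimension_anomalies` performs.

```python
from collections import Counter


def accepted_values_test(values, accepted):
    """Pass (True) only if every value is in the accepted set."""
    return set(values) <= set(accepted)


def value_ratios(values):
    """Share of rows for each distinct value of the column."""
    counts = Counter(values)
    return {value: count / len(values) for value, count in counts.items()}


def shifted_values(baseline, current, max_change=0.1):
    """Values whose share of rows moved by more than `max_change`
    (a fixed, hypothetical threshold) between two periods."""
    keys = set(baseline) | set(current)
    return sorted(
        key for key in keys
        if abs(current.get(key, 0.0) - baseline.get(key, 0.0)) > max_change
    )


accepted = ["web", "ios", "android"]
last_week = ["web"] * 60 + ["ios"] * 30 + ["android"] * 10
today = ["web"] * 85 + ["ios"] * 5 + ["android"] * 10

print(accepted_values_test(today, accepted))                         # True: no unexpected values
print(shifted_values(value_ratios(last_week), value_ratios(today)))  # ['ios', 'web']
```

Both checks matter here. Today's data contains only accepted values, yet the mix between `web` and `ios` has shifted sharply.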

<Check>
### Recommendations

- Add data freshness and volume validations for relevant source tables, on top of the automated monitors (advanced).
- Add schema tests for source tables.
</Check>

### Primary / foreign key columns in your transformation models

Tables should be covered with:

- Unique checks on primary key columns, to detect unintended duplication during data transformations.
- Not null checks on primary and foreign key columns, to detect missing values during data transformations.

For incremental tables, it's recommended to use a `where` clause in the tests and validate only recent data. This avoids running the tests on the full table, which is slow and costly for large datasets.
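
The following sketch approximates what `unique` and `not_null` checks look for, in plain SQL run through Python's `sqlite3` module. A recency filter narrows the tested rows the way a `where` clause would. The `orders` table, its columns, the `RECENT` filter, and the helper functions are hypothetical. The SQL that dbt actually compiles differs in detail. The filter and column names are interpolated with f-strings for brevity, which is only acceptable for trusted, hard-coded input.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, created_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10, "2024-01-05"),    # old duplicate primary key...
        (1, 10, "2024-01-05"),    # ...outside the recent window
        (2, 11, "2024-05-30"),
        (3, None, "2024-05-31"),  # recent row with a missing foreign key
        (4, 12, "2024-05-31"),
    ],
)

# Hypothetical filter: only rows from the 7 days before 2024-06-01.
RECENT = "created_at >= date('2024-06-01', '-7 days')"


def duplicate_keys(conn, where):
    """Primary key values that appear more than once among the filtered rows."""
    sql = f"""
        SELECT order_id FROM (SELECT * FROM orders WHERE {where})
        WHERE order_id IS NOT NULL
        GROUP BY order_id HAVING COUNT(*) > 1
    """
    return [row[0] for row in conn.execute(sql)]


def null_keys(conn, column, where):
    """Number of filtered rows where the given key column is NULL."""
    sql = f"SELECT COUNT(*) FROM (SELECT * FROM orders WHERE {where}) WHERE {column} IS NULL"
    return conn.execute(sql).fetchone()[0]


print(duplicate_keys(conn, RECENT))            # []: the duplicate is older than the window
print(null_keys(conn, "customer_id", RECENT))  # 1
print(duplicate_keys(conn, "1 = 1"))           # [1]: only an unfiltered run finds it
```

The last call shows the trade-off of filtering. The old duplicate is outside the seven-day window, so only an unfiltered run detects it.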

<Check>
#### Recommendations

- Add `unique` tests to primary key columns and `not_null` tests to primary and foreign key columns.
</Check>

### Public tables

As these are your data products, coverage here is highly important. Public tables should be covered with:

- Consistency with sources (based on aggregations or primary keys)
- Volume and freshness
- Unique and not null checks on primary keys
- Schema tests, to ensure the "API" to data consumers is not broken
- Business metrics / KPIs
    - Sum / max anomalies grouped by your critical dimensions or segments (for example, country or platform)
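
One way to check consistency with sources is to compare aggregates and primary keys between a source and the public table built from it. The sketch below does both with Python's `sqlite3` module. The `src_orders` and `fct_orders` tables and the helper functions are hypothetical. Table names are interpolated into the SQL, which is only acceptable for trusted input. In dbt, tests such as `expect_table_row_count_to_equal_other_table` and `expect_table_aggregation_to_equal_other_table`, listed under Consistency in this document, cover similar comparisons.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER, amount REAL);
    CREATE TABLE fct_orders (order_id INTEGER, amount REAL);
    INSERT INTO src_orders VALUES (1, 10.0), (2, 20.0), (3, 30.0);
    INSERT INTO fct_orders VALUES (1, 10.0), (2, 20.0);
""")


def compare_aggregates(conn, source, target):
    """Row count and total amount, as (source, target) pairs."""
    query = "SELECT COUNT(*), COALESCE(SUM(amount), 0) FROM {}"
    src = conn.execute(query.format(source)).fetchone()
    tgt = conn.execute(query.format(target)).fetchone()
    return {"row_count": (src[0], tgt[0]), "sum_amount": (src[1], tgt[1])}


def missing_keys(conn, source, target):
    """Primary keys present in the source but absent from the target."""
    sql = f"SELECT order_id FROM {source} EXCEPT SELECT order_id FROM {target}"
    return sorted(row[0] for row in conn.execute(sql))


print(compare_aggregates(conn, "src_orders", "fct_orders"))
# {'row_count': (3, 2), 'sum_amount': (60.0, 30.0)}
print(missing_keys(conn, "src_orders", "fct_orders"))  # [3]
```

The aggregate comparison detects that something is missing. The key comparison identifies which rows are missing.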

## Data quality dimensions framework

To ensure your detection and coverage have a solid baseline, we recommend applying the quality dimensions framework to your critical and public assets.

The quality dimensions framework divides data validation into six common dimensions:

- **Completeness**: No missing values, empty values, nulls, etc.
- **Uniqueness**: The data is unique, with no duplicates.
- **Freshness**: The data is up to date and within the expected SLAs.
- **Validity**: The data is in the correct format and structure.
- **Accuracy**: The data adheres to our business requirements and constraints.
- **Consistency**: The data is consistent from sources to targets, and from sources to where it is consumed.

Elementary has already categorized all the existing tests in the dbt ecosystem, including all Elementary anomaly detection monitors, into these quality dimensions. It automatically provides health scores per dimension and shows coverage gaps per dimension.

We highly recommend going to the relevant quality dimension and then filtering by a business domain tag to see the coverage gaps in that domain.

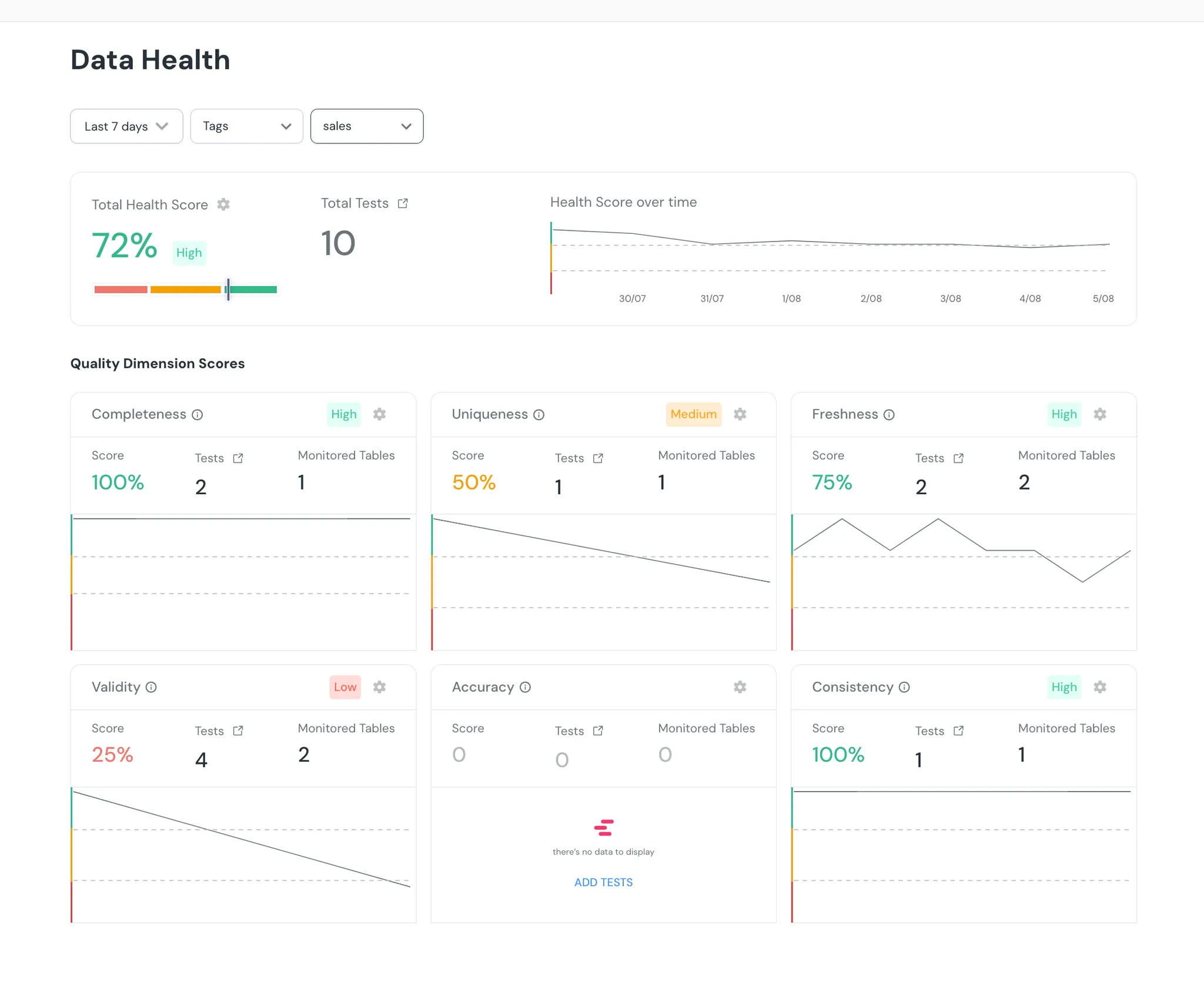

| 129 | +Example - |

| 130 | + |

| 131 | + |

| 132 | + |

| 133 | +In this example, you can see that accuracy tests are missing for our sales domain. This means we don't know if the data in our public-facing "sales" tables adheres to our business constraints. For example, if we have an e-commerce shop where no product has a price below $100 or above $1000, we can easily add a test to validate this. Implementing validations for the main constraints in this domain will allow us to get a quality score for the accuracy level of our data. |

| 134 | + |
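
The price rule from this example can be written as a query that returns the violating rows. This follows the custom SQL test convention, where an empty result means pass. The sketch runs the query with Python's `sqlite3` module against a hypothetical `products` table with made-up prices.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (product_id INTEGER, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [(1, 150.0), (2, 999.0), (3, 45.0), (4, 1200.0)],
)

# Rows that violate the business rule "every price is between $100 and $1000"
# (BETWEEN is inclusive). The check passes only if this returns no rows.
violations = conn.execute(
    "SELECT product_id, price FROM products "
    "WHERE NOT (price BETWEEN 100 AND 1000) ORDER BY product_id"
).fetchall()
print(violations)  # [(3, 45.0), (4, 1200.0)]
```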

NOTE: The `Test Coverage` page in Elementary allows adding any dbt test from the ecosystem, Elementary anomaly detection monitors, and custom SQL tests. We are working on making it easier to add tests by creating a test catalog organized by quality dimensions and common use cases.

Examples of tests in each quality dimension:

- **Completeness**:
    - `not_null`, null count, null percent, missing values, empty values, column anomalies on null count and null percent, etc.
- **Uniqueness**:
    - `unique`, `expect_column_values_to_be_unique`, `expect_column_unique_value_count_to_be_between`, `expect_compound_columns_to_be_unique`
- **Freshness**:
    - Elementary automated freshness monitor, dbt source freshness, `dbt_utils.recency`, `expect_grouped_row_values_to_have_recent_data`
- **Validity**:
    - `expect_column_values_to_match_regex`, `expect_column_min_to_be_between`, `expect_column_max_to_be_between`, `expect_column_value_lengths_to_be_between`, column anomalies on min, max, and string lengths
- **Accuracy**:
    - `expression_is_true`, custom SQL
- **Consistency**:
    - `relationships`, `expect_table_row_count_to_equal_other_table`, `expect_table_aggregation_to_equal_other_table`
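
The idea behind coverage gaps per dimension can be sketched in Python. Map each test to a dimension, collect the dimensions covered by the tests in a domain, and report the ones left uncovered. This is not how Elementary computes coverage. The mapping is a small subset based on the examples above, and the tables in `sales_tests` are hypothetical.

```python
# Partial, illustrative mapping of test names to quality dimensions.
TEST_DIMENSIONS = {
    "not_null": "Completeness",
    "unique": "Uniqueness",
    "expect_compound_columns_to_be_unique": "Uniqueness",
    "dbt_utils.recency": "Freshness",
    "expect_column_values_to_match_regex": "Validity",
    "expression_is_true": "Accuracy",
    "relationships": "Consistency",
    "expect_table_row_count_to_equal_other_table": "Consistency",
}

ALL_DIMENSIONS = ["Completeness", "Uniqueness", "Freshness", "Validity", "Accuracy", "Consistency"]


def coverage_gaps(tests_by_table):
    """Dimensions not covered by any test on any table in the group (e.g. one domain)."""
    covered = {
        TEST_DIMENSIONS[test]
        for tests in tests_by_table.values()
        for test in tests
        if test in TEST_DIMENSIONS  # unknown tests are ignored in this sketch
    }
    return [dim for dim in ALL_DIMENSIONS if dim not in covered]


# Hypothetical tests configured on tables tagged with a "sales" domain.
sales_tests = {
    "fct_sales": ["not_null", "unique", "dbt_utils.recency", "relationships"],
    "dim_products": ["expect_column_values_to_match_regex"],
}
print(coverage_gaps(sales_tests))  # ['Accuracy']
```

With these tests, only Accuracy is uncovered, which matches the sales domain example earlier in this section.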
