| 1 | +# Human Activity Recognition Based on ESP-DL |

| 2 | + |

| 3 | +This is a human activity recognition model built with the [ESP-DL](https://github.com/espressif/esp-dl) framework and trained on the [Human Activity Recognition with Smartphones Dataset](https://www.kaggle.com/datasets/uciml/human-activity-recognition-with-smartphones). The dataset was collected from 30 volunteers (aged 19-48) performing six activities (WALKING, WALKING_UPSTAIRS, WALKING_DOWNSTAIRS, SITTING, STANDING, LAYING) while carrying a waist-mounted smartphone (Samsung Galaxy S II). Data were recorded at 50 Hz using the device's accelerometer and gyroscope. The recordings were labeled manually from video, and the dataset is split into training (70%) and test (30%) sets. |

| 4 | + |

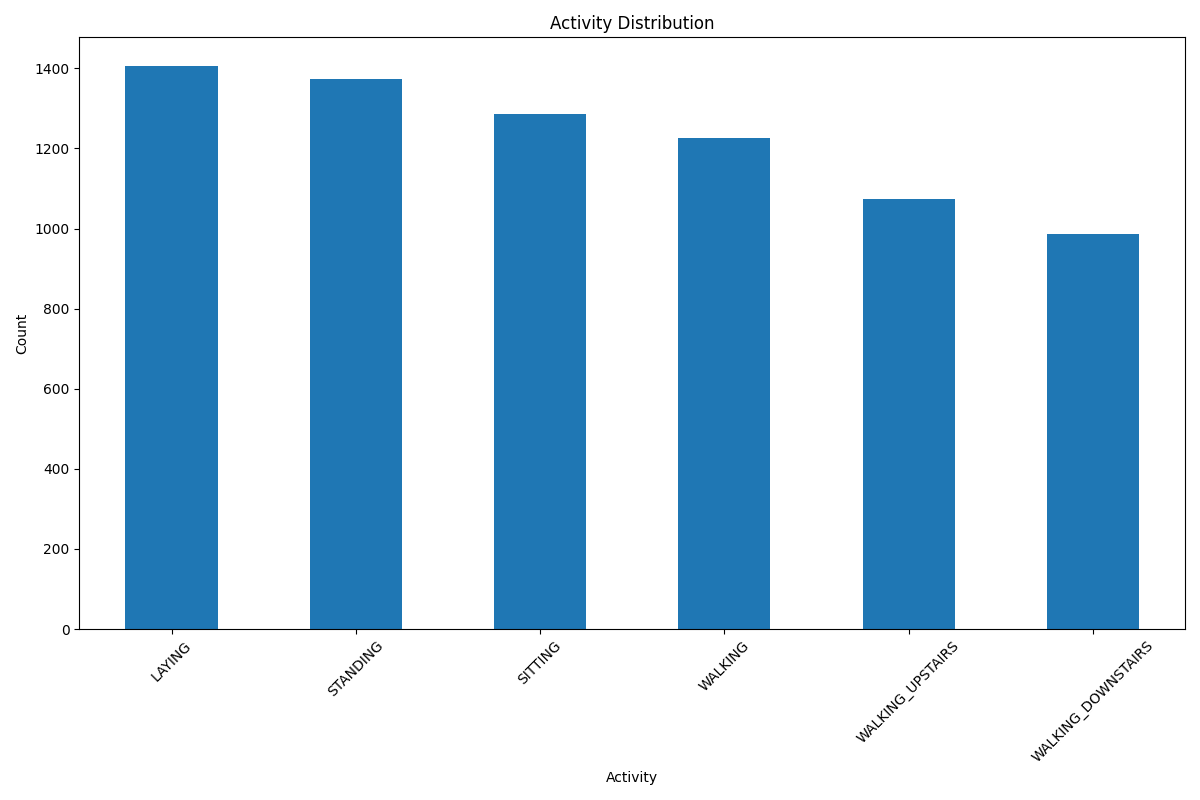

| 5 | +Please note that the data in the [Human Activity Recognition with Smartphones Dataset](https://www.kaggle.com/datasets/uciml/human-activity-recognition-with-smartphones) is not raw data, but preprocessed feature vectors with a length of 561. The class distribution in the training set is as follows: |

| 6 | + |

| 7 | + |

| 8 | + |

| 9 | +In this example, we demonstrate how to train the model, quantize it, and deploy it to an ESP32-series chip (the ESP32-P4 is used here). |

| 10 | + |

| 11 | +## How to deploy the model |

| 12 | + |

| 13 | +### Build and train model |

| 14 | + |

| 15 | +This example builds the model with [PyTorch](https://pytorch.org/). The architecture is a three-layer fully connected network: |

| 16 | + |

```python
import torch.nn as nn


class HARModel(nn.Module):
    """Three-layer fully connected classifier for the 561-feature HAR vectors."""

    def __init__(self):
        super(HARModel, self).__init__()

        self.model = nn.Sequential(
            nn.Linear(561, 256),  # 561 input features
            nn.ReLU(),
            nn.Linear(256, 128),
            nn.ReLU(),
            nn.Linear(128, 6)     # 6 activity classes
        )

    def forward(self, x):
        # Returns raw logits; CrossEntropyLoss applies the softmax during training.
        output = self.model(x)
        return output
```

| 34 | +> **Note:** In the model design, 561 represents the number of input features, while 6 denotes the number of output classes, corresponding to the different activity categories in the human activity recognition task. |

| 35 | + |

| 36 | +Next, the data needs to be preprocessed: |

| 37 | + |

```python
from torch.utils.data import DataLoader, TensorDataset

# X_train / X_test are float tensors of shape [N, 561];
# y_train / y_test hold the integer class labels.
# Standardize every feature with statistics from the training set only
# and reuse the same statistics for the test set.
mean = X_train.mean(dim=0, keepdim=True)
std = X_train.std(dim=0, keepdim=True)
X_train = (X_train - mean) / std
X_test = (X_test - mean) / std

train_dataset = TensorDataset(X_train, y_train)
test_dataset = TensorDataset(X_test, y_test)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)
```

| 50 | + |

| 51 | +After that, we train the model using the Adam optimizer and the cross-entropy loss function: |

| 52 | + |

```python
import torch

# DEVICE is assumed to be defined beforehand ("cuda" or "cpu").
model = HARModel().to(DEVICE)

criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

num_epochs = 100

for epoch in range(num_epochs):
    # Training pass over the whole training set
    model.train()
    running_loss = 0.0
    for inputs, labels in train_loader:
        inputs, labels = inputs.to(DEVICE), labels.to(DEVICE)
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        running_loss += loss.item()

    avg_train_loss = running_loss / len(train_loader)
    print(f"Epoch [{epoch + 1}/{num_epochs}], Loss: {avg_train_loss:.4f}")

    # Evaluation pass on the test set after each epoch
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for test_inputs, test_labels in test_loader:
            test_inputs, test_labels = test_inputs.to(DEVICE), test_labels.to(DEVICE)
            outputs = model(test_inputs)
            _, predicted = torch.max(outputs.data, 1)
            total += test_labels.size(0)
            correct += (predicted == test_labels).sum().item()

    accuracy = 100 * correct / total
    print(f"Accuracy of the model on the test data: {accuracy:.2f}%")
```

| 91 | + |

| 92 | +### Model Quantization and Deployment |

| 93 | + |

| 94 | +In order to convert the model to the format required by ``esp-dl``, the [esp-ppq](https://github.com/espressif/esp-ppq) conversion tool needs to be installed: |

| 95 | + |

| 96 | +```shell |

| 97 | +pip uninstall ppq |

| 98 | +pip install git+https://github.com/espressif/esp-ppq.git |

| 99 | +``` |

| 100 | + |

| 101 | +Next, use the ``esp-ppq`` tool to quantize and convert the model. The following script shows the conversion process: |

| 102 | + |

```python
import torch
from torch.utils.data import DataLoader, Dataset

# Assumption: esp-ppq exposes this function under ppq.api; adjust if your version differs.
from ppq.api import espdl_quantize_torch

# HARModel and load_and_preprocess_data are defined in the training script of this example.

BATCH_SIZE = 1
DEVICE = "cpu"
TARGET = "esp32p4"
NUM_OF_BITS = 8
input_shape = [561]
ESPDL_MODEL_PATH = "./p4/har.espdl"


class HARFeature(Dataset):
    """Calibration dataset that yields feature vectors without labels."""

    def __init__(self, features):
        self.features = features

    def __len__(self):
        return len(self.features)

    def __getitem__(self, idx):
        return self.features[idx]


def collate_fn2(batch):
    # Move each calibration batch to the target device
    return batch.to(DEVICE)


if __name__ == '__main__':
    x_test, y_test = load_and_preprocess_data(
        "../dataset/train.csv",
        "../dataset/test.csv")

    # A single sample, exported with the model as a reference test value
    test_input = x_test[0]
    test_input = test_input.unsqueeze(0)

    model = HARModel()
    model.load_state_dict(torch.load("final_model.pth", map_location="cpu"))
    model.eval()

    har_dataset = HARFeature(x_test)
    har_dataloader = DataLoader(har_dataset, batch_size=BATCH_SIZE, shuffle=False)

    quant_ppq_graph = espdl_quantize_torch(
        model=model,
        espdl_export_file=ESPDL_MODEL_PATH,
        calib_dataloader=har_dataloader,
        calib_steps=8,
        input_shape=[1] + input_shape,
        inputs=[test_input],
        target=TARGET,
        num_of_bits=NUM_OF_BITS,
        collate_fn=collate_fn2,
        device=DEVICE,
        error_report=True,
        skip_export=False,
        export_test_values=True,
        verbose=1,
        dispatching_override=None
    )
```

| 157 | + |

| 158 | +During the conversion process, we applied 8-bit quantization to reduce the model's storage requirements and improve inference speed. |
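As a rough check on the storage savings, the parameter count of the three linear layers can be worked out by hand. The sketch below assumes one byte per parameter after quantization, which ignores file-format overhead and the possibility that biases are stored at a higher precision, so treat the int8 figure as an approximation only.

```python
# Layer shapes taken from HARModel: (in_features, out_features).
LAYERS = [(561, 256), (256, 128), (128, 6)]


def count_parameters(layers):
    """Return the total number of weights and biases in a stack of linear layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in layers)


total_params = count_parameters(LAYERS)
fp32_bytes = total_params * 4  # float32 uses 4 bytes per parameter
int8_bytes = total_params * 1  # assumption: 1 byte per parameter after quantization

print(f"parameters: {total_params}")
print(f"float32: {fp32_bytes / 1024:.1f} KiB, int8 (approx.): {int8_bytes / 1024:.1f} KiB")
```

Under these assumptions the quantized parameters take about a quarter of the space of the float32 ones.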

| 159 | + |

| 160 | +The output of the conversion process is as follows: |

| 161 | + |

| 162 | +```shell |

| 163 | +[INFO][ESPDL][2025-02-10 20:07:01]: Calibration dataset samples: 2947, len(Calibrate iter): 2947 |

| 164 | +[20:07:01] PPQ Quantize Simplify Pass Running ... Finished. |

| 165 | +[20:07:01] PPQ Quantization Fusion Pass Running ... Finished. |

| 166 | +[20:07:01] PPQ Parameter Quantization Pass Running ... Finished. |

| 167 | +Calibration Progress(Phase 1): 100%|██████████| 8/8 [00:00<00:00, 615.37it/s] |

| 168 | +Calibration Progress(Phase 2): 100%|██████████| 8/8 [00:00<00:00, 799.98it/s] |

| 169 | +Finished. |

| 170 | +[20:07:01] PPQ Passive Parameter Quantization Running ... Finished. |

| 171 | +[20:07:01] PPQ Quantization Alignment Pass Running ... Finished. |

| 172 | +[INFO][ESPDL][2025-02-10 20:07:01]: --------- Network Snapshot --------- |

| 173 | +Num of Op: [5] |

| 174 | +Num of Quantized Op: [5] |

| 175 | +Num of Variable: [12] |

| 176 | +Num of Quantized Var: [12] |

| 177 | +------- Quantization Snapshot ------ |

| 178 | +Num of Quant Config: [16] |

| 179 | +ACTIVATED: [7] |

| 180 | +OVERLAPPED: [6] |

| 181 | +PASSIVE: [3] |

| 182 | + |

| 183 | +[INFO][ESPDL][2025-02-10 20:07:01]: Network Quantization Finished. |

| 184 | +Analysing Graphwise Quantization Error(Phrase 1):: 100%|██████████| 8/8 [00:00<00:00, 235.29it/s] |

| 185 | +Analysing Graphwise Quantization Error(Phrase 2):: 100%|██████████| 8/8 [00:00<00:00, 166.67it/s] |

| 186 | +Analysing Layerwise quantization error:: 0%| | 0/3 [00:00<?, ?it/s]Layer | NOISE:SIGNAL POWER RATIO |

| 187 | +/model/model.0/Gemm: | ████████████████████ | 0.012% |

| 188 | +/model/model.2/Gemm: | ███████ | 0.009% |

| 189 | +/model/model.4/Gemm: | | 0.007% |

| 190 | +Analysing Layerwise quantization error:: 100%|██████████| 3/3 [00:00<00:00, 88.23it/s] |

| 191 | +Layer | NOISE:SIGNAL POWER RATIO |

| 192 | +/model/model.4/Gemm: | ████████████████████ | 0.003% |

| 193 | +/model/model.0/Gemm: | █████████████████ | 0.003% |

| 194 | +/model/model.2/Gemm: | | 0.000% |

| 195 | + |

| 196 | +Process finished with exit code 0 |

| 197 | +``` |
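The error report above lists a noise-to-signal power ratio per layer. A minimal sketch of how such a ratio can be computed is shown below, assuming it is defined as the power of the quantization error divided by the power of the floating-point reference. The sample values are hypothetical, and the exact computation inside esp-ppq may differ.

```python
def noise_to_signal_ratio(reference, quantized):
    """Noise power divided by signal power for two equally long sequences."""
    if len(reference) != len(quantized):
        raise ValueError("sequences must have the same length")
    noise_power = sum((q - r) ** 2 for r, q in zip(reference, quantized))
    signal_power = sum(r ** 2 for r in reference)
    if signal_power == 0:
        raise ValueError("reference signal has zero power")
    return noise_power / signal_power


# Hypothetical layer outputs: float reference vs. the same values after quantization.
reference = [1.00, -2.00, 0.50, 4.00]
quantized = [1.00, -2.00, 0.50, 3.75]

ratio = noise_to_signal_ratio(reference, quantized)
print(f"{ratio * 100:.3f}%")
```

A ratio close to zero, as in the report above, suggests that quantization changed the layer outputs very little.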

| 198 | + |

| 199 | +Please note that esp-ppq supports adding test data during the export of the espdl model. You can place the data in a list and pass it to the ``inputs`` parameter of ``espdl_quantize_torch``. This is important for troubleshooting model inference issues. |

| 200 | + |

| 201 | +You can check the model's inference results in the exported info file: |

| 202 | + |

| 203 | +```shell |

| 204 | +test outputs value: |

| 205 | +%11, shape: [1, 6], exponents: [-2], |

| 206 | +value: array([-32, -41, 82, -54, -51, -51], dtype=int8) |

| 207 | +``` |
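The int8 values and the exponent in the info file can be turned back into approximate logits. The sketch below assumes the usual power-of-two convention, where the real value is the integer multiplied by 2 raised to the exponent, and it assumes that class indices follow the alphabetical order of the activity names. Neither assumption is stated in the info file, so verify both against your own training script.

```python
# Raw int8 output and exponent copied from the info file above.
raw_output = [-32, -41, 82, -54, -51, -51]
exponent = -2

# Assumption: class indices follow the alphabetical order of the activity names.
LABELS = ["LAYING", "SITTING", "STANDING", "WALKING",
          "WALKING_DOWNSTAIRS", "WALKING_UPSTAIRS"]


def dequantize(values, exponent):
    """Convert quantized integers to floats: real = integer * 2 ** exponent."""
    return [v * 2.0 ** exponent for v in values]


logits = dequantize(raw_output, exponent)
# The predicted class is the index of the largest logit.
predicted = max(range(len(logits)), key=logits.__getitem__)

print(logits)
print(LABELS[predicted])
```

With the assumed label order, the largest logit falls on the class that matches the ground truth reported for sample 0 in the example output section.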

| 208 | + |

| 209 | + |

| 210 | +### Load model in ESP Platform |

| 211 | + |

| 212 | +You can load models from ``rodata``, ``partition``, or ``sdcard``. In this example, the ``partition`` method is used; for more details, please refer to [how_to_load_model](https://github.com/espressif/esp-dl/blob/master/docs/en/tutorials/how_to_load_model.rst). |

| 213 | + |

| 214 | +Additionally, to ensure the model outputs the expected results, the input data on the device must go through the same preprocessing that was used for inference on the PC, and the model's output must be parsed into a class label. You can refer to the data preprocessing and post-processing steps in this example. |
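The device-side preprocessing can be prototyped on the PC first. The sketch below standardizes a feature vector with the training mean and standard deviation and then quantizes it to int8 with a power-of-two scale. The three-element vectors and the input exponent are hypothetical (the real model takes 561 features, and the real exponent comes from the exported model), and Python's `round` may not match the rounding mode used on the device.

```python
def standardize(features, mean, std):
    """Apply (x - mean) / std per feature, using training-set statistics."""
    return [(x - m) / s for x, m, s in zip(features, mean, std)]


def quantize_int8(values, exponent):
    """Quantize floats to int8 with scale 2 ** exponent, saturating at the int8 limits."""
    scale = 2.0 ** exponent
    return [max(-128, min(127, round(v / scale))) for v in values]


# Hypothetical 3-feature example; the real model expects 561 features.
features = [1.5, -0.5, 11.0]
train_mean = [1.0, 0.0, 1.0]
train_std = [0.5, 0.25, 1.0]
input_exponent = -4  # hypothetical; read the real value from the exported model

normalized = standardize(features, train_mean, train_std)
quantized = quantize_int8(normalized, input_exponent)

print(normalized)
print(quantized)
```

The last feature shows saturation: its scaled value exceeds the int8 range and is clipped to 127.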

| 215 | + |

| 216 | +## Example output |

| 217 | + |

| 218 | +In this example, we selected the 0th, 100th, and 500th samples from x_test as test data for the ESP-DL model. The corresponding ground truth labels are ``STANDING``, ``WALKING``, and ``SITTING``, respectively. |

| 219 | + |

| 220 | +```shell |

| 221 | +I (25) boot: ESP-IDF v5.5-dev-1610-g9cabe79385 2nd stage bootloader |

| 222 | +I (26) boot: compile time Feb 10 2025 16:00:45 |

| 223 | +I (26) boot: Multicore bootloader |

| 224 | +I (29) boot: chip revision: v0.2 |

| 225 | +I (30) boot: efuse block revision: v0.1 |

| 226 | +I (34) qio_mode: Enabling default flash chip QIO |

| 227 | +I (38) boot.esp32p4: SPI Speed : 80MHz |

| 228 | +I (42) boot.esp32p4: SPI Mode : QIO |

| 229 | +I (46) boot.esp32p4: SPI Flash Size : 16MB |

| 230 | +I (50) boot: Enabling RNG early entropy source... |

| 231 | +I (54) boot: Partition Table: |

| 232 | +I (57) boot: ## Label Usage Type ST Offset Length |

| 233 | +I (63) boot: 0 factory factory app 00 00 00010000 007d0000 |

| 234 | +I (70) boot: 1 model Unknown data 01 82 007e0000 007b7000 |

| 235 | +I (77) boot: End of partition table |

| 236 | +I (80) esp_image: segment 0: paddr=00010020 vaddr=480f0020 size=16730h ( 91952) map |

| 237 | +I (102) esp_image: segment 1: paddr=00026758 vaddr=30100000 size=00068h ( 104) load |

| 238 | +I (104) esp_image: segment 2: paddr=000267c8 vaddr=4ff00000 size=09850h ( 38992) load |

| 239 | +I (114) esp_image: segment 3: paddr=00030020 vaddr=48000020 size=e16e4h (923364) map |

| 240 | +I (257) esp_image: segment 4: paddr=0011170c vaddr=4ff09850 size=094c4h ( 38084) load |

| 241 | +I (266) esp_image: segment 5: paddr=0011abd8 vaddr=4ff12d80 size=0337ch ( 13180) load |

| 242 | +I (274) boot: Loaded app from partition at offset 0x10000 |

| 243 | +I (274) boot: Disabling RNG early entropy source... |

| 244 | +I (284) hex_psram: vendor id : 0x0d (AP) |

| 245 | +I (284) hex_psram: Latency : 0x01 (Fixed) |

| 246 | +I (285) hex_psram: DriveStr. : 0x00 (25 Ohm) |

| 247 | +I (285) hex_psram: dev id : 0x03 (generation 4) |

| 248 | +I (290) hex_psram: density : 0x07 (256 Mbit) |

| 249 | +I (295) hex_psram: good-die : 0x06 (Pass) |

| 250 | +I (299) hex_psram: SRF : 0x02 (Slow Refresh) |

| 251 | +I (303) hex_psram: BurstType : 0x00 ( Wrap) |

| 252 | +I (308) hex_psram: BurstLen : 0x03 (2048 Byte) |

| 253 | +I (312) hex_psram: BitMode : 0x01 (X16 Mode) |

| 254 | +I (316) hex_psram: Readlatency : 0x04 (14 cycles@Fixed) |

| 255 | +I (321) hex_psram: DriveStrength: 0x00 (1/1) |

| 256 | +I (326) MSPI DQS: tuning success, best phase id is 2 |

| 257 | +I (508) MSPI DQS: tuning success, best delayline id is 11 |

| 258 | +I esp_psram: Found 32MB PSRAM device |

| 259 | +I esp_psram: Speed: 200MHz |

| 260 | +I (515) mmu_psram: .rodata xip on psram |

| 261 | +I (560) mmu_psram: .text xip on psram |

| 262 | +I (561) hex_psram: psram CS IO is dedicated |

| 263 | +I (561) cpu_start: Multicore app |

| 264 | +I (1054) esp_psram: SPI SRAM memory test OK |

| 265 | +I (1064) cpu_start: Pro cpu start user code |

| 266 | +I (1064) cpu_start: cpu freq: 360000000 Hz |

| 267 | +I (1064) app_init: Application information: |

| 268 | +I (1064) app_init: Project name: human_activity_recognition |

| 269 | +I (1070) app_init: App version: ca0d8a28-dirty |

| 270 | +I (1074) app_init: Compile time: Feb 10 2025 20:12:38 |

| 271 | +I (1079) app_init: ELF file SHA256: 257da75e2... |

| 272 | +I (1084) app_init: ESP-IDF: v5.5-dev-1610-g9cabe79385 |

| 273 | +I (1089) efuse_init: Min chip rev: v0.1 |

| 274 | +I (1093) efuse_init: Max chip rev: v1.99 |

| 275 | +I (1097) efuse_init: Chip rev: v0.2 |

| 276 | +I (1101) heap_init: Initializing. RAM available for dynamic allocation: |

| 277 | +I (1108) heap_init: At 4FF192E0 len 00021CE0 (135 KiB): RAM |

| 278 | +I (1113) heap_init: At 4FF3AFC0 len 00004BF0 (18 KiB): RAM |

| 279 | +I (1118) heap_init: At 4FF40000 len 00040000 (256 KiB): RAM |

| 280 | +I (1123) heap_init: At 30100068 len 00001F98 (7 KiB): TCM |

| 281 | +I (1129) esp_psram: Adding pool of 31552K of PSRAM memory to heap allocator |

| 282 | +I (1135) spi_flash: detected chip: generic |

| 283 | +I (1139) spi_flash: flash io: qio |

| 284 | +I (1143) main_task: Started on CPU0 |

| 285 | +I (1179) esp_psram: Reserving pool of 32K of internal memory for DMA/internal allocations |

| 286 | +I (1179) main_task: Calling app_main() |

| 287 | +I (1180) FbsLoader: The storage free size is 65536 KB |

| 288 | +I (1184) FbsLoader: The partition size is 7900 KB |

| 289 | +I (1189) dl::Model: model:main_graph, version:0 |

| 290 | + |

| 291 | +I (1193) dl::Model: /model/model.0/Gemm: Gemm |

| 292 | +I (1203) dl::Model: /model/model.2/Gemm: Gemm |

| 293 | +I (1204) dl::Model: /model/model.4/Gemm: Gemm |

| 294 | +I (1206) MemoryManagerGreedy: Maximum memory size: 832 |

| 295 | + |

| 296 | +I (343) HAR: Test case 0: Predict result: STANDING |

| 297 | +I (346) HAR: Test case 1: Predict result: WALKING |

| 298 | +I (349) HAR: Test case 2: Predict result: SITTING |

| 299 | +I (354) main_task: Returned from app_main() |

| 300 | +``` |
