Wanting to get predictions from spacy evaluate #10736

Replies: 2 comments

My working but terrible solution is to delete the docs whose lengths don't match, since they are only a subset. Alternatively, you could go doc by doc and merge tokens i and i+1 wherever they don't match the original, check again, and repeat, but I can't find an elegant way of doing this.
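Instead of repeatedly merging neighbouring tokens, the two tokenizations can be aligned in one pass using character offsets. Below is a minimal pure-Python sketch. It assumes both token lists come from the same raw text and that every token appears in that text verbatim. `token_offsets` and `align` are hypothetical helper names, not spaCy APIs.

```python
from typing import List, Tuple

Offsets = List[Tuple[int, int]]


def token_offsets(text: str, words: List[str]) -> Offsets:
    """Find the (start, end) character offsets of each word, scanning left to right."""
    offsets = []
    pos = 0
    for word in words:
        start = text.index(word, pos)  # raises ValueError if the word is not in the text
        end = start + len(word)
        offsets.append((start, end))
        pos = end
    return offsets


def align(a: Offsets, b: Offsets) -> List[List[int]]:
    """For each token in `a`, list the indices of the tokens in `b` that overlap it."""
    return [
        [j for j, (b_start, b_end) in enumerate(b) if b_start < a_end and a_start < b_end]
        for a_start, a_end in a
    ]


if __name__ == "__main__":
    text = "I'm here"
    original = token_offsets(text, ["I'm", "here"])
    spacy_like = token_offsets(text, ["I", "'m", "here"])
    print(align(original, spacy_like))
```

For a spaCy `Doc`, the offsets can be read directly as `(t.idx, t.idx + len(t.text))` for each token `t`. spaCy also provides `spacy.training.Alignment.from_strings` for the same job. The overlap test here is quadratic, which is fine for sentence-length docs.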

It sounds like, to get your confusion matrix, you want to take the output of the NER model and apply it to your original tokenization. Is that right? If so, I would load your training data, run the trained model on the raw text of the training data, and then map the entities back onto the original tokenization using character indices. This is a bit of a pain, but it seems like the best you can do given your tokenization issues. You could also use the same approach to remap your training data to fit spaCy's tokenization and then train on that. That way you'd have consistent tokenization, and it should be easy to collect data for a confusion matrix.
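The character-index mapping suggested above could look roughly like the sketch below. `spans_to_bio` is a hypothetical helper, not a spaCy API. It takes the character offsets of the original tokens and a list of predicted `(start_char, end_char, label)` entity spans, and returns BIO tags on the original tokenization. Any token that overlaps an entity gets tagged, which is a lenient choice for cases where the token boundaries disagree.

```python
from typing import List, Tuple


def spans_to_bio(
    offsets: List[Tuple[int, int]],
    spans: List[Tuple[int, int, str]],
) -> List[str]:
    """Project character-level entity spans onto tokens as BIO tags."""
    tags = ["O"] * len(offsets)
    for ent_start, ent_end, label in spans:
        # Tokens that overlap the entity by at least one character.
        inside = [
            i for i, (start, end) in enumerate(offsets)
            if start < ent_end and ent_start < end
        ]
        for k, i in enumerate(inside):
            # Later spans overwrite earlier ones if predicted entities overlap.
            tags[i] = ("B-" if k == 0 else "I-") + label
    return tags


if __name__ == "__main__":
    # "Visit New York today" tokenized the original way.
    original_offsets = [(0, 5), (6, 9), (10, 14), (15, 20)]
    predicted = [(6, 14, "GPE")]
    print(spans_to_bio(original_offsets, predicted))
```

With a spaCy prediction, the spans would come from `[(e.start_char, e.end_char, e.label_) for e in doc.ents]`.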

Hi,

I am trying to implement a confusion matrix for NER predictions. I'm not sure why this isn't a feature already, although the other metrics are quite nice.
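Once the gold and predicted labels sit on the same tokens, a token-level confusion matrix is just a count of (gold, predicted) label pairs. The sketch below assumes one entity type, or "O", per token. `confusion_counts` and `as_table` are hypothetical names. It deliberately refuses to compare sequences of different lengths, which is exactly the mismatch described below.

```python
from collections import Counter
from typing import List, Sequence


def confusion_counts(gold: Sequence[str], pred: Sequence[str]) -> Counter:
    """Count (gold_label, predicted_label) pairs over token-aligned sequences."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must be aligned token by token")
    return Counter(zip(gold, pred))


def as_table(counts: Counter, labels: List[str]) -> List[List[int]]:
    """Rows are gold labels, columns are predicted labels, both in `labels` order."""
    return [[counts[(g, p)] for p in labels] for g in labels]


if __name__ == "__main__":
    gold = ["O", "PER", "PER", "LOC"]
    pred = ["O", "PER", "O", "LOC"]
    labels = sorted(set(gold) | set(pred))
    for label, row in zip(labels, as_table(confusion_counts(gold, pred), labels)):
        print(f"{label:>4}", row)
```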

I saw that you can get rendered output examples from the evaluation call. Why can't predictions also be output in another format that can be used externally? Does this exist already?

`!python -m spacy evaluate $modelBestFolder $TestFolder --output resultsLocation --gpu-id 0 --displacy-path $displacyFolder`

So far I have tried pulling the docs out of the .spacy DocBin and feeding them into the same nlp object that I trained and evaluated with. However, tokenisation differences between my validation docs (from the .spacy file) and the predicted docs mean the lengths don't match, so I can't compare the ents.

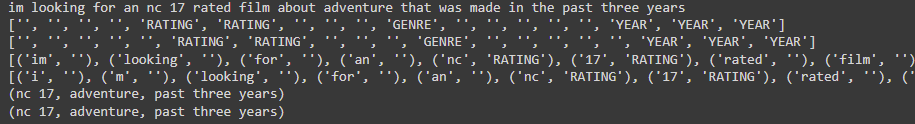

e.g. it splits off an 's' at the end of a word, splits on apostrophes, and splits between "I" and "m", like in the example below:

I tried freezing the tok2vec, with no change.

I tried selecting just the NER pipe with `ner = nlp.get_pipe("ner")` and then calling `ner(docs)`, but that doesn't accept a list of docs.
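A pipeline component's `__call__` takes a single `Doc`. For a list of docs, `Pipe`-based components (such as `tok2vec` and `ner`) have a `.pipe()` method that accepts an iterable. In many v3 training configs the `ner` component listens to a shared `tok2vec`, so running `ner` on its own may fail or give wrong results. It is safer to run every component in order. The sketch below does this for docs that are already tokenized. `run_pipeline` is a hypothetical helper name, and a blank pipeline with a sentencizer stands in for the trained model.

```python
from typing import Iterable, List

import spacy
from spacy.language import Language
from spacy.tokens import Doc


def run_pipeline(nlp: Language, docs: Iterable[Doc]) -> List[Doc]:
    """Apply every pipeline component, in order, to already-tokenized docs."""
    for _name, proc in nlp.pipeline:
        if hasattr(proc, "pipe"):
            # Pipe-based components accept a stream of Docs.
            docs = proc.pipe(docs)
        else:
            # Plain function components only take one Doc at a time;
            # map() binds this specific `proc` right away.
            docs = map(proc, docs)
    return list(docs)


if __name__ == "__main__":
    # Stand-in pipeline; with the trained model use spacy.load(<path to model-best>).
    nlp = spacy.blank("en")
    nlp.add_pipe("sentencizer")
    doc = Doc(nlp.vocab, words=["Hello", "there", ".", "Bye", "."],
              spaces=[True, False, True, False, False])
    for out in run_pipeline(nlp, [doc]):
        print([sent.text for sent in out.sents])
```

Because the docs are passed in pre-built, the tokenizer never runs, so the output keeps the input tokenization.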

I also tried:

but the ents were the same as before.

I just want the model to predict the ents on the docs I get from the .spacy file (which I converted from my IOB data), and then compare them with the real values that are still stored as the entities in that file.
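One likely reason the entities came back unchanged is that the docs loaded from the .spacy file already carry the gold entities. spaCy's NER is designed to respect entity annotations already present on a `Doc`, which is the same behaviour that lets an `entity_ruler` placed before `ner` pre-set entities. Under that assumption, the sketch below rebuilds each doc from the gold words and spaces with no entities, runs the pipeline on it, and pairs the labels token by token. `predict_on_gold_tokens` and `token_label_pairs` are hypothetical names, and a blank pipeline stands in for the trained model.

```python
from typing import List, Tuple

import spacy
from spacy.language import Language
from spacy.tokens import Doc


def predict_on_gold_tokens(nlp: Language, gold: Doc) -> Doc:
    """Run the pipeline on a copy of gold's tokens that has no entity annotation."""
    # Copy only words and whitespace, so no preset entities reach the NER.
    doc = Doc(
        nlp.vocab,
        words=[t.text for t in gold],
        spaces=[bool(t.whitespace_) for t in gold],
    )
    for _name, proc in nlp.pipeline:
        doc = proc(doc)
    return doc


def token_label_pairs(gold: Doc, pred: Doc) -> List[Tuple[str, str]]:
    """Pair each token's gold entity type with its predicted one ("O" if none)."""
    if len(gold) != len(pred):
        raise ValueError("docs must share the same tokenization")
    return [(g.ent_type_ or "O", p.ent_type_ or "O") for g, p in zip(gold, pred)]


if __name__ == "__main__":
    # Stand-in pipeline; with the trained model use spacy.load(<path to model-best>).
    nlp = spacy.blank("en")
    gold = Doc(nlp.vocab, words=["I", "m", "in", "Paris"],
               spaces=[False, True, True, False], ents=["O", "O", "O", "B-GPE"])
    pred = predict_on_gold_tokens(nlp, gold)
    print(token_label_pairs(gold, pred))
```

With the real model, the gold docs would come from something like `DocBin().from_disk(path).get_docs(nlp.vocab)`. Because the tokens are copied verbatim, the predicted doc always has the gold length, and the resulting pairs can be fed into any confusion-matrix routine.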

Please help.
