How can I reproduce the models' accuracy evaluations? #11030

Answered by polm

vmatter asked this question in Help: Coding & Implementations

I would like to reproduce the accuracy evaluations of the Portuguese models. I tried to search for the code used in the accuracy evaluation, but I could not find it. Is there a way to reproduce them? Many thanks.


Answered by polm, Jun 26, 2022


Answer selected by vmatter


Evaluation metrics for a pipline are saved in

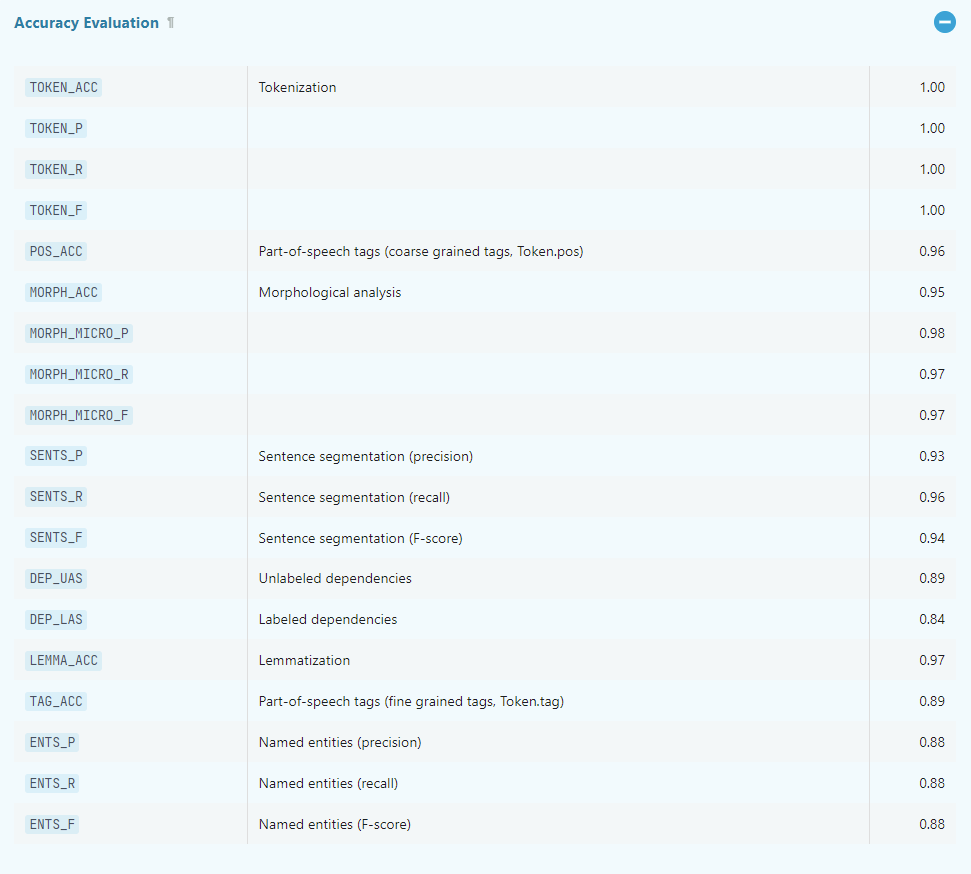

meta.jsonunder theperformancekey. Evaluation (on the dev set) is performed automatically during training, but you can also do it manually (on any data, such as test data) withspacy evaluate. The metrics on the model pages are for the dev set.
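As a rough illustration of the first point, here is a minimal sketch that reads the `performance` key from a pipeline's `meta.json` using only the standard library. The helper names `load_performance` and `numeric_scores` are made up for this example, and the directory you pass in is assumed to be wherever your pipeline's `meta.json` lives. The exact score keys depend on which components the pipeline has.

```python
import json
from pathlib import Path


def load_performance(model_dir):
    """Return the 'performance' dict stored in <model_dir>/meta.json.

    `model_dir` is an assumption: point it at the directory of your
    pipeline that contains meta.json.
    """
    meta_path = Path(model_dir) / "meta.json"
    with meta_path.open(encoding="utf8") as f:
        meta = json.load(f)
    # Fall back to an empty dict if the pipeline has no stored scores.
    return meta.get("performance", {})


def numeric_scores(performance):
    """Keep only top-level numeric scores, skipping nested per-type tables."""
    return {
        key: value
        for key, value in performance.items()
        if isinstance(value, (int, float)) and not isinstance(value, bool)
    }


# Example usage (hypothetical path):
# scores = numeric_scores(load_performance("path/to/pipeline_dir"))
# for name, value in sorted(scores.items()):
#     print(f"{name}: {value:.4f}")
```

If the pipeline is already loaded in spaCy, the same data should be available as `nlp.meta["performance"]`. To recompute the scores instead of reading the stored ones, `spacy evaluate` takes the pipeline name or path followed by the path to the evaluation data, for example `python -m spacy evaluate pt_core_news_sm ./test.spacy`, where `./test.spacy` is a hypothetical file in spaCy's binary format. Since the published numbers are for the dev set, scores computed on other data will not match them exactly.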