Training data for spancat #11351


I wanted to try out the span categorizer on a text dataset with overlapping entities, but I can't find anywhere what the training data format looks like. Could someone share a training.jsonl file to ease this process?



-

|

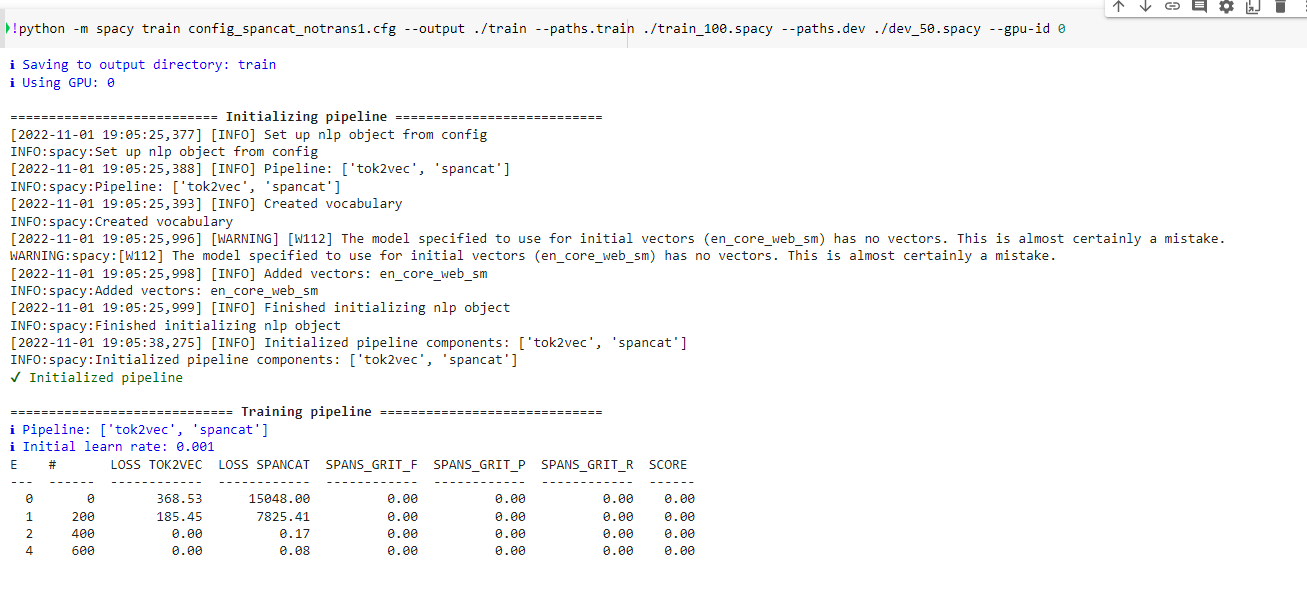

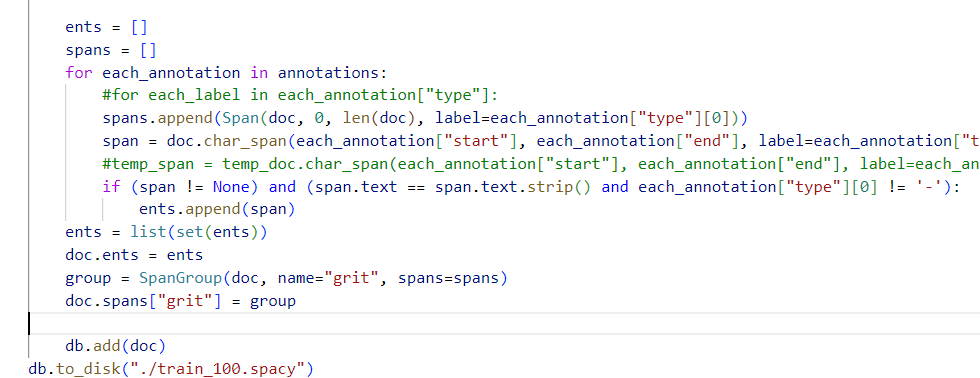

Hi @RandomArnab,

In spaCy v3, we use serialized `.spacy` files instead of JSONL. To prepare the training data for span categorization, you need to assign the entities to the `doc.spans` attribute. Here's an example for the sentence "Welcome to the Bank of China":

```python
import spacy
from spacy import displacy
from spacy.tokens import Span

text = "Welcome to the Bank of China."
nlp = spacy.blank("en")
doc = nlp(text)

# Spans are given as token offsets (start inclusive, end exclusive).
# The two spans overlap on the token "China".
doc.spans["sc"] = [
    Span(doc, 3, 6, "ORG"),  # "Bank of China"
    Span(doc, 5, 6, "GPE"),  # "China"
]
```

To serialize them into a `.spacy` file, you need to collect the docs inside a `DocBin` object and call its `to_disk()` method.

You can check some of our spaCy projects to better understand this process. We have one for overlapping spans.
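The serialization step described above could look roughly like the sketch below. The helper names `build_example_doc` and `save_docs`, and the output path `train.spacy`, are made up for illustration and are not from the original reply; the `"sc"` spans key is assumed because it is the one used in the example sentence. The last lines read the file back to check that the overlapping spans survived the round trip.

```python
import spacy
from spacy.tokens import DocBin, Span


def build_example_doc(nlp):
    """Create one Doc with two overlapping spans under the "sc" key."""
    doc = nlp("Welcome to the Bank of China.")
    doc.spans["sc"] = [
        Span(doc, 3, 6, "ORG"),  # "Bank of China"
        Span(doc, 5, 6, "GPE"),  # "China"
    ]
    return doc


def save_docs(docs, path):
    """Collect Docs in a DocBin and write them to a .spacy file."""
    doc_bin = DocBin(docs=docs)
    doc_bin.to_disk(path)


nlp = spacy.blank("en")
save_docs([build_example_doc(nlp)], "train.spacy")  # hypothetical output path

# Read the file back to confirm the spans were stored with the Doc.
loaded = list(DocBin().from_disk("train.spacy").get_docs(nlp.vocab))
print([(span.text, span.label_) for span in loaded[0].spans["sc"]])
```

In a real project you would build one `Doc` per annotated text, pass the whole list to `save_docs`, and write separate files for the training and development sets.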