Spacy training on GPU - CPU Utilization is at 100% and GPU Utilization is very low #11550


I'm trying to run a training job in Amazon SageMaker on a p3 instance type. Whenever I start the training job, CPU utilization goes to 100% and GPU utilization barely reaches 30%. Whatever I tried, I couldn't get GPU utilization anywhere near 100%. I created the Docker image with the following configuration.

HW Configuration:

Docker configuration:

Packages installed via pip:

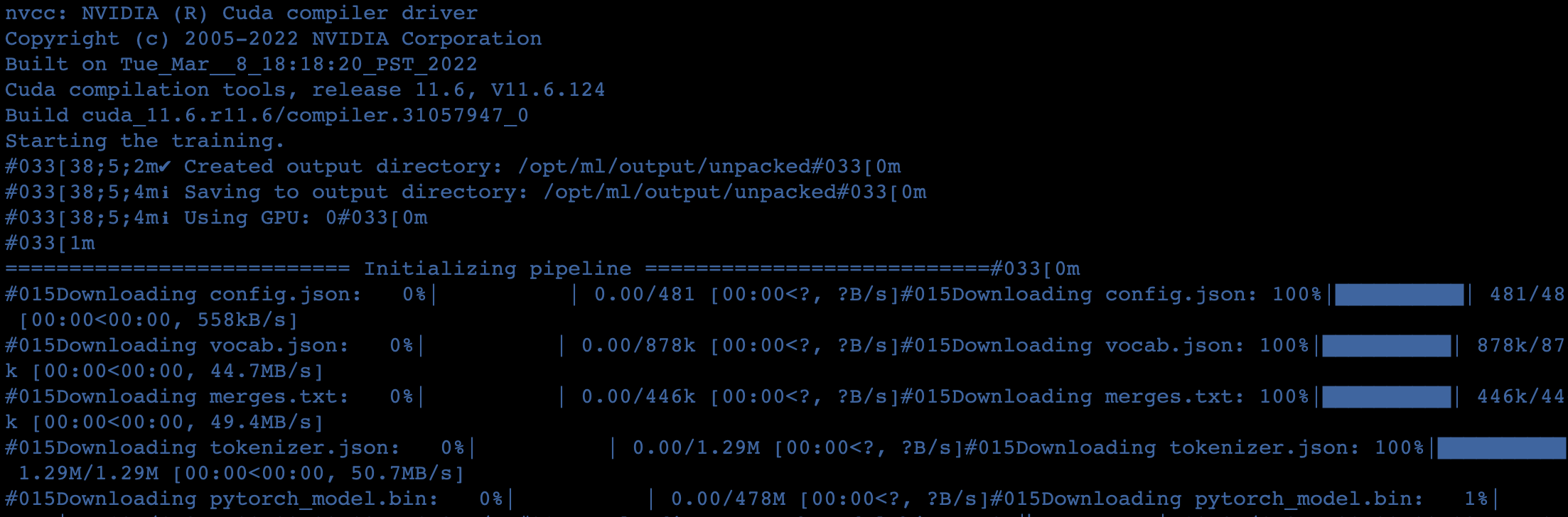

Training is done using the `spacy train` CLI:

    overrides = {
    use_gpu: int = 0 if env.num_gpus > 0 else -1  # returns 0 if it has a GPU, else -1
    train(
        config_path=Path(config_path),

The config file I used is

spaCy is using the GPU; a screenshot is attached below.

The instance metrics screenshot taken during training is attached below.

Even though spaCy is using the GPU, CPU usage is at 100% while GPU usage barely reaches 20%. Please help me figure out what I'm doing wrong.


Replies: 1 comment


Typically the first thing to try is adjusting the batch sizes. It's basically the opposite of the advice for OOM errors: you can keep raising the batch sizes until you start running into memory issues with your data. See the links with more details under "I'm getting Out of Memory errors" in this FAQ: #8226
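As a rough sketch of what raising the batch sizes could look like when calling `train` from Python, as in the question: the helper names `batch_size_overrides` and `run_training` and the values `4000` and `256` are made up for illustration. It also assumes the config has a `[training.batcher]` block whose `size` is a plain integer. If `size` is a schedule block such as `compounding.v1`, you would override `training.batcher.size.start` and `training.batcher.size.stop` instead.

```python
from pathlib import Path


def batch_size_overrides(batcher_size: int, nlp_batch_size: int) -> dict:
    """Build dotted-key config overrides that raise the batch sizes.

    Assumption: [training.batcher] in the config takes an integer `size`.
    `nlp.batch_size` is the default batch size used for pipe/evaluate.
    """
    return {
        "training.batcher.size": batcher_size,
        "nlp.batch_size": nlp_batch_size,
    }


def run_training(config_path: str, output_path: str, use_gpu: int, overrides: dict) -> None:
    """Hypothetical wrapper around spaCy's Python training entry point."""
    # Imported lazily so the helper above works without spaCy installed.
    from spacy.cli.train import train

    train(Path(config_path), Path(output_path), use_gpu=use_gpu, overrides=overrides)


# Illustrative values only: raise them step by step and watch GPU memory.
overrides = batch_size_overrides(batcher_size=4000, nlp_batch_size=256)
print(overrides)
```

You would then pass `overrides` to `run_training(...)` along with your config path and `use_gpu=0`, raising the values between runs until memory becomes the limit.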
