Huge losses while training a custom spaCy model #12280


I tried to create a new spaCy model and train it on a custom dataset, but I get huge losses during training. How can I fix this and get the model trained correctly? The training data appears to contain more than 1000 samples.

Example training data:

Here is my code:


Hey, Thanks for the question! Let me focus on the example data first: data = [

("AGS", {"entities": [(0, 3, "CUST")]}),

("YML SERVICOS LTD", {"entities": [(0, 16, "CUST")]}),

("BORG GROUP", {"entities": [(0, 10, "CUST")]}),

("GRABCRANEX", {"entities": [(0, 10, "CUST")]}),

("GREEN SHIP", {"entities": [(0, 10, "CUST")]}),

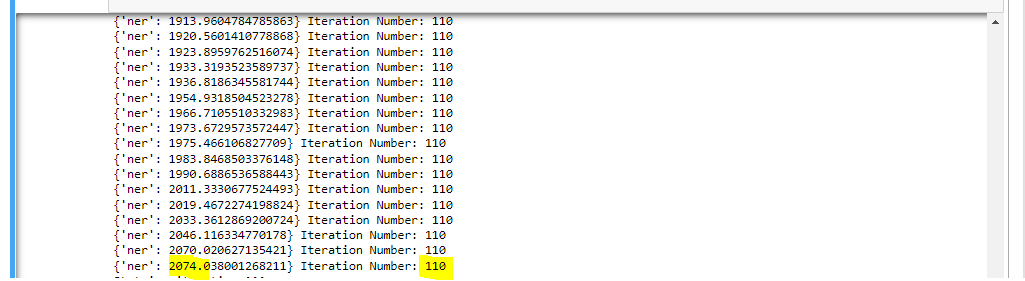

]If I understand correctly the texts here are the names of entities and not documents. When training a named entity recognizer the goal most often is to find the names of entities within texts. As such the methodology is to annotate the texts with entities and not to only expose the model to the entities themselves. What you have here is a list of entities and you can look at the In terms of the value for the loss. Do I understand correctly that this is the same question: https://stackoverflow.com/questions/75459001/spacy-train-the-existing-model-with-custom-data? The loss seems to be around assert 1.6 * 10**-8 == 1.6e-08You can ask Python to show the actual number with: print(f"{1.6e-08:.10f}")The output should be If I'm getting it right then the loss is really low. |
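To make the annotation point concrete, here is a minimal sketch, assuming you keep the same `(text, {"entities": [(start, end, label)]})` tuple format as in your data. The `annotate` helper and the example sentences are hypothetical and made up for illustration (only the entity names come from your data). The helper looks up each entity string inside a full sentence and records its character offsets, so the model sees the entities in context instead of on their own.

```python
# Hypothetical helper (not part of spaCy): turn a sentence plus the entity
# strings it contains into (text, {"entities": [(start, end, label)]}) tuples.
from typing import Dict, List, Tuple

Span = Tuple[int, int, str]
TrainExample = Tuple[str, Dict[str, List[Span]]]


def annotate(text: str, entities: List[Tuple[str, str]]) -> TrainExample:
    """Locate each entity string in `text` and record its character offsets.

    Entities must be listed in the order they appear in the sentence.
    """
    spans: List[Span] = []
    search_from = 0
    for entity_text, label in entities:
        start = text.find(entity_text, search_from)
        if start == -1:
            raise ValueError(f"{entity_text!r} not found in {text!r}")
        end = start + len(entity_text)
        spans.append((start, end, label))
        # Resume searching after this match so a repeated name maps to
        # its next occurrence rather than the same one again.
        search_from = end
    return text, {"entities": spans}


# Made-up sentences for illustration; the entity names come from the question.
TRAIN_DATA = [
    annotate("We shipped the containers to BORG GROUP last week.",
             [("BORG GROUP", "CUST")]),
    annotate("Invoice 42 was paid by YML SERVICOS LTD on Monday.",
             [("YML SERVICOS LTD", "CUST")]),
    annotate("AGS and GREEN SHIP both confirmed the booking.",
             [("AGS", "CUST"), ("GREEN SHIP", "CUST")]),
]

# Print each annotated span to check that the offsets cut out the right text.
for text, annotations in TRAIN_DATA:
    for start, end, label in annotations["entities"]:
        print(label, text[start:end])
```

With spaCy v3 you would still need to turn these tuples into `Example` objects (or a `.spacy` file built with `DocBin`) before training; that step is not shown here.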
