Shape mismatch #12555


I'm sorry I have to open another topic. After I managed to train an NER model using my own word vectors, I get the following error when calling

I've used this config file:


Hi @Gitclop! I'd recommend setting


Hey @rmitsch! I believe it has something to do with how I use my word vectors. I didn't touch the tok2vec component.


I exported the Gensim vectors as a .txt file and ran

I trained it again with the same config and loaded my model with

and again I get the error: `ValueError: Shape mismatch for blis.gemm: (12, 96), (256, 64)`
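For context on the export step: Gensim's plain-text word2vec format (what `KeyedVectors.save_word2vec_format(path, binary=False)` writes) starts with a header line holding the number of words and the vector width, followed by one line per word. The dependency-free sketch below writes and re-reads that layout using made-up toy vectors; the words, values and helper names are hypothetical. Checking the header width of the real exported file is a quick way to confirm the dimension of your vectors.

```python
import io

# Hypothetical toy vectors; real ones would come from the trained Gensim model.
toy_vectors = {
    "drucker": [0.1, 0.2, 0.3],
    "ticket": [0.0, -0.5, 1.0],
}


def write_word2vec_text(vectors, fh):
    """Hypothetical helper: header line "<n_words> <dim>", then "word v1 v2 ..." per line."""
    dim = len(next(iter(vectors.values())))
    fh.write(f"{len(vectors)} {dim}\n")
    for word, vec in vectors.items():
        fh.write(word + " " + " ".join(f"{x:.6f}" for x in vec) + "\n")


def read_word2vec_text(fh):
    """Hypothetical helper: read the same layout back, returning (dim, {word: [floats]})."""
    n_words, dim = (int(x) for x in fh.readline().split())
    vectors = {}
    for _ in range(n_words):
        word, *values = fh.readline().rstrip("\n").split(" ")
        # Every row must have exactly as many values as the header announces.
        if len(values) != dim:
            raise ValueError(f"{word!r} has {len(values)} values, header says {dim}")
        vectors[word] = [float(v) for v in values]
    return dim, vectors


buf = io.StringIO()
write_word2vec_text(toy_vectors, buf)
buf.seek(0)
dim, loaded = read_word2vec_text(buf)
print(dim, sorted(loaded))  # 3 ['drucker', 'ticket']
```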
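`blis.gemm` is a general matrix multiply, and it raises this kind of error when the inner dimensions of its two operands don't agree. A minimal NumPy illustration, using the numbers from the error message purely as example shapes (which spaCy layer produced them, and what each dimension means there, isn't stated in the thread):

```python
import numpy as np

# Shapes copied from the error message, used here only as example numbers.
a = np.zeros((12, 96), dtype="float32")
b = np.zeros((256, 64), dtype="float32")


def can_multiply(x, y):
    """x @ y is only defined when x's column count equals y's row count."""
    return x.shape[1] == y.shape[0]


print(can_multiply(a, b))    # False: 96 columns vs. 256 rows
print(can_multiply(a, b.T))  # False: 96 vs. 64, so transposing b does not help either

try:
    a @ b
except ValueError as err:
    print("ValueError:", err)

# A matrix with 96 rows would fit: 12 rows of width 96 -> 12 rows of width 64.
fits = a @ np.zeros((96, 64), dtype="float32")
print(fits.shape)  # (12, 64)
```

In other words, some layer is receiving inputs of a width it was not built for; the sketch does not tell you which component that is.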


I was wrong. It is not working.

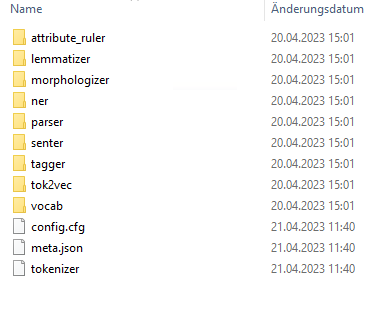

In the config for the NER training I use those vectors:

I initialize and save my pipeline and I get:

If I build my pipeline without the NER model, the word vectors are loaded and it works just fine. I've also tried setting the tok2vec component with

which gives me an error when initializing the pipeline. Sorry for the confusion, but I am out of ideas :(


I think that's exactly what I am doing:

Then I build my pipeline with de_core_news_lg as the base, add the vectors to the vocab and add my trained NER model to the pipeline.

I use the de_core_news_lg model because I want to use its tagger and dependency parser. How would I train this pipeline with my word vectors? I don't have any annotated training data, just the corpus for the word2vec algorithm (and, of course, the annotations for the NER training). Or is it just the tok2vec component that needs to be trained? (The tok2vec model file in the trained NER model is 33 MB, compared to 6 MB in the spaCy model folder.)


My goal is to extract segments of text around my entities. For example:
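The example itself didn't survive in the thread. As a minimal sketch, assuming each entity is available as character offsets (spaCy's `Span.start_char` and `Span.end_char` provide these), a hypothetical helper `context_window` could cut out a fixed number of characters on each side of the entity and widen the cut to whole words. The ticket text below is invented for illustration:

```python
def context_window(text, start, end, width=30):
    """Return text[start:end] plus about `width` characters on each side.

    The cut points are pushed outward to the next space so that words are
    not split in half. Hypothetical helper, not part of spaCy.
    """
    left = max(0, start - width)
    right = min(len(text), end + width)
    while left > 0 and text[left - 1] != " ":
        left -= 1
    while right < len(text) and text[right] != " ":
        right += 1
    return text[left:right].strip()


# Invented ticket text; in a pipeline the offsets would come from ent.start_char / ent.end_char.
ticket = "Der Drucker HP-4250 zeigt seit dem Update den Fehler 49.4C02 an."
start = ticket.index("HP-4250")
end = start + len("HP-4250")
print(context_window(ticket, start, end, width=10))
# Der Drucker HP-4250 zeigt seit
```

If a parser is in the pipeline, `ent.sent` returns the whole sentence containing an entity, which may be a more natural segment than a fixed character window.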


I work in tech support in a specialized domain. The end goal is to find similar or duplicate support tickets. I already use word embeddings to calculate document similarity. Because of the specialized domain, there were no meaningful embeddings within spaCy for my corpus. The trained Gensim vectors now give pretty accurate results.
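The thread doesn't say exactly how the similarity is computed. One common approach, and what spaCy's `Doc.similarity` does by default, is to average the word vectors of each document and compare the averages with cosine similarity. A minimal dependency-free sketch, with hypothetical two-dimensional toy embeddings standing in for the Gensim vectors:

```python
import math

# Hypothetical toy embeddings; in practice these would be the trained Gensim vectors.
EMB = {
    "drucker": [1.0, 0.0],
    "druckt": [0.9, 0.1],
    "nicht": [0.0, 1.0],
    "vpn": [-1.0, 0.2],
}


def doc_vector(tokens, emb):
    """Average the vectors of known tokens; unknown tokens are skipped."""
    known = [emb[t] for t in tokens if t in emb]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


t1 = doc_vector("drucker druckt nicht".split(), EMB)
t2 = doc_vector("drucker nicht".split(), EMB)
t3 = doc_vector("vpn".split(), EMB)
print(cosine(t1, t2), cosine(t1, t3))  # the printer tickets score higher than the VPN one
```

Averaging is cheap and needs no extra training, but it ignores word order, so two tickets using the same words in different roles will look alike.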


Thanks for the context. I'm afraid we only have two options here: (1) retrain all the embedding-using components you want to have in your pipeline (tagger, dependency parser, NER) using your converted Gensim embeddings, or (2) use two pipelines.

I can see that it's annoying having to use two pipelines, but I don't think it'll be complicated to implement or use (less so than option (1) for sure). You'd load your tagger/parser pipeline and your NER pipeline and process your docs as `nlp_tagger_parser(nlp_ner(text))`.