Proposal: Non-NER span categorizer #3961

Replies: 18 comments

-

Don't know if this will help, but I've come to understand that one of the key factors is that, in non-NER span detection, deciding where to start a span is a vastly different problem from deciding where to end it. The decision to start tagging a sequence is usually relatively easy: there's a sequence of words that brings us to a place in the text which becomes a placeholder for expressing something. Think of looking for a topic introduction phrase in a limited domain context. The decision whether to close the span, on the other hand, is extremely hard. Has enough information been given? Is the meaning of the text shifting? This is especially hard in compound sentences or even multi-sentence spans.

Example: if you are running a zoning process in an urban municipal office, you will get a lot of input from people living in the area. You can imagine that there is a need to detect spans of text containing information about the requirements and needs that people have for an area. You will usually get the easy task of finding where they start:

-

Well wait a second here! Yeah, an annotator might initially want to do that, but you definitely can't let them. That's hopelessly broken. Even aside from the recognition challenge, if the span scope and content are so undefined, how do you even use the span in the rest of your application? You'll have this chunk of text and you'll have to, like, make another bag-of-words model or something. You're setting yourself an unsolvable NLP problem that will take ages to annotate (as your spans are really long and uncertain), only to queue up a second difficult NLP problem downstream.

-

Yes, the task does require an extra model in many cases, but that is actually a reasonable expectation in a non-NER information extraction scenario such as those in the list of use cases in your proposal. Don't chatbots require extra models to handle non-NER arguments to their intents? I agree that this is a complicated task; ideally the annotator would have a wider ontology that allows splitting THE_NEED into multiple specific cases. But I do think that this is a fair general use case / expectation of a non-NER span marking model: to use it as a tier-one approach for area-of-interest extraction in text. This area of interest might be quite complicated to define, and many users might want to define it naively, by just tagging the maximum sequence that conveys the desired meaning. My example is an extreme case of the

-

I wouldn't normally be so direct, but since we got to know each other a bit at IRL: No no, that's all wrong. You definitely shouldn't want this. If you need to extract a general area of interest, you should work at the sentence or paragraph level and just do text classification. Trying to extract a long, vaguely-defined region of text as a span is a terrible bad no good idea. You may as well align it to a sentence or paragraph boundary, because you're not getting any value out of the subsentence information, and it makes the task basically impossible, both to annotate and to recognise.

This is also totally different from what's called a "multi-word expression". A MWE is basically a "word with spaces" --- a term like "artificial intelligence" is best understood as a single concept, rather than as a sum of its parts.

-

I think it might also be useful to have a variant of the loss that works on vector similarity rather than % string overlap. For instance, in free-text queries, multi-token names can be considered perturbed versions of a canonical name. In such cases, a loss that compares the vector representations of the predicted span and the gold-standard span might be better than a loss that works with surface string overlap.
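To make the idea concrete, here is a minimal sketch of what such a loss could look like. It assumes each token already has a vector, mean-pools the tokens of each span, and uses one minus cosine similarity as the loss. The function names and the pooling choice are illustrative assumptions, not anything from spaCy or the proposal.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(token_vectors: List[Vector]) -> List[float]:
    """Average a list of equal-length token vectors (assumed pooling choice)."""
    n = len(token_vectors)
    return [sum(dim) / n for dim in zip(*token_vectors)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_similarity_loss(pred_tokens: List[Vector], gold_tokens: List[Vector]) -> float:
    """Hypothetical loss: 1 - cosine similarity of the mean-pooled span vectors."""
    return 1.0 - cosine_similarity(mean_vector(pred_tokens), mean_vector(gold_tokens))


# Toy vectors: the predicted span drops one token of the gold span.
gold = [[1.0, 0.0], [0.8, 0.2], [0.9, 0.1]]
pred = gold[:2]
loss = vector_similarity_loss(pred, gold)  # small, because the pooled vectors point the same way
```

With a loss like this, a predicted span that misses a token but still "means" roughly the same thing as the gold span would be penalised only lightly, which is the behaviour described above.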


-

@ramji-c that's a very interesting idea. I'll think about that.

-

You may want to have a look at my chunker / entity extractor here (using spaCy): More tools here (spell corrector, semantic grouping, ...): Coming very soon: a Cross-lingual Semantic Memory = feed in sentences in any language, then search for sentences with a similar meaning to a given sentence in any other language.

-

I am working on a comparable issue and I am stuck on finding a solution for extracting the phrases. AFAIK, most solutions expect a noun to be the central element of the phrase. In my current domain (service reviews), this does not help. Noun chunking is not doing the job, and neither is dependency or constituency parsing. What approach do you have in mind? (I mean, do you extract all phrases, even overlapping ones?)

-

@kommerzienrat, can you provide some of your sentences to test?

-


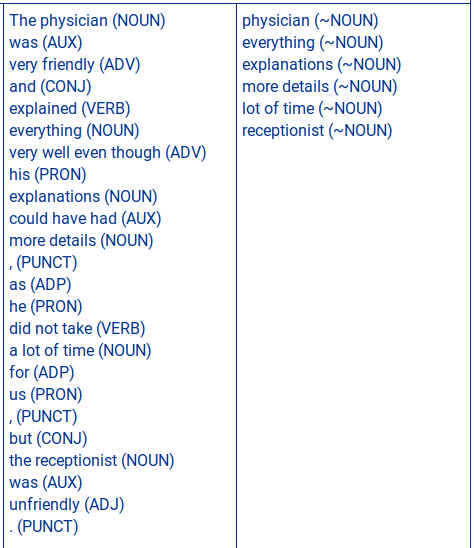

Indeed, e.g. the following sentence is a good example:

-

Are you looking for something like this?

-

It does have to do with my problem, but it does not seem to be a perfect match for such spans.

-

@kommerzienrat you could check out the Flair framework, which does this kind of sequence labelling and is easy to get working: https://github.com/zalandoresearch/flair/blob/master/README.md

On a separate note, do you have any recommendations for guidelines about constructing the kind of ontology needed to decide on useful spans?

-

yay thanks for this 💯. For my use case, the span indices are pre-determined using some rule, so I would think a loss function that's just cross-entropy would be more apt. It would be nice to have that as an option in the config. If I have time, I'll test an example. The default for

-

Thanks for the link to Flair. It is good to see more great stuff coming from Europe/Germany. I'm afraid it might not help in my case, but I am giving it a try anyway. What is more, I cannot determine span boundaries due to the nature of my data. I would love to see a solution as proposed in the first post above, where one missing word does not prevent a whole span from being used.

-

I think that a decent use case (and a data set) would be these kinds of de-identification efforts, where the model aims at detecting spans containing NER-like personal information: https://ai.google/tools/datasets/audio-ner-annotations/ Some are single-word and clearly NER, like a person's name; others are relevant numbers that have personal meaning, like a health insurance identifier, or the name of the hospital a person goes to.

-

@honnibal Is there anything yet that we could try to use? I am working on a comparable issue (aspect-based SA) and still have not found a working solution for identifying spans in texts. Thanks in advance!

-

More recent work-in-progress: #6747

Update: will be released as an experimental functionality in spaCy 3.1!


-

People often want to train spaCy's `EntityRecognizer` component on sequence labeling tasks that aren't "traditional" Named Entity Recognition (NER). A named entity actually has a fairly specific linguistic definition: named entities are either definite descriptors or numeric expressions. Software designed for doing NER may not perform well on other sequence labelling tasks. This is especially true of spaCy's NER system, which makes fairly aggressive trade-offs. What we need is another system that's better suited for these non-NER sequence tasks.

WIP can be found here: https://github.com/explosion/spaCy/blob/feature/span-categorizer/spacy/pipeline/spancat.py

Examples of non-NER span categorization

(This section needs to be filled out with actual examples. For now I've just noted some general categories of problem.)

Chatbot tutorials and software have taken to calling all intent arguments "entities". I wish they wouldn't, but they do --- so people want to recognize and label these phrases with an "entity recognizer". These phrases could be anything. They're whatever argument of the "intent" the author has defined, even if they don't really make good linguistic sense. The boundaries of these "entities" can also be quite inconsistently defined.

In aspect-oriented sentiment analysis, one often has a set of attributes to recognize, like "price", "quality", "customer service", etc. Sometimes these can be recognized as topics in a sentence, but often people want them localised to specific spans.

In information or relation extraction, it's often important to recognize predicate words or phrases. For verbs, this is often a single word, but sometimes you want nouns as well, which can be multi-word expressions.

Tasks such as disfluency detection, detection of spelling errors, detection of grammatical errors, etc. are all quite sensible to code as sequence tagging problems.

Problems with IOB/BILUO

NER systems typically make one decision per word. Usually that decision corresponds to a tag (such as an IOB or BILUO tag). spaCy does things only slightly differently: on each word we predict an action, where the actions correspond to the BILUO tags. Encoding the task as transition-based parsing has some subtle advantages I won't go into here.

The problem is, the BILUO-style approach implies a loss where a boundary error means the model's prediction is completely wrong. This is the right way to think about NER and probably chunking, but it doesn't match up well with what people often want on non-NER problems. Often people are pretty ambivalent about the exact boundaries of their target spans. They would much rather have the boundary off-by-one than miss the entity entirely.
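A small illustration of the point, as a sketch rather than spaCy's actual implementation: the helper below converts spans to BILUO tags, and a hypothetical `exact_match_credit` function scores predictions the way an exact-boundary evaluation would. The function names, the `SERVICE` label and the example spans are all made up for illustration.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, label)


def biluo_tags(n_tokens: int, spans: List[Span]) -> List[str]:
    """Encode non-overlapping spans as one BILUO tag per token."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        if end - start == 1:
            tags[start] = f"U-{label}"
        else:
            tags[start] = f"B-{label}"
            for i in range(start + 1, end - 1):
                tags[i] = f"I-{label}"
            tags[end - 1] = f"L-{label}"
    return tags


def exact_match_credit(gold_spans: List[Span], pred_spans: List[Span]) -> int:
    """Count predictions whose boundaries and label match a gold span exactly."""
    gold = set(gold_spans)
    return sum(1 for span in pred_spans if span in gold)


# Seven tokens; the prediction ends one token early.
gold_spans = [(1, 5, "SERVICE")]
pred_spans = [(1, 4, "SERVICE")]

gold_tags = biluo_tags(7, gold_spans)  # ['O', 'B-SERVICE', 'I-SERVICE', 'I-SERVICE', 'L-SERVICE', 'O', 'O']
pred_tags = biluo_tags(7, pred_spans)  # ['O', 'B-SERVICE', 'I-SERVICE', 'L-SERVICE', 'O', 'O', 'O']
credit = exact_match_credit(gold_spans, pred_spans)  # 0: the off-by-one span earns nothing
```

The prediction covers three of the four gold tokens, yet under exact matching it counts the same as missing the span entirely.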

Suggested solution

The `SpanCategorizer` will consist of three components: [...] `Doc`

For instance, we might set our `get_spans` function to get the span offsets for all unigrams, bigrams, noun chunks and named entities. No matter how many candidate spans we have, we only need to run the token-to-vector model once -- and that model should be able to do most of the "heavy lifting". For each span, we need to perform some sort of weighted sum, and then run a feed-forward network. Hopefully this can be quite cheap.

There's an additional trick we could play when encoding the problem, to reduce the number of spans. If we have relatively few classes (especially if we only have one class), we could have extra classes that denote span offsets. This is what object detection systems like YOLO do. Instead of generating all the overlapping spans, we can generate non-overlapping spans, and have classes that tell us to adjust the border. This might make things easier for the model as well, if it means the spans are of more uniform length.
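The candidate-generation and pooling steps described above might be sketched roughly as follows. This is plain Python over token indices and toy vectors, not the WIP spaCy code: `ngram_spans` stands in for a `get_spans`-style function restricted to n-grams, and `pool_spans` uses a uniform mean as the simplest possible "weighted sum". Both names are hypothetical, and the feed-forward step is left out.

```python
from typing import List, Sequence, Tuple

Offsets = Tuple[int, int]  # (start, end_exclusive) token offsets


def ngram_spans(n_tokens: int, sizes: Sequence[int] = (1, 2)) -> List[Offsets]:
    """Propose every n-gram of the given sizes as a candidate span."""
    spans = []
    for size in sizes:
        for start in range(0, n_tokens - size + 1):
            spans.append((start, start + size))
    return spans


def pool_spans(token_vectors: List[List[float]], spans: List[Offsets]) -> List[List[float]]:
    """Mean-pool the token vectors inside each span (uniform weights, an assumption)."""
    pooled = []
    for start, end in spans:
        rows = token_vectors[start:end]
        pooled.append([sum(dim) / len(rows) for dim in zip(*rows)])
    return pooled


# The token vectors are computed once, then reused by every candidate span.
token_vectors = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
candidates = ngram_spans(len(token_vectors))  # [(0, 1), (1, 2), (2, 3), (0, 2), (1, 3)]
span_vectors = pool_spans(token_vectors, candidates)
```

Each pooled span vector would then go through the feed-forward network to get class scores. The expensive part, producing `token_vectors`, happens only once no matter how many candidates are proposed.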

If we do have spans of non-uniform length, we should still be able to process them efficiently. We need to be able to handle cases where there are some long spans, and a lot of short ones, without blowing up the space by padding out into a huge square. Batching the spans by length internally should take care of this.
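As a rough sketch of the batching idea, the snippet below groups candidate spans by length so that each group forms a dense rectangle with no padding, and compares the number of cells against padding everything to the longest span. The helper names are hypothetical and the comparison is only meant to show the order of magnitude.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Offsets = Tuple[int, int]  # (start, end_exclusive) token offsets


def batch_spans_by_length(spans: List[Offsets]) -> Dict[int, List[Offsets]]:
    """Group spans so that every batch contains spans of one length only."""
    batches = defaultdict(list)
    for start, end in spans:
        batches[end - start].append((start, end))
    return dict(sorted(batches.items()))


def padded_cells(spans: List[Offsets]) -> int:
    """Cells needed if every span is padded to the longest span."""
    longest = max((end - start for start, end in spans), default=0)
    return longest * len(spans)


def batched_cells(spans: List[Offsets]) -> int:
    """Cells needed if spans are batched by length (no padding at all)."""
    return sum(end - start for start, end in spans)


# Three unigrams and one long span.
spans = [(0, 1), (1, 2), (2, 3), (0, 10)]
batches = batch_spans_by_length(spans)  # {1: [(0, 1), (1, 2), (2, 3)], 10: [(0, 10)]}
padded = padded_cells(spans)            # 4 spans * 10 tokens = 40 cells
dense = batched_cells(spans)            # 1 + 1 + 1 + 10 = 13 cells
```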

I think it would be good to have a loss function that assigns partial credit to spans according to how many tokens of overlap they have with the gold-standard. For instance, if we extract a span with 90% overlap with a gold-standard phrase, we can make the loss small.
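One way this could be sketched, under the assumption that overlap is measured as intersection over union of token offsets (the proposal does not fix a formula, so this is just one candidate):

```python
from typing import Tuple

Offsets = Tuple[int, int]  # (start, end_exclusive) token offsets


def token_overlap(pred: Offsets, gold: Offsets) -> float:
    """Intersection over union of two token ranges, in [0.0, 1.0]."""
    intersection = max(0, min(pred[1], gold[1]) - max(pred[0], gold[0]))
    union = (pred[1] - pred[0]) + (gold[1] - gold[0]) - intersection
    return intersection / union if union > 0 else 0.0


def partial_credit_loss(pred: Offsets, gold: Offsets) -> float:
    """Hypothetical loss: 0.0 for an exact match, 1.0 for no overlap at all."""
    return 1.0 - token_overlap(pred, gold)


gold = (0, 10)
near_miss = partial_credit_loss((0, 9), gold)    # about 0.1: one token short of a 10-token span
exact = partial_credit_loss((0, 10), gold)       # 0.0
disjoint = partial_credit_loss((12, 15), gold)   # 1.0
```

A 90% overlap yields a loss of roughly 0.1 instead of the full penalty an exact-boundary scheme would assign.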

Current progress

[...] `TARGET`, or three-word sequences with `TARGET` in the middle.