How to debug model's predictions? #8003

Replies: 2 comments

-

|

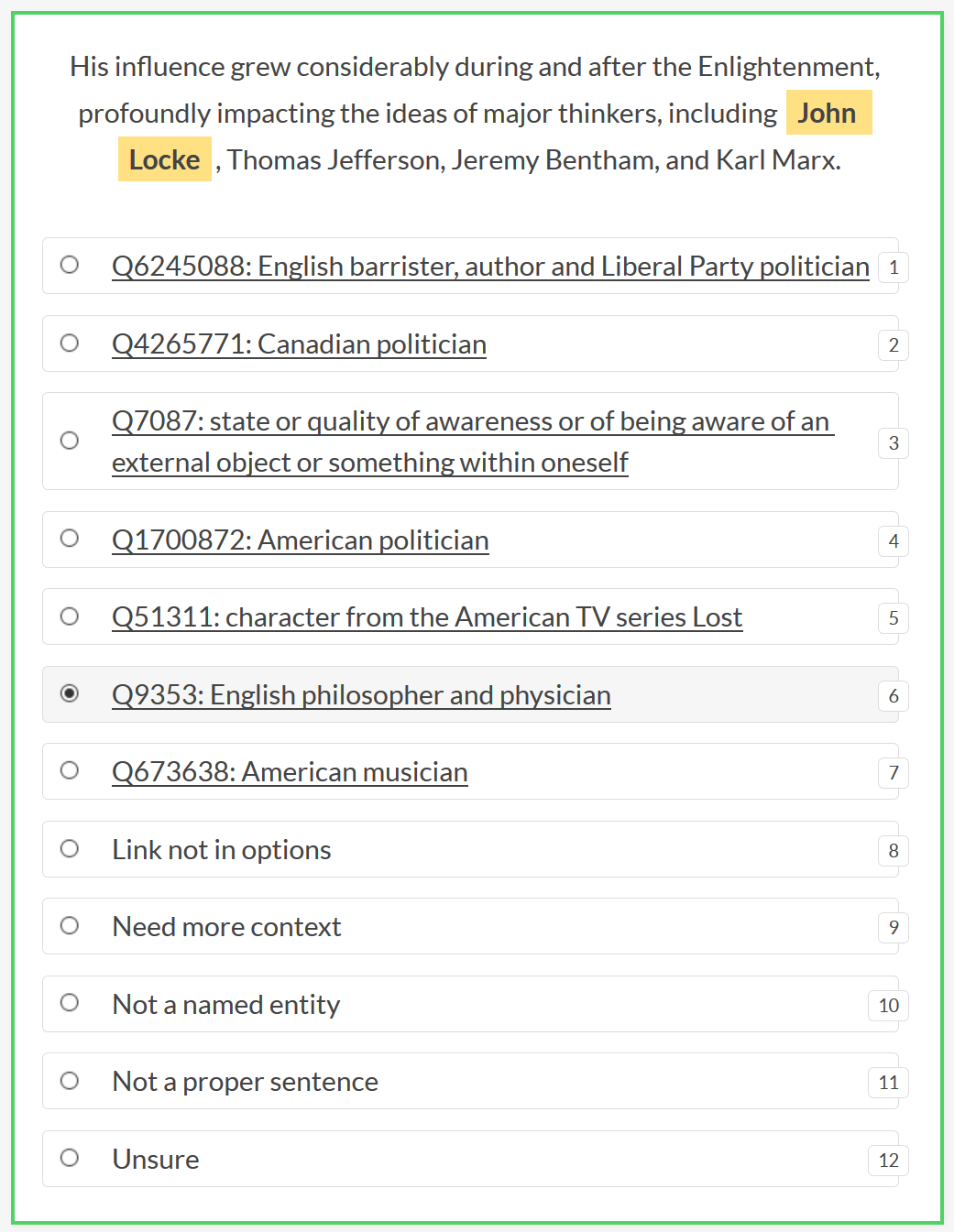

To really understand the type of (wrong) predictions by your model on your data, you probably want to perform a manual error analysis. Ideally, you'd first annotate some unseen data manually, so you have a gold-standard. Then you take the same data, run it through your model, and start inspecting the cases where the predictions are different to the annotation. Then you can start dissecting for each case, what when wrong and why. For instance, some time ago I did an evaluation of an NEL use-case by first manually annotating some data with Prodigy: And then I'd compare those results to the predictions. Errors would largely fall in these categories:

This error analysis will give you an idea on realistic upper bounds of your system, as well as providing clues which parts/steps of the model to improve. |

Beta Was this translation helpful? Give feedback.

-

|

Nothing beats manual error analysis, but doing automatic evaluation of certain kinds of errors can also be helpful. There was a great paper last year with a system called CheckList about automatically testing NLP models; this article is a nice summary of it. An example of what you can do is swap named entities with different named entities and see how often that affects the prediction, or see what adding "not" to a sentence does. spaCy doesn't have a built-in feature for this, but the dependency parse related features make implementation easy. |

Beta Was this translation helpful? Give feedback.

Uh oh!

There was an error while loading. Please reload this page.

-

I'm using

nlp.evaluate()to evaluate a trained custom entity linker on a dataset. It's great but I've been thinking is there a good way to monitor model's prediction to learn more about different types of mistakes?How do you monitor the behaviour of your NLP pipeline?

Thanks for your suggestions.

Beta Was this translation helpful? Give feedback.

All reactions