Which loss function is used for NER and Transformer tokenizer? #8211

sureshPalla230393 asked this question in Help: Other Questions


Answered by polm on Jun 7, 2021


Sorry for the delayed reply on this. The NER loss calculation is here; it's fairly basic, but it's optimized for whole-entity matching rather than best-effort token overlap.

Loss for the Transformer is just the loss backpropagated from the other components in the pipeline, in your case the NER component, so you should be able to ignore it. You can see the source here.
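To make the whole-entity vs. token-overlap distinction concrete, here is a minimal sketch that scores one prediction both ways. It assumes spans are represented as hypothetical `(start, end, label)` tuples, and it compares evaluation criteria rather than reproducing spaCy's actual NER loss: under whole-entity matching a span that clips a single token earns nothing, while token overlap still awards partial credit.

```python
"""Compare whole-entity (exact span) scoring with token-overlap scoring.

Illustrative sketch only: these helpers are hypothetical and are NOT
spaCy's NER loss. They show why an objective tuned for whole-entity
matching gives no credit to partially overlapping spans.
"""

from typing import Set, Tuple

# Hypothetical span format: (start_token, end_token_exclusive, label).
Span = Tuple[int, int, str]


def f1(tp: int, n_pred: int, n_gold: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when there are no hits."""
    if tp == 0:
        return 0.0
    precision = tp / n_pred
    recall = tp / n_gold
    return 2 * precision * recall / (precision + recall)


def entity_f1(pred: Set[Span], gold: Set[Span]) -> float:
    """A predicted entity counts only if start, end and label all match."""
    return f1(len(pred & gold), len(pred), len(gold))


def token_f1(pred: Set[Span], gold: Set[Span]) -> float:
    """Each (token, label) pair is scored separately, so partial spans earn credit."""

    def tokens(spans: Set[Span]) -> Set[Tuple[int, str]]:
        return {(i, label) for start, end, label in spans for i in range(start, end)}

    pred_tok, gold_tok = tokens(pred), tokens(gold)
    return f1(len(pred_tok & gold_tok), len(pred_tok), len(gold_tok))


if __name__ == "__main__":
    # Gold: "New York City" (tokens 0-2) is a GPE.
    gold = {(0, 3, "GPE")}
    # Prediction clips the last token: "New York".
    pred = {(0, 2, "GPE")}
    print(f"entity-level F1: {entity_f1(pred, gold):.2f}")  # 0.00
    print(f"token-level F1:  {token_f1(pred, gold):.2f}")  # 0.80
```

The clipped "New York" prediction scores 0.0 at the entity level but 0.8 at the token level; an objective aimed at whole entities treats it as simply wrong.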
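The point about the Transformer loss is ordinary backpropagation through a shared encoder. The sketch below uses a hypothetical scalar model, not spaCy or spacy-transformers code: the weight `w` stands in for the transformer and `v` for the NER head. Only the head defines a loss; the encoder's gradient is simply the signal the head passes back through `h` via the chain rule.

```python
"""Toy illustration: a shared encoder has no loss of its own.

Hypothetical two-stage model, NOT spaCy or spacy-transformers code:
    h = w * x               # "encoder" (stands in for the transformer)
    s = v * h               # "head" (stands in for the NER component)
    L = 0.5 * (s - y) ** 2  # only the head defines a loss
"""

from typing import Tuple


def forward_backward(w: float, v: float, x: float, y: float) -> Tuple[float, float, float]:
    """Return (loss, grad_w, grad_v) for a single example."""
    # Forward pass through encoder, then head.
    h = w * x
    s = v * h
    loss = 0.5 * (s - y) ** 2

    # Backward pass: the head computes the gradient for its own weight...
    d_s = s - y       # dL/ds
    grad_v = d_s * h  # dL/dv
    # ...and hands dL/dh back to the encoder, its only training signal.
    d_h = d_s * v     # dL/dh
    grad_w = d_h * x  # dL/dw
    return loss, grad_w, grad_v


if __name__ == "__main__":
    w, v, lr = 0.5, 0.5, 0.1
    for _ in range(50):
        loss, grad_w, grad_v = forward_backward(w, v, x=1.0, y=2.0)
        # Both weights are updated, but w only ever sees the head's loss.
        w -= lr * grad_w
        v -= lr * grad_v
    print(f"loss after training: {loss:.6f}")
```

When the head's prediction is already correct (`s == y`), the encoder's gradient is zero too, because it has no independent objective of its own.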

Beta Was this translation helpful? Give feedback.

0 replies

Answer selected by

polm

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

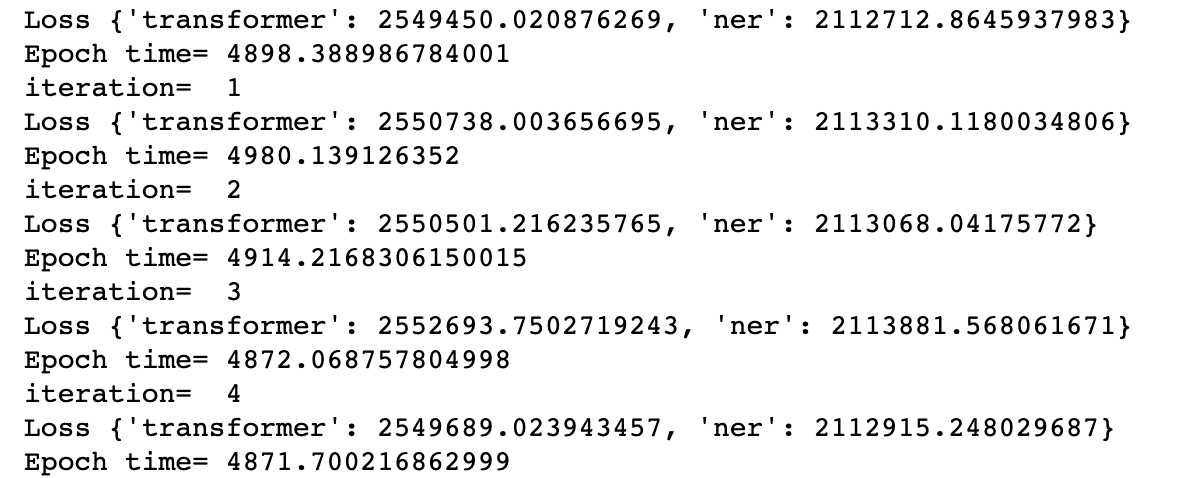

Sorry for the delayed reply on this. The NER loss calculation is here, it's pretty basic but optimized for whole-entity matching rather than best-effort token overlap.

Loss for the Transformer is just the backpropagated loss from other components in the pipeline, in your case the NER. In your case you should just be able to ignore it. You can see the source here.