Pretrain epoch loss strange behaviour #9312


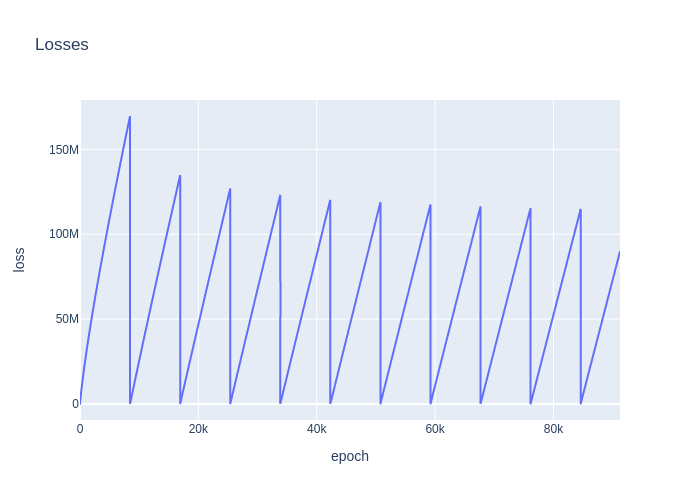

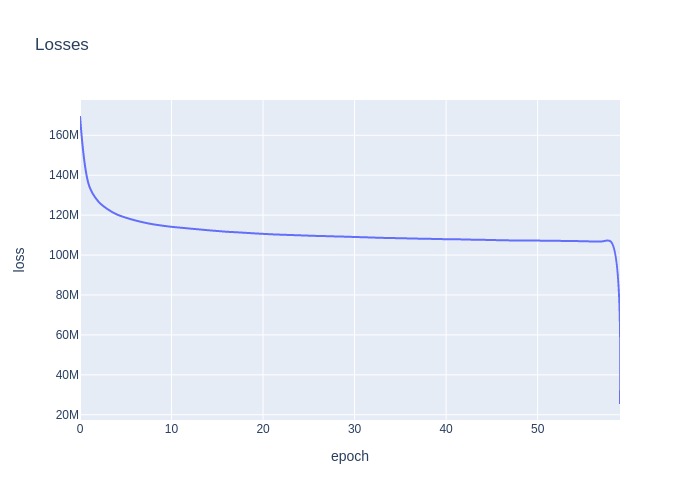

I am plotting epoch_loss from the log.jsonl written during pretraining, and the result looks strange. I am not sure what is going wrong. Pretraining runs on a Swedish-language dataset of approx. 7.6 million sentences and 129 million words. The plots above show the losses read from the log.jsonl file, generated with a short plotting script. The pretraining section of my config is taken directly from the pretraining section in the docs.
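For anyone wanting to inspect a similar log, here is a minimal sketch of reading `epoch_loss` values out of a JSON Lines file and grouping them by epoch. It is not the script used for the plots above. It assumes each line of log.jsonl is a JSON object with an `epoch_loss` key and an `epoch` key; the `epoch` key and the helper names `read_epoch_losses` and `summarize` are assumptions made for illustration.

```python
import json
from collections import defaultdict


def read_epoch_losses(lines):
    """Group epoch_loss values by epoch, preserving file order.

    `lines` is any iterable of JSONL strings (for example an open file).
    Assumes each record has "epoch_loss" and "epoch" keys (an assumption
    about the log layout, not confirmed by the thread).
    """
    per_epoch = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if "epoch_loss" not in record:
            continue  # skip records without the value of interest
        per_epoch[record.get("epoch", 0)].append(record["epoch_loss"])
    return dict(per_epoch)


def summarize(per_epoch):
    """Return (epoch, first_value, last_value, count) tuples sorted by epoch."""
    return [
        (epoch, values[0], values[-1], len(values))
        for epoch, values in sorted(per_epoch.items())
    ]
```

Calling `summarize(read_epoch_losses(open("log.jsonl", encoding="utf8")))` gives one row per epoch. Comparing the first and last value in each row may help show whether the logged number resets at epoch boundaries or keeps growing, which changes how a plot of it should be read.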


Replies: 1 comment 8 replies


Thanks for the report, that does look weird.

I don't see anything wrong with your graph code, but could you upload the raw data for us to look at?
