Identical models being trained even with different parameters #9403


I am afraid that more info is needed.

There are a variety of things that could be going wrong here, but just to check one thing - is If it is set to

Yes, I’m setting the vectors parameter in the [initialize] block. I’ve tried en_core_web_md/lg and also none, which made no difference to the final model. This could be explained by the fact that my data has little overlap with the standard pipelines. However, what I don’t understand is how pretraining tok2vec also has no impact on the model whatsoever. I made sure to include the .bin file as the tok2vec init, and the pipeline seems to be loading the pretrained weights. Any ideas there?

Hi! First of all, I want to thank the spaCy team for being available and helpful when users ask questions! I'm currently facing an issue that I'd like some help with.

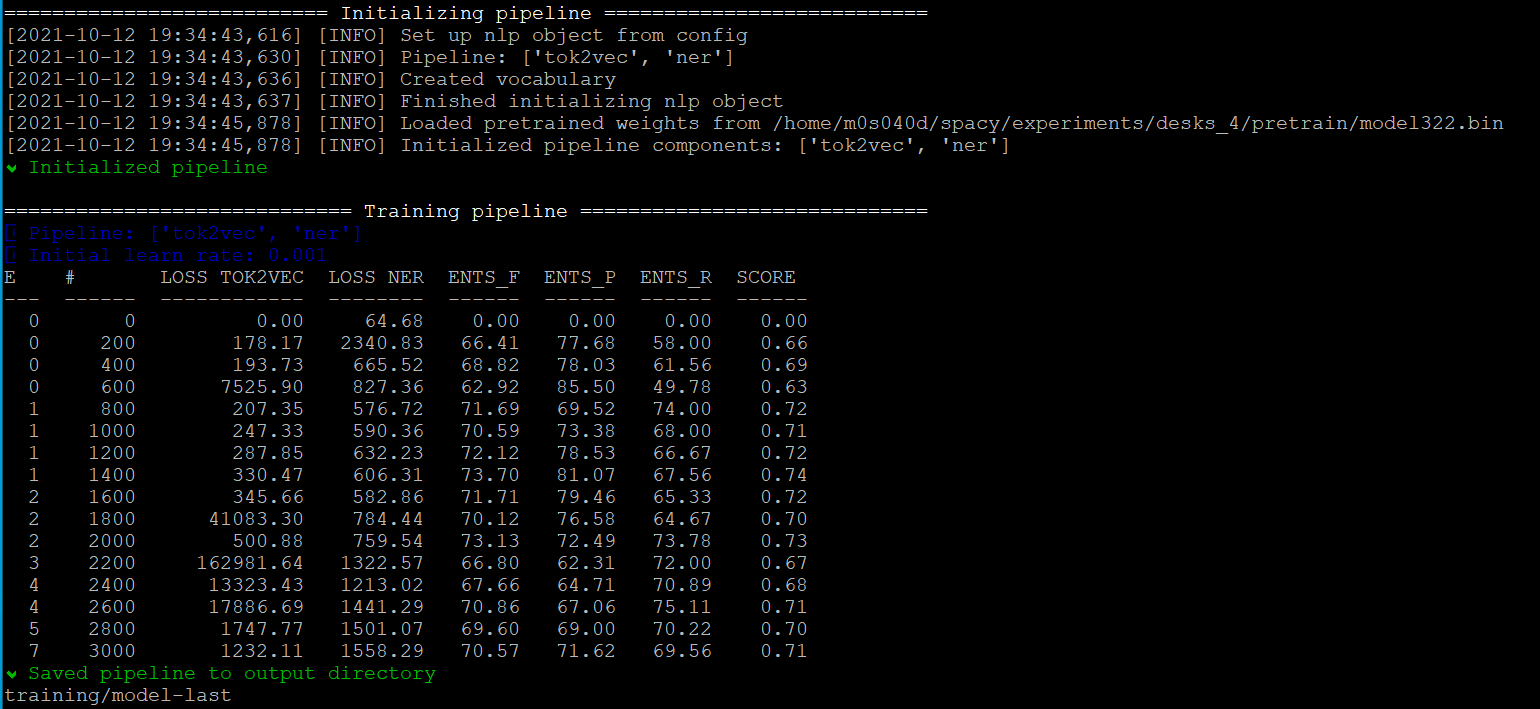

I'm training an NER model using 1.5k Prodigy-annotated data points. I'm running a spaCy project, with tok2vec and NER as the components. I have experimented with multiple parameters, all of them leading to what seems like the exact same model being trained (the NER F-scores for each entity and the overall F-score are identical each time).

In config.cfg, I've varied:

In all these experiments, I get the exact same F-score on the evaluation set. For each experiment, I create a new directory, remove project.lock and the training directories, and rerun `spacy project run all`.
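One sanity check that might help with a setup like this is to confirm that the settings actually differ between runs, for example by comparing the config.cfg files from two experiment directories. The following is only a rough sketch: `load_flat` and `diff_configs` are hypothetical helper names, it uses Python's standard-library `configparser` rather than spaCy's own config loader, and it compares raw strings, so variable references such as `${paths.vectors}` are not resolved. The two inline configs are made-up stand-ins for real files.

```python
import configparser


def load_flat(cfg_text: str) -> dict:
    """Parse INI-style config text into a flat {"section.key": raw_value} dict."""
    # interpolation=None keeps "${...}" references as plain text instead of failing.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # preserve key case exactly as written
    parser.read_string(cfg_text)
    flat = {}
    for section in parser.sections():
        for key, value in parser.items(section):
            flat[f"{section}.{key}"] = value.strip()
    return flat


def diff_configs(text_a: str, text_b: str) -> dict:
    """Return {"section.key": (value_a, value_b)} for every setting that differs."""
    a, b = load_flat(text_a), load_flat(text_b)
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(set(a) | set(b))
        if a.get(key) != b.get(key)
    }


# Illustrative stand-ins for the config.cfg contents of two experiments.
run_a = """
[initialize]
vectors = null
init_tok2vec = null
"""

run_b = """
[initialize]
vectors = "en_core_web_lg"
init_tok2vec = null
"""

changes = diff_configs(run_a, run_b)
print(changes)
```

With real files, the text could be read via `pathlib.Path(...).read_text()`. An empty result would suggest that both runs were trained with the same effective settings, which would be one possible explanation for identical scores.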

I might be missing something obvious here, but would really appreciate some help as to why this is happening! A brief explanation of the difference between the [initialize] block and tok2vec initialization would also be very helpful, as I haven't yet been able to find an explanation I fully understood.

Thanks,

Mustafa
