Sizing and controlling GPU memory for training #9451

Replies: 6 comments 16 replies

-


There are two batch sizes to adjust for GPU RAM:

The training batch size may have some minor effects on performance. The main corpus parameter to consider is text length, which is why the corpus reader also has the option to break texts up into individual sentences if they are beyond a specified token length. We use a lower
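A minimal pure-Python sketch of the two ideas above, with the assumptions stated loudly. A document is modelled as a list of sentences, each a list of token strings. The hypothetical `split_long_docs` mimics my understanding of the corpus reader's max_length option: documents under the limit are kept whole, longer ones are replaced by their sentences, and a sentence still over the limit is dropped. The hypothetical `batch_by_word_count` is a simplified words-per-batch batcher. Neither function is spaCy's code, and reading the "two batch sizes" as spaCy v3's [nlp] batch_size and the [training.batcher] size is likewise my assumption.

```python
from typing import Iterable, Iterator, List

Sentence = List[str]       # a sentence is a list of token strings
Document = List[Sentence]  # a document is a list of sentences


def split_long_docs(docs: Iterable[Document], max_length: int) -> Iterator[Sentence]:
    """Yield token sequences no longer than max_length (0 means no limit).

    Short documents are yielded whole; longer ones are replaced by their
    sentences, and any single sentence that is still too long is dropped.
    """
    for doc in docs:
        tokens = [tok for sent in doc for tok in sent]
        if not tokens:
            continue
        if max_length == 0 or len(tokens) <= max_length:
            yield tokens
            continue
        for sent in doc:
            if 0 < len(sent) <= max_length:
                yield sent


def batch_by_word_count(seqs: Iterable[Sentence], size: int) -> Iterator[List[Sentence]]:
    """Group sequences into batches of at most `size` tokens in total.

    A sequence that is longer than `size` on its own still gets its own batch.
    """
    batch: List[Sentence] = []
    words = 0
    for seq in seqs:
        if batch and words + len(seq) > size:
            yield batch
            batch, words = [], 0
        batch.append(seq)
        words += len(seq)
    if batch:
        yield batch


if __name__ == "__main__":
    docs = [
        [["a", "b"], ["c"]],                # 3 tokens: kept whole
        [["d"] * 4, ["e"] * 3, ["f"] * 9],  # 16 tokens: split into sentences
    ]
    pieces = list(split_long_docs(docs, max_length=5))
    print([len(p) for p in pieces])  # [3, 4, 3]  (the 9-token sentence is dropped)
    batches = list(batch_by_word_count(pieces, size=7))
    print([[len(s) for s in b] for b in batches])  # [[3, 4], [3]]
```

Splitting only changes how the text is cut into examples; the total number of tokens is unchanged, so it mainly helps with per-batch memory during the update.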

-

Well, I have gained a bit more experience since I wrote the above. I bought a 12GB RTX 3060 ... and my struggle with GPU out-of-memory errors did not end there. The first question to ask is: in which training phase do you run out of memory?

I kept running out of GPU memory in the evaluate() phase, and learned (see #9602) that evaluate() is designed to keep the entire 'dev' corpus in memory (twice, plus tensor data). Initially I tried using [corpora.dev] max_length = 200, but (after working with language.py) I learned that it is useless: regardless of how you break up your 'dev' corpus, it is loaded into memory all at once, and the tensors are kept on the GPU until the last document is scored. Note that with the spacy evaluate command, your 'eval' data sample may be bigger than 'dev', but not by much (it is the same code).

If you are running out of memory during training (the update step), the main parameters that do seem to matter are:

That said, I would first try training only the NER, and only after you succeed there, look at what the "relation_extractor" adds.
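Because evaluate() holds the whole dev corpus at once, the practical lever is the total size of the dev set rather than max_length or batch_size. Below is a small sketch of capping the dev set by a token budget, assuming (roughly) that evaluation memory grows with the number of dev tokens. `pick_dev_subset` is a hypothetical helper, and reading the per-document lengths out of your DocBin files is left out.

```python
import random
from typing import List, Sequence


def pick_dev_subset(doc_lengths: Sequence[int], token_budget: int, seed: int = 0) -> List[int]:
    """Return sorted indices of a random subset of docs whose total length fits the budget.

    Assumption: evaluation memory grows roughly with the total number of dev
    tokens, because the whole dev set is held at once.
    """
    order = list(range(len(doc_lengths)))
    random.Random(seed).shuffle(order)  # random but reproducible sample
    chosen: List[int] = []
    total = 0
    for i in order:
        # Skip docs that would overflow the budget; smaller ones may still fit.
        if total + doc_lengths[i] <= token_budget:
            chosen.append(i)
            total += doc_lengths[i]
    return sorted(chosen)


if __name__ == "__main__":
    lengths = [956, 1400, 300, 820, 1100, 640]  # made-up token counts per dev doc
    subset = pick_dev_subset(lengths, token_budget=3000)
    print(subset, sum(lengths[i] for i in subset))
```

Raising or lowering token_budget between runs lets you grow the dev set until GPU memory is nearly full, without running into an OOM halfway through evaluation.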

-

Regarding max_length: your [corpora.train] has it commented out. As I wrote above, using max_length for [corpora.dev] (i.e. for evaluate()) is pointless, because the code does its best to load all the data into memory regardless of the batch_size (which is only used by the pipeline components). There were some comments that using a max_length other than zero may lead to 'inconsistent results', especially when there are no good sentence markers. I use the sentencizer (in my data preparation pipeline), so I have 'good' sentence markers, and I was still getting inconsistent results. Using max_length = 200 for [corpora.train] did not help with the GPU OOM, so I abandoned it.

My setup is Windows 10 with an RTX 3060 12GB GPU, NVIDIA CUDA 11.5 and spaCy 3.2, using the config.cfg below. It handles my data set of 9,738 docs averaging 956 words (spaCy tokens) per document (the longest is about 1.5 times the average). The dev set uses only 400 such docs; above that, I get a GPU OOM in evaluate() during training.
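To put these figures in perspective, here is the back-of-the-envelope arithmetic, using only the numbers quoted above and assuming every document has the average length (the real totals will differ somewhat).

```python
# Figures quoted in the reply above.
train_docs = 9738
dev_docs = 400
avg_tokens = 956
max_ratio = 1.5  # the longest doc is about 1.5x the average

dev_tokens = dev_docs * avg_tokens      # held by evaluate() all at once
train_tokens = train_docs * avg_tokens  # streamed through in batches per epoch
longest_doc = round(avg_tokens * max_ratio)

print(f"dev tokens held at once:   ~{dev_tokens:,}")
print(f"training tokens per epoch: ~{train_tokens:,}")
print(f"longest document:          ~{longest_doc:,} tokens")
```

Splitting documents with max_length would not change dev_tokens, which fits the observation that max_length on [corpora.dev] does not help.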

-

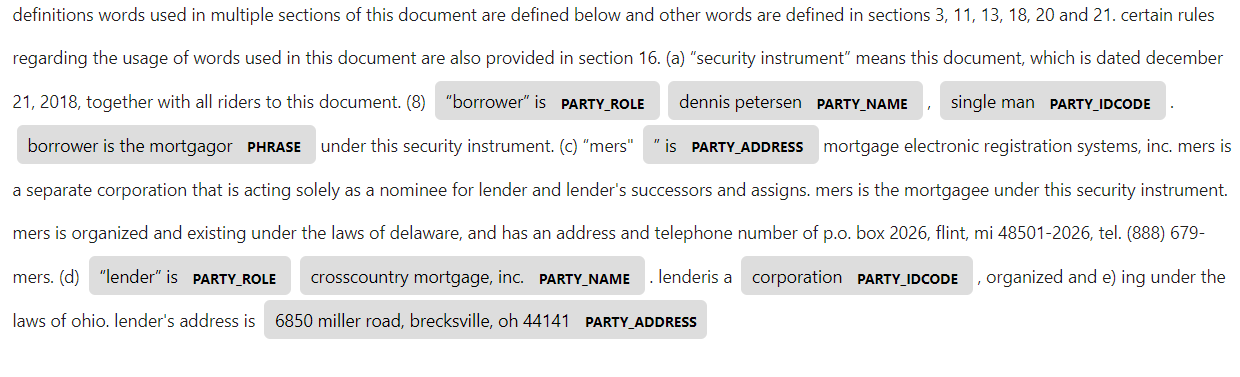

@mbrunecky By the way, I am training the NER and relation extraction components together, with a 5,000-example training set and a 500-example dev set. The image above shows GPU usage during training. Any suggestions on increasing the dev data would also be helpful since, as you can see, there is still some unused GPU memory.

-

On Windows, the GPU view on Task Manager's Performance tab provides nice graphs of GPU memory, CUDA, Copy and Copy2 usage; I use the 'slow' refresh.

GPU memory usage with torch is tricky, because its memory allocator keeps GPU memory reserved even when it is not in use, and then seems to release it at some (perhaps predictable) points.
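If you want numbers rather than Task Manager graphs, PyTorch can report both what live tensors use and what its caching allocator has reserved. A minimal sketch, assuming PyTorch with CUDA is installed (it returns None otherwise); `gpu_memory_report` is a hypothetical helper, while torch.cuda.memory_allocated, memory_reserved, max_memory_allocated and empty_cache are standard PyTorch calls. As I understand it, spaCy/thinc allocations only show up here when they are routed through PyTorch (the gpu_allocator = "pytorch" setting); otherwise they go through CuPy's own memory pool.

```python
def gpu_memory_report(device: int = 0):
    """Return allocated vs. reserved GPU memory in MiB, or None without CUDA.

    allocated: memory held by live tensors.
    reserved: memory held by PyTorch's caching allocator, including freed but
    cached blocks; this is closer to what Task Manager or nvidia-smi show.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    return {
        "allocated_mib": torch.cuda.memory_allocated(device) / mib,
        "reserved_mib": torch.cuda.memory_reserved(device) / mib,
        "peak_allocated_mib": torch.cuda.max_memory_allocated(device) / mib,
    }


if __name__ == "__main__":
    report = gpu_memory_report()
    print(report if report is not None else "CUDA not available")
```

The gap between reserved and allocated is the cached memory described above. Calling torch.cuda.empty_cache() hands unused cached blocks back to the driver (so Task Manager shows them as free) but does not free memory held by live tensors.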

I found that peak GPU memory usage can go up at any time (often late) during the first epoch, since there may be a bigger document later in the data. I only relax after the first epoch has completed. My GPU memory usage eventually grows to 11.5 GB, because if I see less usage, I just add more DocBins to my 'dev' sample for the next run.
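A small pure-Python sketch for spotting that "bigger document later in the data" before a long run, assuming you already have the token length of each training doc in data order (collecting them from your DocBins is not shown). The hypothetical `first_peak_position` estimates how far into an epoch the longest document first appears, and the hypothetical `longest_docs` lists the worst cases, which you could copy into a small smoke-test corpus so the peak is hit in minutes rather than late in epoch one. These are illustrations, not spaCy functions; if training shuffles the examples, the real order will differ, and peak memory also depends on what else lands in the same batch.

```python
import heapq
from typing import List, Sequence


def first_peak_position(doc_lengths: Sequence[int]) -> float:
    """Fraction of the epoch (0..1) at which the longest doc is first reached."""
    longest = max(doc_lengths)
    return doc_lengths.index(longest) / len(doc_lengths)


def longest_docs(doc_lengths: Sequence[int], k: int) -> List[int]:
    """Indices of the k longest documents, longest first (ties keep data order)."""
    return heapq.nlargest(k, range(len(doc_lengths)), key=doc_lengths.__getitem__)


if __name__ == "__main__":
    lengths = [400, 950, 700, 1300, 880, 1420, 600]  # made-up token counts, in data order
    print(f"longest doc first appears {first_peak_position(lengths):.0%} into the epoch")
    print("worst cases:", longest_docs(lengths, k=3))
```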

From: Karndeep Singh

Sent: Sunday, November 28, 2021 5:08 AM


-

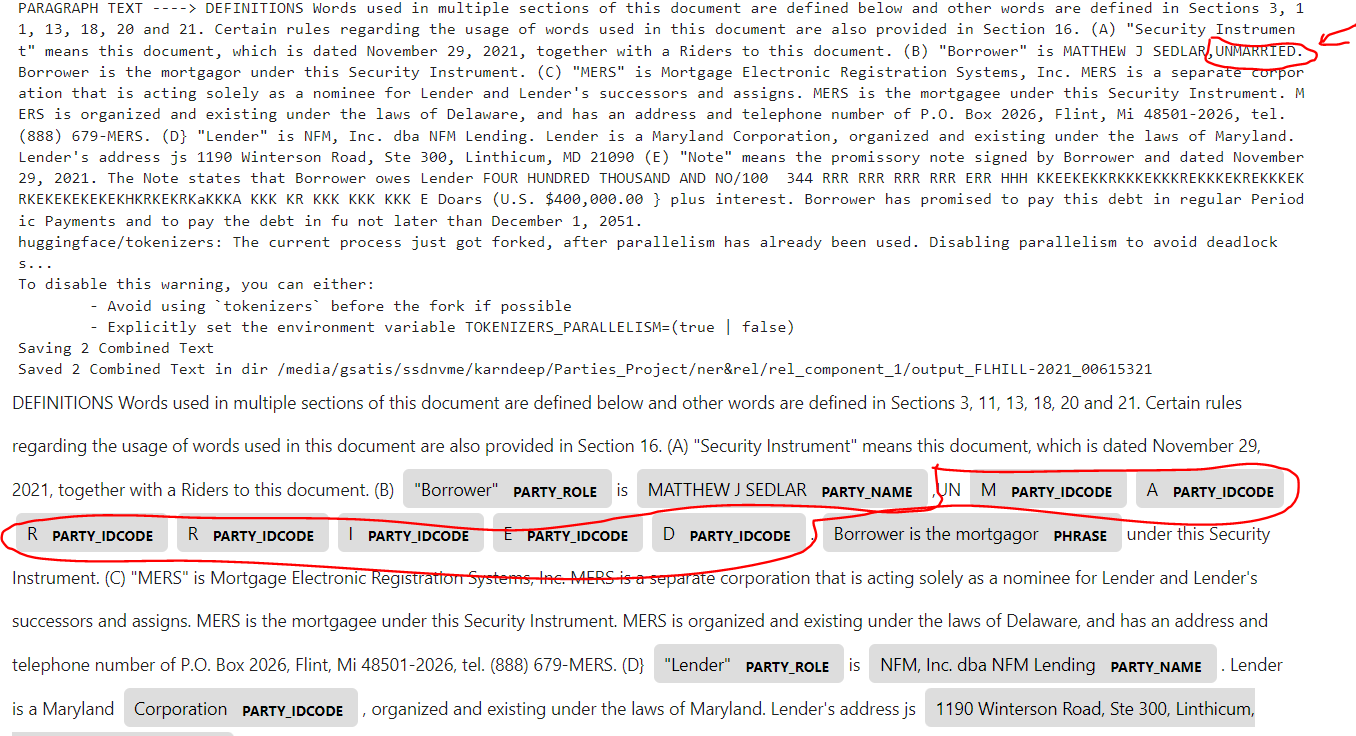

@mbrunecky Hi, any idea why each character of the word "UNMARRIED" is being predicted as PARTY_IDCODE, rather than the whole word "UNMARRIED" being predicted as "PARTY_IDCODE"? Please help me understand.

-

Background:

In spaCy 2.3 I was able to use an 8GB GPU for all my NER training, getting about 3x better performance. With spaCy 3, the documentation suggests 'at least 10GB', and sure enough, the same models run out of 8GB GPU memory (unless I tweak down the parameters, but then my prediction accuracy suffers).

With the addition of transformers (and they are great), the GPU memory needs go even higher (at least I am unable to 'tweak down' my configuration to fit into 8GB).

That said, GPU memory usage is significantly lower in production (using trained models to get predictions). I can easily use my 8GB GPU for my (CPU-only trained) transformer models or en_core_web_trf, and get the speed-up.

This raises two questions:

I do not want to buy another GPU card (especially at today's prices) and later find out that (for example) 12GB is not enough and I need another upgrade. On the other hand, training a transformer on CPU only (even with 20 cores) takes too many days...

On the subject of 'controlling GPU memory usage':

It seems that batch_size has only a limited impact (it is used mainly during validation), so reducing it only adds some overhead and saves memory. For tok2vec training, the significant parameters seem to be (and there are probably more):

Reducing width/depth saves memory, but (in my case) hurts accuracy.
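A rough sketch of how width and depth feed into memory, assuming a MaxoutWindowEncoder-style layer (each token vector concatenated with its neighbours, then a maxout layer and a layer norm) with window_size=1 and maxout_pieces=3. The formulas are my approximation rather than spaCy's code, the activation count is only a crude estimate of what is kept for the backward pass, and all function names are hypothetical. The point is the scaling: weights grow with width squared, activations with tokens × width × depth.

```python
def encoder_weight_count(width: int, depth: int, window_size: int = 1, maxout_pieces: int = 3) -> int:
    """Approximate trainable weights in a stack of maxout window layers."""
    n_in = width * (2 * window_size + 1)   # token vector plus its neighbours
    maxout = width * maxout_pieces * n_in  # maxout weight matrix
    maxout_bias = width * maxout_pieces
    layer_norm = 2 * width                 # gain and bias
    return depth * (maxout + maxout_bias + layer_norm)


def encoder_activation_floats(tokens: int, width: int, depth: int,
                              window_size: int = 1, maxout_pieces: int = 3) -> int:
    """Crude count of activation floats per batch: windowed input plus maxout pieces."""
    per_token_per_layer = width * (2 * window_size + 1) + width * maxout_pieces
    return tokens * depth * per_token_per_layer


def mib(n_floats: int, bytes_per_float: int = 4) -> float:
    """Convert a float32 count to MiB."""
    return n_floats * bytes_per_float / 1024 ** 2


if __name__ == "__main__":
    for width, depth in [(96, 4), (192, 4), (96, 8)]:
        w = encoder_weight_count(width, depth)
        a = encoder_activation_floats(tokens=2000, width=width, depth=depth)
        print(f"width={width:>3} depth={depth}: weights ~{mib(w):.1f} MiB, "
              f"activations for 2000 tokens ~{mib(a):.1f} MiB")
```

Under these assumptions the activation term, which depends on how many tokens are in a batch, dwarfs the weights, which is consistent with text length being the main corpus lever mentioned earlier in the thread.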

And (despite lots of trying) I have not found anything that would reduce the transformer memory greed :-).
