Dependency Parser Training #9470


Hi everyone, I was working on developing Korean language support and I have a couple of questions regarding implementation and the dataset. Would anyone be so kind as to answer the following questions?




I think we can train these on separate datasets, but I'm not sure I've ever seen a dependency parsing dataset without POS data. Do you have one like that?

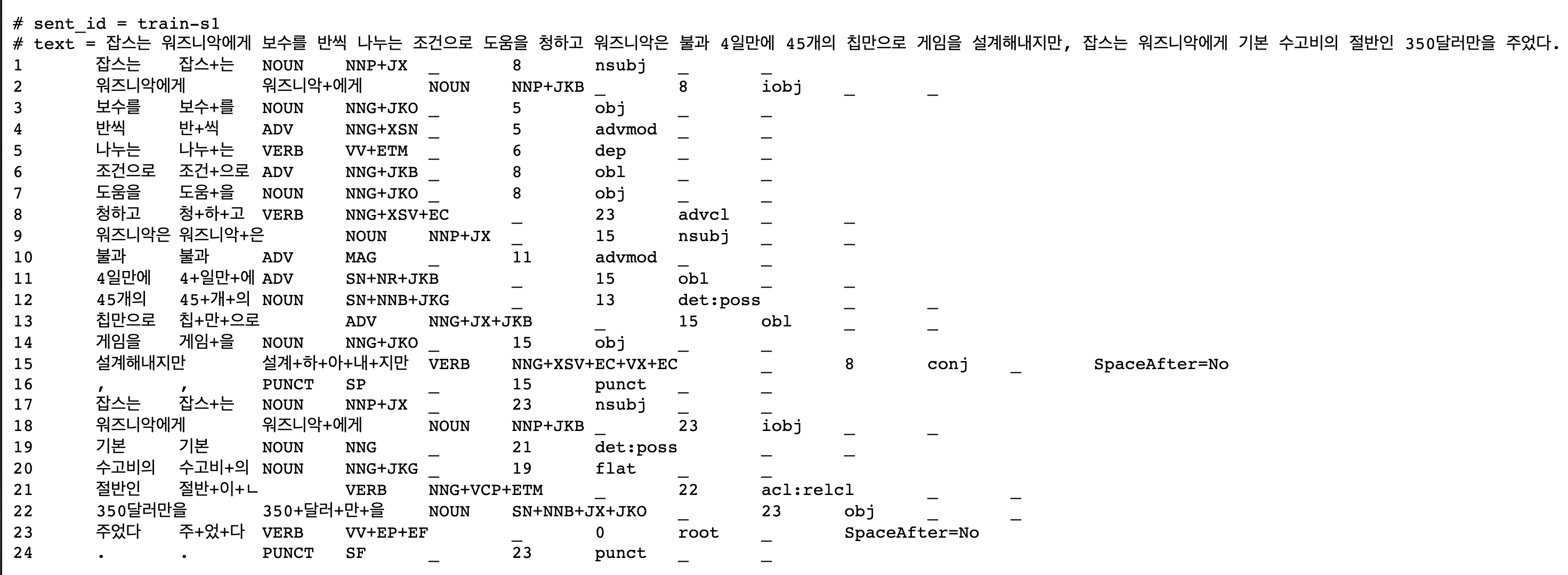

I think you would need to convert the word-based annotations to morpheme-based ones. I know something similar happened with some Japanese datasets, which had dependency annotations at the bunsetsu level ("bunsetsu" is roughly word + particles/endings) that were converted to token-level annotations. It was possible to automate that because bunsetsu-internal structure was basically always unambiguous and predictable. Does that sound feasible for Korean?
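To make the conversion idea concrete, here is a minimal sketch in plain Python of how word-level arcs could be expanded to morpheme-level arcs. It is not taken from spaCy or from any existing converter. The `Word` class, the `words_to_morphemes` function, the placeholder label `dep`, and the use of `-1` for the root are all hypothetical choices made for illustration. It assumes that the first morpheme of each word is that word's head and that every other morpheme attaches to it. That is the "unambiguous and predictable" case described above, and real Korean data may well need different rules.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    morphemes: List[str]  # morphemes of this word, in surface order
    head: int             # index of the head word, or -1 for the root
    deprel: str           # word-level dependency label


def words_to_morphemes(
    words: List[Word], internal_label: str = "dep"
) -> List[Tuple[str, int, str]]:
    """Expand word-level arcs into (form, head, label) morpheme-level arcs.

    Assumption (hypothetical rule): the first morpheme of a word is its head,
    and all remaining morphemes attach to it with `internal_label`.
    """
    # Position of each word's first morpheme in the flat morpheme sequence.
    starts = []
    offset = 0
    for word in words:
        starts.append(offset)
        offset += len(word.morphemes)

    tokens = []
    for word, start in zip(words, starts):
        for j, form in enumerate(word.morphemes):
            if j == 0:
                # The head morpheme inherits the word's external arc,
                # re-pointed at the head morpheme of the head word.
                head = -1 if word.head < 0 else starts[word.head]
                tokens.append((form, head, word.deprel))
            else:
                # Word-internal morphemes attach to their own word's head.
                tokens.append((form, start, internal_label))
    return tokens


# Illustrative segmentation and labels only, not taken from any treebank.
words = [
    Word(["나", "는"], head=2, deprel="nsubj"),
    Word(["학교", "에"], head=2, deprel="obl"),
    Word(["가", "았", "다"], head=-1, deprel="root"),
]
tokens = words_to_morphemes(words)
for i, (form, head, label) in enumerate(tokens):
    print(i, form, head, label)
```

Whether a single fixed rule like this is good enough depends on how regular the word-internal structure is in the dataset. If it is not regular, the head choice and the internal labels would have to come from the morphological analysis rather than from position.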


Often basic features that are relevant for POS prediction are also relevant for the dependency parse - for example, `nmod` usually attaches to an adjective and noun pair. There's no guarantee that's optimal, but we also don't have some other architectures (like pointer generators and CRFs) in spaCy.