Combining Pretrained and Trained NER Components, both with Transformers #9784

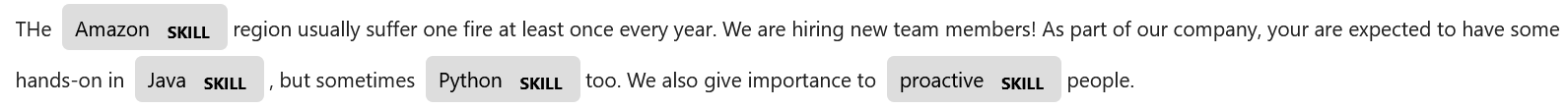

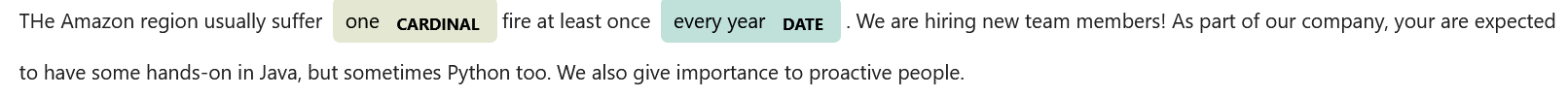

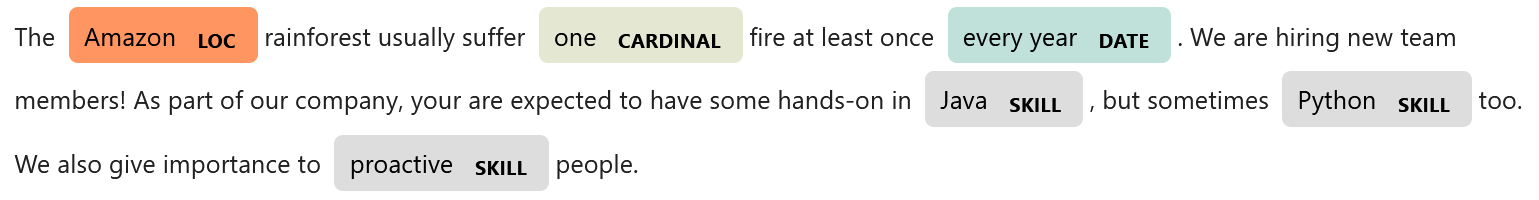

Hello everyone! I have just finished training an NER model using transformers (accuracy matters for the application I am designing) to recognize a single entity: 'SKILL'. For now, I want another highly accurate, pretrained NER model (say "en_core_web_trf") to identify other entities, so I want to "chain" the two models (mine and the pretrained one), which according to the documentation is possible. More specifically, I have followed this tutorial. I am facing 2 issues with it:

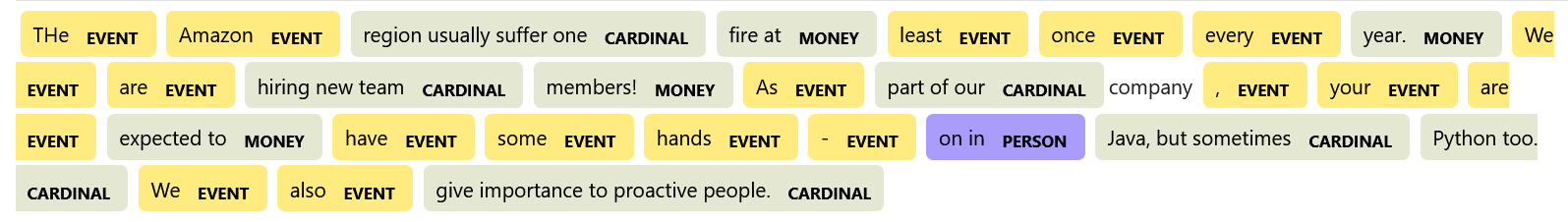

Which seems weird because [...] And the pre-trained model [...] Needless to say, I was expecting to obtain "the sum" of both NER models, but that is not what I got. What am I doing wrong? I am using spaCy v3.2.0 on Python 3.9.7. Please let me know if you need anything else to better understand these questions.

PS: I also have some more general, related questions:

Thank you.

Replies: 2 comments 6 replies

Transformers pipelines use a "transformer" in the same way that non-transformers use a "tok2vec", so you should change the `replace_listeners` call to refer to the transformer layer rather than the tok2vec layer. (We have had some issues with this in the past, but I think this should work at present.)

You get nonsense labels because, as the warning suggests, the ner component from the other pipeline was trained with different vectors.

As a general note, non-transformer models are not "word vector" models. The tok2vec layer is a CNN, which optionally uses word vectors as input features.
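To make the `replace_listeners` advice concrete, here is a minimal sketch of how it might look in Python. It assumes both pipelines are already loaded, that the pretrained pipeline has components named "transformer" and "ner", and that its ner listener sits at "model.tok2vec" (as in the shipped `en_core_web_trf` config, as far as I know). The helper name `add_sourced_ner` and the component name "ner_pretrained" are made up for illustration.

```python
def add_sourced_ner(custom_nlp, source_nlp, name="ner_pretrained"):
    """Copy the NER component of `source_nlp` into `custom_nlp`.

    Assumption: `source_nlp` is a transformer pipeline whose shared layer
    is the component "transformer" and whose ner listens at "model.tok2vec".
    """
    # Give the sourced ner its own private copy of the transformer, so it
    # no longer listens to a shared component that custom_nlp does not have.
    source_nlp.replace_listeners("transformer", "ner", ["model.tok2vec"])
    # Both pipelines already contain a component called "ner", so the
    # sourced copy needs a different name in the combined pipeline.
    custom_nlp.add_pipe("ner", name=name, source=source_nlp)
    return custom_nlp


# Hypothetical usage (needs spaCy, spacy-transformers and both pipelines):
#   import spacy
#   nlp = spacy.load("path/to/my_skill_model")    # placeholder path
#   pretrained = spacy.load("en_core_web_trf")
#   nlp = add_sourced_ner(nlp, pretrained)
#   print(nlp.pipe_names)
```

Note that the sourced ner then carries its own transformer, so the combined pipeline runs two transformers per document and will be noticeably slower and larger.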

Yes.

No. Note that you do need consistent word vectors (which are separate from the tok2vec) between pipelines if they were used as features in training the tok2vec.

GPU uses Transformers, CPU does not.

Each pipeline has one set of default vectors associated with the [...] That's kind of slow, so the actual check in spaCy tries some faster things first, like making sure the shapes are equivalent.

One thing is, Transformers models don't have word vectors, so I'm a little confused about why you would be getting this warning if you're only using Transformers models. This warning should only apply to the word vectors, not to the tok2vec/transformers vectors.
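The "try the fast things first" idea can be sketched roughly as below. This is not spaCy's actual check, only an illustration of the ordering. It assumes each argument behaves like a spaCy `Vectors` table, exposing a `shape` attribute and a `to_bytes()` method; the function name `vectors_look_identical` is hypothetical.

```python
def vectors_look_identical(vectors_a, vectors_b):
    """Compare two vectors tables, running the cheapest check first."""
    # Tables with different shapes cannot be equal, and comparing shapes
    # costs almost nothing.
    if vectors_a.shape != vectors_b.shape:
        return False
    # Only when the shapes agree do we pay for the slow, exhaustive
    # comparison of the serialized contents.
    return vectors_a.to_bytes() == vectors_b.to_bytes()


# Hypothetical usage with two loaded pipelines:
#   same = vectors_look_identical(nlp_a.vocab.vectors, nlp_b.vocab.vectors)
```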

If you are only using Transformers, this shouldn't matter. Otherwise, you can just use the same base model ([...]). You might find this section in the docs helpful.
