Speed up inference instance segmentation #2891


namnguyenhai asked this question in Q&A


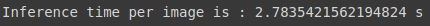

Hi all, I have instance segmentation working with the "mask_rcnn_R_101_FPN_3x" model. Inference takes about 3 seconds per image on the GPU. How can I make it faster?

I'm running the code in Google Colab.

This is my setup config:

This is the inference code:

Output:
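One thing worth checking is how the 3 seconds were measured. If the timing covers only a single call, it may include one-time costs (for example CUDA context setup on the first forward pass), and because GPU work is asynchronous, a timer without a synchronization point can attribute time to the wrong call. The helper below is a minimal, hypothetical sketch (`benchmark` is not a Detectron2 function): it runs a few warm-up calls, then reports the mean time of the remaining calls, with an optional `sync` callback such as `torch.cuda.synchronize`.

```python
import time
from typing import Any, Callable, Optional


def benchmark(
    fn: Callable[[Any], Any],
    arg: Any,
    warmup: int = 3,
    iters: int = 10,
    sync: Optional[Callable[[], None]] = None,
) -> float:
    """Return the mean seconds per call of fn(arg), excluding warm-up calls.

    Hypothetical helper: `sync` should block until queued GPU work is done
    (e.g. torch.cuda.synchronize) so the timer measures finished work.
    """
    # Warm-up calls absorb one-time costs and are not timed.
    for _ in range(warmup):
        fn(arg)
    if sync is not None:
        sync()  # make sure warm-up work is finished before starting the clock

    start = time.perf_counter()
    for _ in range(iters):
        fn(arg)
    if sync is not None:
        sync()  # wait for the timed GPU work to complete
    return (time.perf_counter() - start) / iters
```

Assuming the usual Colab setup where `predictor = DefaultPredictor(cfg)` and `im = cv2.imread(...)` (names assumed, not taken from the post), a call would look like `benchmark(predictor, im, sync=torch.cuda.synchronize)`. Building the predictor should stay outside the timed region, since it loads the weights.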

The images I run inference on are 1024 x 1024 pixels. I have tried different sizes, but inference still takes 3 seconds per image. Am I missing something about Detectron2?
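A likely reason the input size makes no difference: Detectron2's `DefaultPredictor` resizes every image with `ResizeShortestEdge` before the forward pass, using `cfg.INPUT.MIN_SIZE_TEST` and `cfg.INPUT.MAX_SIZE_TEST` (800 and 1333 by default, assuming the config does not override them). The sketch below is a hypothetical re-implementation of that shape computation (`resized_shape` is not a Detectron2 API). It shows that square inputs of 512, 1024 or 2048 pixels all end up as 800 x 800 tensors, so the network does roughly the same amount of work.

```python
from typing import Tuple


def resized_shape(
    h: int, w: int, short_edge: int = 800, max_size: int = 1333
) -> Tuple[int, int]:
    """Approximate the (height, width) after shortest-edge resizing.

    Sketch of the rule: scale so the shorter side equals `short_edge`,
    then shrink further if the longer side would exceed `max_size`.
    """
    scale = short_edge / min(h, w)
    if h < w:
        newh, neww = float(short_edge), scale * w
    else:
        newh, neww = scale * h, float(short_edge)
    # Cap the longer side at max_size, keeping the aspect ratio.
    if max(newh, neww) > max_size:
        scale = max_size / max(newh, neww)
        newh, neww = newh * scale, neww * scale
    # Round to the nearest integer pixel size.
    return int(newh + 0.5), int(neww + 0.5)


for side in (512, 1024, 2048):
    print(side, "->", resized_shape(side, side))
```

Under these assumptions, resizing the images beforehand does not change the input the model sees; lowering `cfg.INPUT.MIN_SIZE_TEST` (for example to 512) does, at some cost in accuracy on small objects.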

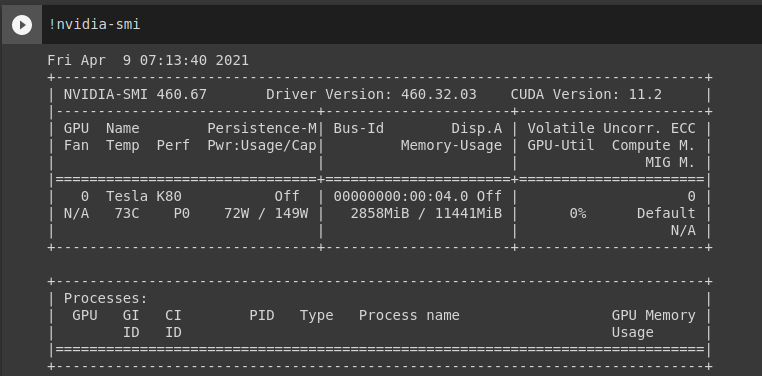

More information about the GPU:
