
Description

We have an application where we regularly respond with lists of several thousand objects. Completing such a list can take several seconds. During that time the event loop is blocked and the server is unresponsive, which is far from ideal.

The problematic `forEach` call is in `completeListValue`:

https://github.com/graphql/graphql-js/blob/master/src/execution/execute.js#L911

Would it make sense to split this work into smaller synchronous chunks, so that control returns to the event loop more frequently?
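To illustrate why returning to the event loop matters, here is a small standalone sketch in plain Node.js with no GraphQL involved. The helpers `processSync`, `processChunked` and `callbackOrder` are hypothetical. A callback queued with `setImmediate` stands in for another incoming request, and the chunk size of 1000 is arbitrary.

```js
// One uninterrupted pass: no other callback can run until it returns.
function processSync(items, work) {
  return Promise.resolve(items.map(work));
}

// Same work, but control returns to the event loop after every `chunkSize` items.
function processChunked(items, work, chunkSize) {
  return new Promise((resolve, reject) => {
    const out = [];
    let i = 0;
    const runChunk = () => {
      try {
        const end = Math.min(i + chunkSize, items.length);
        for (; i < end; i++) out.push(work(items[i]));
        if (i < items.length) setImmediate(runChunk); // yield, then continue
        else resolve(out);
      } catch (err) {
        reject(err); // a throw inside a setImmediate callback would otherwise be uncaught
      }
    };
    runChunk();
  });
}

// Records whether an unrelated callback (think: another request) gets to run
// before the list has been fully processed.
async function callbackOrder(processor) {
  const order = [];
  setImmediate(() => order.push('other callback'));
  const items = Array.from({ length: 10000 }, (_, i) => i);
  await processor(items, x => x * 2);
  order.push('list done');
  await new Promise(resolve => setImmediate(resolve)); // let pending callbacks run
  return order;
}

(async () => {
  console.log(await callbackOrder(processSync));
  // [ 'list done', 'other callback' ]
  console.log(await callbackOrder((items, work) => processChunked(items, work, 1000)));
  // [ 'other callback', 'list done' ]
})();
```

With the synchronous pass, the other callback only runs after the whole list is done. With chunking, it runs between chunks.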

Below is a working solution for anyone who has the same problem and is fine with monkey-patching the internals of the `execute` module.

The chunked implementation only switches to chunks once the completion time exceeds a given threshold (e.g. 50 ms). It uses a variable chunk size to minimize overhead: the number of items completed before the threshold was hit becomes the chunk size for the rest of the list.
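The threshold strategy can be sketched outside of graphql-js as a generic helper. `mapAdaptive` and its `thresholdMs`/`checkEvery` options are hypothetical names chosen for illustration. The defaults are the 50 ms / every-20th-item values used in the patch, and this is not part of any library.

```js
// Hypothetical helper: map `fn` over `items` synchronously, checking the clock
// every `checkEvery` items. Once more than `thresholdMs` have elapsed, the rest
// is processed in chunks (sized by how many items fit into that first slice),
// yielding to the event loop between chunks.
function mapAdaptive(items, fn, { thresholdMs = 50, checkEvery = 20 } = {}) {
  const t0 = Date.now();
  const results = [];
  let idx = 0;
  for (; idx < items.length; idx++) {
    if (idx > 0 && idx % checkEvery === 0 && Date.now() - t0 > thresholdMs) break;
    results.push(fn(items[idx], idx));
  }
  if (idx === items.length) return results; // fast path: finished within budget

  const chunkSize = idx; // items that fit into the first time slice
  const yieldToEventLoop = () => new Promise(resolve => setImmediate(resolve));
  return (async () => {
    for (let start = idx; start < items.length; start += chunkSize) {
      await yieldToEventLoop();
      const end = Math.min(start + chunkSize, items.length);
      for (let i = start; i < end; i++) results.push(fn(items[i], i));
    }
    return results; // a throw in `fn` rejects this promise instead of crashing
  })();
}

// Small inputs come back synchronously as a plain array.
console.log(mapAdaptive([1, 2, 3], x => x * 10)); // [ 10, 20, 30 ]

// A deliberately slow item function pushes the helper onto the chunked path.
const slowDouble = x => { const end = Date.now() + 2; while (Date.now() < end); return x * 2; };
const pending = mapAdaptive(Array.from({ length: 100 }, (_, i) => i), slowDouble);
console.log(pending instanceof Promise); // true
pending.then(r => console.log(r.length)); // 100
```

Returning a plain array on the fast path keeps small lists fully synchronous, so the promise overhead is only paid for lists that actually exceed the time budget.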

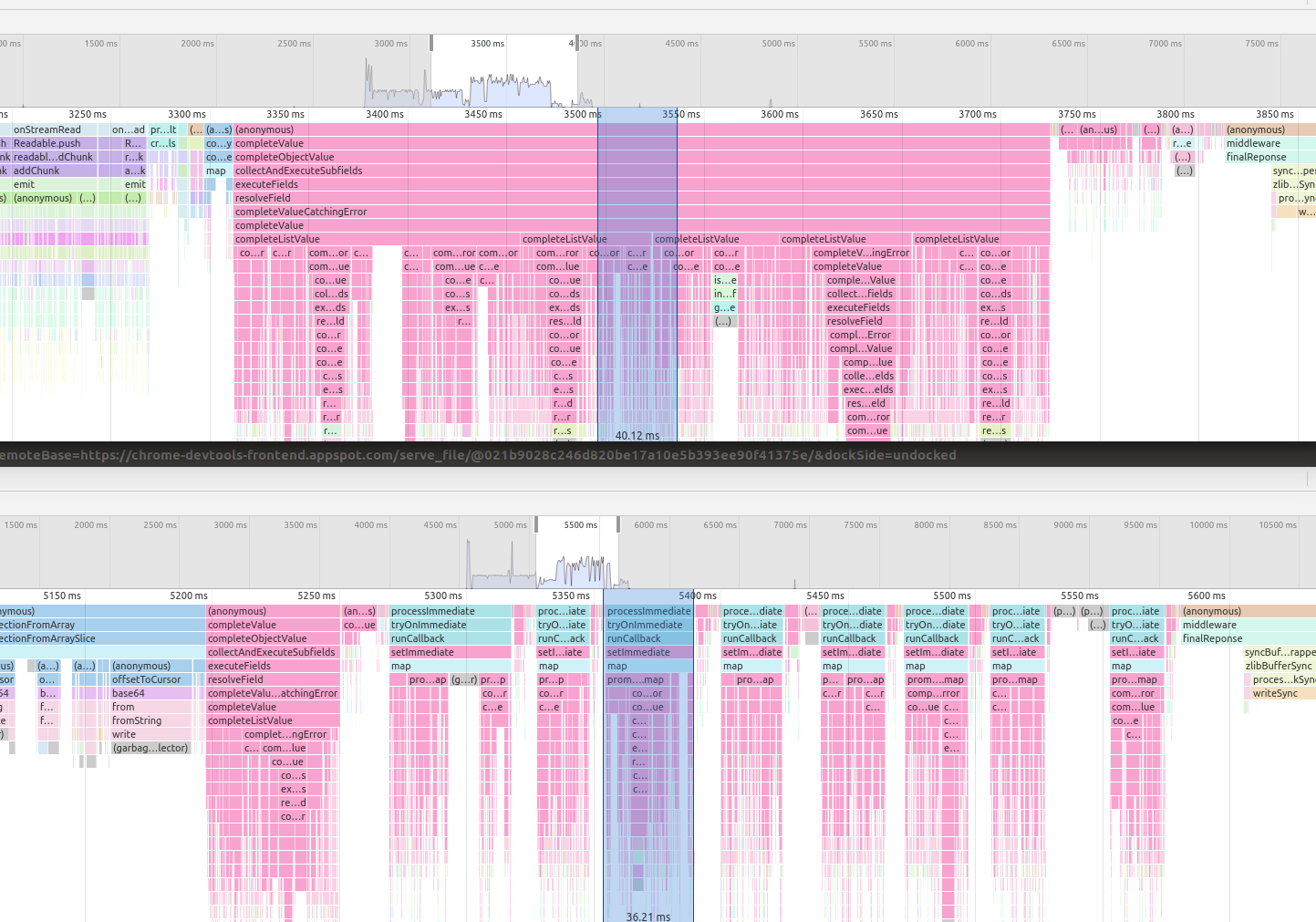

Profiles before and after chunkification:

```js
const rewire = require('rewire');
const { GraphQLError } = require('graphql');

// rewire loads a fresh, private copy of graphql's execute module whose
// internal (non-exported) functions can be read and replaced.
const executeModule = rewire('graphql/execution/execute');
const completeValueCatchingError = executeModule.__get__('completeValueCatchingError');

// Start chunking once completing a single list takes longer than this.
const TIME_THRESHOLD_MS = 50;
// Only check the clock every Nth item to keep the overhead low.
const CHECK_EVERY = 20;

// Resolves on a later turn of the event loop, letting other callbacks run.
const yieldToEventLoop = () => new Promise(resolve => setImmediate(resolve));

function completeListValueChunked(exeContext, returnType, fieldNodes, info, path, result) {
  if (!Array.isArray(result)) {
    throw new GraphQLError(
      `Expected Iterable, but did not find one for field ${info.parentType.name}.${info.fieldName}.`
    );
  }

  const itemType = returnType.ofType;
  // The { prev, key } path object mirrors the addPath() behaviour in execute.js.
  const completeItem = (item, idx) =>
    completeValueCatchingError(exeContext, itemType, fieldNodes, info, { prev: path, key: idx }, item);

  const completedResults = [];
  let containsPromise = false;
  const t0 = Date.now();
  let breakIdx;

  for (let idx = 0; idx < result.length; idx++) {
    // Every CHECK_EVERY items, check whether the time budget is used up.
    // If so, stop here and complete the remaining items in chunks.
    if (idx > 0 && idx % CHECK_EVERY === 0 && Date.now() - t0 > TIME_THRESHOLD_MS) {
      breakIdx = idx;
      break;
    }
    const completedItem = completeItem(result[idx], idx);
    if (!containsPromise && completedItem instanceof Promise) {
      containsPromise = true;
    }
    completedResults.push(completedItem);
  }

  if (breakIdx === undefined) {
    // Finished within the time budget: same behaviour as the original.
    return containsPromise ? Promise.all(completedResults) : completedResults;
  }

  // The number of items completed within the budget is used as the chunk size.
  const chunkSize = breakIdx;
  return (async () => {
    let values = await Promise.all(completedResults);
    for (let start = breakIdx; start < result.length; start += chunkSize) {
      await yieldToEventLoop();
      const end = Math.min(start + chunkSize, result.length);
      const chunk = [];
      for (let idx = start; idx < end; idx++) {
        chunk.push(completeItem(result[idx], idx));
      }
      // Errors thrown while completing a chunk (e.g. a null in a non-null list)
      // reject the returned promise instead of escaping from a setImmediate
      // callback as an uncaught exception.
      values = values.concat(await Promise.all(chunk));
    }
    return values;
  })();
}

// Monkey-patch completeListValue inside the rewired execute module.
executeModule.__set__('completeListValue', completeListValueChunked);

// Use the rewired execute function in the actual server. Note that
// require('graphql').execute and graphql() keep using the unpatched module.
const rewiredExecute = executeModule.execute;
```