
---
title: "Introduction to 3D Gaussian Splatting"
thumbnail: /blog/assets/124_ml-for-games/thumbnail-gaussian-splatting.png
authors:
- user: dylanebert
---

<h1>Introduction to 3D Gaussian Splatting</h1>

<!-- {blog_metadata} -->
<!-- {authors} -->

3D Gaussian Splatting is a rasterization technique described in [3D Gaussian Splatting for Real-Time Radiance Field Rendering](https://huggingface.co/papers/2308.04079) that allows real-time rendering of photorealistic scenes learned from small samples of images. This article will break down how it works and what it means for the future of graphics.

Check out the remote Gaussian Viewer space [here](https://huggingface.co/spaces/dylanebert/gaussian-viewer) or embedded below for an example of a Gaussian Splatting scene.

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.42.0/gradio.js"> </script>
<gradio-app theme_mode="light" space="dylanebert/gaussian-viewer"></gradio-app>

## What is 3D Gaussian Splatting?

3D Gaussian Splatting is, at its core, a rasterization technique. That means:

1. Have data describing the scene.
2. Draw the data on the screen.

This is analogous to triangle rasterization in computer graphics, which is used to draw many triangles on the screen.

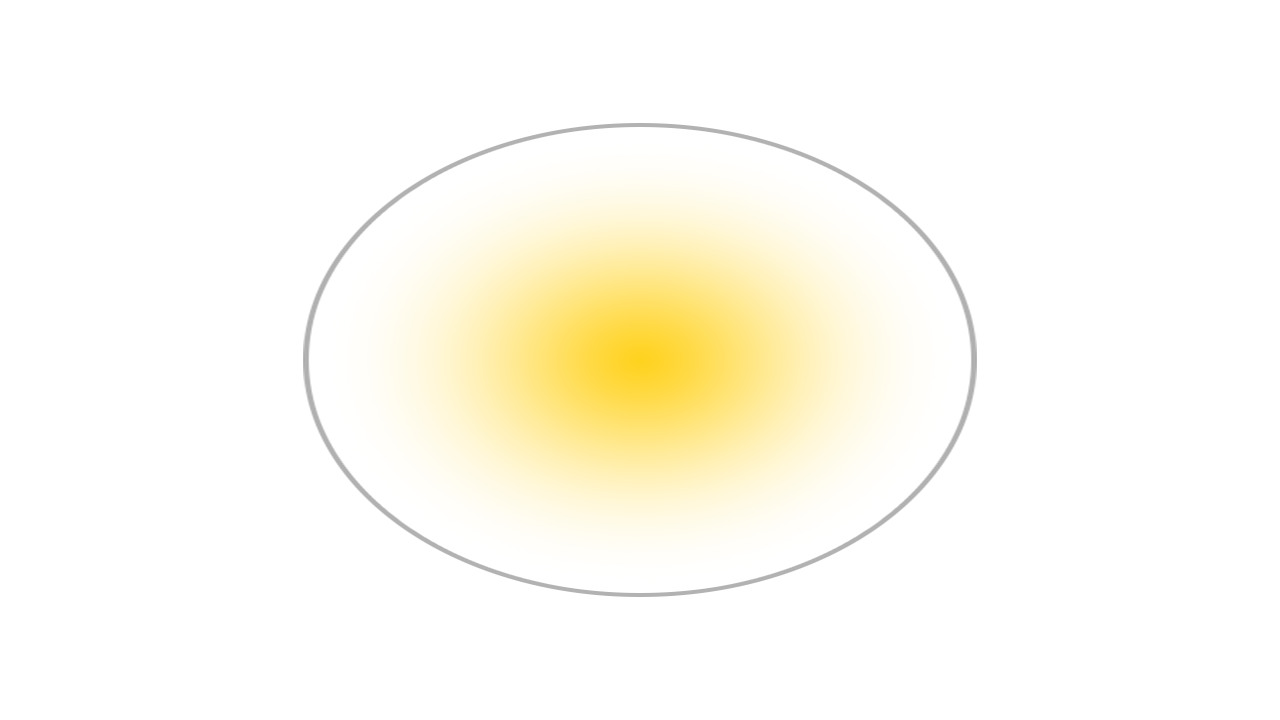

| 32 | +However, instead of triangles, it's gaussians. Here's a single rasterized gaussian, with a border drawn for clarity. |

| 33 | + |

| 34 | + |

| 35 | + |

| 36 | +It's described by the following parameters: |

| 37 | + |

| 38 | +- **Position**: where it's located (XYZ) |

| 39 | +- **Covariance**: how it's stretched/scaled (3x3 matrix) |

| 40 | +- **Color**: what color it is (RGB) |

| 41 | +- **Alpha**: how transparent it is (α) |
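
The sketch below shows the four parameters as a plain data structure. It is a minimal Python sketch and assumes the covariance is stored directly as a 3x3 matrix, as listed above. The `Gaussian` class, `opacity_at`, and `invert_3x3` are hypothetical names chosen for illustration. `opacity_at` estimates how strongly the gaussian contributes at a 3D point: its alpha, scaled by the falloff `exp(-0.5 * dᵀ Σ⁻¹ d)`, where `d` is the offset from the center and `Σ` is the covariance.

```python
import math
from dataclasses import dataclass


def invert_3x3(m):
    """Invert a 3x3 matrix: adjugate (transposed cofactors) divided by the determinant."""
    (a, b, c), (d, e, f), (g, h, i) = m
    cof = [
        [e * i - f * h, -(d * i - f * g), d * h - e * g],
        [-(b * i - c * h), a * i - c * g, -(a * h - b * g)],
        [b * f - c * e, -(a * f - c * d), a * e - b * d],
    ]
    det = a * cof[0][0] + b * cof[0][1] + c * cof[0][2]
    return [[cof[col][row] / det for col in range(3)] for row in range(3)]


@dataclass
class Gaussian:
    position: tuple   # (x, y, z) center
    covariance: list  # 3x3 symmetric positive-definite matrix: stretch/scale
    color: tuple      # (r, g, b)
    alpha: float      # peak opacity in [0, 1]

    def opacity_at(self, point):
        """Alpha scaled by the gaussian falloff exp(-0.5 * d^T Sigma^-1 d)."""
        d = [p - q for p, q in zip(point, self.position)]
        inv = invert_3x3(self.covariance)
        mahalanobis_sq = sum(d[r] * inv[r][c] * d[c] for r in range(3) for c in range(3))
        return self.alpha * math.exp(-0.5 * mahalanobis_sq)


# A red gaussian stretched along x (variance 4 on x, 1 on y and z).
g = Gaussian(position=(0.0, 0.0, 0.0),
             covariance=[[4.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
             color=(1.0, 0.0, 0.0), alpha=0.8)
print(g.opacity_at((0.0, 0.0, 0.0)))  # 0.8: the peak equals alpha
print(g.opacity_at((2.0, 0.0, 0.0)))  # about 0.485: one standard deviation along x
```

Storing the raw matrix keeps the sketch short. The paper instead factors the covariance into a per-axis scale and a rotation (a quaternion), which keeps it a valid covariance matrix while it is being optimized.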

| 42 | + |

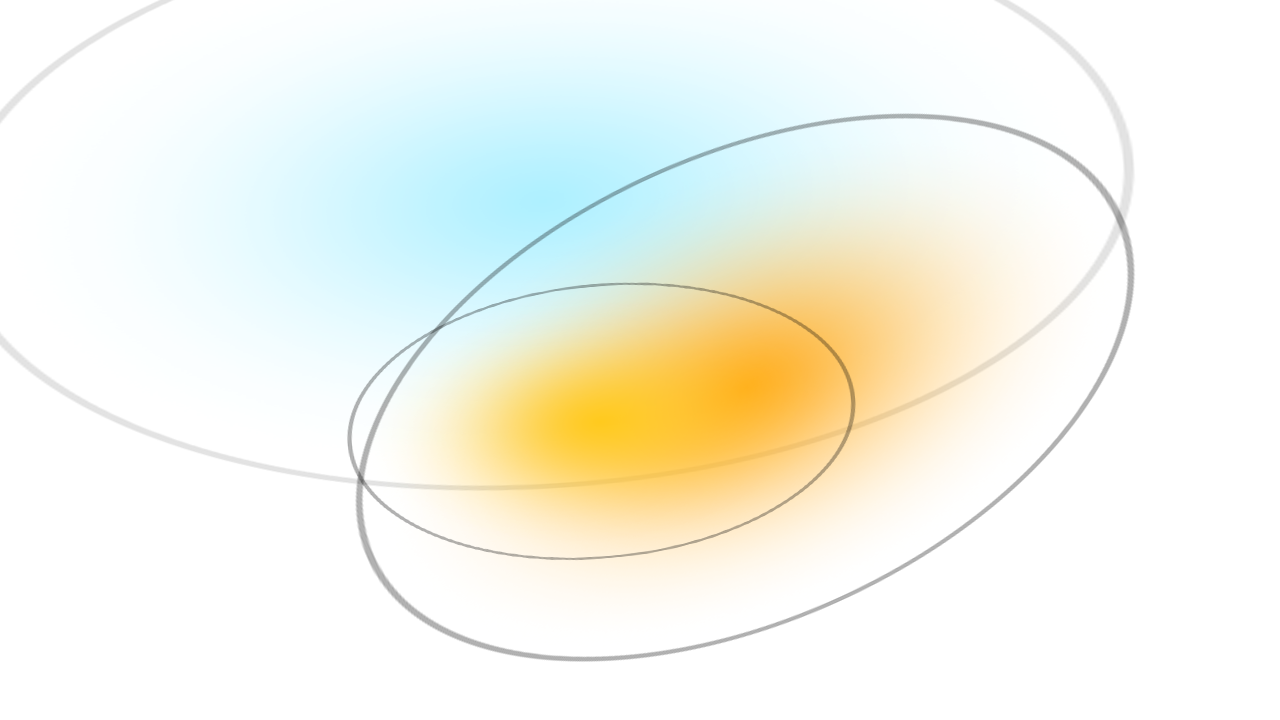

| 43 | +In practice, multiple gaussians are drawn at once. |

| 44 | + |

| 45 | + |

| 46 | + |

| 47 | +That's three gaussians. Now what about 7 million gaussians? |

| 48 | + |

| 49 | + |

| 50 | + |

| 51 | +Here's what it looks like with each gaussian rasterized fully opaque: |

| 52 | + |

| 53 | + |

| 54 | + |

| 55 | +That's a very brief overview of what 3D Gaussian Splatting is. Next, let's walk through the full procedure described in the paper. |

| 56 | + |

| 57 | +## How it works |

| 58 | + |

| 59 | +### 1. Structure from Motion |

| 60 | + |

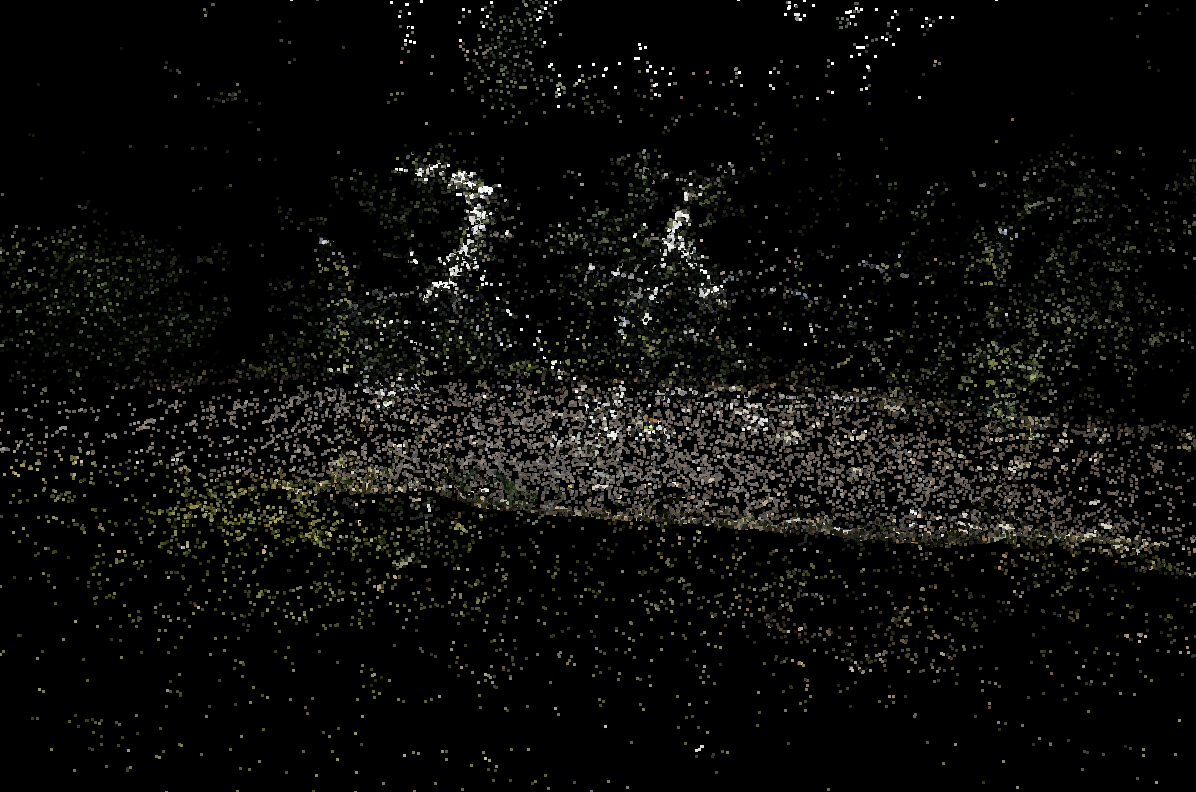

| 61 | +The first step is to use the Structure from Motion (SfM) method to estimate a point cloud from a set of images. This is a method for estimating a 3D point cloud from a set of 2D images. This can be done with the [COLMAP](https://colmap.github.io/) library. |

| 62 | + |

| 63 | + |

| 64 | + |

### 2. Convert to Gaussians

Next, each point is converted to a gaussian. This is already sufficient for rasterization. However, only position and color can be inferred from the SfM data. To learn a representation that yields high-quality results, we need to train it.
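
The conversion can be sketched in a few lines. This minimal sketch assumes the SfM output is a list of `(xyz, rgb)` points. Position and color are copied over directly. The shape and opacity are starting guesses that training will refine. Loosely following the paper's initialization, each gaussian starts isotropic, with a standard deviation equal to the mean distance to its three nearest neighbors. The starting alpha of 0.1 is a placeholder assumption. `points_to_gaussians` is a hypothetical helper name.

```python
import math


def points_to_gaussians(points, k=3, initial_alpha=0.1):
    """Turn SfM points [(xyz, rgb), ...] into gaussian parameter dicts.

    Uses a brute-force O(N^2) neighbor search: fine for a sketch, far too slow
    for large point clouds. Assumes at least two points.
    """
    gaussians = []
    for i, (xyz, rgb) in enumerate(points):
        # distances to every other point, nearest first
        dists = sorted(math.dist(xyz, other) for j, (other, _) in enumerate(points) if j != i)
        nearest = dists[:k]
        sigma = sum(nearest) / len(nearest)  # isotropic standard deviation
        gaussians.append({
            "position": tuple(xyz),  # known from SfM
            "covariance": [[sigma ** 2 if r == c else 0.0 for c in range(3)] for r in range(3)],
            "color": tuple(rgb),     # known from SfM
            "alpha": initial_alpha,  # unknown: placeholder to be learned
        })
    return gaussians


sfm_points = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
              ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
              ((0.0, 1.0, 0.0), (0.0, 0.0, 1.0)),
              ((0.0, 0.0, 1.0), (1.0, 1.0, 1.0))]
gaussians = points_to_gaussians(sfm_points)
print(gaussians[0]["covariance"])  # identity: its three neighbors are all 1 unit away
```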

### 3. Training

The training procedure uses Stochastic Gradient Descent, similar to a neural network, but without the layers. The training steps are:

1. Rasterize the gaussians to an image using differentiable gaussian rasterization (more on that later)
2. Calculate the loss based on the difference between the rasterized image and ground truth image
3. Adjust the gaussian parameters according to the loss
4. Apply automated densification and pruning
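
The loop structure is easier to see in a deliberately tiny analogue, and this sketch is not the paper's method. It differs in several ways:

- It works in 1D.
- It sums gaussians instead of alpha-blending them.
- It learns only each gaussian's color (amplitude); positions and widths stay fixed.
- It uses plain full-batch gradient descent with a hand-derived gradient.
- It skips step 4.

It does mirror steps 1-3: render, measure the loss against a ground truth, and move the parameters downhill. All names are hypothetical.

```python
import math


def falloff(x, mu, sigma):
    """Unnormalized 1D gaussian evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))


def render(colors, centers, sigma, xs):
    """Step 1 analogue: 'rasterize' by summing each gaussian's contribution at every sample."""
    return [sum(c * falloff(x, mu, sigma) for c, mu in zip(colors, centers)) for x in xs]


def train(target, centers, sigma, xs, lr=1.0, steps=200):
    colors = [0.0] * len(centers)  # start from all-black gaussians
    history = []
    n = len(xs)
    for _ in range(steps):
        residual = [p - t for p, t in zip(render(colors, centers, sigma, xs), target)]
        # Step 2 analogue: mean squared error against the ground truth.
        history.append(sum(r * r for r in residual) / n)
        # Step 3 analogue: dLoss/dcolor_k = (2/n) * sum(residual * falloff_k), then step downhill.
        grads = [2.0 / n * sum(r * falloff(x, mu, sigma) for r, x in zip(residual, xs))
                 for mu in centers]
        colors = [c - lr * g for c, g in zip(colors, grads)]
    return colors, history


xs = [i / 100 for i in range(101)]
centers = [0.2, 0.5, 0.8]
true_colors = [0.3, 1.0, 0.6]
target = render(true_colors, centers, 0.1, xs)  # stand-in for a ground-truth image
learned, history = train(target, centers, 0.1, xs)
print([round(c, 3) for c in learned])  # recovers [0.3, 1.0, 0.6]
```

Because color enters this toy render linearly, the loss is a simple quadratic and converges cleanly. Learning positions and covariances as well, through alpha blending, is what makes the real problem harder. It also requires gradients through the entire rasterizer.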

Steps 1-3 are conceptually pretty straightforward. Step 4 involves the following:

- If the gradient is large for a given gaussian (i.e. it's too wrong), split/clone it
  - If the gaussian is small, clone it
  - If the gaussian is large, split it
- If the alpha of a gaussian gets too low, remove it

This procedure helps the gaussians better fit fine-grained details, while pruning unnecessary gaussians.
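
These rules can be sketched as a single pass over the gaussians. This is a simplified illustration, not the reference implementation, and it makes several assumptions:

- Every gaussian is isotropic, with a single `scale` value.
- `grad` stands in for the magnitude of the gaussian's accumulated positional gradient.
- All thresholds are placeholder values.
- A clone is placed at the same position as the original.
- A split replaces the original with two children sampled from its distribution, each shrunk by an assumed factor of 1.6.

`densify_and_prune` is a hypothetical name.

```python
import random


def densify_and_prune(gaussians, grad_threshold=0.0002, size_threshold=0.01,
                      min_alpha=0.005, split_factor=1.6, rng=None):
    """One densification/pruning pass over isotropic gaussians stored as dicts.

    Each dict has "position" (x, y, z), "scale" (one std dev), "alpha", and
    "grad" (magnitude of its accumulated positional gradient).
    """
    rng = rng or random.Random(0)
    result = []
    for g in gaussians:
        if g["grad"] <= grad_threshold:
            result.append(g)  # fits well enough: keep as is
        elif g["scale"] <= size_threshold:
            # small and too wrong: clone (keep the original plus a copy)
            result.extend([g, dict(g)])
        else:
            # large and too wrong: split into two smaller gaussians whose centers
            # are sampled from the original gaussian's distribution
            for _ in range(2):
                child = dict(g)
                child["position"] = tuple(p + rng.gauss(0.0, g["scale"]) for p in g["position"])
                child["scale"] = g["scale"] / split_factor
                result.append(child)
    # prune gaussians that have become nearly transparent
    return [g for g in result if g["alpha"] >= min_alpha]


scene = [
    {"position": (0.0, 0.0, 0.0), "scale": 0.005, "alpha": 0.5, "grad": 0.001},  # small, high grad: clone
    {"position": (1.0, 0.0, 0.0), "scale": 0.1, "alpha": 0.5, "grad": 0.001},    # large, high grad: split
    {"position": (2.0, 0.0, 0.0), "scale": 0.1, "alpha": 0.5, "grad": 0.0},      # low grad: keep
    {"position": (3.0, 0.0, 0.0), "scale": 0.1, "alpha": 0.001, "grad": 0.0},    # nearly transparent: prune
]
print(len(densify_and_prune(scene)))  # 5
```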

### 4. Differentiable Gaussian Rasterization

As mentioned earlier, 3D Gaussian Splatting is a *rasterization* approach, which draws the data to the screen. Crucially, it's also:

1. Fast
2. Differentiable

The original implementation of the rasterizer can be found [here](https://github.com/graphdeco-inria/diff-gaussian-rasterization). The rasterization involves:

1. Project each gaussian into 2D from the camera perspective.
2. Sort the gaussians by depth.
3. For each pixel, iterate over each gaussian front-to-back, blending them together.
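
The three steps can be sketched for a single pixel in plain Python. This is an illustrative, unoptimized version under a few assumptions:

- Gaussians are already in camera space, with a pinhole camera at the origin looking down +z. The world-to-camera rotation is omitted.
- The 3D covariance is projected with the local linear approximation `Σ' = J Σ Jᵀ`, where `J` is the Jacobian of the perspective projection at the gaussian's center.

`project`, `falloff`, and `shade_pixel` are hypothetical names. The reference CUDA rasterizer works on many pixels in parallel and adds optimizations such as the tile-based sorting described in the paper.

```python
import math


def project(mean_cam, cov_cam, focal):
    """Project a camera-space 3D gaussian onto the image plane.

    The perspective projection is linearized around the gaussian's center
    with its Jacobian J, giving Sigma_2d = J Sigma J^T.
    """
    x, y, z = mean_cam
    mean_2d = (focal * x / z, focal * y / z)
    J = [[focal / z, 0.0, -focal * x / z ** 2],
         [0.0, focal / z, -focal * y / z ** 2]]
    cov_2d = [[sum(J[r][i] * cov_cam[i][j] * J[c][j] for i in range(3) for j in range(3))
               for c in range(2)] for r in range(2)]
    return mean_2d, cov_2d


def falloff(pixel, mean_2d, cov_2d):
    """exp(-0.5 * d^T Sigma^-1 d) for a 2x2 covariance, with d = pixel - mean."""
    (a, b), (c, d) = cov_2d
    det = a * d - b * c
    dx, dy = pixel[0] - mean_2d[0], pixel[1] - mean_2d[1]
    # inverse of [[a, b], [c, d]] is [[d, -b], [-c, a]] / det
    return math.exp(-0.5 * (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det)


def shade_pixel(pixel, gaussians, focal, background=(0.0, 0.0, 0.0)):
    # 1. Project each gaussian in front of the camera into 2D.
    splats = []
    for g in gaussians:
        depth = g["position"][2]
        if depth <= 0:
            continue  # behind the camera
        mean_2d, cov_2d = project(g["position"], g["covariance"], focal)
        splats.append((depth, mean_2d, cov_2d, g["color"], g["alpha"]))
    # 2. Sort by depth, nearest first.
    splats.sort(key=lambda s: s[0])
    # 3. Blend front to back, weighting each splat by the light still passing through.
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for _, mean_2d, cov_2d, rgb, alpha in splats:
        a = alpha * falloff(pixel, mean_2d, cov_2d)
        for ch in range(3):
            color[ch] += transmittance * a * rgb[ch]
        transmittance *= 1.0 - a
        if transmittance < 1e-4:
            break  # pixel is effectively opaque; farther splats can't show through
    return tuple(col + transmittance * bg for col, bg in zip(color, background))


small_cov = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 0.01]]
scene = [
    {"position": (0.0, 0.0, 2.0), "covariance": small_cov, "color": (0.0, 0.0, 1.0), "alpha": 0.5},  # far, blue
    {"position": (0.0, 0.0, 1.0), "covariance": small_cov, "color": (1.0, 0.0, 0.0), "alpha": 0.5},  # near, red
]
print(shade_pixel((0.0, 0.0), scene, focal=100.0))  # (0.5, 0.0, 0.25): red in front, blue dimmed behind it
```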

Additional optimizations are described in [the paper](https://huggingface.co/papers/2308.04079).

It's also essential that the rasterizer is differentiable, so that it can be trained with stochastic gradient descent. However, this only matters for training; the trained gaussians can also be rendered with a non-differentiable approach.
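
Here, "differentiable" means the rendered color has well-defined gradients with respect to each gaussian's parameters. The sketch below checks this for one piece of the pipeline. It assumes grayscale colors and per-gaussian alphas that already include the 2D falloff. It derives the gradient of the front-to-back blend with respect to each alpha by hand, then compares the result with a finite-difference estimate. By the product rule, raising `a_k` adds `c_k * T_k` directly, but it also dims every splat behind gaussian `k`. All function names are hypothetical.

```python
def composite(colors, alphas):
    """Front-to-back blend of one channel: C = sum_i c_i * a_i * T_i, T_i = prod_{j<i} (1 - a_j)."""
    out, transmittance = 0.0, 1.0
    for c, a in zip(colors, alphas):
        out += c * a * transmittance
        transmittance *= 1.0 - a
    return out


def composite_grad_alphas(colors, alphas):
    """Analytic dC/da_k = c_k * T_k - (sum_{i>k} c_i * a_i * T_i) / (1 - a_k); needs a_k < 1."""
    n = len(alphas)
    T = [1.0] * n
    for i in range(1, n):
        T[i] = T[i - 1] * (1.0 - alphas[i - 1])
    contrib = [colors[i] * alphas[i] * T[i] for i in range(n)]
    grads = []
    behind = sum(contrib)
    for k in range(n):
        behind -= contrib[k]  # now the summed contribution of splats i > k
        grads.append(colors[k] * T[k] - behind / (1.0 - alphas[k]))
    return grads


def finite_difference(colors, alphas, eps=1e-6):
    """Central-difference estimate of dC/da_k, used to check the analytic gradient."""
    grads = []
    for k in range(len(alphas)):
        up, down = list(alphas), list(alphas)
        up[k] += eps
        down[k] -= eps
        grads.append((composite(colors, up) - composite(colors, down)) / (2 * eps))
    return grads


colors = [0.9, 0.2, 0.6]  # grayscale intensity per splat, sorted front to back
alphas = [0.3, 0.5, 0.4]
analytic = composite_grad_alphas(colors, alphas)
numeric = finite_difference(colors, alphas)
print(max(abs(a - b) for a, b in zip(analytic, numeric)))  # tiny: the two agree
```

In a full rasterizer, the same chain rule continues through the falloff, the 2D projection, and the covariance, back to each gaussian's stored parameters.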

## Who cares?

Why has there been so much attention on 3D Gaussian Splatting? The obvious answer is that the results speak for themselves: it's high-quality scenes in real time. However, there may be more to the story.

There are many unknowns as to what else can be done with Gaussian Splatting. Can they be animated? The upcoming paper [Dynamic 3D Gaussians: Tracking by Persistent Dynamic View Synthesis](https://arxiv.org/pdf/2308.09713) suggests that they can. Other questions remain open as well. Can they do reflections? Can they be modeled without training on reference images?

Finally, there is growing research interest in [Embodied AI](https://ieeexplore.ieee.org/iel7/7433297/9741092/09687596.pdf). This is an area of AI research where state-of-the-art performance is still orders of magnitude below human performance, with much of the challenge being in representing 3D space. Given that 3D Gaussian Splatting yields a very dense representation of 3D space, what might the implications be for Embodied AI research?

These questions draw attention to the method. It remains to be seen what the actual impact will be.

## The future of graphics

So what does this mean for the future of graphics? Well, let's break it up into pros/cons:

**Pros**
1. High-quality, photorealistic scenes
2. Fast, real-time rasterization
3. Relatively fast to train

**Cons**
1. High VRAM usage (4GB to view, 12GB to train)
2. Large disk size (1GB+ for a scene)
3. Incompatible with existing rendering pipelines
4. Static (for now)

So far, the original CUDA implementation has not been adapted to production rendering pipelines, like Vulkan, DirectX, WebGPU, etc., so it's yet to be seen what the impact will be.

There have already been the following adaptations:
1. [Remote viewer](https://huggingface.co/spaces/dylanebert/gaussian-viewer)
2. [WebGPU viewer](https://github.com/cvlab-epfl/gaussian-splatting-web)
3. [WebGL viewer](https://huggingface.co/spaces/cakewalk/splat)
4. [Unity viewer](https://github.com/aras-p/UnityGaussianSplatting)
5. [Optimized WebGL viewer](https://gsplat.tech/)

These rely either on remote streaming (1) or a traditional quad-based rasterization approach (2-5). While a quad-based approach is compatible with decades of graphics technologies, it may result in lower quality/performance. However, [viewer #5](https://gsplat.tech/) demonstrates that optimization tricks can result in high quality/performance, despite a quad-based approach.

So will we see 3D Gaussian Splatting fully reimplemented in a production environment? The answer is *probably yes*. The primary bottleneck is sorting millions of gaussians, which is done efficiently in the original implementation using [CUB device radix sort](https://nvlabs.github.io/cub/structcub_1_1_device_radix_sort.html), a highly optimized sort only available in CUDA. However, with enough effort, it's certainly possible to achieve this level of performance in other rendering pipelines.
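
The sketch below illustrates why radix sort suits this problem, using a sequential, least-significant-digit radix sort on depth values in Python. It relies on a property of IEEE 754 floats: for non-negative values, reading the float32 bits as an unsigned 32-bit integer preserves their order. Depths can therefore be sorted as plain integer keys, 8 bits per pass. A GPU radix sort such as CUB's parallelizes each pass, and this sketch only shows the underlying idea. It assumes all depths are non-negative, meaning gaussians behind the camera have already been culled. `depth_key` and `radix_sort_by_depth` are hypothetical names.

```python
import struct


def depth_key(depth):
    """Bit pattern of a non-negative float32, read as an unsigned 32-bit int.

    For non-negative IEEE 754 floats, larger values have larger bit patterns,
    so integer order matches float order.
    """
    return struct.unpack("<I", struct.pack("<f", depth))[0]


def radix_sort_by_depth(depths, bits=8):
    """Return indices ordering `depths` nearest-first, via LSD radix sort."""
    keys = [depth_key(d) for d in depths]
    order = list(range(len(depths)))
    mask = (1 << bits) - 1
    for shift in range(0, 32, bits):  # least significant digit first
        buckets = [[] for _ in range(1 << bits)]
        for idx in order:  # stable: keeps the order produced by the previous pass
            buckets[(keys[idx] >> shift) & mask].append(idx)
        order = [idx for bucket in buckets for idx in bucket]
    return order


depths = [3.5, 0.25, 10.0, 1.0, 0.25]
print(radix_sort_by_depth(depths))  # [1, 4, 3, 0, 2]
```

Each pass is a simple bucket scatter with no comparisons. On a GPU, the key-to-bucket histogram and the scatter can be computed in parallel, which makes radix sort a good fit for sorting millions of gaussians every frame.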

If you have any questions or would like to get involved, join the [Hugging Face Discord](https://hf.co/join/discord)!
