
| 1 | +--- |

| 2 | +title: "Efficient and Controllable Text-to-Image Generation on SDXL with T2I-Adapter" |

| 3 | +thumbnail: /blog/assets/t2i-sdxl-adapters/thumbnail.png |

| 4 | +authors: |

| 5 | +- user: Adapter |

| 6 | + guest: true |

| 7 | +- user: valhalla |

| 8 | +- user: sayakpaul |

| 9 | +- user: Xintao |

| 10 | + guest: true |

| 11 | +- user: hysts |

| 12 | +translators: |

| 13 | +- user: MatrixYao |

| 14 | +- user: zhongdongy |

| 15 | + proofreader: true |

| 16 | +--- |

| 17 | + |

| 18 | +# Efficient and Controllable Text-to-Image Generation on SDXL with T2I-Adapter |

| 19 | + |

| 20 | +<!-- {blog_metadata} --> |

| 21 | +<!-- {authors} --> |

| 22 | + |

| 23 | +<p align="center"> |

| 24 | + <img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/t2i-adapters-sdxl/hf_tencent.png" height=180/> |

| 25 | +</p> |

| 26 | + |

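| 27 | +[T2I-Adapter](https://huggingface.co/papers/2302.08453) is an efficient plug-and-play model that provides extra guidance to a frozen, pre-trained large text-to-image model. It combines the internal knowledge of the T2I model with external control signals. Different adapters can be trained for different conditions to achieve rich control and editing effects. |

| 28 | + |

| 29 | +The contemporaneous [ControlNet](https://hf.co/papers/2302.05543) offers similar functionality and is widely used. However, it is **computationally expensive** to run, because both the ControlNet and the UNet have to run at every denoising step of the reverse diffusion process. In addition, ControlNet relies on a copy of the UNet encoder as part of the control model, which matters for quality but further increases the parameter count. As a result, the size of the ControlNet becomes the bottleneck for generation speed (the larger the model, the slower the generation). |

| 30 | + |

| 31 | +T2I-Adapters have a clear advantage over ControlNets in this respect. A T2I-Adapter is smaller and, unlike a ControlNet, it only needs to run once for the entire denoising process. |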
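
To make the difference concrete, the sketch below just counts how many times the control model has to be evaluated for a given number of denoising steps. It is a simplified illustration based only on the description above (a ControlNet runs at every step, a T2I-Adapter runs once); the function names are hypothetical and nothing here calls `diffusers`.

```python
def controlnet_forward_passes(num_inference_steps: int) -> int:
    """A ControlNet is evaluated alongside the UNet at every denoising step."""
    return num_inference_steps


def t2i_adapter_forward_passes(num_inference_steps: int) -> int:
    """A T2I-Adapter is evaluated once and its features are reused afterwards."""
    return 1 if num_inference_steps > 0 else 0


steps = 30  # same step count as the generation example later in this post
print("ControlNet passes: ", controlnet_forward_passes(steps))
print("T2I-Adapter passes:", t2i_adapter_forward_passes(steps))
```

The UNet itself still runs at every step in both cases; only the cost of the control model changes.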

| 32 | + |

| 33 | +| **Model** | **Parameters** | **Storage (fp16)** | |

| 34 | +| --- | --- | --- | |

| 35 | +| [ControlNet-SDXL](https://huggingface.co/diffusers/controlnet-canny-sdxl-1.0) | 1251 M | 2.5 GB | |

| 36 | +| [ControlLoRA](https://huggingface.co/stabilityai/control-lora) (rank = 128) | 197.78 M (84.19% fewer parameters) | 396 MB (84.53% less storage) | |

| 37 | +| [T2I-Adapter-SDXL](https://huggingface.co/TencentARC/t2i-adapter-canny-sdxl-1.0) | 79 M (**_93.69% fewer parameters_**) | 158 MB (**_94% less storage_**) | |
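
The figures in the table can be reproduced with a few lines of arithmetic. The sketch below assumes that an fp16 checkpoint needs about 2 bytes per parameter and takes the parameter counts directly from the table; the helper names are hypothetical.

```python
BYTES_PER_FP16_PARAM = 2  # fp16 stores each parameter in 2 bytes

# Parameter counts in millions, copied from the table above.
params_m = {
    "ControlNet-SDXL": 1251.0,
    "ControlLoRA (rank = 128)": 197.78,
    "T2I-Adapter-SDXL": 79.0,
}


def fp16_storage_mb(params_in_millions: float) -> float:
    """Approximate checkpoint size in MB: millions of parameters x 2 bytes."""
    return params_in_millions * BYTES_PER_FP16_PARAM


def reduction_percent(value: float, baseline: float) -> float:
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return (1.0 - value / baseline) * 100.0


baseline = params_m["ControlNet-SDXL"]
for name, n in params_m.items():
    print(
        f"{name}: ~{fp16_storage_mb(n):.0f} MB in fp16, "
        f"{reduction_percent(n, baseline):.2f}% fewer parameters"
    )
```

The storage percentages in the table appear to be computed against 2.5 GB taken as 2560 MB (an assumption on our part): `reduction_percent(396, 2.5 * 1024)` gives about 84.53.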

| 38 | + |

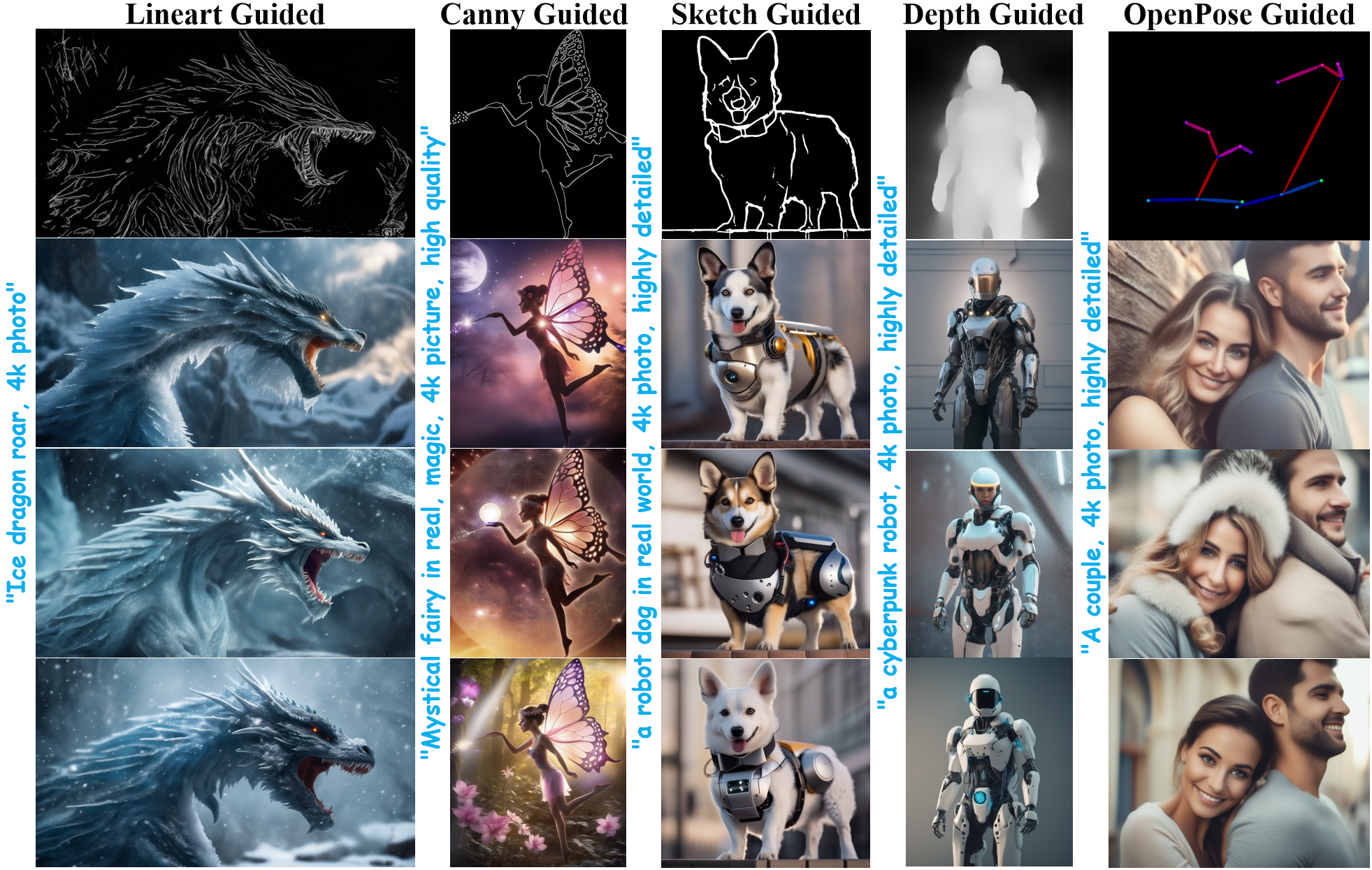

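| 39 | +Over the past few weeks, the Diffusers team and the T2I-Adapter authors have been collaborating closely to add T2I-Adapter support for [Stable Diffusion XL (SDXL)](https://huggingface.co/papers/2307.01952) to the [`diffusers`](https://github.com/huggingface/diffusers) library. In this post, we share our findings from training T2I-Adapters on SDXL from scratch, some appealing results, and T2I-Adapter checkpoints for various conditions (sketch, canny, lineart, depth, and OpenPose)! |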

| 40 | + |

| 41 | + |

| 42 | + |

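| 43 | +Compared with previous versions of T2I-Adapter (SD-1.4/1.5), [T2I-Adapter-SDXL](https://github.com/TencentARC/T2I-Adapter) keeps the original recipe. The difference is that a 79M adapter now drives the 2.6B-parameter SDXL model! T2I-Adapter-SDXL retains strong control capabilities while inheriting the high-quality generation of SDXL! |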

| 44 | + |

| 45 | +## Training T2I-Adapter-SDXL with `diffusers` |

| 46 | + |

| 47 | +We built our training script on [this official example](https://github.com/huggingface/diffusers/blob/main/examples/t2i_adapter/README_sdxl.md) provided by `diffusers`. |

| 48 | + |

| 49 | +Most of the T2I-Adapter models mentioned in this post were trained on 3M high-resolution image-text pairs from LAION-Aesthetics V2, with the following settings: |

| 50 | + |

| 51 | +- Training steps: 20000-35000 |

| 52 | +- Batch size: data parallel with a per-GPU batch size of 16, for a total batch size of 128 |

| 53 | +- Learning rate: constant learning rate of 1e-5 |

| 54 | +- Mixed precision: fp16 |
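
The settings above can be collected in a small configuration object, which also makes the batch-size arithmetic explicit. This is only an illustrative sketch: the class and field names are hypothetical (they are not the flags of the official training script), and the post does not state the number of GPUs or whether gradient accumulation was used, so the derived worker count holds only under the stated assumption of no gradient accumulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterTrainingConfig:
    """Hypothetical container for the hyperparameters listed above."""

    max_train_steps: int = 35_000   # the post reports 20000-35000 steps
    per_gpu_batch_size: int = 16
    total_batch_size: int = 128
    learning_rate: float = 1e-5     # kept constant during training
    mixed_precision: str = "fp16"

    def data_parallel_workers(self, gradient_accumulation_steps: int = 1) -> int:
        """Number of data-parallel workers implied by the two batch sizes."""
        per_worker = self.per_gpu_batch_size * gradient_accumulation_steps
        if self.total_batch_size % per_worker != 0:
            raise ValueError("total batch size is not a multiple of the per-worker batch")
        return self.total_batch_size // per_worker


config = AdapterTrainingConfig()
# Assumption: no gradient accumulation, so 128 / 16 workers are implied.
print(config.data_parallel_workers())
```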

| 55 | + |

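| 56 | +We encourage the community to use our scripts to train their own powerful T2I-Adapters and strike a competitive trade-off between speed, memory, and image quality. |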

| 57 | + |

| 58 | +## Using T2I-Adapter-SDXL in `diffusers` |

| 59 | + |

| 60 | +Here we use lineart as the control condition to demonstrate how to use [T2I-Adapter-SDXL](https://github.com/TencentARC/T2I-Adapter/tree/XL). First, install the required dependencies: |

| 61 | + |

```bash
pip install -U git+https://github.com/huggingface/diffusers.git
pip install -U controlnet_aux==0.0.7 # for conditioning models and detectors
pip install transformers accelerate
```

| 67 | + |

| 68 | +Generation with T2I-Adapter-SDXL mainly consists of the following two steps: |

| 69 | + |

| 70 | +1. First, convert the condition image into the required _control image_ format. |

| 71 | +2. Then pass the _control image_ and the _prompt_ to [`StableDiffusionXLAdapterPipeline`](https://github.com/huggingface/diffusers/blob/0ec7a02b6a609a31b442cdf18962d7238c5be25d/src/diffusers/pipelines/t2i_adapter/pipeline_stable_diffusion_xl_adapter.py#L126). |

| 72 | + |

| 73 | +Let's look at a simple example using the [Lineart Adapter](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0). We start by initializing the T2I-Adapter pipeline for SDXL and the lineart detector. |

| 74 | + |

```python
import torch
from controlnet_aux.lineart import LineartDetector
from diffusers import (AutoencoderKL, EulerAncestralDiscreteScheduler,
                       StableDiffusionXLAdapterPipeline, T2IAdapter)
from diffusers.utils import load_image, make_image_grid

# load adapter (fp16 weights)
adapter = T2IAdapter.from_pretrained(
    "TencentARC/t2i-adapter-lineart-sdxl-1.0", torch_dtype=torch.float16, variant="fp16"
).to("cuda")

# load pipeline: SDXL base with an fp16-safe VAE and the Euler ancestral scheduler
model_id = "stabilityai/stable-diffusion-xl-base-1.0"
euler_a = EulerAncestralDiscreteScheduler.from_pretrained(
    model_id, subfolder="scheduler"
)
vae = AutoencoderKL.from_pretrained(
    "madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16
)
pipe = StableDiffusionXLAdapterPipeline.from_pretrained(
    model_id,
    vae=vae,
    adapter=adapter,
    scheduler=euler_a,
    torch_dtype=torch.float16,
    variant="fp16",
).to("cuda")

# load lineart detector
line_detector = LineartDetector.from_pretrained("lllyasviel/Annotators").to("cuda")
```

| 107 | + |

| 108 | +Then load an image and generate its lineart: |

| 109 | + |

```python
url = "https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/org_lin.jpg"
image = load_image(url)
image = line_detector(image, detect_resolution=384, image_resolution=1024)
```

| 115 | + |

| 116 | + |

| 117 | + |

| 118 | +Then generate: |

| 119 | + |

```python
prompt = "Ice dragon roar, 4k photo"
negative_prompt = "anime, cartoon, graphic, text, painting, crayon, graphite, abstract, glitch, deformed, mutated, ugly, disfigured"
gen_images = pipe(
    prompt=prompt,
    negative_prompt=negative_prompt,
    image=image,
    num_inference_steps=30,
    adapter_conditioning_scale=0.8,
    guidance_scale=7.5,
).images[0]
gen_images.save("out_lin.png")
```

| 133 | + |

| 134 | + |

| 135 | + |

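| 136 | +Understanding the following two important arguments helps you adjust the amount of control. |

| 137 | + |

| 138 | +1. `adapter_conditioning_scale` |

| 139 | + |

| 140 | + This argument controls how strongly the control image influences generation. A larger value means stronger control, and vice versa. |

| 141 | + |

| 142 | +2. `adapter_conditioning_factor` |

| 143 | + |

| 144 | + This argument controls how many of the initial generation steps have the adapter applied, as a fraction of the total number of steps. It takes a value between 0 and 1 (default 1). `adapter_conditioning_factor=1` applies the adapter to all steps, while `adapter_conditioning_factor=0.5` applies it only to the first 50% of steps. |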
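
The effect of the two arguments can be illustrated with plain Python. The sketch below is a simplified model of the behaviour described above, not the pipeline's actual code: the helper names are hypothetical, adapter features are stood in for by plain floats, and truncating the step cutoff with `int(...)` is an assumption.

```python
from typing import List


def adapter_active_steps(
    num_inference_steps: int, adapter_conditioning_factor: float = 1.0
) -> List[bool]:
    """For each denoising step, report whether the adapter features are applied."""
    if not 0.0 <= adapter_conditioning_factor <= 1.0:
        raise ValueError("adapter_conditioning_factor must be between 0 and 1")
    # Assumption: the adapter is applied to the first `cutoff` steps only.
    cutoff = int(num_inference_steps * adapter_conditioning_factor)
    return [step < cutoff for step in range(num_inference_steps)]


def scale_adapter_features(
    features: List[float], adapter_conditioning_scale: float = 1.0
) -> List[float]:
    """Scale the adapter features; a larger scale means stronger control."""
    return [value * adapter_conditioning_scale for value in features]


active = adapter_active_steps(num_inference_steps=30, adapter_conditioning_factor=0.5)
print(sum(active), "of", len(active), "steps use the adapter")
print(scale_adapter_features([1.0, 2.0], adapter_conditioning_scale=0.5))
```

In the real pipeline both values are simply passed as keyword arguments to the `pipe(...)` call, as `adapter_conditioning_scale` is in the generation example above.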

| 145 | + |

| 146 | +For more details, see the [official documentation](https://huggingface.co/docs/diffusers/main/en/api/pipelines/stable_diffusion/adapter). |

| 147 | + |

| 148 | +## Try out the demo |

| 149 | + |

| 150 | +You can easily try T2I-Adapter-SDXL in [this Space](https://huggingface.co/spaces/TencentARC/T2I-Adapter-SDXL) or in the playground embedded below: |

| 151 | + |

| 152 | +<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.43.1/gradio.js"></script> |

| 153 | +<gradio-app src="https://tencentarc-t2i-adapter-sdxl.hf.space"></gradio-app> |

| 154 | + |

| 155 | +You can also try [Doodly](https://huggingface.co/spaces/TencentARC/T2I-Adapter-SDXL-Sketch), which is built on the sketch model and turns your doodles into realistic images under text supervision: |

| 156 | + |

| 157 | +<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.43.1/gradio.js"></script> |

| 158 | +<gradio-app src="https://tencentarc-t2i-adapter-sdxl-sketch.hf.space"></gradio-app> |

| 159 | + |

| 160 | +## More results |

| 161 | + |

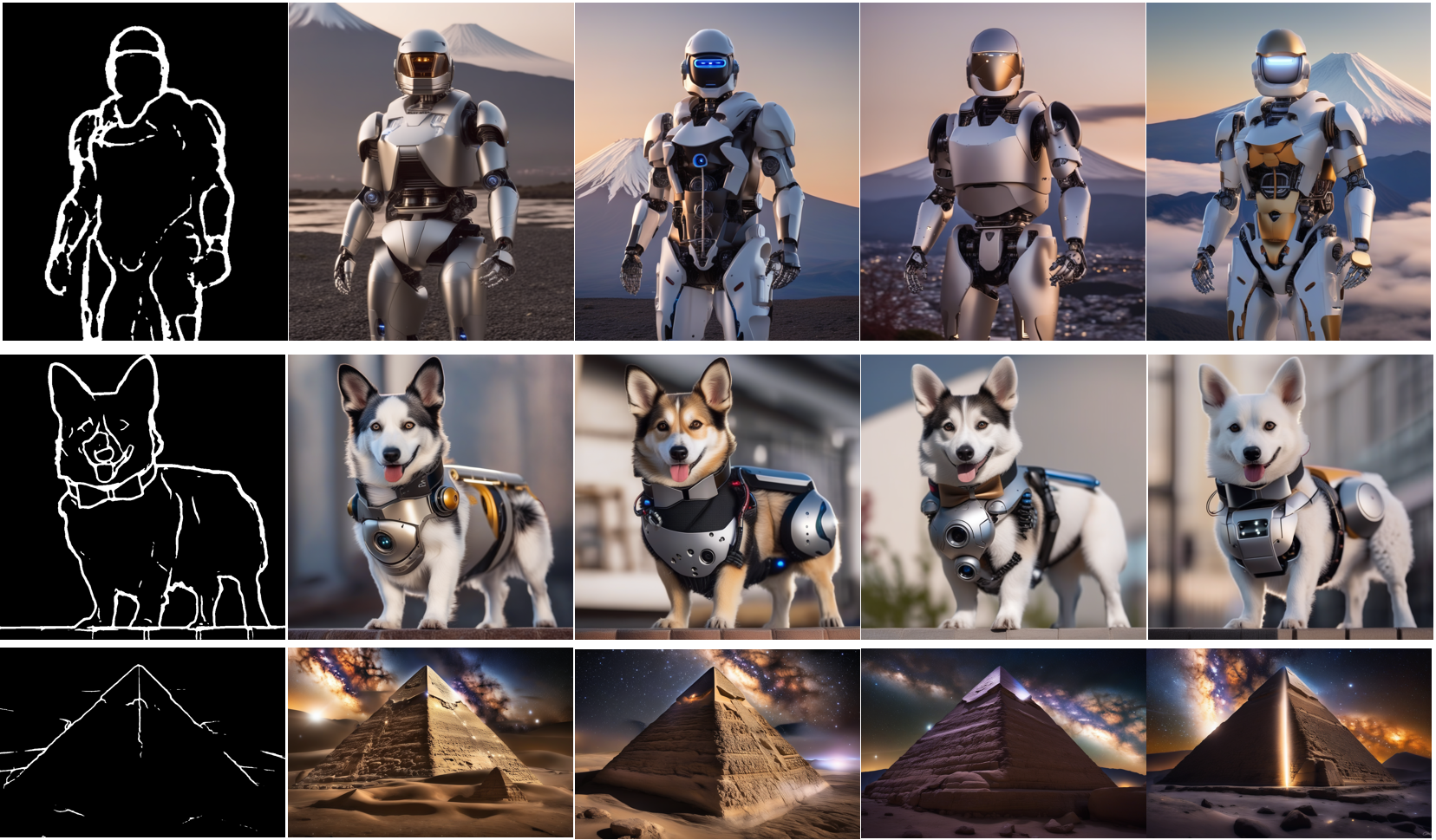

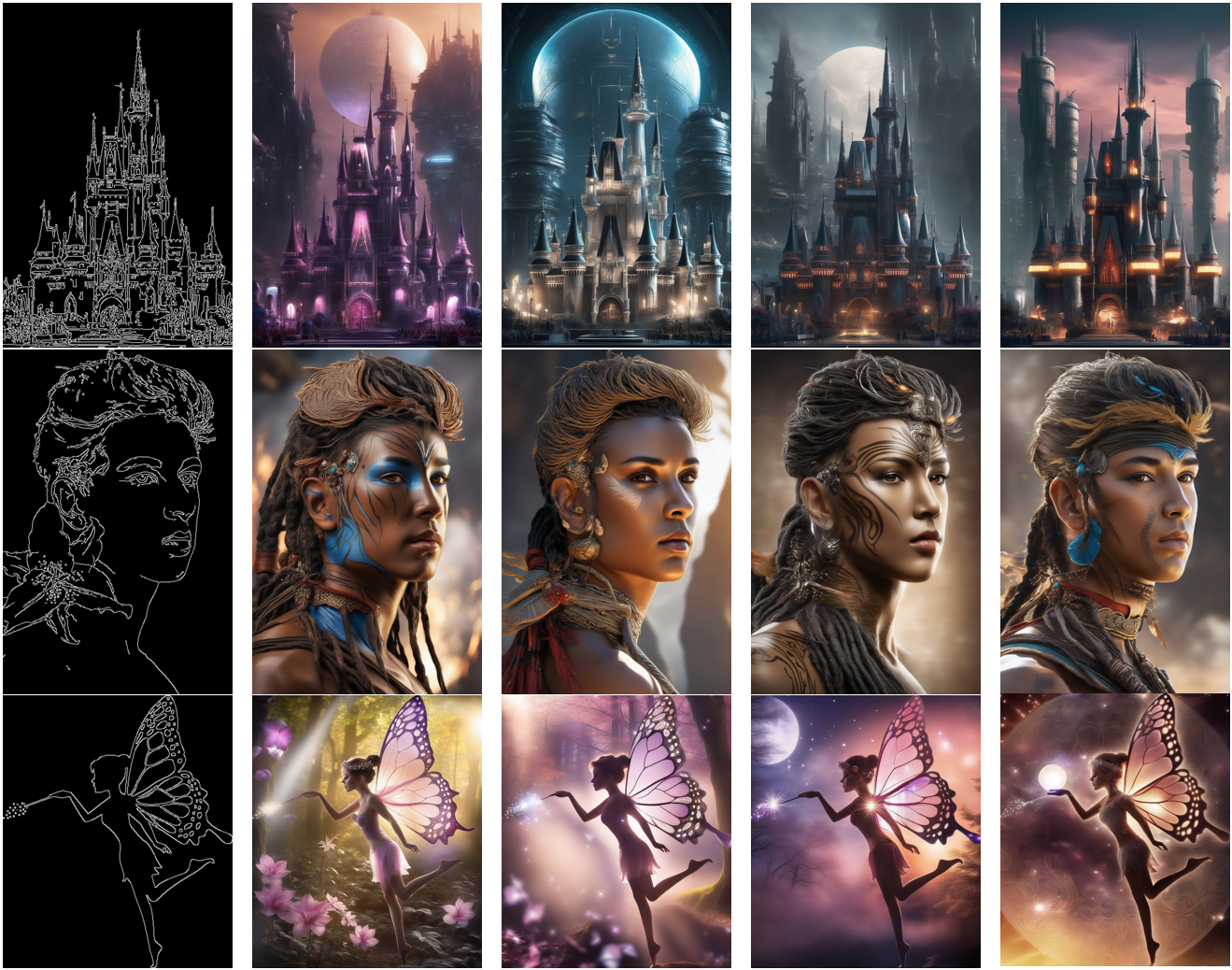

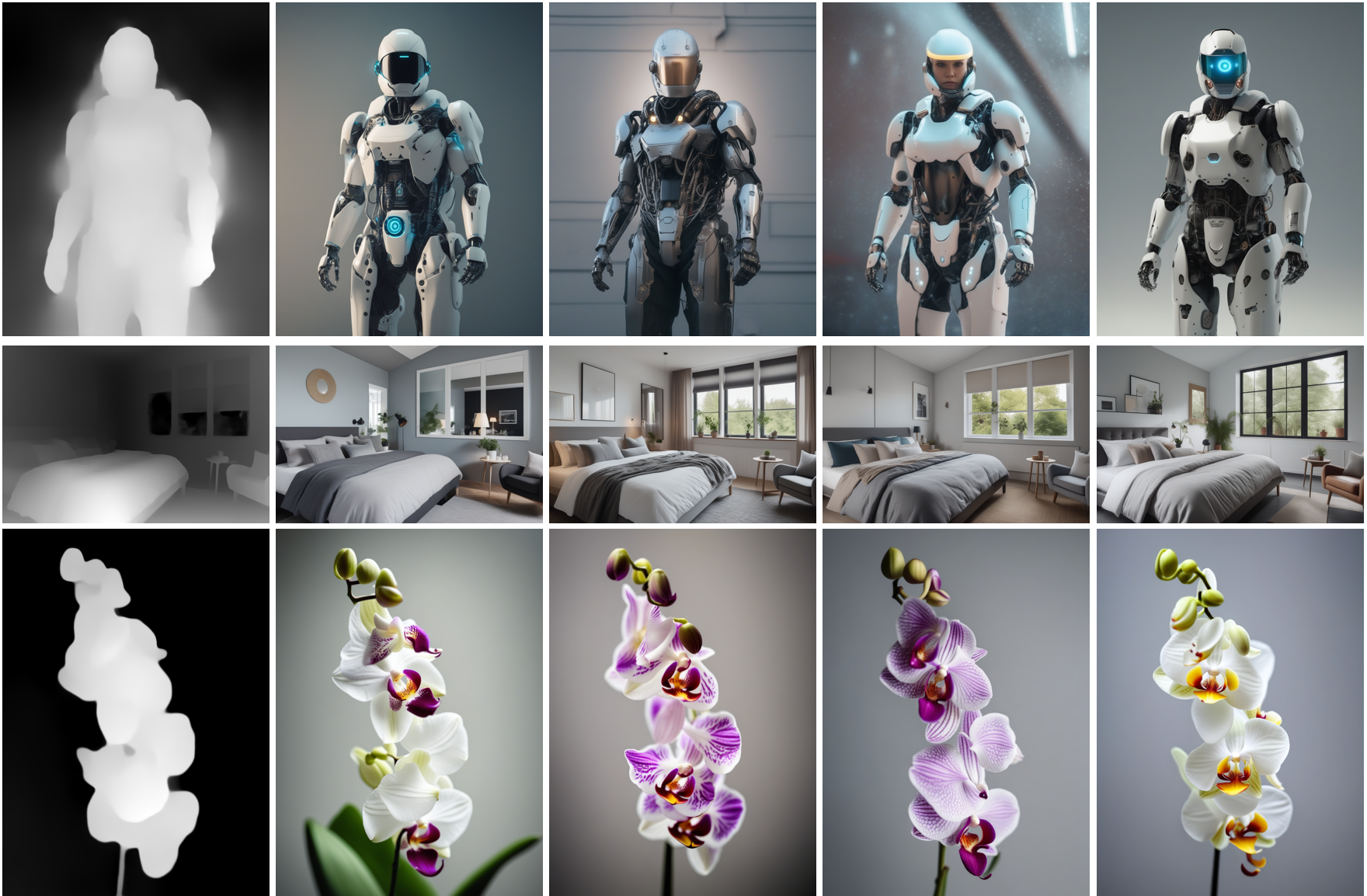

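| 162 | +Below, we show results obtained with different kinds of control images as conditions. We also share links to the corresponding pre-trained checkpoints. For more details on how these models were trained, and for example usage, refer to the respective model cards. |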

| 163 | + |

| 164 | +### Image generation guided by lineart |

| 165 | + |

| 166 | + |

| 167 | +_Model: [`TencentARC/t2i-adapter-lineart-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0)_ |

| 168 | + |

| 169 | +### Image generation guided by sketch |

| 170 | + |

| 171 | + |

| 172 | +_Model: [`TencentARC/t2i-adapter-sketch-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-sketch-sdxl-1.0)_ |

| 173 | + |

| 174 | +### Image generation guided by edge maps from the Canny detector |

| 175 | + |

| 176 | + |

| 177 | +_Model: [`TencentARC/t2i-adapter-canny-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-canny-sdxl-1.0)_ |

| 178 | + |

| 179 | +### Image generation guided by depth maps |

| 180 | + |

| 181 | + |

| 182 | +_Models: [`TencentARC/t2i-adapter-depth-midas-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-depth-midas-sdxl-1.0) and [`TencentARC/t2i-adapter-depth-zoe-sdxl-1.0`](https://huggingface.co/TencentARC/t2i-adapter-depth-zoe-sdxl-1.0)_ |

| 183 | + |

| 184 | +### Image generation guided by OpenPose skeletons |

| 185 | + |

| 186 | + |

| 187 | +_Model: [`TencentARC/t2i-adapter-openpose-sdxl-1.0`](https://hf.co/TencentARC/t2i-adapter-openpose-sdxl-1.0)_ |

| 188 | + |

| 189 | +--- |

| 190 | + |

| 191 | +_Acknowledgements: Many thanks to [William Berman](https://twitter.com/williamLberman) for helping us train the models and sharing his insights._ |

| 192 | + |

| 193 | +--- |

| 194 | + |

| 195 | +>>>> Original English post: <url>https://hf.co/blog/t2i-sdxl-adapters</url> |

| 196 | +>>>> |

| 197 | +>>>> Original authors: Chong Mou, Suraj Patil, Sayak Paul, Xintao Wang, hysts |

| 198 | +>>>> |

| 199 | +>>>> Translator: Matrix Yao (姚伟峰), deep learning engineer at Intel, working on applying transformer-family models to data of various modalities and on training and inference of large-scale models. |

| 200 | +>>>> |

| 201 | +>>>> Proofreading/typesetting: zhongdongy (阿东) |
