@@ -38,16 +38,16 @@ Any repo (model or dataset) stored in a non-default location will display its Re

## Regulatory and legal compliance

-In regulated industries, companies may be required to store data in a specific region.

+Regulated industries often require data storage in specific regions.

-For companies in the EU, that means you can use the Hub to build ML in a GDPR compliant way: with datasets, models and inference endpoints all stored within EU data centers.

+EU companies can use the Hub for ML development in a GDPR-compliant manner, with datasets, models, and inference endpoints stored in EU data centers.

## Performance

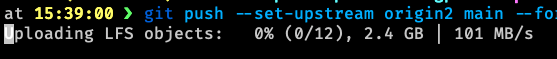

-Storing your models or your datasets closer to your team and infrastructure also means significantly improved performance, for both uploads and downloads.

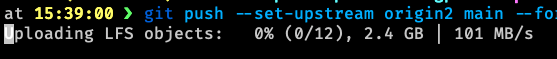

+Storing models and datasets closer to your team and infrastructure significantly improves performance for both uploads and downloads.

-This makes a big difference considering model weights and dataset files are usually very large.

+This impact is substantial given the typically large size of model weights and dataset files.

-As an example, if you are located in Europe and store your repositories in the EU region, you can expect to see ~4-5x faster upload and download speeds vs. if they were stored in the US.

+For example, European users who store repositories in the EU region can expect approximately 4-5x faster upload and download speeds than if the same repositories were stored in the US.

+ @@ -38,16 +38,16 @@ Any repo (model or dataset) stored in a non-default location will display its Re

## Regulatory and legal compliance

-In regulated industries, companies may be required to store data in a specific region.

+Regulated industries often require data storage in specific regions.

-For companies in the EU, that means you can use the Hub to build ML in a GDPR compliant way: with datasets, models and inference endpoints all stored within EU data centers.

+For EU companies, you can use the Hub for ML development in a GDPR-compliant manner, with datasets, models and inference endpoints stored in EU data centers.

## Performance

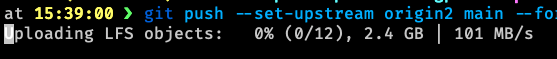

-Storing your models or your datasets closer to your team and infrastructure also means significantly improved performance, for both uploads and downloads.

+Storing models and datasets closer to your team and infrastructure significantly improves performance for both uploads and downloads.

-This makes a big difference considering model weights and dataset files are usually very large.

+This impact is substantial given the typically large size of model weights and dataset files.

-As an example, if you are located in Europe and store your repositories in the EU region, you can expect to see ~4-5x faster upload and download speeds vs. if they were stored in the US.

+For example, European users storing repositories in the EU region can expect approximately 4-5x faster upload and download speeds compared to US storage.
