diff --git a/docs/hub/_toctree.yml b/docs/hub/_toctree.yml

index 611d832f0..70fa10b7d 100644

--- a/docs/hub/_toctree.yml

+++ b/docs/hub/_toctree.yml

@@ -206,7 +206,7 @@

- local: datasets-webdataset

title: WebDataset

- local: datasets-viewer

- title: Dataset Viewer

+ title: Data Studio

sections:

- local: datasets-viewer-configure

title: Configure the Dataset Viewer

diff --git a/docs/hub/datasets-adding.md b/docs/hub/datasets-adding.md

index 2c7994ed3..92e34db8a 100644

--- a/docs/hub/datasets-adding.md

+++ b/docs/hub/datasets-adding.md

@@ -98,9 +98,9 @@ Other formats and structures may not be recognized by the Hub.

For most types of datasets, **Parquet** is the recommended format due to its efficient compression, rich typing, and since a variety of tools supports this format with optimized read and batched operations. Alternatively, CSV or JSON Lines/JSON can be used for tabular data (prefer JSON Lines for nested data). Although easy to parse compared to Parquet, these formats are not recommended for data larger than several GBs. For image and audio datasets, uploading raw files is the most practical for most use cases since it's easy to access individual files. For large scale image and audio datasets streaming, [WebDataset](https://github.com/webdataset/webdataset) should be preferred over raw image and audio files to avoid the overhead of accessing individual files. Though for more general use cases involving analytics, data filtering or metadata parsing, Parquet is the recommended option for large scale image and audio datasets.

-### Dataset Viewer

+### Data Studio

-The [Dataset Viewer](./datasets-viewer) is useful to know how the data actually looks like before you download it.

+The [Data Studio](./datasets-viewer) lets you see what the data actually looks like before you download it.

It is enabled by default for all public datasets. It is also available for private datasets owned by a [PRO user](https://huggingface.co/pricing) or an [Enterprise Hub organization](https://huggingface.co/enterprise).

After uploading your dataset, make sure the Dataset Viewer correctly shows your data, or [Configure the Dataset Viewer](./datasets-viewer-configure).

diff --git a/docs/hub/datasets-viewer-sql-console.md b/docs/hub/datasets-viewer-sql-console.md

index b7463b408..af4afee68 100644

--- a/docs/hub/datasets-viewer-sql-console.md

+++ b/docs/hub/datasets-viewer-sql-console.md

@@ -1,10 +1,10 @@

# SQL Console: Query Hugging Face datasets in your browser

-You can run SQL queries on the dataset in the browser using the SQL Console. The SQL Console is powered by [DuckDB](https://duckdb.org/) WASM and runs entirely in the browser. You can access the SQL Console from the dataset page by clicking on the **SQL Console** badge.

+You can run SQL queries on the dataset in the browser using the SQL Console. The SQL Console is powered by [DuckDB](https://duckdb.org/) WASM and runs entirely in the browser. You can access the SQL Console from the Data Studio.

@@ -16,8 +16,9 @@ Through the SQL Console, you can:

- Run [DuckDB SQL queries](https://duckdb.org/docs/sql/query_syntax/select) on the dataset (_checkout [SQL Snippets](https://huggingface.co/spaces/cfahlgren1/sql-snippets) for useful queries_)

- Share results of the query with others via a link (_check out [this example](https://huggingface.co/datasets/gretelai/synthetic-gsm8k-reflection-405b?sql_console=true&sql=FROM+histogram%28%0A++train%2C%0A++topic%2C%0A++bin_count+%3A%3D+10%0A%29)_)

-- Download the results of the query to a parquet file

+- Download the results of the query to a Parquet or CSV file

- Embed the results of the query in your own webpage using an iframe

+- Query datasets with natural language

You can also use the DuckDB locally through the CLI to query the dataset via the `hf://` protocol. See the DuckDB Datasets documentation for more information. The SQL Console provides a convenient `Copy to DuckDB CLI` button that generates the SQL query for creating views and executing your query in the DuckDB CLI.

@@ -31,48 +32,44 @@ You can also use the DuckDB locally through the CLI to query the dataset via the

The SQL Console makes filtering datasets really easy. For example, if you want to filter the `SkunkworksAI/reasoning-0.01` dataset for instructions and responses with a reasoning length of at least 10, you can use the following query:

-In the query, we can use the `len` function to get the length of the `reasoning_chains` column and the `bar` function to create a bar chart of the reasoning lengths.

-

+Here's the SQL to filter by the length of the reasoning:

```sql

-SELECT len(reasoning_chains) AS reason_len, bar(reason_len, 0, 100), *

+SELECT *

FROM train

-WHERE reason_len > 10

-ORDER BY reason_len DESC

+WHERE LENGTH(reasoning_chains) >= 10;

```

-The [bar](https://duckdb.org/docs/sql/functions/char.html#barx-min-max-width) function is a neat built-in DuckDB function that creates a bar chart of the reasoning lengths.

-

### Histogram

Many dataset authors choose to include statistics about the distribution of the data in the dataset. Using the DuckDB `histogram` function, we can plot a histogram of a column's values.

-For example, to plot a histogram of the `reason_len` column in the `SkunkworksAI/reasoning-0.01` dataset, you can use the following query:

+For example, to plot a histogram of the `Rating` column in the [Lichess/chess-puzzles](https://huggingface.co/datasets/Lichess/chess-puzzles) dataset, you can use the following query:

Learn more about the `histogram` function and parameters here.

```sql

-FROM histogram(train, len(reasoning_chains))

+FROM histogram(train, Rating)

```

### Regex Matching

One of the most powerful features of DuckDB is the deep support for regular expressions. You can use the `regexp` function to match patterns in your data.

- Using the [regexp_matches](https://duckdb.org/docs/sql/functions/char.html#regexp_matchesstring-pattern) function, we can filter the `SkunkworksAI/reasoning-0.01` dataset for instructions that contain markdown code blocks.

+ Using the [regexp_matches](https://duckdb.org/docs/sql/functions/char.html#regexp_matchesstring-pattern) function, we can filter the [GeneralReasoning/GeneralThought-195K](https://huggingface.co/datasets/GeneralReasoning/GeneralThought-195K) dataset for model answers that contain Markdown code blocks.

Learn more about the DuckDB regex functions here.

@@ -80,10 +77,10 @@ One of the most powerful features of DuckDB is the deep support for regular expr

```sql

-SELECT *

+SELECT *

FROM train

-WHERE regexp_matches(instruction, '```[a-z]*\n')

-limit 100

+WHERE regexp_matches(model_answer, '```')

+LIMIT 10;

```

@@ -92,8 +89,8 @@ limit 100

Leakage detection is the process of identifying whether data in a dataset is present in multiple splits, for example, whether the test set is present in the training set.

@@ -128,4 +125,4 @@ SELECT

ELSE 0

END AS overlap_percentage

FROM overlapping_rows, total_unique_rows;

-```

\ No newline at end of file

+```

diff --git a/docs/hub/datasets-viewer.md b/docs/hub/datasets-viewer.md

index 28f8715e1..3e242e968 100644

--- a/docs/hub/datasets-viewer.md

+++ b/docs/hub/datasets-viewer.md

@@ -1,10 +1,10 @@

-# Dataset viewer

+# Data Studio

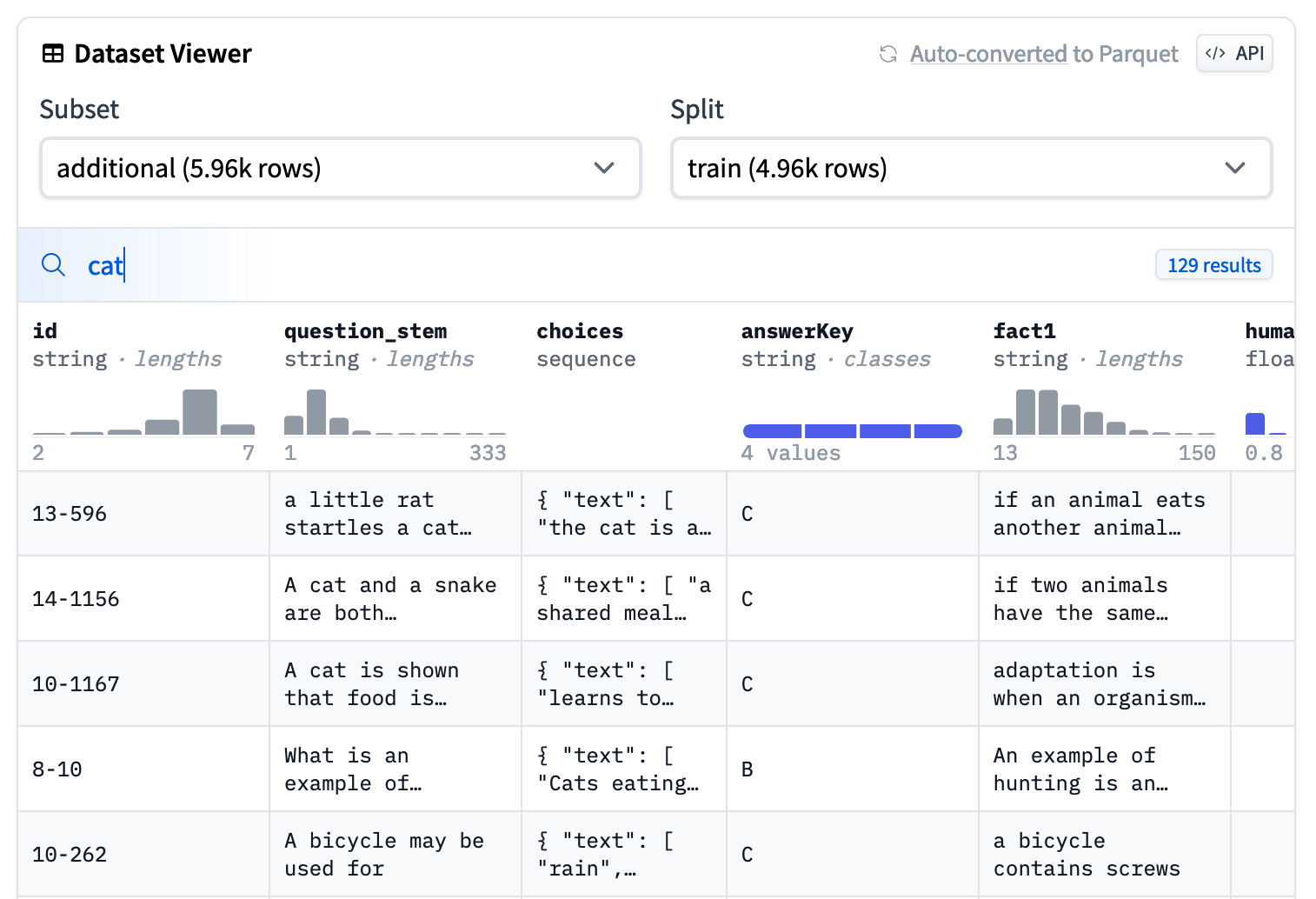

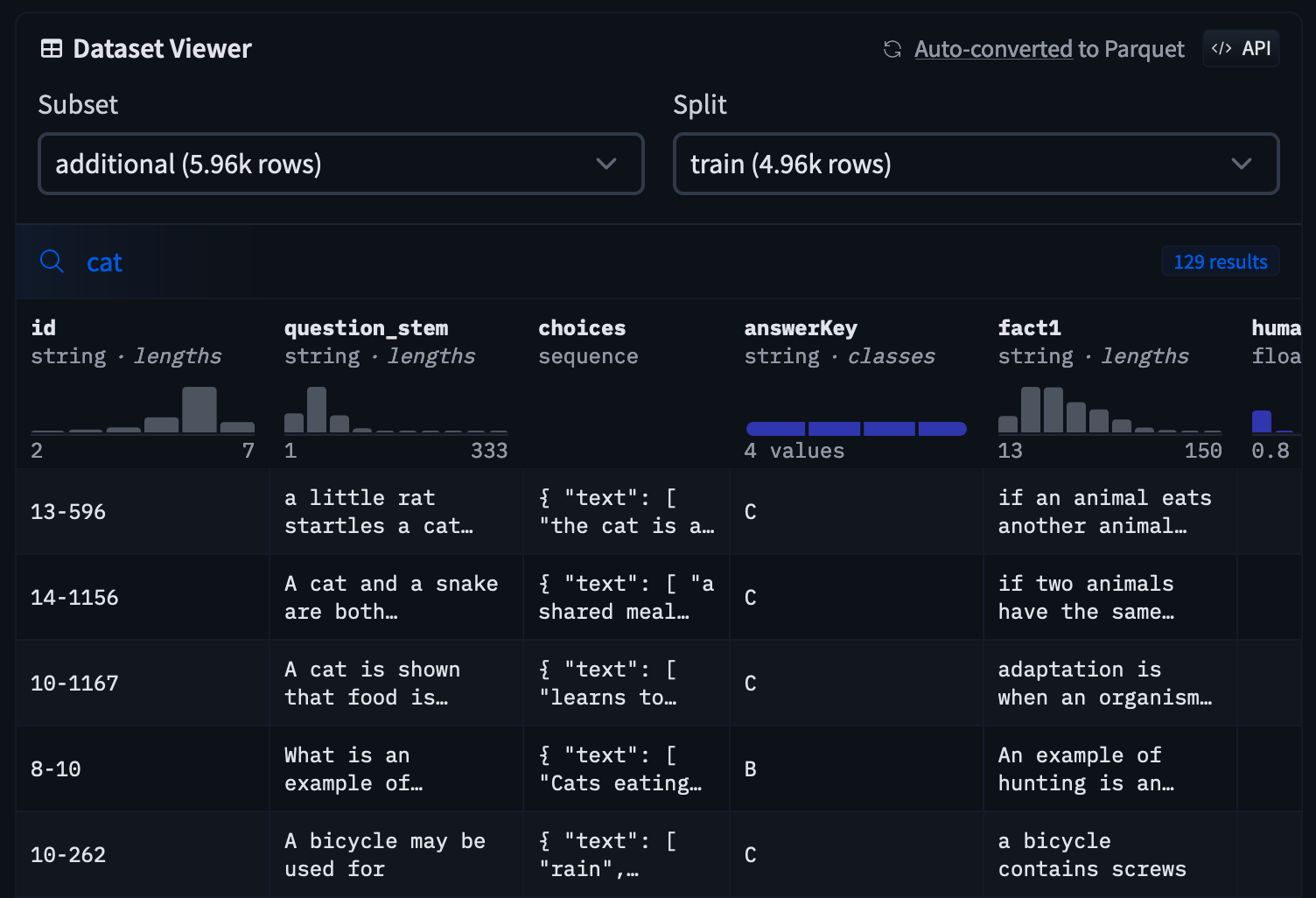

Each dataset page includes a table with the contents of the dataset, arranged by pages of 100 rows. You can navigate between pages using the buttons at the bottom of the table.

## Inspect data distributions

@@ -16,6 +16,11 @@ At the top of the columns you can see the graphs representing the distribution o

If you click on a bar of a histogram from a numerical column, the dataset viewer will filter the data and show only the rows with values that fall in the selected range.

Similarly, if you select one class from a categorical column, it will show only the rows from the selected category.
