diff --git a/units/en/_toctree.yml b/units/en/_toctree.yml

index 4232bbf..37d6152 100644

--- a/units/en/_toctree.yml

+++ b/units/en/_toctree.yml

@@ -43,12 +43,21 @@

- local: unit2/tiny-agents

title: Building Tiny Agents with MCP and the Hugging Face Hub

-- title: "3. Use Case: Advanced MCP Development"

+- title: "3.1. Use Case: Build a Pull Request Agent on the Hub"

sections:

- - local: unit3/introduction

- title: Coming Soon

-

-- title: "Bonus Units"

- sections:

- - local: unit4/introduction

- title: Coming Soon

+ - local: unit3_1/introduction

+ title: Build a Pull Request Agent on the Hugging Face Hub

+ - local: unit3_1/setting-up-the-project

+ title: Setting up the Project

+ - local: unit3_1/creating-the-mcp-server

+ title: Creating the MCP Server

+ - local: unit3_1/quiz1

+ title: Quiz 1 - MCP Server Implementation

+ - local: unit3_1/mcp-client

+ title: MCP Client

+ - local: unit3_1/webhook-listener

+ title: Webhook Listener

+ - local: unit3_1/quiz2

+ title: Quiz 2 - Pull Request Agent Integration

+ - local: unit3_1/conclusion

+ title: Conclusion

\ No newline at end of file

diff --git a/units/en/unit3/introduction.mdx b/units/en/unit3/introduction.mdx

deleted file mode 100644

index d3c9b29..0000000

--- a/units/en/unit3/introduction.mdx

+++ /dev/null

@@ -1,3 +0,0 @@

-# Coming Soon

-

-This will be another use case that dives deeper into the MCP protocol and how to use it in more complex ways.

\ No newline at end of file

diff --git a/units/en/unit3_1/conclusion.mdx b/units/en/unit3_1/conclusion.mdx

new file mode 100644

index 0000000..2c93124

--- /dev/null

+++ b/units/en/unit3_1/conclusion.mdx

@@ -0,0 +1,42 @@

+# Conclusion

+

+Congratulations! 🎉 You've successfully built a Pull Request Agent that automatically enhances Hugging Face model repositories through intelligent tagging using MCP (Model Context Protocol).

+

+The patterns you've learned - webhook processing, MCP tool integration, agent orchestration, and production deployment - are foundational skills for agent and MCP building. These techniques are applicable far beyond model tagging and represent a powerful approach to building intelligent systems that augment human capabilities.

+

+## What we've built

+

+Throughout this unit, you created a complete automation system with four key components:

+

+- **MCP Server** (`mcp_server.py`) - FastMCP-based server with Hub API integration

+- **MCP Client** (Agent) - Intelligent orchestration with language model reasoning

+- **Webhook Listener** (FastAPI) - Real-time event processing from Hugging Face Hub

+- **Testing Interface** (Gradio) - Development and monitoring dashboard

+

+## Next Steps

+

+### Continue Learning

+- Explore advanced MCP patterns and tools

+- Study other automation frameworks and AI system architecture

+- Learn about multi-agent systems and tool composition

+

+### Build More Agents

+- Develop domain-specific automation tools for your own projects

+- Try out other types of webhooks (e.g. model uploads or model downloads)

+- Experiment with different workflows

+

+### Share Your Work

+- Open source your agent for the community

+- Write about your learnings and automation patterns

+- Contribute to the MCP ecosystem

+

+### Scale Your Impact

+- Deploy agents for multiple repositories or organizations

+- Build more sophisticated automation workflows

+- Explore commercial applications of AI automation

+

+

+

+Consider documenting your experience and sharing it with the community! Your journey from learning MCP to building a production agent will help others explore AI automation.

+

+

diff --git a/units/en/unit3_1/creating-the-mcp-server.mdx b/units/en/unit3_1/creating-the-mcp-server.mdx

new file mode 100644

index 0000000..9ab67e0

--- /dev/null

+++ b/units/en/unit3_1/creating-the-mcp-server.mdx

@@ -0,0 +1,336 @@

+# Creating the MCP Server

+

+The MCP server is the heart of our Pull Request Agent. It provides the tools that our agent will use to interact with the Hugging Face Hub, specifically for reading and updating model repository tags. In this section, we'll build the server using FastMCP and the Hugging Face Hub Python SDK.

+

+## Understanding the MCP Server Architecture

+

+Our MCP server provides two essential tools:

+

+| Tool | Description |

+| --- | --- |

+| `get_current_tags` | Retrieves existing tags from a model repository |

+| `add_new_tag` | Adds a new tag to a repository via pull request |

+

+These tools abstract the complexity of Hub API interactions and provide a clean interface for our agent to work with.

+

+

+

+## Complete MCP Server Implementation

+

+Let's create our `mcp_server.py` file step by step. We'll build this incrementally so you understand each component and how they work together.

+

+### 1. Imports and Configuration

+

+First, let's set up all the necessary imports and configuration.

+

+```python

+#!/usr/bin/env python3

+"""

+Simplified MCP Server for HuggingFace Hub Tagging Operations using FastMCP

+"""

+

+import os

+import json

+from fastmcp import FastMCP

+from huggingface_hub import HfApi, model_info, ModelCard, ModelCardData

+from huggingface_hub.utils import HfHubHTTPError

+from dotenv import load_dotenv

+

+load_dotenv()

+```

+

+The imports above give us everything we need to build our MCP server. `FastMCP` provides the server framework, while the `huggingface_hub` imports give us the tools to interact with model repositories.

+

+The `load_dotenv()` call automatically loads environment variables from a `.env` file, making it easy to manage secrets like API tokens during development.

+

+

+

+If you're using uv, you can create a `.env` file in the root of the project and pass it to the server with `uv run --env-file .env`. In that case you won't need `load_dotenv()`.

+

+

+

+Next, we'll configure our server with the necessary credentials and create the FastMCP instance:

+

+```python

+# Configuration

+HF_TOKEN = os.getenv("HF_TOKEN")

+

+# Initialize HF API client

+hf_api = HfApi(token=HF_TOKEN) if HF_TOKEN else None

+

+# Create the FastMCP server

+mcp = FastMCP("hf-tagging-bot")

+```

+

+This configuration block does three important things:

+1. Retrieves the Hugging Face token from environment variables

+2. Creates an authenticated API client (only if a token is available)

+3. Initializes our FastMCP server with a descriptive name

+

+The conditional creation of `hf_api` ensures our server can start even without a token, which is useful for testing the basic structure.

+

+### 2. Get Current Tags Tool

+

+Now let's implement our first tool - `get_current_tags`. This tool retrieves the existing tags from a model repository:

+

+```python

+@mcp.tool()
+def get_current_tags(repo_id: str) -> str:
+    """Get current tags from a HuggingFace model repository"""
+    print(f"🔧 get_current_tags called with repo_id: {repo_id}")
+
+    if not hf_api:
+        error_result = {"error": "HF token not configured"}
+        json_str = json.dumps(error_result)
+        print(f"❌ No HF API token - returning: {json_str}")
+        return json_str

+```

+

+The function starts with validation - checking if we have an authenticated API client. Notice how we return JSON strings instead of Python objects. This is crucial for MCP communication.

+

+

+

+In this project, every MCP tool returns a string rather than a Python object. That's why we use `json.dumps()` to convert our results to JSON strings. A plain-text payload with a predictable shape keeps data exchange between the MCP server and client reliable.
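
As a minimal sketch of this exchange, the snippet below serializes a result the way the tools in this section do and decodes it the way a client might. The `fake_tool` function and its payload are hypothetical stand-ins, not part of the project.

```python
import json


def fake_tool(repo_id: str) -> str:
    """Hypothetical tool: build a result dict and return it as a JSON string."""
    result = {"status": "success", "repo_id": repo_id, "current_tags": ["pytorch"]}
    return json.dumps(result)


# The client side receives plain text and decodes it back into a dict.
payload = fake_tool("user/model")
decoded = json.loads(payload)
print(type(payload).__name__, decoded["current_tags"])
```

Because both sides agree on JSON, the client can branch on `decoded["status"]` without knowing anything about the server's Python types.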

+

+

+

+Let's continue with the main logic of the `get_current_tags` function:

+

+```python

+    try:
+        print(f"📡 Fetching model info for: {repo_id}")
+        info = model_info(repo_id=repo_id, token=HF_TOKEN)
+        current_tags = info.tags if info.tags else []
+        print(f"🏷️ Found {len(current_tags)} tags: {current_tags}")
+
+        result = {
+            "status": "success",
+            "repo_id": repo_id,
+            "current_tags": current_tags,
+            "count": len(current_tags),
+        }
+        json_str = json.dumps(result)
+        print(f"✅ get_current_tags returning: {json_str}")
+        return json_str
+
+    except Exception as e:
+        print(f"❌ Error in get_current_tags: {str(e)}")
+        error_result = {"status": "error", "repo_id": repo_id, "error": str(e)}
+        json_str = json.dumps(error_result)
+        print(f"❌ get_current_tags error returning: {json_str}")
+        return json_str

+```

+

+This implementation follows a clear pattern:

+1. **Fetch data** using the Hugging Face Hub API

+2. **Process the response** to extract tag information

+3. **Structure the result** in a consistent JSON format

+4. **Handle errors gracefully** with detailed error messages
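
The four steps above can be sketched without any Hub access. In this hedged example the fetch step is injected as a function, so `tags_tool`, `failing_fetch`, and the lambda are illustrative names rather than project code.

```python
import json
from typing import Callable, List


def tags_tool(repo_id: str, fetch_tags: Callable[[str], List[str]]) -> str:
    """Fetch, process, structure, and handle errors, returning a JSON string."""
    try:
        tags = fetch_tags(repo_id) or []  # steps 1 and 2: fetch, then normalize
        result = {  # step 3: consistent result shape
            "status": "success",
            "repo_id": repo_id,
            "current_tags": tags,
            "count": len(tags),
        }
    except Exception as e:  # step 4: errors become data, not crashes
        result = {"status": "error", "repo_id": repo_id, "error": str(e)}
    return json.dumps(result)


def failing_fetch(repo_id: str) -> List[str]:
    """Hypothetical fetcher that always fails, to exercise the error path."""
    raise RuntimeError("network unavailable")


ok = json.loads(tags_tool("user/model", lambda _: ["nlp"]))
bad = json.loads(tags_tool("user/model", failing_fetch))
print(ok["count"], bad["status"])
```

Injecting the fetcher is only a testing convenience here; the real tool calls `model_info` directly.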

+

+

+

+The extensive logging might seem like overkill, but it helps with debugging and monitoring when the server is running. Remember, your application will react autonomously to events from the Hub, so you won't be able to see the logs in real time.

+

+

+

+### 3. Add New Tag Tool

+

+Now for the more complex tool - `add_new_tag`. This tool adds a new tag to a repository by creating a pull request. Let's start with the initial setup and validation:

+

+```python

+@mcp.tool()
+def add_new_tag(repo_id: str, new_tag: str) -> str:
+    """Add a new tag to a HuggingFace model repository via PR"""
+    print(f"🔧 add_new_tag called with repo_id: {repo_id}, new_tag: {new_tag}")
+
+    if not hf_api:
+        error_result = {"error": "HF token not configured"}
+        json_str = json.dumps(error_result)
+        print(f"❌ No HF API token - returning: {json_str}")
+        return json_str

+```

+

+Similar to our first tool, we start with validation. Now let's fetch the current repository state to check if the tag already exists:

+

+```python

+    try:
+        # Get current model info and tags
+        print(f"📡 Fetching current model info for: {repo_id}")
+        info = model_info(repo_id=repo_id, token=HF_TOKEN)
+        current_tags = info.tags if info.tags else []
+        print(f"🏷️ Current tags: {current_tags}")
+
+        # Check if tag already exists
+        if new_tag in current_tags:
+            print(f"⚠️ Tag '{new_tag}' already exists in {current_tags}")
+            result = {
+                "status": "already_exists",
+                "repo_id": repo_id,
+                "tag": new_tag,
+                "message": f"Tag '{new_tag}' already exists",
+            }
+            json_str = json.dumps(result)
+            print(f"🏷️ add_new_tag (already exists) returning: {json_str}")
+            return json_str

+```

+

+This section demonstrates an important principle: **validate before acting**. We check if the tag already exists to avoid creating unnecessary pull requests.

+

+

+

+Always check the current state before making changes. This prevents duplicate work and provides better user feedback. It's especially important when creating pull requests, as duplicate PRs can clutter the repository.
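
One way to keep this check easy to test is to separate the decision from the side effect. The sketch below is an assumption about how that could look; `plan_tag_update` is a hypothetical helper and does not appear in `mcp_server.py`.

```python
from typing import List, Tuple


def plan_tag_update(current_tags: List[str], new_tag: str) -> Tuple[str, List[str]]:
    """Decide what to do with a tag without touching the Hub."""
    if new_tag in current_tags:
        # Nothing to change, so no pull request is needed.
        return "already_exists", list(current_tags)
    # A PR is needed; return the tag list the PR should contain.
    return "needs_pr", current_tags + [new_tag]


existing = plan_tag_update(["pytorch"], "pytorch")
missing = plan_tag_update(["pytorch"], "nlp")
print(existing, missing)
```

Only the `"needs_pr"` branch would go on to create a commit, which mirrors the early return in `add_new_tag`.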

+

+

+

+Next, we'll prepare the updated tag list and handle the model card:

+

+```python

+        # Add the new tag to existing tags
+        updated_tags = current_tags + [new_tag]
+        print(f"🆕 Will update tags from {current_tags} to {updated_tags}")
+
+        # Create model card content with updated tags
+        try:
+            # Load existing model card
+            print("📄 Loading existing model card...")
+            card = ModelCard.load(repo_id, token=HF_TOKEN)
+            if not hasattr(card, "data") or card.data is None:
+                card.data = ModelCardData()
+        except HfHubHTTPError:
+            # Create new model card if none exists
+            print("📄 Creating new model card (none exists)")
+            card = ModelCard("")
+            card.data = ModelCardData()
+
+        # Update tags - create new ModelCardData with updated tags
+        card_dict = card.data.to_dict()
+        card_dict["tags"] = updated_tags
+        card.data = ModelCardData(**card_dict)

+```

+

+This section handles model card management. We try to load an existing model card first, but create a new one if none exists. This ensures our tool works with any repository, even if it's empty.

+

+The model card (`README.md`) contains the repository metadata, including tags. By updating the model card data and creating a pull request, we're following the standard Hugging Face workflow for metadata changes.

+

+Now for the pull request creation - the main part of our tool:

+

+```python

+        # Create a pull request with the updated model card
+        pr_title = f"Add '{new_tag}' tag"
+        pr_description = f"""
+## Add tag: {new_tag}
+
+This PR adds the `{new_tag}` tag to the model repository.
+
+**Changes:**
+- Added `{new_tag}` to model tags
+- Updated from {len(current_tags)} to {len(updated_tags)} tags
+
+**Current tags:** {", ".join(current_tags) if current_tags else "None"}
+**New tags:** {", ".join(updated_tags)}
+
+🤖 This is a pull request created by the Hugging Face Hub Tagging Bot.
+"""
+
+        print(f"🚀 Creating PR with title: {pr_title}")

+```

+

+We create a detailed pull request description that explains what's changing and why. This transparency is crucial for repository maintainers who will review the PR.

+

+

+

+Clear, detailed PR descriptions are essential for automated pull requests. They help repository maintainers understand what's happening and make informed decisions about whether to merge the changes.

+

+Also, it's good practice to clearly state that the PR is created by an automated tool. This helps repository maintainers understand how to deal with the PR.

+

+

+

+Finally, we create the commit and pull request:

+

+```python

+        # Create commit with updated model card using CommitOperationAdd
+        from huggingface_hub import CommitOperationAdd
+
+        commit_info = hf_api.create_commit(
+            repo_id=repo_id,
+            operations=[
+                CommitOperationAdd(
+                    path_in_repo="README.md", path_or_fileobj=str(card).encode("utf-8")
+                )
+            ],
+            commit_message=pr_title,
+            commit_description=pr_description,
+            token=HF_TOKEN,
+            create_pr=True,
+        )
+
+        # Extract PR URL from commit info; fall back to its string form
+        # if the attribute is missing or empty
+        pr_url = getattr(commit_info, "pr_url", None) or str(commit_info)
+
+        print(f"✅ PR created successfully! URL: {pr_url}")
+
+        result = {
+            "status": "success",
+            "repo_id": repo_id,
+            "tag": new_tag,
+            "pr_url": pr_url,
+            "previous_tags": current_tags,
+            "new_tags": updated_tags,
+            "message": f"Created PR to add tag '{new_tag}'",
+        }
+        json_str = json.dumps(result)
+        print(f"✅ add_new_tag success returning: {json_str}")
+        return json_str

+```

+

+The `create_commit` function with `create_pr=True` is the key to our automation. It creates a commit with the updated `README.md` file and automatically opens a pull request for review.

+

+Don't forget the error handling for this complex operation:

+

+```python

+    except Exception as e:
+        print(f"❌ Error in add_new_tag: {str(e)}")
+        print(f"❌ Error type: {type(e)}")
+        import traceback
+        print(f"❌ Traceback: {traceback.format_exc()}")
+
+        error_result = {
+            "status": "error",
+            "repo_id": repo_id,
+            "tag": new_tag,
+            "error": str(e),
+        }
+        json_str = json.dumps(error_result)
+        print(f"❌ add_new_tag error returning: {json_str}")
+        return json_str

+```

+

+The comprehensive error handling includes the full traceback, which is invaluable for debugging when things go wrong.

+

+Emojis in log messages might seem silly, but they make scanning logs much faster. 🔧 for function calls, 📡 for API requests, ✅ for success, and ❌ for errors create visual patterns that help you quickly find what you're looking for.

+

+

+

+While building this application, it's easy to accidentally create an infinite loop of PRs. Every call to `create_commit` with `create_pr=True` opens a new PR. Until an earlier PR is merged, the tag is still missing from the repository, so each new event leads to another call, and another PR, again and again...

+

+We've added checks to prevent this, but it's something to be aware of.
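
One possible extra guard, sketched here under stated assumptions, is to remember which (repository, tag) pairs have already produced a PR. The `_requested` set and `should_open_pr` helper are hypothetical, and this version assumes a single long-lived process, so the record is lost on restart.

```python
from typing import Set, Tuple

# In-memory record of (repo_id, tag) pairs that already triggered a PR.
# Assumption: one long-lived process; nothing is persisted.
_requested: Set[Tuple[str, str]] = set()


def should_open_pr(repo_id: str, tag: str) -> bool:
    """Return True only the first time a (repo, tag) pair is seen."""
    key = (repo_id, tag.lower())
    if key in _requested:
        return False
    _requested.add(key)
    return True


first = should_open_pr("user/model", "nlp")
second = should_open_pr("user/model", "NLP")
print(first, second)
```

A production version would more likely check the repository's open pull requests, since those survive restarts.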

+

+

+

+## Next Steps

+

+Now that we have our MCP server implemented with robust tagging tools, we need to:

+

+1. **Create the MCP Client** - Build the interface between our agent and MCP server

+2. **Implement Webhook Handling** - Listen for Hub discussion events

+3. **Integrate Agent Logic** - Connect webhooks with MCP tool calls

+4. **Test the Complete System** - Validate end-to-end functionality

+

+In the next section, we'll create the MCP client that will allow our webhook handler to interact with these tools intelligently.

+

+

+

+The MCP server runs as a separate process from your main application. This isolation provides better error handling and allows the server to be reused by multiple clients or applications.

+

+

\ No newline at end of file

diff --git a/units/en/unit3_1/introduction.mdx b/units/en/unit3_1/introduction.mdx

new file mode 100644

index 0000000..075129d

--- /dev/null

+++ b/units/en/unit3_1/introduction.mdx

@@ -0,0 +1,108 @@

+# Build a Pull Request Agent on the Hugging Face Hub

+

+Welcome to Unit 3 of the MCP Course!

+

+In this unit, we'll build a pull request agent that automatically tags Hugging Face model repositories based on discussions and comments. This real-world application demonstrates how to integrate MCP with webhook listeners and automated workflows.

+

+

+

+This unit showcases a real-world use case in which MCP servers respond to real-time events from the Hugging Face Hub and automatically create pull requests to improve repository metadata.

+

+

+

+## What You'll Learn

+

+In this unit, you will:

+

+- Create an MCP Server that interacts with the Hugging Face Hub API

+- Implement webhook listeners to respond to discussion events

+- Set up automated tagging workflows for model repositories

+- Deploy a complete webhook-driven application to Hugging Face Spaces

+

+By the end of this unit, you'll have a working PR agent that can monitor discussions and automatically improve repository metadata through pull requests.

+

+## Prerequisites

+

+Before proceeding with this unit, make sure you:

+

+- Have completed Units 1 and 2, or have experience with MCP concepts

+- Are comfortable with Python, FastAPI, and webhook concepts

+- Have a basic understanding of Hugging Face Hub workflows and pull requests

+- Have a development environment with:

+ - Python 3.11+

+ - A Hugging Face account with API access

+

+## Our Pull Request Agent Project

+

+We'll build a tagging agent that consists of four main components: the MCP server, webhook listener, agent logic, and deployment infrastructure. The agent will be able to tag model repositories based on discussions and comments. This should save model authors time: they receive ready-to-use PRs instead of having to tag their repositories manually.

+

+

+

+In the diagram above, an MCP server reads and updates model tags, and a webhook listener receives webhooks from the Hugging Face Hub. An agent analyzes discussions and comments and creates PRs to update model tags. The deployment infrastructure hosts the MCP server on Hugging Face Spaces.

+

+### Project Overview

+

+To build this application, we will need the following files:

+

+| File | Purpose | Description |

+|------|---------|-------------|

+| `mcp_server.py` | **Core MCP Server** | FastMCP-based server with tools for reading and updating model tags |

+| `app.py` | **Webhook Listener & Agent** | FastAPI app that receives webhooks, processes discussions, and creates PRs |

+| `requirements.txt` | **Dependencies** | Python packages including FastMCP, FastAPI, and huggingface-hub |

+| `pyproject.toml` | **Project Configuration** | Modern Python packaging with uv dependency management |

+| `Dockerfile` | **Deployment** | Container configuration for Hugging Face Spaces |

+| `env.example` | **Configuration Template** | Required environment variables and secrets |

+| `cleanup.py` | **Utility** | Helper script for development and testing cleanup |

+

+Let's go through each of these files and understand their purpose.

+

+### MCP Server (`mcp_server.py`)

+

+The heart of our application - a FastMCP server that provides tools for:

+- Reading current tags from model repositories

+- Adding new tags via pull requests to the Hub

+- Error handling and validation

+

+This is where you will implement the MCP server and do most of the work for this project. The Gradio app and FastAPI app will be used to test the MCP server and the webhook listener, and they are ready to use.

+

+### Webhook Integration

+

+Following the [Hugging Face Webhooks Guide](https://huggingface.co/docs/hub/webhooks-guide-discussion-bot), our agent:

+- Listens for discussion comment events

+- Validates webhook signatures for security

+- Processes mentions and tag suggestions

+- Creates pull requests automatically

+

+### Agent Functionality

+

+The agent analyzes discussion content to:

+- Extract explicit tag mentions (`tag: pytorch`, `#transformers`)

+- Recognize implicit tags from natural language

+- Validate tags against known ML/AI categories

+- Generate appropriate pull request descriptions

+

+### Deployment & Production

+

+- Containerized deployment to Hugging Face Spaces

+- Environment variable management for secrets

+- Background task processing for webhook responses

+- Gradio interface for testing and monitoring

+

+## Webhook Integration Overview

+

+Our PR agent leverages the same webhook infrastructure used by Hugging Face's discussion bots. Here's how webhooks enable real-time responses:

+

+

+

+The webhook flow works as follows:

+1. **Event Trigger**: A user creates a comment in a model repository discussion

+2. **Webhook Delivery**: Hugging Face sends a POST request to our endpoint

+3. **Authentication**: We validate the webhook secret for security

+4. **Processing**: Our agent analyzes the comment for tag suggestions

+5. **Action**: If relevant tags are found, we create a pull request

+6. **Response**: The webhook returns immediately while PR creation happens in the background
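
Step 3 of the flow above can be sketched with the standard library alone. This example assumes the Hub delivers the secret in an `X-Webhook-Secret` header and that header names arrive lowercased; `is_authorized` is a hypothetical helper, not code from `app.py`.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_secret: str) -> bool:
    """Compare the received secret with the expected one in constant time."""
    if not expected_secret:
        return False  # refuse every request when no secret is configured
    received = headers.get("x-webhook-secret", "")
    return hmac.compare_digest(received.encode(), expected_secret.encode())


accepted = is_authorized({"x-webhook-secret": "s3cret"}, "s3cret")
rejected = is_authorized({"x-webhook-secret": "wrong"}, "s3cret")
print(accepted, rejected)
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many leading characters matched.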

+

+## Let's Get Started!

+

+Ready to build a production-ready PR agent that can automatically improve Hugging Face repositories? Let's begin by setting up the project structure and understanding the MCP server implementation.

+

diff --git a/units/en/unit3_1/mcp-client.mdx b/units/en/unit3_1/mcp-client.mdx

new file mode 100644

index 0000000..dd517a6

--- /dev/null

+++ b/units/en/unit3_1/mcp-client.mdx

@@ -0,0 +1,370 @@

+# MCP Client

+

+Now that we have our MCP server with tagging tools, we need to create a client that can interact with these tools. The MCP client serves as the bridge between our webhook handler and the MCP server, enabling our agent to use the Hub tagging functionality.

+

+For the sake of this project, we'll build both an API and a Gradio app. The API will be used to test the MCP server and the webhook listener, and the Gradio app will be used to test the MCP client with simulated webhook events.

+

+

+

+For educational purposes, we will build the MCP Server and MCP Client in the same repo. In a real-world application, you would likely have a separate repo for the MCP Server and MCP Client. In fact, you might only build one of these components.

+

+

+

+## Understanding the MCP Client Architecture

+

+In our application, the MCP client is integrated into the main FastAPI application (`app.py`). It creates and manages connections to our MCP server, providing a seamless interface for tool execution.

+

+

+

+## Agent-Based MCP Client

+

+We use the `huggingface_hub` Agent class that has built-in MCP support. This provides both language model capabilities and MCP tool integration in a single component.

+

+### 1. Agent Configuration

+

+Let's start by setting up the agent configuration and understanding each component:

+

+```python

+import os
+from typing import Literal, Optional
+
+from huggingface_hub.inference._mcp.agent import Agent

+

+# Configuration

+HF_TOKEN = os.getenv("HF_TOKEN")

+HF_MODEL = os.getenv("HF_MODEL", "microsoft/DialoGPT-medium")

+DEFAULT_PROVIDER: Literal["hf-inference"] = "hf-inference"

+

+# Global agent instance

+agent_instance: Optional[Agent] = None

+```

+

+We start with the necessary imports and configuration. The global `agent_instance` variable ensures we create the agent only once and reuse it across multiple requests. This is important for performance since agent initialization can be expensive.

+

+Now let's implement the function that creates and manages our agent:

+

+```python

+async def get_agent():
+    """Get or create Agent instance"""
+    print("🤖 get_agent() called...")
+    global agent_instance
+    if agent_instance is None and HF_TOKEN:
+        print("🔧 Creating new Agent instance...")
+        print(f"🔑 HF_TOKEN present: {bool(HF_TOKEN)}")
+        print(f"🤖 Model: {HF_MODEL}")
+        print(f"🔗 Provider: {DEFAULT_PROVIDER}")

+```

+

+The function starts by checking if we already have an agent instance. This singleton pattern prevents unnecessary recreations and ensures consistent state.

+

+Let's continue with the agent creation:

+

+```python

+        try:
+            agent_instance = Agent(
+                model=HF_MODEL,
+                provider=DEFAULT_PROVIDER,
+                api_key=HF_TOKEN,
+                servers=[
+                    {
+                        "type": "stdio",
+                        "config": {
+                            "command": "python",
+                            "args": ["mcp_server.py"],
+                            "cwd": ".",
+                            "env": {"HF_TOKEN": HF_TOKEN} if HF_TOKEN else {},
+                        },
+                    }
+                ],
+            )
+            print("✅ Agent instance created successfully")
+            print("🔧 Loading tools...")
+            await agent_instance.load_tools()
+            print("✅ Tools loaded successfully")
+        except Exception as e:
+            print(f"❌ Error creating/loading agent: {str(e)}")
+            agent_instance = None
+
+    # Return the cached agent, or None if it could not be created
+    return agent_instance

+```

+

+This is where the important part happens! Let's break down the Agent configuration:

+

+**Agent Parameters:**

+- `model`: The language model that will reason about tool usage

+- `provider`: How to access the model (Hugging Face Inference Providers)

+- `api_key`: Hugging Face API key

+

+**MCP Server Connection:**

+- `type: "stdio"`: Connect to the MCP server via standard input/output

+- `command: "python"`: Run our MCP server as a Python subprocess

+- `args: ["mcp_server.py"]`: The script file to execute

+- `env`: Pass the HF_TOKEN to the server process

+

+

+

+The `stdio` connection type means the agent starts your MCP server as a subprocess and communicates with it through standard input/output. This is perfect for development and single-machine deployments.

+

+

+

+The `load_tools()` call is crucial - it discovers what tools are available from the MCP server and makes them accessible to the agent's reasoning engine.

+

+This completes our agent management function with proper error handling and logging.

+

+## Tool Discovery and Usage

+

+Once the agent is created and tools are loaded, it can automatically discover and use the MCP tools. This is where the real power of the Agent approach shines.

+

+### Available Tools

+

+The agent discovers our MCP tools automatically:

+- `get_current_tags(repo_id: str)` - Retrieve existing repository tags

+- `add_new_tag(repo_id: str, new_tag: str)` - Add new tag via pull request

+

+The agent doesn't just call these tools blindly - it reasons about when and how to use them based on the prompt you give it.

+

+### Tool Execution Example

+

+Here's how the agent intelligently uses tools:

+

+```python

+# Example of how the agent would use tools
+async def example_tool_usage():
+    agent = await get_agent()
+
+    if agent:
+        # The agent can reason about which tools to use
+        response = await agent.run(
+            "Check the current tags for microsoft/DialoGPT-medium and add the tag 'conversational-ai' if it's not already present"
+        )
+        print(response)

+```

+

+Notice how we give the agent a natural language instruction, and it figures out:

+1. First call `get_current_tags` to see what tags exist

+2. Check if `conversational-ai` is already there

+3. If not, call `add_new_tag` to add it

+4. Provide a summary of what it did

+

+This is much more intelligent than calling tools directly!

+

+## Integration with Webhook Processing

+

+Now let's see how the MCP client integrates into our webhook processing pipeline. This is where everything comes together.

+

+### 1. Tag Extraction and Processing

+

+Here's the main function that processes webhook events and uses our MCP agent:

+

+```python

+from typing import Any, Dict
+
+
+async def process_webhook_comment(webhook_data: Dict[str, Any]):
+    """Process webhook to detect and add tags"""
+    print("🏷️ Starting process_webhook_comment...")
+
+    try:
+        comment_content = webhook_data["comment"]["content"]
+        discussion_title = webhook_data["discussion"]["title"]
+        repo_name = webhook_data["repo"]["name"]
+
+        # Extract potential tags from the comment and discussion title
+        comment_tags = extract_tags_from_text(comment_content)
+        title_tags = extract_tags_from_text(discussion_title)
+        all_tags = list(set(comment_tags + title_tags))
+
+        print(f"🔍 All unique tags: {all_tags}")
+
+        if not all_tags:
+            return ["No recognizable tags found in the discussion."]

+```

+

+This first part extracts and combines tags from both the comment content and discussion title. We use a set to deduplicate any tags that appear in both places.

+

+

+

+Processing both the comment and discussion title increases our chances of catching relevant tags. Users might mention tags in the title like "Missing pytorch tag" or in comments like "This needs #transformers".
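
Deduplicating with `set` does not guarantee any particular order in the result. If a stable order matters, for example for predictable logs, an order-preserving merge is a small change. The `merge_tags` helper below is an illustrative assumption, not part of `app.py`.

```python
from typing import List


def merge_tags(*tag_lists: List[str]) -> List[str]:
    """Merge tag lists, dropping duplicates but keeping first-seen order."""
    merged: List[str] = []
    for tags in tag_lists:
        merged.extend(tags)
    # dict keys are unique and keep insertion order
    return list(dict.fromkeys(merged))


combined = merge_tags(["pytorch", "nlp"], ["nlp", "transformers"])
print(combined)
```

With this helper, tags from the comment always come before tags that appear only in the title.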

+

+

+

+Next, we get our agent and process each tag:

+

+```python

+        # Get agent instance
+        agent = await get_agent()
+        if not agent:
+            return ["Error: Agent not configured (missing HF_TOKEN)"]
+
+        # Process each tag
+        result_messages = []
+        for tag in all_tags:
+            try:
+                # Use agent to process the tag
+                prompt = f"""
+                For the repository '{repo_name}', check if the tag '{tag}' already exists.
+                If it doesn't exist, add it via a pull request.
+
+                Repository: {repo_name}
+                Tag to check/add: {tag}
+                """
+
+                print(f"🤖 Processing tag '{tag}' for repo '{repo_name}'")
+                response = await agent.run(prompt)
+
+                # Parse agent response for success/failure
+                if "success" in response.lower():
+                    result_messages.append(f"✅ Tag '{tag}' processed successfully")
+                else:
+                    result_messages.append(f"⚠️ Issue with tag '{tag}': {response}")
+
+            except Exception as e:
+                error_msg = f"❌ Error processing tag '{tag}': {str(e)}"
+                print(error_msg)
+                result_messages.append(error_msg)
+
+        return result_messages
+
+    except Exception as e:
+        # Close the outer try: report malformed payloads or unexpected failures
+        error_msg = f"❌ Error in process_webhook_comment: {str(e)}"
+        print(error_msg)
+        return [error_msg]

+```

+

+The key insight here is that we give the agent a clear, structured prompt for each tag. The agent then:

+1. Understands it needs to check the current tags first

+2. Compares with the new tag we want to add

+3. Creates a pull request if needed

+4. Returns a summary of its actions

+

+This approach handles the complexity of tool orchestration automatically.

+

+### 2. Tag Extraction Logic

+

+Let's examine the tag extraction logic that feeds into our MCP processing:

+

+```python

+import re

+from typing import List

+

+# Recognized ML/AI tags for validation

+RECOGNIZED_TAGS = {
+    "pytorch", "tensorflow", "jax", "transformers", "diffusers",
+    "text-generation", "text-classification", "question-answering",
+    "text-to-image", "image-classification", "object-detection",
+    "fill-mask", "token-classification", "translation", "summarization",
+    "feature-extraction", "sentence-similarity", "zero-shot-classification",
+    "image-to-text", "automatic-speech-recognition", "audio-classification",
+    "voice-activity-detection", "depth-estimation", "image-segmentation",
+    "video-classification", "reinforcement-learning", "tabular-classification",
+    "tabular-regression", "time-series-forecasting", "graph-ml", "robotics",
+    "computer-vision", "nlp", "cv", "multimodal",
+}

+```

+

+This curated list of recognized tags helps us focus on relevant ML/AI tags and avoid adding inappropriate tags to repositories.

+

+Now the extraction function itself:

+

+```python

+def extract_tags_from_text(text: str) -> List[str]:
+    """Extract potential tags from discussion text"""
+    text_lower = text.lower()
+    explicit_tags = []
+
+    # Pattern 1: "tag: something" or "tags: something"
+    tag_pattern = r"tags?:\s*([a-zA-Z0-9-_,\s]+)"
+    matches = re.findall(tag_pattern, text_lower)
+    for match in matches:
+        tags = [tag.strip() for tag in match.split(",")]
+        explicit_tags.extend(tags)
+
+    # Pattern 2: "#hashtag" style
+    hashtag_pattern = r"#([a-zA-Z0-9-_]+)"
+    hashtag_matches = re.findall(hashtag_pattern, text_lower)
+    explicit_tags.extend(hashtag_matches)
+
+    # Pattern 3: Look for recognized tags mentioned in natural text
+    mentioned_tags = []
+    for tag in RECOGNIZED_TAGS:
+        if tag in text_lower:
+            mentioned_tags.append(tag)
+
+    # Combine and deduplicate
+    all_tags = list(set(explicit_tags + mentioned_tags))
+
+    # Filter to only include recognized tags or explicitly mentioned ones
+    valid_tags = []
+    for tag in all_tags:
+        if tag in RECOGNIZED_TAGS or tag in explicit_tags:
+            valid_tags.append(tag)
+
+    return valid_tags

+```

+

+This function uses multiple strategies to extract tags:

+

+1. **Explicit patterns**: "tags: pytorch, transformers" or "tag: nlp"

+2. **Hashtags**: "#pytorch #nlp"

+3. **Natural mentions**: "This transformers model does text-generation"

+

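+Natural mentions are only matched against `RECOGNIZED_TAGS`, which keeps unrelated words from being suggested as tags. Tags requested explicitly (via `tags:` or a hashtag) are kept even when they are not in the list, so the final filter does not reject them.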
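One caveat with the natural-mention strategy: `tag in text_lower` is a substring test, so a short tag such as `cv` or `jax` also matches inside longer words. Below is a minimal sketch of a stricter check, assuming tags consist of letters, digits, and hyphens. The helper name `find_mentioned_tags` is hypothetical and not part of the course code.

```python
import re
from typing import Iterable, List


def find_mentioned_tags(text: str, known_tags: Iterable[str]) -> List[str]:
    """Return known tags that appear in text as whole tokens, sorted alphabetically."""
    text_lower = text.lower()
    found = []
    for tag in known_tags:
        # Reject matches that are glued to a letter, digit, underscore, or hyphen
        pattern = rf"(?<![\w-]){re.escape(tag)}(?![\w-])"
        if re.search(pattern, text_lower):
            found.append(tag)
    return sorted(found)


print(find_mentioned_tags("We ported the ajax demo to JAX.", {"jax", "cv", "nlp"}))
```

Here `jax` is reported once, for the standalone word, and not for `ajax`. Whether this stricter behavior is desirable depends on how noisy your discussions are.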

+

+

+## Performance Considerations

+

+When building production MCP clients, performance is critical for maintaining responsive webhook processing. Let's look at some of the considerations we've made.

+

+### 1. Agent Singleton Pattern

+

+The agent is created once and reused to avoid:

+- Repeated MCP server startup overhead

+- Tool loading delays

+- Connection establishment costs

+

+This pattern is essential for webhook handlers that need to respond quickly.
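The singleton idea can be sketched independently of the agent itself. This is an illustration only: `LazySingleton` and `make_resource` are hypothetical names, and the factory stands in for whatever coroutine builds the agent and loads its tools.

```python
import asyncio
from typing import Awaitable, Callable, Optional


class LazySingleton:
    """Create an expensive async resource once and reuse it afterwards."""

    def __init__(self, factory: Callable[[], Awaitable[object]]):
        self._factory = factory      # hypothetical async factory, e.g. one that builds the agent
        self._instance: Optional[object] = None
        self._lock = asyncio.Lock()  # stops two concurrent first calls from both creating it

    async def get(self) -> object:
        if self._instance is None:
            async with self._lock:
                # Re-check inside the lock: another task may have finished creation
                if self._instance is None:
                    self._instance = await self._factory()
        return self._instance


async def demo() -> int:
    calls = 0

    async def make_resource() -> object:
        nonlocal calls
        calls += 1
        await asyncio.sleep(0)  # simulate startup work
        return object()

    holder = LazySingleton(make_resource)
    first, second = await asyncio.gather(holder.get(), holder.get())
    assert first is second
    return calls


print(asyncio.run(demo()))  # → 1
```

The lock matters because two webhooks can arrive before the first initialization has finished. Without it, both requests would pay the startup cost.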

+

+### 2. Async Processing

+

+All MCP operations are async to:

+- Handle multiple webhook requests concurrently

+- Avoid blocking FastAPI's event loop

+- Provide responsive webhook responses

+

+The async nature allows your webhook handler to accept new requests while processing tags in the background.

+

+### 3. Background Task Processing

+

+FastAPI has a built-in `BackgroundTasks` class that runs tasks after the response has been sent. This is useful for long-running work that should not delay the webhook response.

+

+```python

+from fastapi import BackgroundTasks, Request
+from fastapi.responses import JSONResponse
+
+@app.post("/webhook")
+async def webhook_handler(request: Request, background_tasks: BackgroundTasks):
+    """Handle webhook and process in background"""
+
+    # Validate webhook quickly; reject bad secrets with an error status code
+    if request.headers.get("X-Webhook-Secret") != WEBHOOK_SECRET:
+        return JSONResponse(status_code=400, content={"error": "Invalid secret"})
+
+    webhook_data = await request.json()
+
+    # Process in background to return quickly
+    background_tasks.add_task(process_webhook_comment, webhook_data)
+
+    return {"status": "accepted"}

+```

+

+This pattern keeps webhook responses fast (typically well under a second) while the slower tag processing runs in the background.

+

+

+

+Webhook endpoints should respond within 10 seconds or the platform may consider them timed out. Using background tasks ensures you can always respond quickly while handling complex processing asynchronously.

+

+

+

+## Next Steps

+

+With our MCP client implemented, we can now:

+

+1. **Implement the Webhook Listener** - Create the FastAPI endpoint that receives Hub events

+2. **Integrate Everything** - Connect webhooks, client, and server into a complete system

+3. **Add Testing Interface** - Create a Gradio interface for development and monitoring

+4. **Deploy and Test** - Validate the complete system in production

+

+In the next section, we'll implement the webhook listener that will trigger our MCP-powered tagging agent.

+

+

+

+The Agent class from `huggingface_hub` provides both MCP tool integration and language model reasoning, making it perfect for building intelligent automation workflows like our PR agent.

+

+

\ No newline at end of file

diff --git a/units/en/unit3_1/quiz1.mdx b/units/en/unit3_1/quiz1.mdx

new file mode 100644

index 0000000..9e74b3b

--- /dev/null

+++ b/units/en/unit3_1/quiz1.mdx

@@ -0,0 +1,149 @@

+# Quiz 1: MCP Server Implementation

+

+Test your knowledge of MCP server concepts and implementation for the Pull Request Agent.

+

+### Q1: What is the primary role of an MCP Server in the Pull Request Agent architecture?

+

+

+

+### Q2: In the FastMCP implementation, why must all MCP tool functions return strings instead of Python objects?

+

+

+

+### Q3: When implementing the `add_new_tag` tool, what is the purpose of checking if a tag already exists before creating a pull request?

+

+

+

+### Q4: In the MCP server implementation, what happens when a model repository doesn't have an existing README.md file?

+

+

+

+### Q5: What is the significance of using `create_pr=True` in the `hf_api.create_commit()` function call?

+

+

+

+### Q6: Why does the MCP server implementation use extensive logging with emojis throughout the code?

+

+

+

+Congrats on finishing this Quiz 🥳! If you need to review any elements, take the time to revisit the chapter to reinforce your knowledge.

\ No newline at end of file

diff --git a/units/en/unit3_1/quiz2.mdx b/units/en/unit3_1/quiz2.mdx

new file mode 100644

index 0000000..ee1a132

--- /dev/null

+++ b/units/en/unit3_1/quiz2.mdx

@@ -0,0 +1,173 @@

+# Quiz 2: Pull Request Agent Integration

+

+Test your knowledge of the complete Pull Request Agent system including MCP client integration and webhook handling.

+

+### Q1: What is the primary purpose of the webhook listener in the Pull Request Agent architecture?

+

+

+

+### Q2: In the Agent-based MCP client implementation, how does the client connect to the MCP server?

+

+

+

+### Q3: Why does the webhook handler use FastAPI's `background_tasks.add_task()` instead of processing requests synchronously?

+

+

+

+### Q4: What is the purpose of validating the `X-Webhook-Secret` header in the webhook handler?

+

+

+

+### Q5: In the Agent implementation, what happens when `await agent_instance.load_tools()` is called?

+

+

+

+### Q6: How does the Agent intelligently use MCP tools when processing a natural language instruction?

+

+

+

+### Q7: What filtering logic determines whether a webhook event should trigger tag processing?

+

+

+

+Congrats on finishing this Quiz 🥳! If you need to review any elements, take the time to revisit the chapter to reinforce your knowledge.

\ No newline at end of file

diff --git a/units/en/unit3_1/setting-up-the-project.mdx b/units/en/unit3_1/setting-up-the-project.mdx

new file mode 100644

index 0000000..4354672

--- /dev/null

+++ b/units/en/unit3_1/setting-up-the-project.mdx

@@ -0,0 +1,133 @@

+# Setting up the Project

+

+In this section, we'll set up the development environment for our Pull Request Agent.

+

+

+

+We'll use modern Python tooling with `uv` for dependency management and create the necessary configuration files. If you're not familiar with `uv`, you can learn more about it [here](https://docs.astral.sh/uv/).

+

+

+

+

+## Project Structure

+

+Let's start by cloning the project repository and looking at the file structure:

+

+```bash

+git clone https://huggingface.co/spaces/mcp-course/tag-this-repo

+```

+

+Our final project structure will look like this:

+

+```

+hf-pr-agent/
+├── mcp_server.py       # Core MCP server with tagging tools
+├── app.py              # FastAPI webhook listener and agent
+├── requirements.txt    # Python dependencies
+├── pyproject.toml      # Project configuration
+├── env.example         # Environment variables template
+└── cleanup.py          # Development utility

+```

+

+## Dependencies and Configuration

+

+Let's walk through the dependencies and configuration for our project.

+

+### 1. Python Project Configuration

+

+We will use `uv` to create the `pyproject.toml` file to define our project:

+

+

+

+If you don't have `uv` installed, you can follow the instructions [here](https://docs.astral.sh/uv/getting-started/installation/).

+

+

+

+```toml

+[project]

+name = "mcp-course-unit3-example"

+version = "0.1.0"

+description = "FastAPI and Gradio app for Hugging Face Hub discussion webhooks"

+readme = "README.md"

+requires-python = ">=3.11"

+dependencies = [

+ "fastapi>=0.104.0",

+ "uvicorn[standard]>=0.24.0",

+ "gradio>=4.0.0",

+ "huggingface-hub[mcp]>=0.32.0",

+ "pydantic>=2.0.0",

+ "python-multipart>=0.0.6",

+ "requests>=2.31.0",

+ "python-dotenv>=1.0.0",

+ "fastmcp>=2.0.0",

+]

+

+[build-system]

+requires = ["hatchling"]

+build-backend = "hatchling.build"

+

+[tool.hatch.build.targets.wheel]

+packages = ["src"]

+```

+

+For compatibility with various deployment platforms, the same dependencies are also listed in `requirements.txt`.

+

+To create a virtual environment, run:

+

+```bash

+uv venv

+source .venv/bin/activate # or .venv/Scripts/activate on Windows

+```

+

+To install the dependencies, run:

+

+```bash

+uv sync

+```

+

+### 2. Environment Configuration

+

+Create `env.example` to document required environment variables:

+

+```bash

+# Hugging Face API Token (required)

+# Get from: https://huggingface.co/settings/tokens

+HF_TOKEN=hf_your_token_here

+

+# Webhook Secret (required for production)

+# Use a strong, random string

+WEBHOOK_SECRET=your-webhook-secret-here

+

+# Model for the agent (optional)

+HF_MODEL=owner/model

+

+# Provider for MCP agent (optional)

+HF_PROVIDER=huggingface

+```

+

+You will need to get your Hugging Face API token from [here](https://huggingface.co/settings/tokens).

+

+You will also need to generate a webhook secret. You can do this by running the following command:

+

+```bash

+python -c "import secrets; print(secrets.token_hex(32))"

+```

+

+You will then need to add the webhook secret to your `.env` file based on the `env.example` file.
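Before starting the application, it can help to confirm that the required variables are actually set. This is a minimal sketch using only the standard library. `missing_settings` is a hypothetical helper, not part of the project, and it assumes the two variables marked "required" in `env.example` have already been loaded into the environment.

```python
import os
from typing import List, Mapping

REQUIRED_SETTINGS = ("HF_TOKEN", "WEBHOOK_SECRET")


def missing_settings(env: Mapping[str, str]) -> List[str]:
    """Return the names of required settings that are unset or blank."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_settings(os.environ)
    if missing:
        print(f"Missing settings: {', '.join(missing)}")
    else:
        print("All required settings are present")
```

Passing the environment in as a mapping keeps the check easy to test with a plain dictionary.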

+

+## Next Steps

+

+With our project structure and environment set up, we're ready to:

+

+1. **Create the MCP Server** - Implement the core tagging functionality

+2. **Build the Webhook Listener** - Handle incoming discussion events

+3. **Integrate the Agent** - Connect MCP tools with webhook processing

+4. **Test and Deploy** - Validate functionality and deploy to Spaces

+

+In the next section, we'll dive into creating our MCP server that will handle all the Hugging Face Hub interactions.

+

+

+

+Keep your `.env` file secure and never commit it to version control. The `.env` file should be added to your `.gitignore` file to prevent accidental exposure of secrets.

+

+

\ No newline at end of file

diff --git a/units/en/unit3_1/webhook-listener.mdx b/units/en/unit3_1/webhook-listener.mdx

new file mode 100644

index 0000000..62e0fa3

--- /dev/null

+++ b/units/en/unit3_1/webhook-listener.mdx

@@ -0,0 +1,519 @@

+# Webhook Listener

+

+The webhook listener is the entry point for our Pull Request Agent. It receives real-time events from the Hugging Face Hub when discussions are created or updated, triggering our MCP-powered tagging workflow. In this section, we'll implement a webhook handler using FastAPI.

+

+## Understanding Webhook Integration

+

+Following the [Hugging Face Webhooks Guide](https://raw.githubusercontent.com/huggingface/hub-docs/refs/heads/main/docs/hub/webhooks-guide-discussion-bot.md), our webhook listener validates incoming requests and processes discussion events in real-time.

+

+

+

+### Webhook Event Flow

+

+Understanding the webhook flow is crucial for building a reliable listener:

+

+1. **User Action**: Someone creates a comment in a model repository discussion

+2. **Hub Event**: Hugging Face generates a webhook event

+3. **Webhook Delivery**: Hub sends POST request to our endpoint

+4. **Authentication**: We validate the webhook secret

+5. **Processing**: Extract tags from the comment content

+6. **Action**: Use MCP tools to create pull requests for new tags

+

+

+

+Webhooks are push notifications - the Hugging Face Hub actively sends events to your application rather than you polling for changes. This enables real-time responses to discussions and comments.

+

+

+

+## FastAPI Webhook Application

+

+Let's build our webhook listener step by step, starting with the foundation and building up to the complete processing logic.

+

+### 1. Application Setup

+

+First, let's set up the basic FastAPI application with all necessary imports and configuration:

+

+```python

+import os

+import json

+from datetime import datetime

+from typing import List, Dict, Any, Optional

+

+from fastapi import FastAPI, Request, BackgroundTasks
+from fastapi.middleware.cors import CORSMiddleware
+from fastapi.responses import JSONResponse
+from pydantic import BaseModel

+```

+

+These imports give us everything we need to build a robust webhook handler. `FastAPI` provides the web framework, `BackgroundTasks` lets us defer work until after the response is sent, and Pydantic's `BaseModel` together with the typing imports lets us describe the shape of the data.

+

+Now let's configure our application:

+

+```python

+# Configuration

+WEBHOOK_SECRET = os.getenv("WEBHOOK_SECRET")

+HF_TOKEN = os.getenv("HF_TOKEN")

+

+# Simple storage for processed operations

+tag_operations_store: List[Dict[str, Any]] = []

+

+app = FastAPI(title="HF Tagging Bot")

+app.add_middleware(CORSMiddleware, allow_origins=["*"])

+```

+

+This configuration sets up:

+- **Webhook secret**: For validating incoming webhooks

+- **HF token**: For authenticating with the Hub API

+- **Operations store**: In-memory storage for monitoring processed operations

+- **CORS middleware**: Allows cross-origin requests for the web interface

+

+

+The `tag_operations_store` list keeps track of recent webhook processing operations. This is useful for debugging and monitoring, but in production you might want to use a database or limit the size of this list.
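One simple way to cap the size of such a store is a bounded `collections.deque`, which discards the oldest entry when a new one is appended. This is a sketch rather than the course implementation. The name `recent_operations` and the limit are illustrative assumptions.

```python
from collections import deque
from typing import Any, Deque, Dict

MAX_STORED_OPERATIONS = 3  # tiny limit for illustration; use something larger in practice

recent_operations: Deque[Dict[str, Any]] = deque(maxlen=MAX_STORED_OPERATIONS)

for n in range(5):
    recent_operations.append({"discussion_num": n, "status": "completed"})

print([op["discussion_num"] for op in recent_operations])  # → [2, 3, 4]
```

A deque does not support slicing, so an endpoint that returns recent entries would convert it with `list(recent_operations)` first.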

+

+

+### 2. Webhook Data Models

+

+Based on the [Hugging Face webhook documentation](https://raw.githubusercontent.com/huggingface/hub-docs/refs/heads/main/docs/hub/webhooks-guide-discussion-bot.md), we need to understand the webhook data structure:

+

+```python

+class WebhookEvent(BaseModel):
+    event: Dict[str, Any]       # Contains action and scope information
+    comment: Dict[str, Any]     # Comment content and metadata
+    discussion: Dict[str, Any]  # Discussion information
+    repo: Dict[str, Any]        # Repository details (values are not all strings)

+```

+

+This Pydantic model documents the webhook structure. The handler below reads the raw JSON payload directly rather than validating against the model.

+

+The key fields we care about are:

+- `event.action`: Usually "create" for new comments

+- `event.scope`: Usually "discussion.comment" for comment events

+- `comment.content`: The actual comment text

+- `repo.name`: The repository where the comment was made
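The four fields above can be pulled out of a payload defensively, so that a missing key yields `None` instead of raising. This is a sketch with a hypothetical helper name (`summarize_event`). The sample payload only mirrors the fields listed here and is not a complete Hub payload.

```python
from typing import Any, Dict, Optional


def summarize_event(payload: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Pull the four fields of interest out of a webhook payload, tolerating missing keys."""
    event = payload.get("event") or {}
    comment = payload.get("comment") or {}
    repo = payload.get("repo") or {}
    return {
        "action": event.get("action"),
        "scope": event.get("scope"),
        "content": comment.get("content"),
        "repo_name": repo.get("name"),
    }


sample_payload = {
    "event": {"action": "create", "scope": "discussion.comment"},
    "comment": {"content": "tags: pytorch"},
    "repo": {"name": "test-user/test-model"},
}
print(summarize_event(sample_payload))
```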

+

+### 3. Core Webhook Handler

+

+Now for the main webhook handler, which contains the core logic. Let's break it down into digestible pieces:

+

+```python

+@app.post("/webhook")
+async def webhook_handler(request: Request, background_tasks: BackgroundTasks):
+    """
+    Handle incoming webhooks from Hugging Face Hub
+    Following the pattern from: https://raw.githubusercontent.com/huggingface/hub-docs/refs/heads/main/docs/hub/webhooks-guide-discussion-bot.md
+    """
+    print("🔔 Webhook received!")
+
+    # Step 1: Validate webhook secret (security)
+    webhook_secret = request.headers.get("X-Webhook-Secret")
+    if webhook_secret != WEBHOOK_SECRET:
+        print("❌ Invalid webhook secret")
+        # Returning a (dict, 400) tuple would be sent with status 200 in FastAPI,
+        # so set the status code explicitly (JSONResponse is from fastapi.responses)
+        return JSONResponse(status_code=400, content={"error": "incorrect secret"})

+```

+

+The first step is security validation. We check the `X-Webhook-Secret` header against our configured secret to ensure the webhook is legitimate.

+

+

+

+Always validate webhook secrets! Without this check, anyone could send fake webhook requests to your application. The secret acts as a shared password between Hugging Face and your application.
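Two details are worth tightening in a plain `!=` comparison: if the secret is not configured and the header is absent, both sides are `None` and the check passes, and ordinary string comparison is not constant-time. Here is a minimal sketch of a stricter check using the standard library's `hmac.compare_digest`. The function name `is_valid_secret` is an assumption for illustration.

```python
import hmac
from typing import Optional


def is_valid_secret(received: Optional[str], expected: Optional[str]) -> bool:
    """Compare the header value with the configured secret in constant time."""
    if not received or not expected:
        # A missing header or an unconfigured secret must never count as a match
        return False
    return hmac.compare_digest(received.encode("utf-8"), expected.encode("utf-8"))


print(is_valid_secret("s3cret", "s3cret"))  # → True
print(is_valid_secret(None, None))          # → False
```

In the handler, this would replace the direct comparison of the `X-Webhook-Secret` header with `WEBHOOK_SECRET`.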

+

+

+

+Next, let's parse and validate the webhook data:

+

+```python

+    # Step 2: Parse webhook data
+    # (error responses use JSONResponse from fastapi.responses to set the status code)
+    try:
+        webhook_data = await request.json()
+        print(f"📥 Webhook data: {json.dumps(webhook_data, indent=2)}")
+    except Exception as e:
+        print(f"❌ Error parsing webhook data: {str(e)}")
+        return JSONResponse(status_code=400, content={"error": "invalid JSON"})
+
+    # Step 3: Validate event structure
+    event = webhook_data.get("event", {})
+    if not event:
+        print("❌ No event data in webhook")
+        return JSONResponse(status_code=400, content={"error": "missing event data"})

+```

+

+This parsing step handles potential JSON errors gracefully and validates that we have the expected event structure.

+

+Now for the event filtering logic:

+

+```python

+    # Step 4: Check if this is a discussion comment creation
+    # Following the webhook guide pattern:
+    if (
+        event.get("action") == "create" and
+        event.get("scope") == "discussion.comment"
+    ):
+        print("✅ Valid discussion comment creation event")
+
+        # Process in background to return quickly to Hub
+        background_tasks.add_task(process_webhook_comment, webhook_data)
+
+        return {
+            "status": "accepted",
+            "message": "Comment processing started",
+            "timestamp": datetime.now().isoformat()
+        }
+    else:
+        print(f"ℹ️ Ignoring event: action={event.get('action')}, scope={event.get('scope')}")
+        return {
+            "status": "ignored",
+            "reason": "Not a discussion comment creation"
+        }

+```

+

+This filtering ensures we only process the events we care about - new discussion comments. We ignore other events like repository creation, model uploads, etc.
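The filtering rule can also be expressed as a small pure function, which makes it straightforward to unit-test without starting the web server. This is a sketch, and `should_process` is a hypothetical name.

```python
from typing import Any, Dict


def should_process(event: Dict[str, Any]) -> bool:
    """True only for newly created discussion comments."""
    return event.get("action") == "create" and event.get("scope") == "discussion.comment"


print(should_process({"action": "create", "scope": "discussion.comment"}))  # → True
print(should_process({"action": "update", "scope": "repo.content"}))        # → False
```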

+

+We use FastAPI's `background_tasks.add_task()` to process the webhook asynchronously. This allows us to return a response quickly (within seconds) while the actual tag processing happens in the background.

+

+

+

+Webhook endpoints should respond within 10 seconds, or the sending platform may consider them failed. Using background tasks ensures fast responses while allowing complex processing to happen asynchronously.

+

+

+

+### 4. Comment Processing Logic

+

+Now let's implement the core comment processing function that does the actual tag extraction and MCP tool usage:

+

+```python

+async def process_webhook_comment(webhook_data: Dict[str, Any]):
+    """
+    Process webhook comment to detect and add tags
+    Integrates with our MCP client for Hub interactions
+    """
+    print("🏷️ Starting process_webhook_comment...")
+
+    try:
+        # Extract comment and repository information
+        comment_content = webhook_data["comment"]["content"]
+        discussion_title = webhook_data["discussion"]["title"]
+        repo_name = webhook_data["repo"]["name"]
+        discussion_num = webhook_data["discussion"]["num"]
+        comment_author = webhook_data["comment"]["author"].get("id", "unknown")
+
+        print(f"📝 Comment from {comment_author}: {comment_content}")
+        print(f"📰 Discussion: {discussion_title}")
+        print(f"📦 Repository: {repo_name}")

+```

+

+This initial section extracts all the relevant information from the webhook data. We get both the comment content and discussion title since tags might be mentioned in either place.

+

+Next, we extract and process the tags:

+

+```python

+        # Extract potential tags from comment and title
+        comment_tags = extract_tags_from_text(comment_content)
+        title_tags = extract_tags_from_text(discussion_title)
+        all_tags = list(set(comment_tags + title_tags))
+
+        print(f"🔍 Found tags: {all_tags}")
+
+        # Store operation for monitoring
+        operation = {
+            "timestamp": datetime.now().isoformat(),
+            "repo_name": repo_name,
+            "discussion_num": discussion_num,
+            "comment_author": comment_author,
+            "extracted_tags": all_tags,
+            "comment_preview": comment_content[:100] + "..." if len(comment_content) > 100 else comment_content,
+            "status": "processing"
+        }
+        tag_operations_store.append(operation)

+```

+

+We combine tags from both sources and create an operation record for monitoring. This record tracks the progress of each webhook processing operation.

+

+

+

+Storing operation records is crucial for debugging and monitoring. When something goes wrong, you can look at recent operations to understand what happened and why.

+

+

+

+Now for the MCP agent integration:

+

+```python

+        if not all_tags:
+            operation["status"] = "no_tags"
+            operation["message"] = "No recognizable tags found"
+            print("❌ No tags found to process")
+            return
+
+        # Get MCP agent for tag processing
+        agent = await get_agent()
+        if not agent:
+            operation["status"] = "error"
+            operation["message"] = "Agent not configured (missing HF_TOKEN)"
+            print("❌ No agent available")
+            return
+
+        # Process each extracted tag
+        operation["results"] = []
+        for tag in all_tags:
+            try:
+                print(f"🤖 Processing tag '{tag}' for repo '{repo_name}'")
+
+                # Create prompt for agent to handle tag processing
+                prompt = f"""
+                Analyze the repository '{repo_name}' and determine if the tag '{tag}' should be added.
+
+                First, check the current tags using get_current_tags.
+                If '{tag}' is not already present and it's a valid tag, add it using add_new_tag.
+
+                Repository: {repo_name}
+                Tag to process: {tag}
+
+                Provide a clear summary of what was done.
+                """
+
+                response = await agent.run(prompt)
+                print(f"🤖 Agent response for '{tag}': {response}")
+
+                # Parse response and store result
+                tag_result = {
+                    "tag": tag,
+                    "response": response,
+                    "timestamp": datetime.now().isoformat()
+                }
+                operation["results"].append(tag_result)
+
+            except Exception as e:
+                error_msg = f"❌ Error processing tag '{tag}': {str(e)}"
+                print(error_msg)
+                operation["results"].append({
+                    "tag": tag,
+                    "error": str(e),
+                    "timestamp": datetime.now().isoformat()
+                })
+
+        operation["status"] = "completed"
+        print(f"✅ Completed processing {len(all_tags)} tags")
+
+    except Exception as e:
+        # Closes the outer try opened at the top of the function
+        print(f"❌ Error in process_webhook_comment: {str(e)}")

+```

+

+This section handles the core business logic:

+1. **Validation**: Ensure we have tags to process and an available agent

+2. **Processing**: For each tag, create a natural language prompt for the agent

+3. **Recording**: Store all results for monitoring and debugging

+4. **Error handling**: Gracefully handle errors for individual tags

+

+The agent prompt is carefully crafted to instruct the AI on exactly what steps to take: check current tags first, then add the new tag if appropriate.
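Because the prompt is a triple-quoted string written inside indented code, each line reaches the model with leading whitespace. One way to keep the source readable while sending clean text is `textwrap.dedent`. This is only a sketch, and the helper name `build_tag_prompt` is an assumption, not part of the course code.

```python
from textwrap import dedent


def build_tag_prompt(repo_name: str, tag: str) -> str:
    """Build the per-tag instruction without the source-code indentation."""
    return dedent(
        f"""
        Analyze the repository '{repo_name}' and determine if the tag '{tag}' should be added.

        First, check the current tags using get_current_tags.
        If '{tag}' is not already present and it's a valid tag, add it using add_new_tag.

        Repository: {repo_name}
        Tag to process: {tag}

        Provide a clear summary of what was done.
        """
    ).strip()


print(build_tag_prompt("test-user/test-model", "pytorch"))
```

Keeping prompt construction in one function also makes it easier to adjust the wording later without touching the processing loop.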

+

+

+### 5. Health and Monitoring Endpoints

+

+Besides the webhook handler, we need endpoints for monitoring and debugging. Let's add these essential endpoints:

+

+```python

+@app.get("/")
+async def root():
+    """Root endpoint with basic information"""
+    return {
+        "name": "HF Tagging Bot",
+        "status": "running",
+        "description": "Webhook listener for automatic model tagging",
+        "endpoints": {
+            "webhook": "/webhook",
+            "health": "/health",
+            "operations": "/operations"
+        }
+    }

+```

+

+The root endpoint provides basic information about your service and its available endpoints.

+

+```python

+@app.get("/health")
+async def health_check():
+    """Health check endpoint for monitoring"""
+    agent = await get_agent()
+
+    return {
+        "status": "healthy",
+        "timestamp": datetime.now().isoformat(),
+        "components": {
+            "webhook_secret": "configured" if WEBHOOK_SECRET else "missing",
+            "hf_token": "configured" if HF_TOKEN else "missing",
+            "mcp_agent": "ready" if agent else "not_ready"
+        }
+    }

+```

+

+The health check endpoint reports whether each component is configured. Note that the top-level `status` is always `"healthy"`, so production monitoring should inspect the `components` values as well.

+

+```python

+@app.get("/operations")
+async def get_operations():
+    """Get recent tag operations for monitoring"""
+    # Return last 50 operations
+    recent_ops = tag_operations_store[-50:] if tag_operations_store else []
+    return {
+        "total_operations": len(tag_operations_store),
+        "recent_operations": recent_ops
+    }

+```

+

+The operations endpoint lets you see recent webhook processing activity, which is invaluable for debugging and monitoring.

+

+

+

+Health and monitoring endpoints are crucial for production deployments. They help you quickly identify configuration issues and monitor your application's activity without digging through logs.

+

+

+

+## Webhook Configuration on Hugging Face Hub

+

+Now that we have our webhook listener ready, let's configure it on the Hugging Face Hub. This is where we connect our application to real repository events.

+

+### 1. Create Webhook in Settings

+

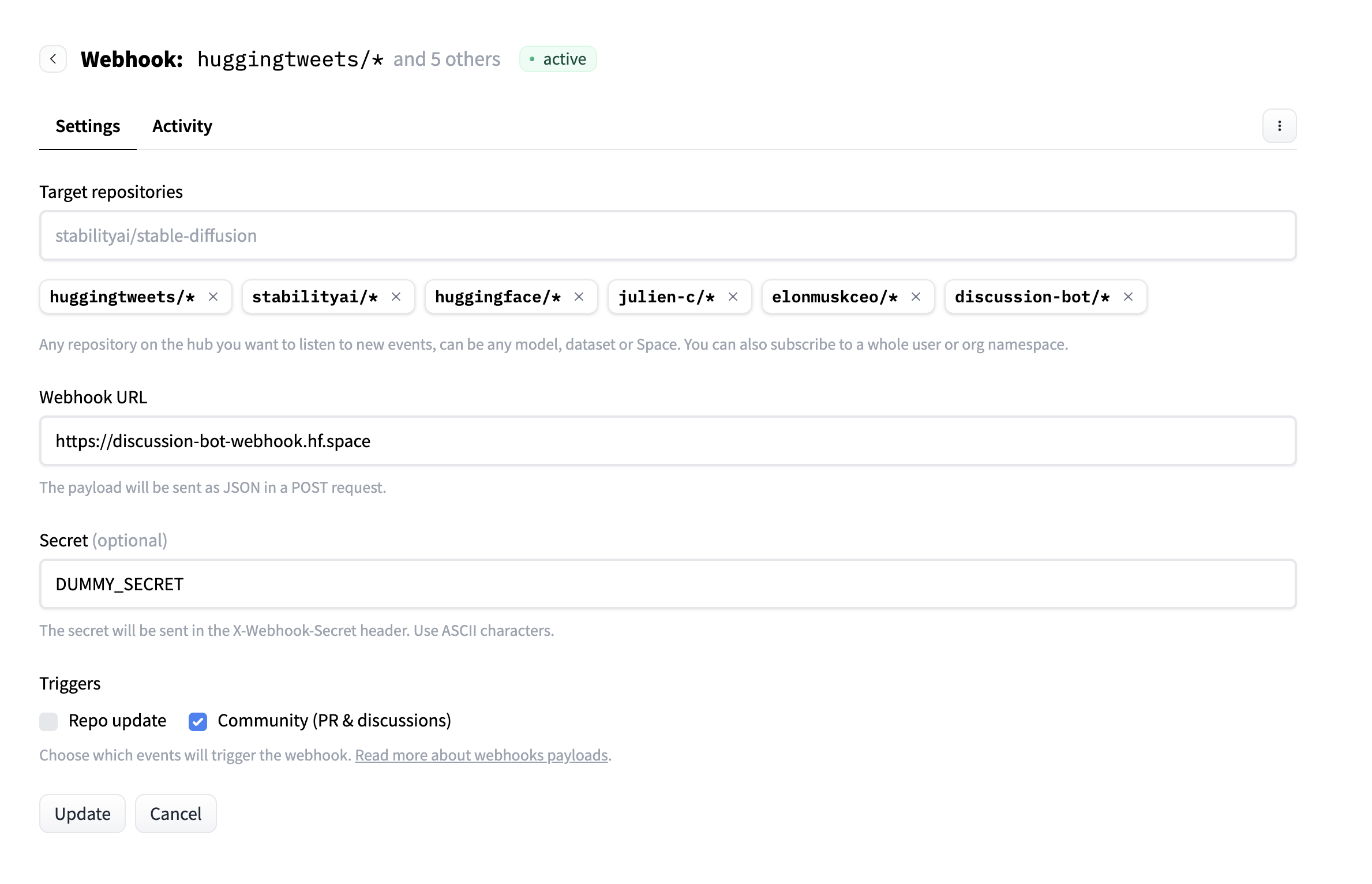

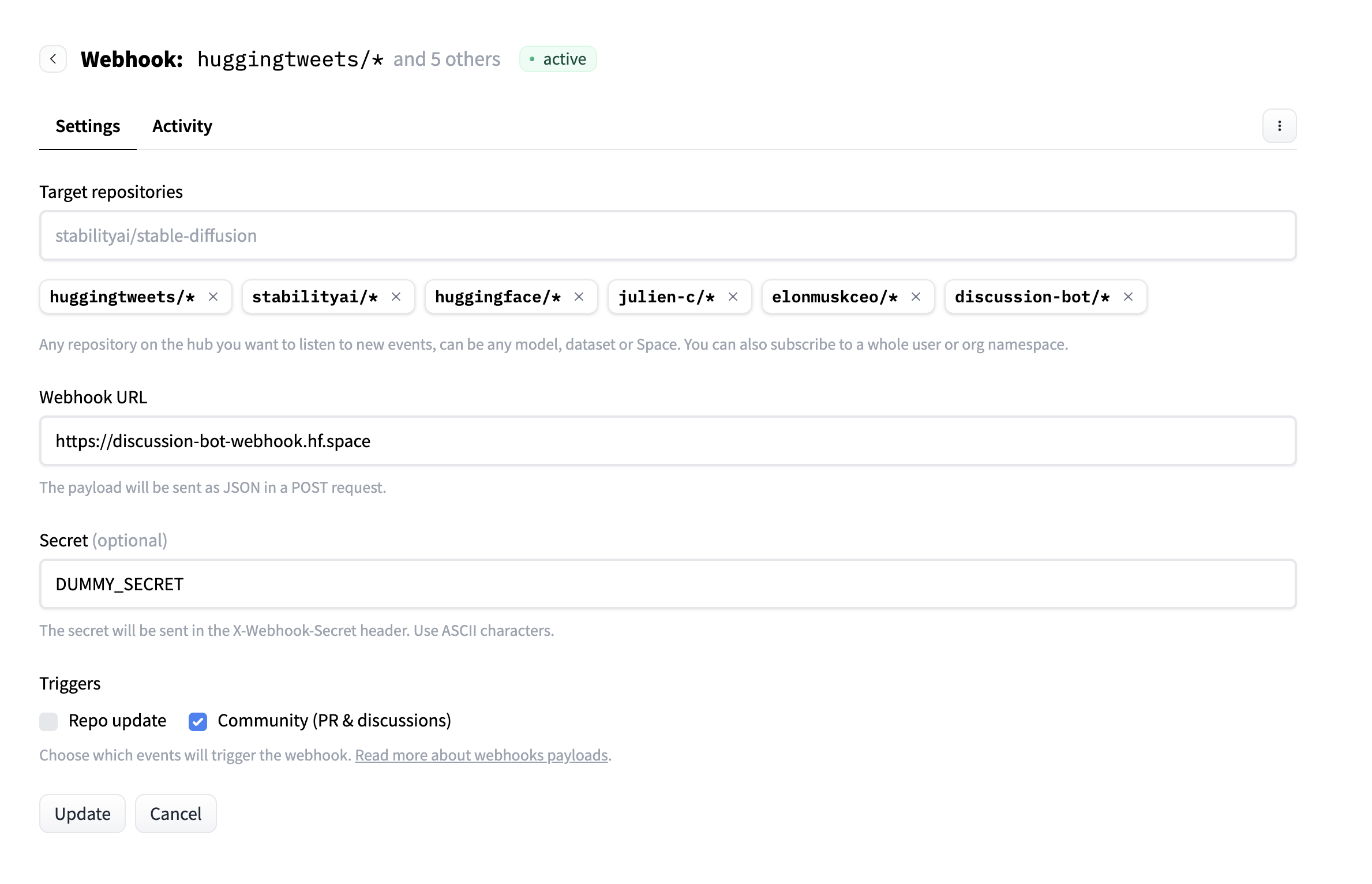

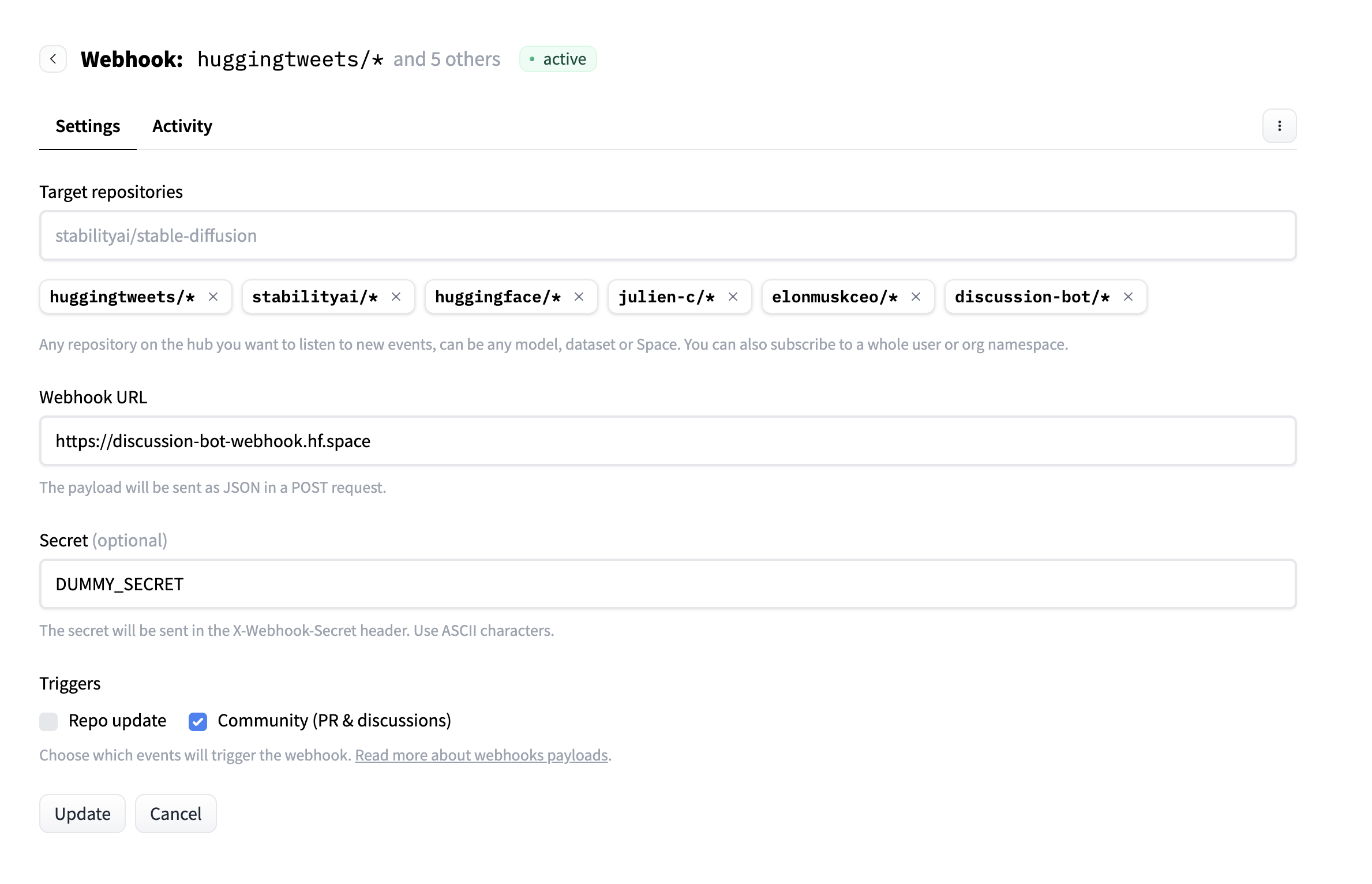

+Following the [webhook setup guide](https://huggingface.co/docs/hub/webhooks-guide-discussion-bot):

+

+

+

+Navigate to your [Hugging Face Settings](https://huggingface.co/settings/webhooks) and configure:

+

+1. **Target Repositories**: Specify which repositories to monitor

+2. **Webhook URL**: Your deployed application endpoint (e.g., `https://your-space.hf.space/webhook`)

+3. **Secret**: Use the same secret from your `WEBHOOK_SECRET` environment variable

+4. **Events**: Subscribe to "Community (PR & discussions)" events

+

+

+

+Start with one or two test repositories before configuring webhooks for many repositories. This lets you validate your application works correctly before scaling up.

+

+

+

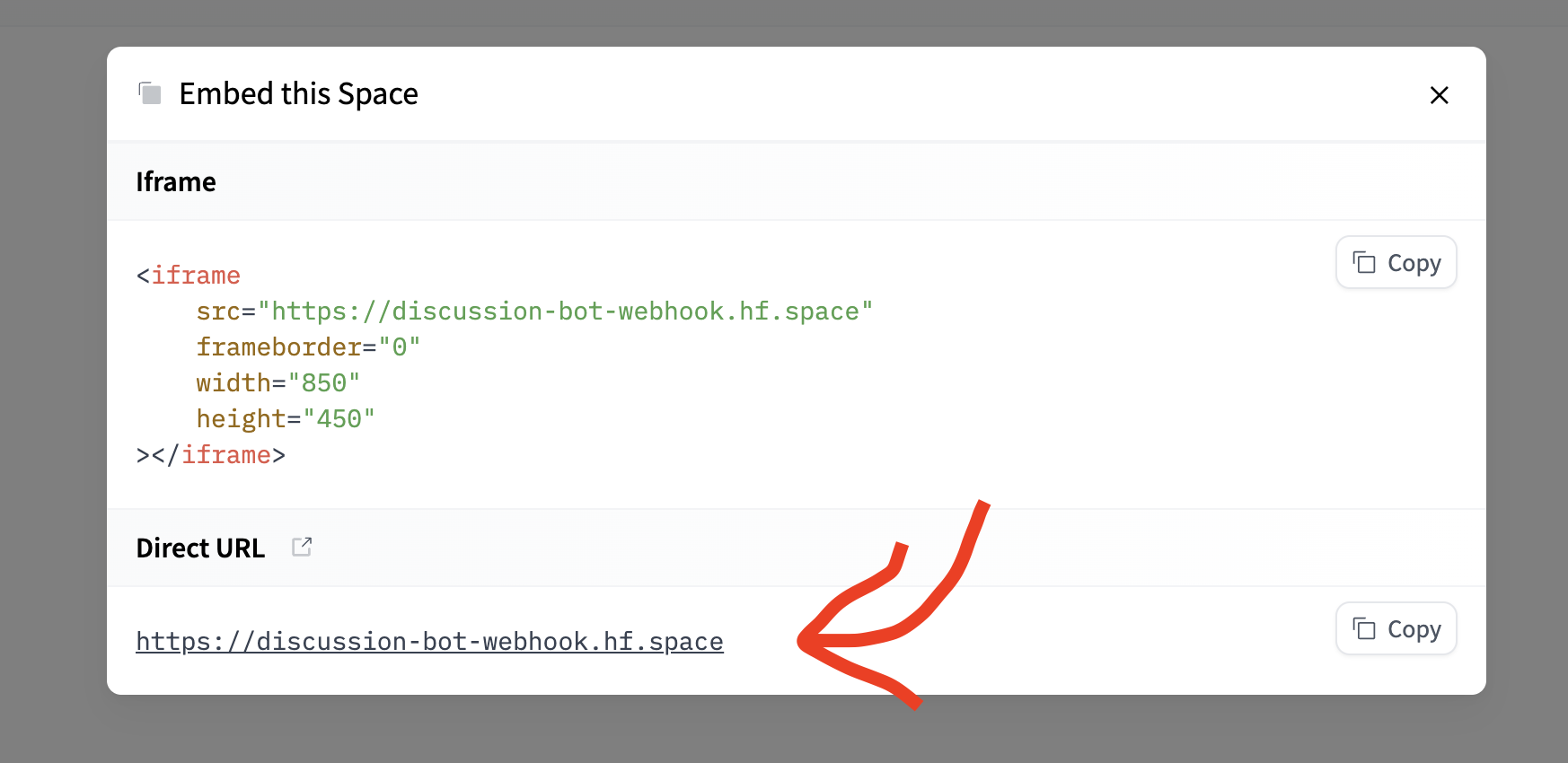

+### 2. Space URL Configuration

+

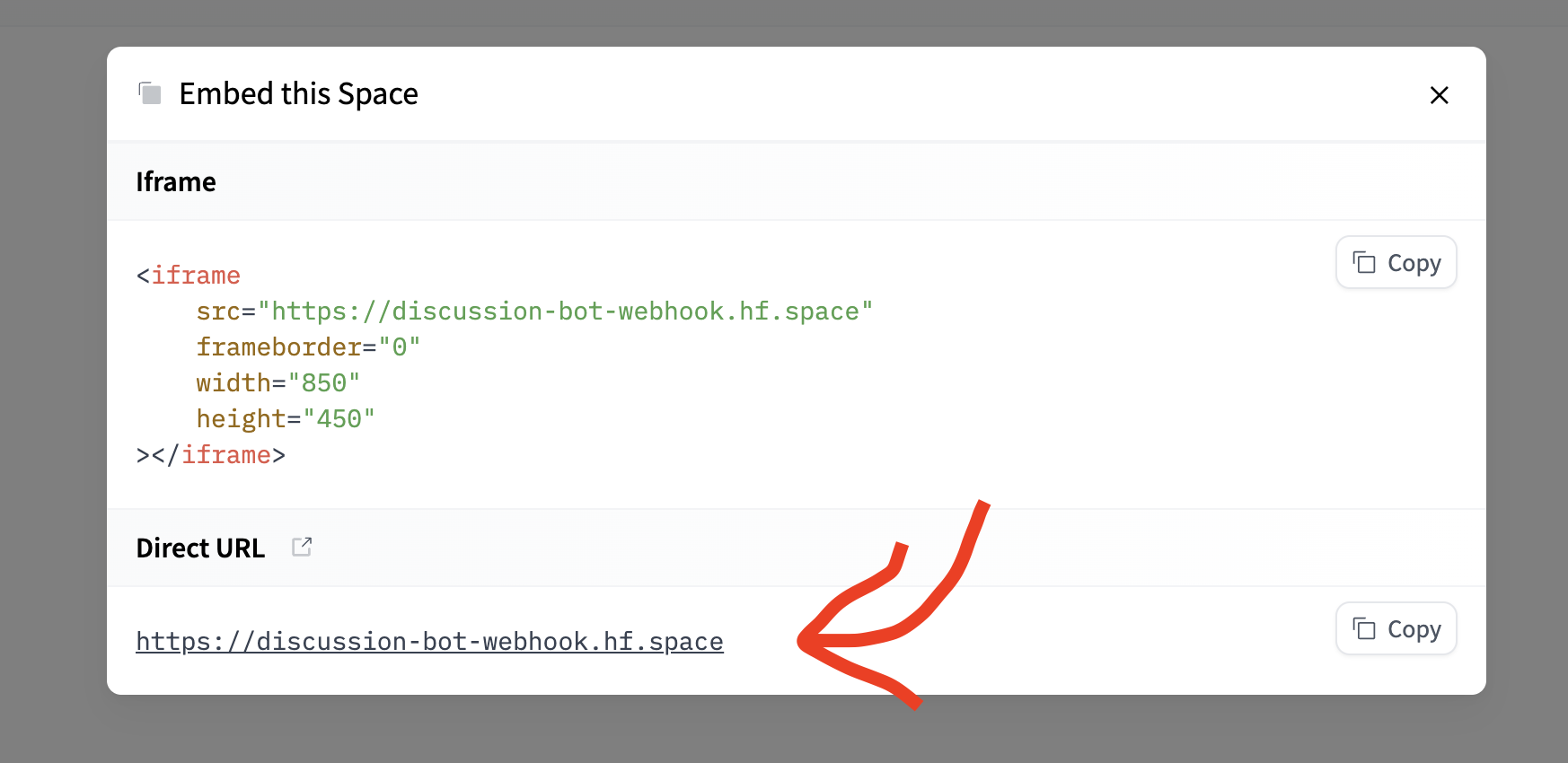

+For Hugging Face Spaces deployment, you'll need to get your direct URL:

+

+

+

+The process is:

+1. Click "Embed this Space" in your Space settings

+2. Copy the "Direct URL"

+3. Append `/webhook` to create your webhook endpoint

+4. Update your webhook configuration with this URL

+

+For example, if your Space URL is `https://username-space-name.hf.space`, your webhook endpoint would be `https://username-space-name.hf.space/webhook`.
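If you build the endpoint URL in a script, a small helper avoids doubled or missing slashes. This is a sketch, and `webhook_url` is a hypothetical name.

```python
def webhook_url(direct_url: str, path: str = "/webhook") -> str:
    """Join a Space's direct URL and the webhook path without doubling slashes."""
    return direct_url.rstrip("/") + "/" + path.lstrip("/")


print(webhook_url("https://username-space-name.hf.space/"))
# → https://username-space-name.hf.space/webhook
```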

+

+

+

+## Testing the Webhook Listener

+

+Testing is crucial before deploying to production. Let's walk through different testing approaches:

+

+### 1. Local Testing

+

+You can test your webhook handler locally using a simple script:

+

+```python

+# test_webhook_local.py
+import requests
+import json
+
+# Test data matching webhook format
+test_webhook_data = {
+    "event": {
+        "action": "create",
+        "scope": "discussion.comment"
+    },
+    "comment": {
+        "content": "This model needs tags: pytorch, transformers",
+        "author": {"id": "test-user"}
+    },
+    "discussion": {
+        "title": "Missing tags",
+        "num": 1
+    },
+    "repo": {
+        "name": "test-user/test-model"
+    }
+}
+
+# Send test webhook
+response = requests.post(
+    "http://localhost:8000/webhook",
+    json=test_webhook_data,
+    headers={"X-Webhook-Secret": "your-test-secret"}
+)
+
+print(f"Status: {response.status_code}")
+print(f"Response: {response.json()}")

+```

+

+This script simulates a real webhook request, allowing you to test your handler without waiting for real events.

+

+### 2. Simulation Endpoint for Development

+

+You can also add a simulation endpoint to your FastAPI application for easier testing:

+

+```python

+@app.post("/simulate_webhook")
+async def simulate_webhook(
+    repo_name: str,
+    discussion_title: str,
+    comment_content: str
+) -> str:
+    """Simulate webhook for testing purposes"""
+
+    # Create mock webhook data
+    mock_webhook_data = {
+        "event": {
+            "action": "create",
+            "scope": "discussion.comment"
+        },
+        "comment": {
+            "content": comment_content,
+            "author": {"id": "test-user"}
+        },
+        "discussion": {
+            "title": discussion_title,
+            "num": 999
+        },
+        "repo": {
+            "name": repo_name
+        }
+    }
+
+    # Process the simulated webhook
+    await process_webhook_comment(mock_webhook_data)
+
+    return f"Simulated webhook processed for {repo_name}"

+```

+

+This endpoint makes it easy to test different scenarios through your application's interface.

+

+

+Simulation endpoints are incredibly useful during development. They let you test different tag combinations and edge cases without creating actual repository discussions.

+

+

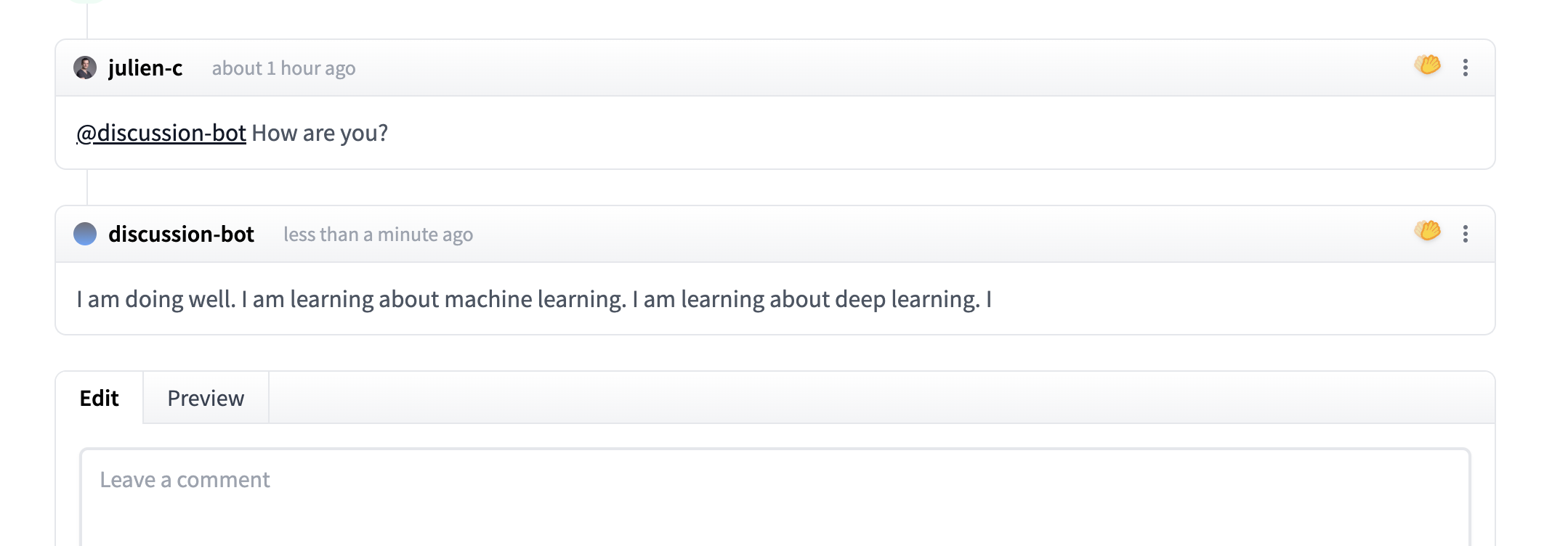

+## Expected Webhook Result

+

+When everything is working correctly, you should see results like the discussion bot example:

+

+

+

+

+

+This screenshot shows a successful webhook processing where the bot creates a pull request in response to a discussion comment.

+

+## Next Steps

+

+With our webhook listener implemented, we now have:

+

+1. **Secure webhook validation** following Hugging Face best practices

+2. **Real-time event processing** with background task handling

+3. **MCP integration** for intelligent tag management

+4. **Monitoring and debugging** capabilities

+

+In the next section, we'll integrate everything into a complete Pull Request Agent that demonstrates the full workflow from webhook to PR creation.

+

+

+

+Always return webhook responses quickly (within 10 seconds) to avoid timeouts. Use background tasks for longer processing operations like MCP tool execution and pull request creation.

+

+

\ No newline at end of file

diff --git a/units/en/unit4/introduction.mdx b/units/en/unit4/introduction.mdx

deleted file mode 100644

index 5eed14d..0000000

--- a/units/en/unit4/introduction.mdx

+++ /dev/null

@@ -1,5 +0,0 @@

-# Coming Soon

-

-This unit will be a collaboration with partners from the AI community.

-

-If you're building tools for MCP, please reach out to us and we'll add you to the unit. Open a [discussion](https://huggingface.co/spaces/mcp-course/README/discussions) on the hub organization.

\ No newline at end of file