
| 1 | +# Building a Vanilla JavaScript Application |

| 2 | + |

| 3 | +In this tutorial, you’ll build a simple web application that detects objects in images using Transformers.js! To follow along, all you need is a code editor, a browser, and a simple server (e.g., VS Code Live Server). |

| 4 | + |

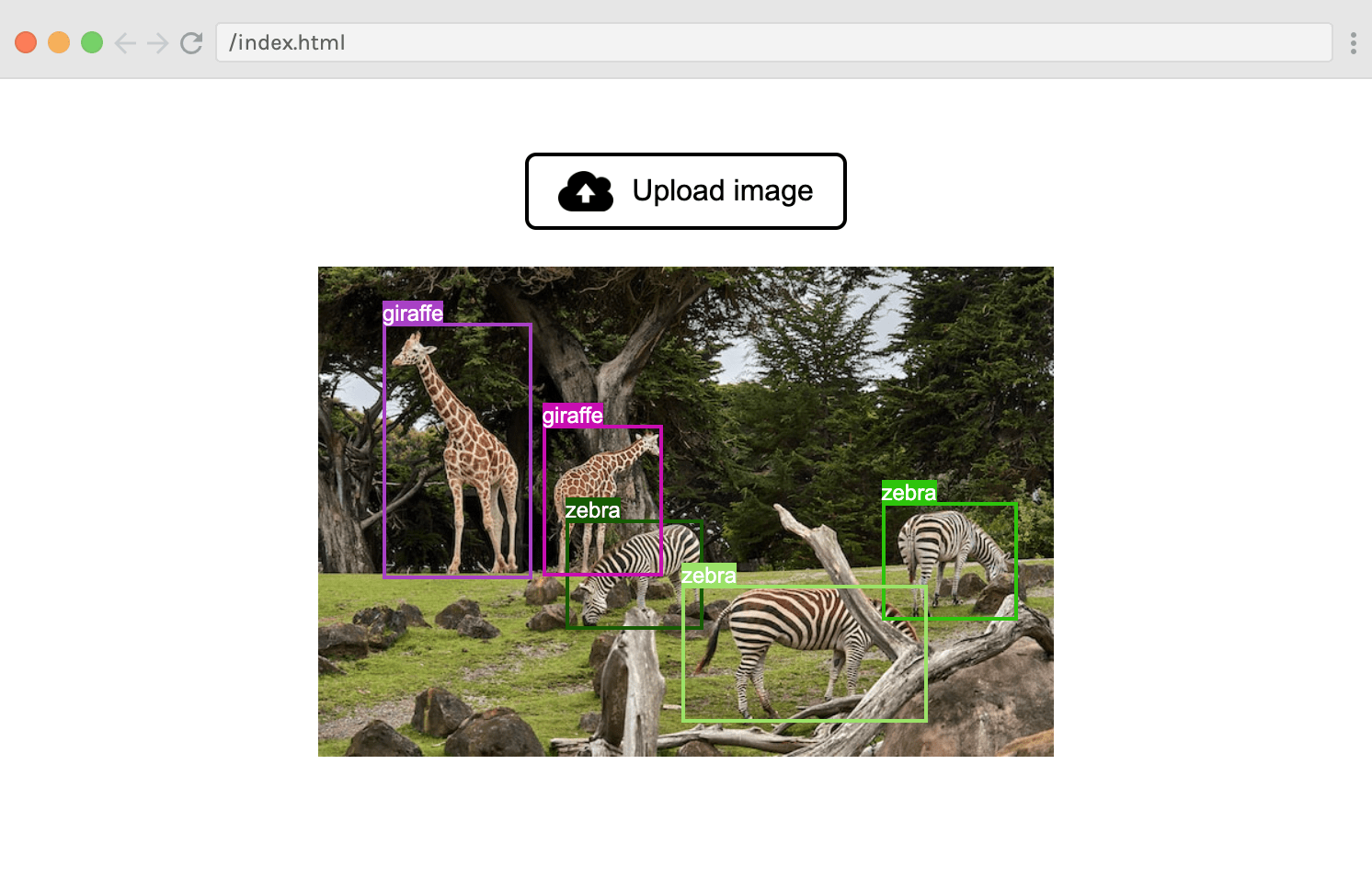

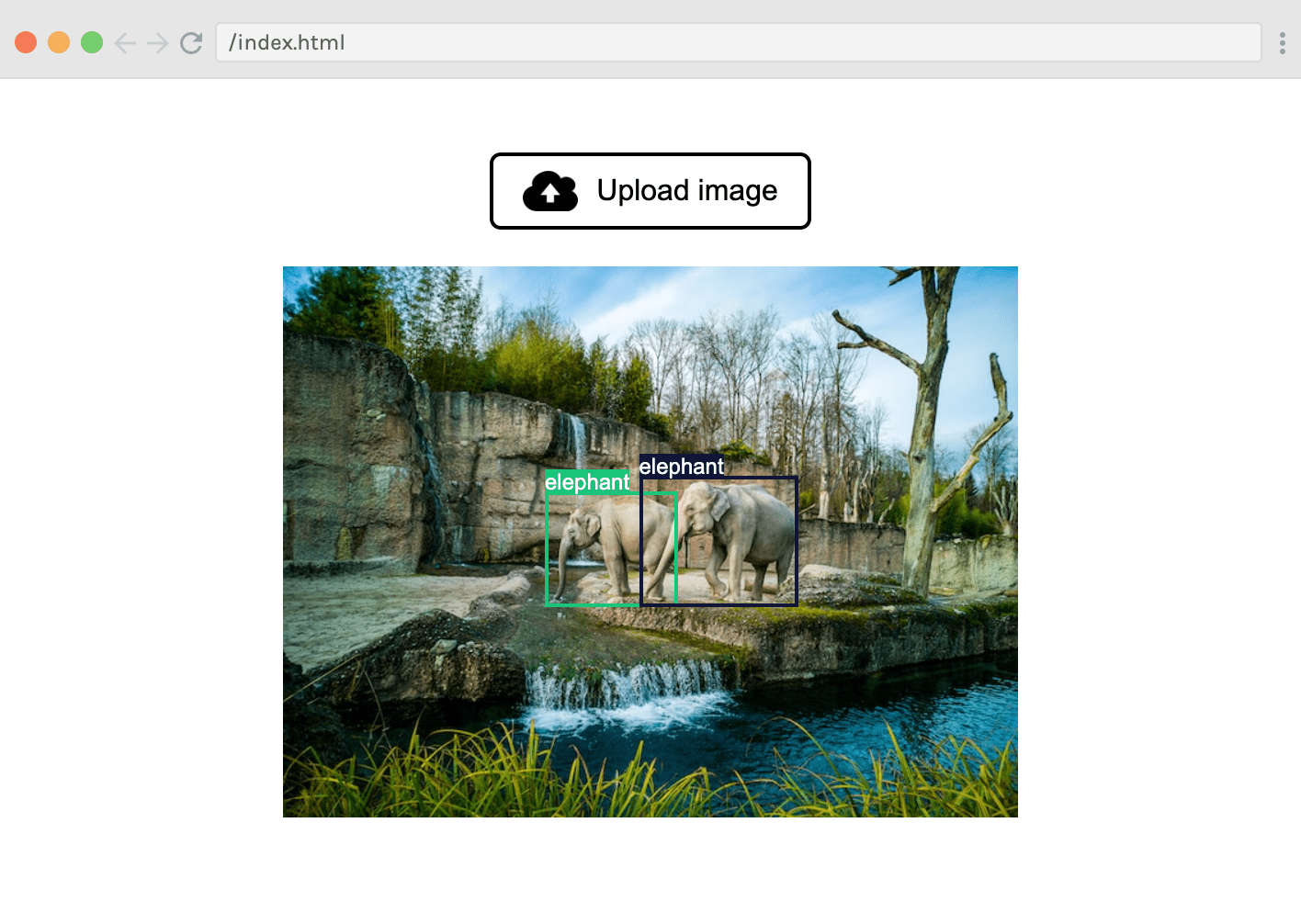

| 5 | +Here's how it works: the user clicks “Upload image” and selects an image using an input dialog. After analysing the image with an object detection model, the predicted bounding boxes are overlaid on top of the image, like this: |

| 6 | + |

| 7 | + |

| 8 | + |

| 9 | +Useful links: |

| 10 | + |

| 11 | +- [Demo site](https://huggingface.co/spaces/Scrimba/vanilla-js-object-detector) |

| 12 | +- [Interactive code walk-through (scrim)](https://scrimba.com/scrim/cKm9bDAg) |

| 13 | +- [Source code](https://github.com/xenova/transformers.js/tree/main/examples/vanilla-js) |

| 14 | + |

| 15 | +## Step 1: HTML and CSS setup |

| 16 | + |

| 17 | +Before we start building with Transformers.js, we first need to lay the groundwork with some markup and styling. Create an `index.html` file with a basic HTML skeleton, and add the following `<main>` tag to the `<body>`: |

| 18 | + |

| 19 | +```html |

| 20 | +<main class="container"> |

| 21 | + <label class="custom-file-upload"> |

| 22 | + <input id="file-upload" type="file" accept="image/*" /> |

| 23 | + <img class="upload-icon" src="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/upload-icon.png" /> |

| 24 | + Upload image |

| 25 | + </label> |

| 26 | + <div id="image-container"></div> |

| 27 | + <p id="status"></p> |

| 28 | +</main> |

| 29 | +``` |

| 30 | + |

| 31 | +<details> |

| 32 | + |

| 33 | +<summary>Click here to see a breakdown of this markup.</summary> |

| 34 | + |

| 35 | +We’re adding an `<input>` element with `type="file"` that accepts images. This allows the user to select an image from their local file system using a popup dialog. The default styling for this element looks quite bad, so let's add some styling. The easiest way to achieve this is to wrap the `<input>` element in a `<label>`, hide the input, and then style the label as a button. |

| 36 | + |

| 37 | +We’re also adding an empty `<div>` container for displaying the image, plus an empty `<p>` tag that we'll use to give status updates to the user while we download and run the model, since both of these operations take some time. |

| 38 | + |

| 39 | +</details> |

| 40 | + |

| 41 | +Next, add the following CSS rules to a `style.css` file and link it to the HTML: |

| 42 | + |

| 43 | +```css |

| 44 | +html, |

| 45 | +body { |

| 46 | + font-family: Arial, Helvetica, sans-serif; |

| 47 | +} |

| 48 | + |

| 49 | +.container { |

| 50 | + margin: 40px auto; |

| 51 | + width: max(50vw, 400px); |

| 52 | + display: flex; |

| 53 | + flex-direction: column; |

| 54 | + align-items: center; |

| 55 | +} |

| 56 | + |

| 57 | +.custom-file-upload { |

| 58 | + display: flex; |

| 59 | + align-items: center; |

| 60 | + cursor: pointer; |

| 61 | + gap: 10px; |

| 62 | + border: 2px solid black; |

| 63 | + padding: 8px 16px; |

| 64 | + cursor: pointer; |

| 65 | + border-radius: 6px; |

| 66 | +} |

| 67 | + |

| 68 | +#file-upload { |

| 69 | + display: none; |

| 70 | +} |

| 71 | + |

| 72 | +.upload-icon { |

| 73 | + width: 30px; |

| 74 | +} |

| 75 | + |

| 76 | +#image-container { |

| 77 | + width: 100%; |

| 78 | + margin-top: 20px; |

| 79 | + position: relative; |

| 80 | +} |

| 81 | + |

| 82 | +#image-container>img { |

| 83 | + width: 100%; |

| 84 | +} |

| 85 | +``` |

| 86 | + |

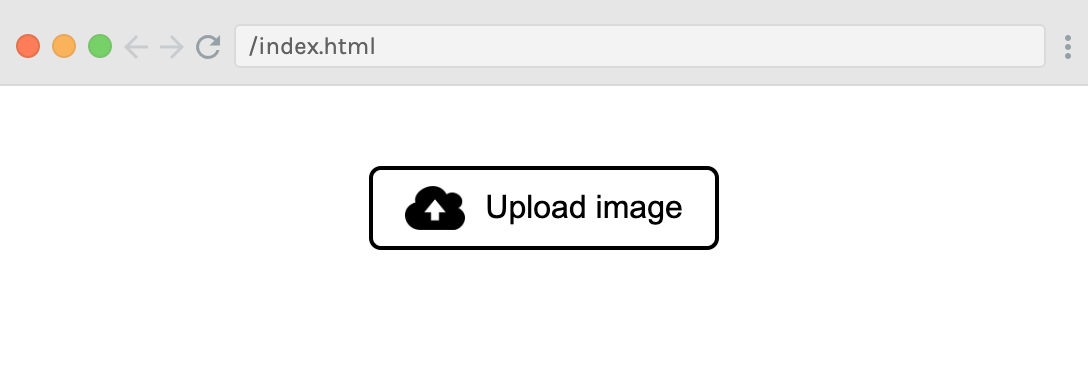

| 87 | +Here's how the UI looks at this point: |

| 88 | + |

| 89 | + |

| 90 | + |

| 91 | +## Step 2: JavaScript setup |

| 92 | + |

| 93 | +With the *boring* part out of the way, let's start writing some JavaScript code! Create a file called `index.js` and link to it in `index.html` by adding the following to the end of the `<body>`: |

| 94 | + |

| 95 | +```html |

| 96 | +<script src="./index.js" type="module"></script> |

| 97 | +``` |

| 98 | + |

| 99 | +<Tip> |

| 100 | + |

| 101 | +The `type="module"` attribute is important, as it turns our file into a [JavaScript module](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide/Modules), meaning that we’ll be able to use imports and exports. |

| 102 | + |

| 103 | +</Tip> |

| 104 | + |

| 105 | +Moving into `index.js`, let's import Transformers.js by adding the following line to the top of the file: |

| 106 | + |

| 107 | +```js |

| 108 | +import { pipeline, env } from "https://cdn.jsdelivr.net/npm/@xenova/transformers"; |

| 109 | +``` |

| 110 | + |

| 111 | +Since we will be downloading the model from the Hugging Face Hub, we can skip the local model check by setting: |

| 112 | + |

| 113 | +```js |

| 114 | +env.allowLocalModels = false; |

| 115 | +``` |

| 116 | + |

| 117 | +Next, let's create references to the various DOM elements we will access later: |

| 118 | + |

| 119 | +```js |

| 120 | +const fileUpload = document.getElementById("file-upload"); |

| 121 | +const imageContainer = document.getElementById("image-container"); |

| 122 | +const status = document.getElementById("status"); |

| 123 | +``` |

| 124 | + |

| 125 | +## Step 3: Create an object detection pipeline |

| 126 | + |

| 127 | +We’re finally ready to create our object detection pipeline! As a reminder, a [pipeline](./pipelines) is a high-level interface provided by the library to perform a specific task. In our case, we will instantiate an object detection pipeline with the `pipeline()` helper function. |

| 128 | + |

| 129 | +Since this can take some time (especially the first time when we have to download the ~40MB model), we first update the `status` paragraph so that the user knows that we’re about to load the model. |

| 130 | + |

| 131 | +```js |

| 132 | +status.textContent = "Loading model..."; |

| 133 | +``` |

| 134 | + |

| 135 | +<Tip> |

| 136 | + |

| 137 | +To keep this tutorial simple, we'll be loading and running the model in the main (UI) thread. This is not recommended for production applications: page scripts run on a single main thread, so the UI will freeze while these operations are in progress. To overcome this, you can use a [web worker](https://developer.mozilla.org/en-US/docs/Web/API/Web_Workers_API/Using_web_workers) to download and run the model in the background. However, we’re not going to cover that in this tutorial... |

| 138 | + |

| 139 | +</Tip> |

| 140 | + |

| 141 | + |

| 142 | +We can now call the `pipeline()` function that we imported at the top of our file, to create our object detection pipeline: |

| 143 | + |

| 144 | +```js |

| 145 | +const detector = await pipeline("object-detection", "Xenova/detr-resnet-50"); |

| 146 | +``` |

| 147 | + |

| 148 | +We’re passing two arguments into the `pipeline()` function: (1) task and (2) model. |

| 149 | + |

| 150 | +1. The first tells Transformers.js what kind of task we want to perform. In our case, that is `object-detection`, but there are many other tasks that the library supports, including `text-generation`, `sentiment-analysis`, `summarization`, or `automatic-speech-recognition`. See [here](https://huggingface.co/docs/transformers.js/pipelines#tasks) for the full list. |

| 151 | + |

| 152 | +2. The second argument specifies which model we would like to use to solve the given task. We will use [`Xenova/detr-resnet-50`](https://huggingface.co/Xenova/detr-resnet-50), as it is a relatively small (~40MB) but powerful model for detecting objects in an image. |

| 153 | + |

| 154 | +Once the function returns, we’ll tell the user that the app is ready to be used. |

| 155 | + |

| 156 | +```js |

| 157 | +status.textContent = "Ready"; |

| 158 | +``` |
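
If loading fails (for example, because the network drops), the status text would stay at "Loading model..." indefinitely. Below is a minimal sketch of one way to handle this, assuming a hypothetical `runWithStatus` helper that is not part of Transformers.js. The `task` and `setStatus` parameters are placeholders, so the snippet also runs outside the browser.

```js
// Hypothetical helper (not part of Transformers.js): wraps a slow async step
// and reports its state through a caller-supplied setStatus callback.
async function runWithStatus(task, setStatus, { pending, done }) {
  setStatus(pending);
  try {
    const result = await task();
    setStatus(done);
    return result;
  } catch (err) {
    // Surface the failure instead of leaving the pending message on screen
    setStatus("Error: " + err.message);
    throw err;
  }
}

// In the app, this might be used as follows (names taken from the tutorial):
// const detector = await runWithStatus(
//   () => pipeline("object-detection", "Xenova/detr-resnet-50"),
//   (text) => { status.textContent = text; },
//   { pending: "Loading model...", done: "Ready" },
// );
```

The same wrapper could be reused for the inference step later on, with different messages.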

| 159 | + |

| 160 | +## Step 4: Create the image uploader |

| 161 | + |

| 162 | +The next step is to support uploading/selection of images. To achieve this, we will listen for "change" events from the `fileUpload` element. In the callback function, we use a `FileReader()` to read the contents of the image if one is selected (and nothing otherwise). |

| 163 | + |

| 164 | +```js |

| 165 | +fileUpload.addEventListener("change", function (e) { |

| 166 | + const file = e.target.files[0]; |

| 167 | + if (!file) { |

| 168 | + return; |

| 169 | + } |

| 170 | + |

| 171 | + const reader = new FileReader(); |

| 172 | + |

| 173 | + // Set up a callback when the file is loaded |

| 174 | + reader.onload = function (e2) { |

| 175 | + imageContainer.innerHTML = ""; |

| 176 | + const image = document.createElement("img"); |

| 177 | + image.src = e2.target.result; |

| 178 | + imageContainer.appendChild(image); |

| 179 | + // detect(image); // Uncomment this line to run the model |

| 180 | + }; |

| 181 | + reader.readAsDataURL(file); |

| 182 | +}); |

| 183 | +``` |

| 184 | + |

| 185 | +Once the image has been loaded into the browser, the `reader.onload` callback function will be invoked. In it, we append the new `<img>` element to the `imageContainer` to be displayed to the user. |
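
Note that `accept="image/*"` only filters what the file dialog offers by default; the user can still select a different file type. As a minimal sketch, a guard like the one below could run before the `FileReader` is created. The `isImageFile` helper and the status message are hypothetical and not part of the tutorial's source code.

```js
// Hypothetical helper: returns true if the selected file looks like an image.
// `file` is expected to be a File from e.target.files; only `type` is read.
function isImageFile(file) {
  if (!file) return false;
  // File.type holds the MIME type reported by the browser, e.g. "image/png"
  return file.type.startsWith("image/");
}

// Possible use inside the "change" listener:
// if (!isImageFile(file)) {
//   status.textContent = "Please select an image file";
//   return;
// }
```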

| 186 | + |

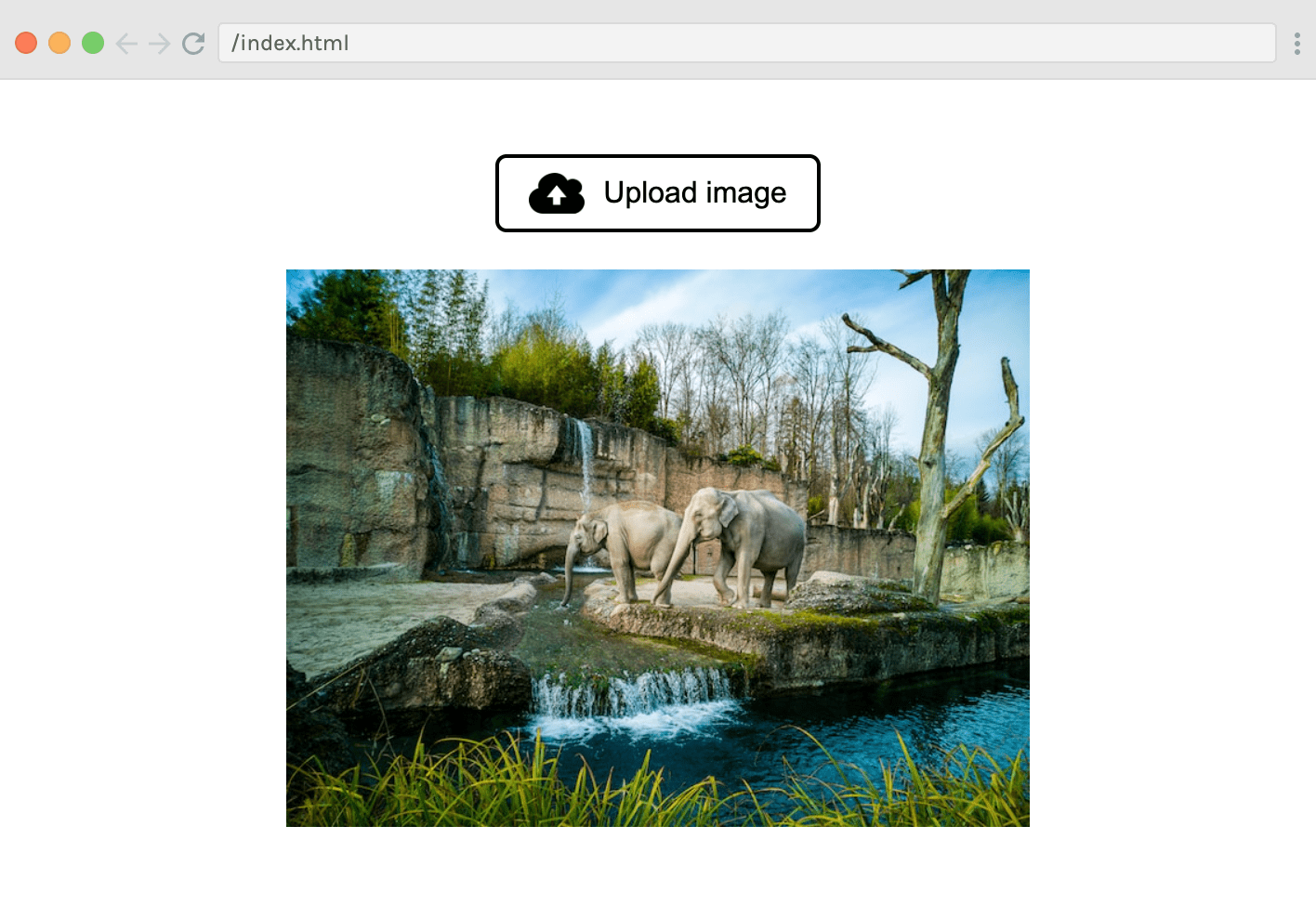

| 187 | +Don’t worry about the `detect(image)` function call (which is commented out) - we will explain it later! For now, try to run the app and upload an image to the browser. You should see your image displayed under the button like this: |

| 188 | + |

| 189 | + |

| 190 | + |

| 191 | +## Step 5: Run the model |

| 192 | + |

| 193 | +We’re finally ready to start interacting with Transformers.js! Let’s uncomment the `detect(image)` function call from the snippet above. Then we’ll define the function itself: |

| 194 | + |

| 195 | +```js |

| 196 | +async function detect(img) { |

| 197 | + status.textContent = "Analysing..."; |

| 198 | + const output = await detector(img.src, { |

| 199 | + threshold: 0.5, |

| 200 | + percentage: true, |

| 201 | + }); |

| 202 | + status.textContent = ""; |

| 203 | + console.log("output", output); |

| 204 | + // ... |

| 205 | +} |

| 206 | +``` |

| 207 | + |

| 208 | +<Tip> |

| 209 | + |

| 210 | +NOTE: The `detect` function needs to be asynchronous, since we’ll `await` the result of the model. |

| 211 | + |

| 212 | +</Tip> |

| 213 | + |

| 214 | +Once we’ve updated the `status` to "Analysing", we’re ready to perform *inference*, which simply means to run the model with some data. This is done via the `detector()` function that was returned from `pipeline()`. The first argument we’re passing is the image data (`img.src`). |

| 215 | + |

| 216 | +The second argument is an options object: |

| 217 | + - We set the `threshold` property to `0.5`. This means that we want the model to be at least 50% confident before claiming it has detected an object in the image. The lower the threshold, the more objects it'll detect (but may misidentify objects); the higher the threshold, the fewer objects it'll detect (but may miss objects in the scene). |

| 218 | + - We also specify `percentage: true`, which means that we want the bounding box for the objects to be returned as percentages (instead of pixels). |
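
To make the effect of `threshold` concrete, here is a small sketch that mimics the filtering on made-up scores. The pipeline applies the threshold internally, so this is only an illustration: the `applyThreshold` helper and the sample data are hypothetical, and the library's exact comparison may differ.

```js
// Illustrative candidate detections (made-up scores, not real model output)
const candidates = [
  { label: "elephant", score: 0.98 },
  { label: "elephant", score: 0.91 },
  { label: "bird", score: 0.42 },
];

// Keep only the predictions whose confidence reaches the threshold
function applyThreshold(detections, threshold) {
  return detections.filter((d) => d.score >= threshold);
}

console.log(applyThreshold(candidates, 0.5).length); // 2
console.log(applyThreshold(candidates, 0.3).length); // 3 (the low-confidence "bird" is now included)
console.log(applyThreshold(candidates, 0.95).length); // 1 (the second elephant is now missed)
```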

| 219 | + |

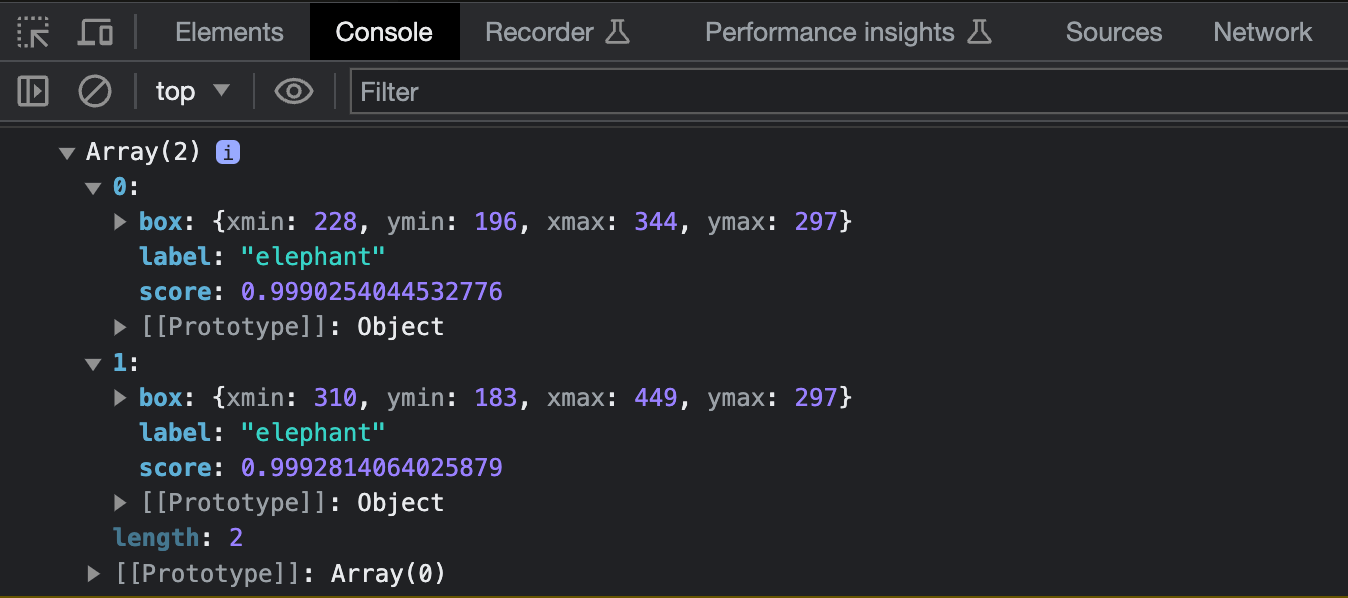

| 220 | +If you now try to run the app and upload an image, you should see the following output logged to the console: |

| 221 | + |

| 222 | + |

| 223 | + |

| 224 | +In the example above, we uploaded an image of two elephants, so the `output` variable holds an array with two objects, each containing a `label` (the string “elephant”), a `score` (indicating the model's confidence in its prediction) and a `box` object (representing the bounding box of the detected entity). |
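
As a rough sketch of that structure, the snippet below uses made-up values in the same shape and counts the detections per label. The numbers and the `countLabels` helper are illustrative assumptions, not real model output or library API.

```js
// Illustrative output for an image with two elephants (values are made up).
// With percentage: true, box coordinates are fractions of the image size.
const output = [
  { label: "elephant", score: 0.99, box: { xmin: 0.05, ymin: 0.3, xmax: 0.5, ymax: 0.9 } },
  { label: "elephant", score: 0.97, box: { xmin: 0.45, ymin: 0.25, xmax: 0.95, ymax: 0.88 } },
];

// Hypothetical helper: count how many detections share each label
function countLabels(detections) {
  const counts = {};
  for (const { label } of detections) {
    counts[label] = (counts[label] ?? 0) + 1;
  }
  return counts;
}

console.log(countLabels(output)); // { elephant: 2 }
```

A summary like this could also be written to the `status` paragraph instead of clearing it after inference.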

| 225 | + |

| 226 | +## Step 6: Render the boxes |

| 227 | + |

| 228 | +The final step is to display the `box` coordinates as rectangles around each of the elephants. |

| 229 | + |

| 230 | +At the end of our `detect()` function, we’ll run the `renderBox` function on each object in the `output` array, using `.forEach()`. |

| 231 | + |

| 232 | +```js |

| 233 | +output.forEach(renderBox); |

| 234 | +``` |

| 235 | + |

| 236 | +Here’s the code for the `renderBox()` function with comments to help you understand what’s going on: |

| 237 | + |

| 238 | +```js |

| 239 | +// Render a bounding box and label on the image |

| 240 | +function renderBox({ box, label }) { |

| 241 | + const { xmax, xmin, ymax, ymin } = box; |

| 242 | + |

| 243 | + // Generate a random color for the box |

| 244 | + const color = "#" + Math.floor(Math.random() * 0xffffff).toString(16).padStart(6, 0); |

| 245 | + |

| 246 | + // Draw the box |

| 247 | + const boxElement = document.createElement("div"); |

| 248 | + boxElement.className = "bounding-box"; |

| 249 | + Object.assign(boxElement.style, { |

| 250 | + borderColor: color, |

| 251 | + left: 100 * xmin + "%", |

| 252 | + top: 100 * ymin + "%", |

| 253 | + width: 100 * (xmax - xmin) + "%", |

| 254 | + height: 100 * (ymax - ymin) + "%", |

| 255 | + }); |

| 256 | + |

| 257 | + // Draw the label |

| 258 | + const labelElement = document.createElement("span"); |

| 259 | + labelElement.textContent = label; |

| 260 | + labelElement.className = "bounding-box-label"; |

| 261 | + labelElement.style.backgroundColor = color; |

| 262 | + |

| 263 | + boxElement.appendChild(labelElement); |

| 264 | + imageContainer.appendChild(boxElement); |

| 265 | +} |

| 266 | +``` |
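
The positioning arithmetic in `renderBox()` can be checked in isolation. The sketch below extracts it into a hypothetical `boxToStyle` helper (not part of the tutorial's source) and runs it on an illustrative box.

```js
// Convert a fractional bounding box into CSS percentage strings,
// mirroring the arithmetic used in renderBox()
function boxToStyle({ xmin, ymin, xmax, ymax }) {
  return {
    left: 100 * xmin + "%",
    top: 100 * ymin + "%",
    width: 100 * (xmax - xmin) + "%",
    height: 100 * (ymax - ymin) + "%",
  };
}

// Illustrative box covering the right half and lower three quarters of the image
console.log(boxToStyle({ xmin: 0.5, ymin: 0.25, xmax: 1, ymax: 1 }));
// { left: '50%', top: '25%', width: '50%', height: '75%' }
```

Because the values are percentages resolved against `#image-container` (which is `position: relative`), the boxes stay aligned with the image when the page is resized.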

| 267 | + |

| 268 | +The bounding box and label span also need some styling, so add the following to the `style.css` file: |

| 269 | + |

| 270 | +```css |

| 271 | +.bounding-box { |

| 272 | + position: absolute; |

| 273 | + box-sizing: border-box; |

| 274 | +} |

| 275 | + |

| 276 | +.bounding-box-label { |

| 277 | + position: absolute; |

| 278 | + color: white; |

| 279 | + font-size: 12px; |

| 280 | +} |

| 281 | +``` |

| 282 | + |

| 283 | +**And that’s it!** |

| 284 | + |

| 285 | +You've now built your own fully functional AI application that detects objects in images and runs completely in your browser: no external server, APIs, or build tools. Pretty cool! 🥳 |

| 286 | + |

| 287 | + |

| 288 | + |

| 289 | +The app is live at the following URL: [https://huggingface.co/spaces/Scrimba/vanilla-js-object-detector](https://huggingface.co/spaces/Scrimba/vanilla-js-object-detector) |
