
[SPARK-25521][SQL] Job id showing null in the logs when insert into command Job is finished.

## What changes were proposed in this pull request?

As part of the insert command, `FileFormatWriter` creates a job context to handle the write operation. While initializing the job context through the `setupJob()` API in `HadoopMapReduceCommitProtocol`, we set the job ID in the `JobContext` configuration. Because `FileFormatWriter` reads the job ID directly from the MapReduce `JobContext`, the ID comes back as null when the log line is written. As a solution, we read the job ID from the configuration of the MapReduce `JobContext` instead.
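The mismatch described above can be reproduced outside Spark with a minimal sketch against the Hadoop MapReduce API. This is not the patch itself: the object `JobIdLogSketch`, its helper methods, the sample `JobID`, and the configuration key `mapreduce.job.id` are assumptions made for illustration, and the local `setupJob` is only a stand-in for what the commit protocol does.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.mapreduce.{Job, JobContext, JobID}

// Hypothetical sketch; names here are illustrative, not Spark's.
object JobIdLogSketch {
  // Assumed configuration key under which the job ID is stored.
  val JobIdKey = "mapreduce.job.id"

  // Stand-in for the commit protocol's setupJob(): it records the job ID
  // in the configuration only and leaves the context's own ID untouched.
  def setupJob(jobContext: JobContext, jobId: JobID): Unit =
    jobContext.getConfiguration.set(JobIdKey, jobId.toString)

  // What the log line saw before the change: the ID held by the context.
  def idFromContext(jobContext: JobContext): String =
    String.valueOf(jobContext.getJobID)

  // What the description proposes: read the ID back from the configuration.
  def idFromConf(jobContext: JobContext): String =
    jobContext.getConfiguration.get(JobIdKey)

  def main(args: Array[String]): Unit = {
    // A Job that was never submitted has no JobID of its own.
    val job = Job.getInstance(new Configuration())
    setupJob(job, new JobID("20180927", 0))

    println(s"Job ${idFromContext(job)} committed.") // ID taken from the context
    println(s"Job ${idFromConf(job)} committed.")    // ID taken from the configuration
  }
}
```

Running this needs the Hadoop MapReduce client libraries on the classpath. The first line logs a null job ID because the context never received one, while the second reports the value stored by `setupJob`.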

## How was this patch tested?

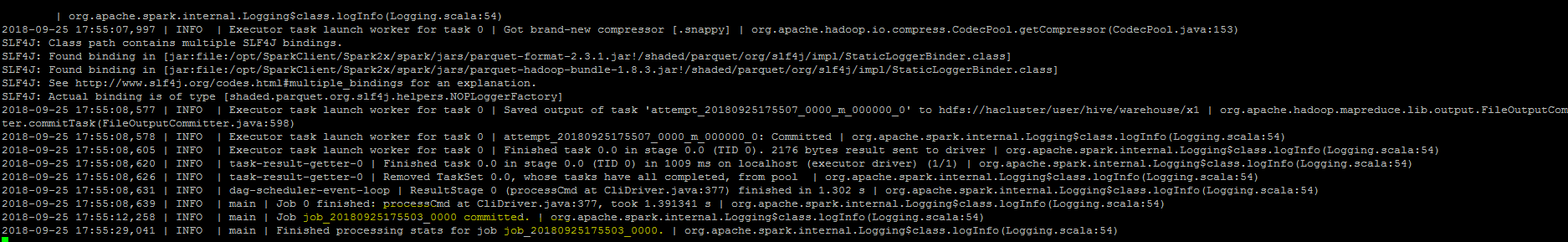

Manually verified the logs after the changes.

Closes apache#22572 from sujith71955/master_log_issue.

Authored-by: s71955 <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
