
**Description:** This node calculates an ideal image size for the first pass of a multi-pass upscale. The aim is to avoid the duplication (repeated subjects or features) that results from choosing a size larger than the model can handle.
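As a rough illustration of the idea, the sketch below keeps the final image's aspect ratio and scales it to about the model's native pixel count (assumed here to be `model_native` squared, e.g. 512 for SD 1.5 or 1024 for SDXL), rounding each side to a multiple of 8. The function name and this heuristic are assumptions, not the node's actual code.

```python
import math
from typing import Tuple


def ideal_first_pass_size(final_width: int, final_height: int,
                          model_native: int = 512, multiple: int = 8) -> Tuple[int, int]:
    """Hypothetical heuristic: final aspect ratio, roughly the model's native pixel count."""
    aspect = final_width / final_height
    target_area = model_native * model_native
    # Solve w * h = target_area subject to w / h = aspect.
    height = math.sqrt(target_area / aspect)
    width = height * aspect
    # Round each side to the nearest multiple (latent-friendly sizes), never below one step.
    width = max(multiple, round(width / multiple) * multiple)
    height = max(multiple, round(height / multiple) * multiple)
    return width, height
```

For example, a 2048x1024 final size with `model_native=512` gives a 728x360 first pass.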

**Description:** This node adds a film grain effect to the input image based on the weights, seeds, and blur radii parameters. It works with RGB input images only.
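A minimal sketch of one way such a grain effect could work, assuming each (weight, seed, blur radius) triple describes one layer of seeded Gaussian noise that is blurred and then added to the image. The function name, the noise scale, and the monochrome grain are illustrative choices, not the node's implementation.

```python
import numpy as np
from PIL import Image, ImageFilter


def add_film_grain(image, weights, seeds, blur_radii):
    """Add one layer of seeded, blurred Gaussian noise per (weight, seed, radius) triple."""
    if image.mode != "RGB":
        raise ValueError("this sketch only accepts RGB images")
    base = np.asarray(image, dtype=np.float32)
    height, width = base.shape[:2]
    grain = np.zeros((height, width), dtype=np.float32)
    for weight, seed, radius in zip(weights, seeds, blur_radii):
        rng = np.random.default_rng(seed)
        # Monochrome noise around mid-grey, stored as an 8-bit "L" image so Pillow can blur it.
        noise = rng.normal(loc=128.0, scale=48.0, size=(height, width))
        layer = Image.fromarray(np.clip(noise, 0, 255).astype(np.uint8))
        if radius > 0:
            layer = layer.filter(ImageFilter.GaussianBlur(radius))
        # Re-centre on zero and add this layer with its weight.
        grain += weight * (np.asarray(layer, dtype=np.float32) - 128.0)
    # The same grain is added to all three channels, then clipped back to 8 bits.
    out = np.clip(base + grain[..., None], 0, 255).astype(np.uint8)
    return Image.fromarray(out)
```

Because each layer has its own seed, the same parameters always reproduce the same grain.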

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.


This node works best with SDXL models, especially as the style can be described independently of the LLM's output.


--------------------------------


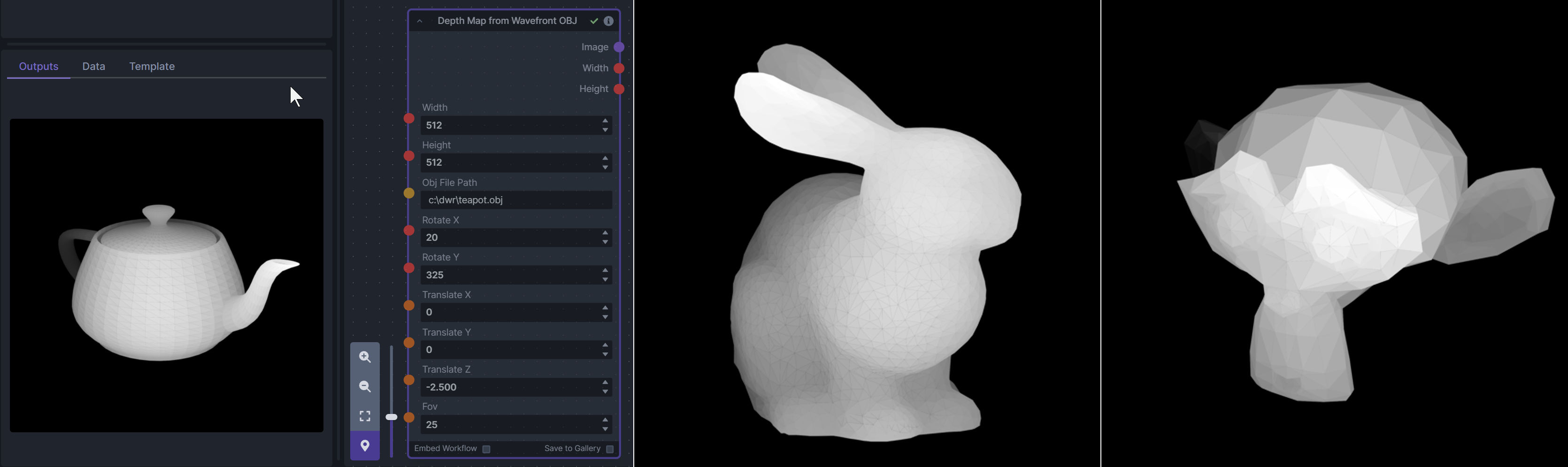

### Depth Map from Wavefront OBJ


**Description:** Render depth maps from triangulated Wavefront .obj files using this simple 3D renderer, which uses numpy and matplotlib to compute and color the scene. Simple parameters let you change the FOV, camera position, and model orientation.


To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.
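The two constraints above (triangles only, one flat shade per triangle from its average depth) can be sketched with numpy as follows. This assumes a camera on the +z axis looking toward -z, nearer triangles drawn brighter, and a painter's-algorithm draw order (farthest first); the function names are hypothetical and the actual matplotlib drawing step is omitted.

```python
import numpy as np


def load_triangles(obj_text):
    """Parse vertices and triangular faces from OBJ text into an (N, 3, 3) array."""
    vertices, faces = [], []
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append([float(x) for x in parts[1:4]])
        elif parts[0] == "f":
            # Entries may be "7", "7/1" or "7/1/3"; only the vertex index is needed.
            indices = [int(p.split("/")[0]) for p in parts[1:]]
            if len(indices) != 3:
                raise ValueError("non-triangular face: triangulate the mesh before exporting")
            faces.append(indices)
    verts = np.array(vertices, dtype=float)
    idx = np.array(faces, dtype=int)
    # OBJ indices are 1-based; negative indices count back from the last vertex.
    idx = np.where(idx > 0, idx - 1, len(verts) + idx)
    return verts[idx]


def triangle_shades(triangles, camera_z):
    """Flat grey level per triangle from its average depth, plus a far-to-near draw order."""
    depth = camera_z - triangles[:, :, 2].mean(axis=1)  # camera on +z, looking toward -z
    near, far = depth.min(), depth.max()
    span = (far - near) or 1.0
    shade = 1.0 - (depth - near) / span  # nearest -> 1.0 (white), farthest -> 0.0 (black)
    order = np.argsort(-depth)  # painter's algorithm: draw the farthest triangles first
    return order, shade
```

Since every triangle gets a single shade, a large triangle that spans a wide depth range is painted one flat grey, which is why subdividing the mesh helps.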


--------------------------------


### Enhance Image (simple adjustments)


**Description:** Boost or reduce color saturation, contrast, brightness, or sharpness of any image at any stage, or invert its colors, with this simple wrapper for Pillow (PIL)'s ImageEnhance module.


Color inversion is toggled with a simple switch, while each of the four enhancer modes is activated by entering a value other than 1 in its corresponding input field. Values less than 1 reduce the corresponding property, while values greater than 1 enhance it.

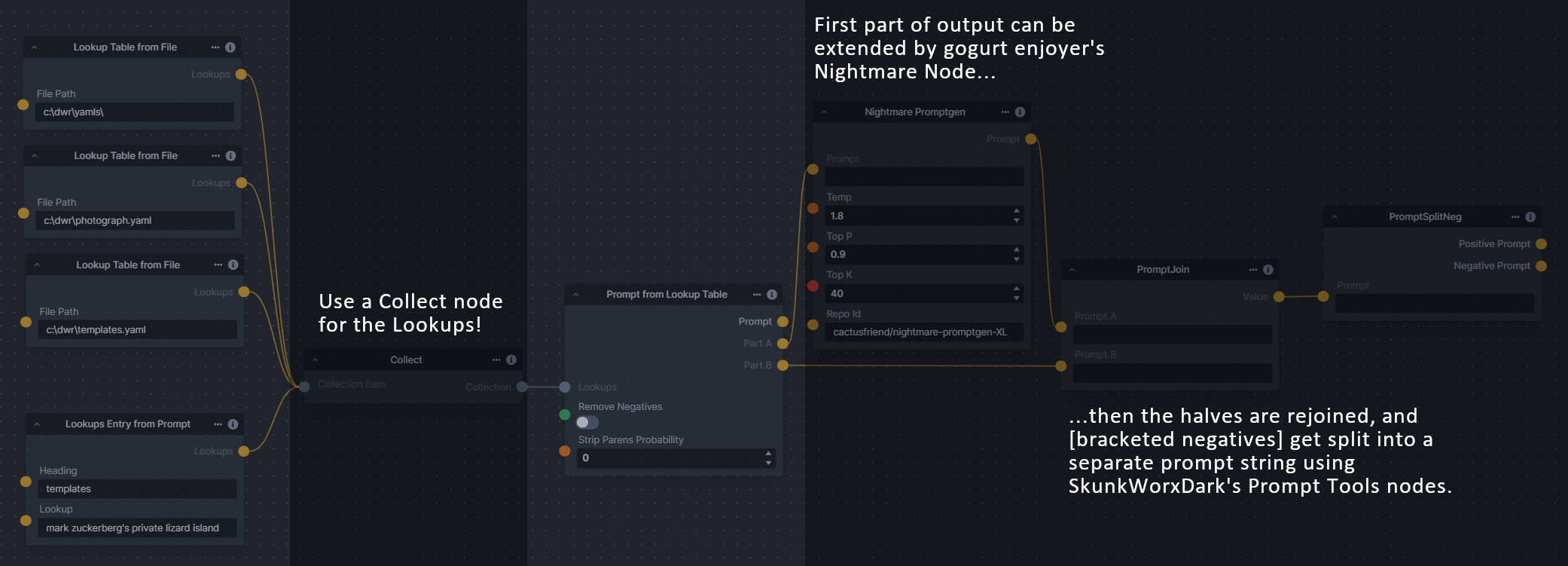
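For reference, the Pillow calls such a wrapper relies on look like this. `ImageEnhance.Color`, `Contrast`, `Brightness`, `Sharpness` and `ImageOps.invert` are real Pillow APIs; the function name, the order in which adjustments are applied, and the conversion to RGB (which drops any alpha channel) are assumptions.

```python
from PIL import Image, ImageEnhance, ImageOps


def enhance_image(image, invert=False, color=1.0, contrast=1.0,
                  brightness=1.0, sharpness=1.0):
    """Apply optional inversion, then each enhancer whose factor is not 1."""
    out = image.convert("RGB")  # ImageOps.invert does not accept RGBA, so alpha is dropped here
    if invert:
        out = ImageOps.invert(out)
    for enhancer, factor in ((ImageEnhance.Color, color),
                             (ImageEnhance.Contrast, contrast),
                             (ImageEnhance.Brightness, brightness),
                             (ImageEnhance.Sharpness, sharpness)):
        if factor != 1.0:  # a factor of 1.0 leaves the image unchanged, so skip it
            out = enhancer(out).enhance(factor)
    return out
```

In Pillow, a factor of 0.0 gives the fully degenerate image (for `Brightness`, solid black), and factors above 1.0 push the property past the original.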

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.


This includes 3 nodes:

- *Lookup Table from File* - loads the "prompt" section of a YAML file (or of every YAML file in a folder) into a JSON-ified dictionary (Lookups output).
- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading.
- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.
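The recursive expansion described above can be sketched in a few lines. The `{name}` nonterminal syntax and the dictionary-of-lists shape of the lookups are hypothetical stand-ins for the nodes' actual file format; the depth limit guards against rules that reference themselves forever.

```python
import random
import re

# Hypothetical nonterminal syntax: a part of speech is written as {name}.
NONTERMINAL = re.compile(r"\{(\w+)\}")


def expand(template: str, lookups: dict, rng: random.Random, max_depth: int = 20) -> str:
    """Recursively replace every {name} with a random alternative from lookups[name]."""

    def replace(match: re.Match) -> str:
        if max_depth <= 0:
            raise RecursionError("grammar did not terminate; check for self-referencing rules")
        choice = rng.choice(lookups[match.group(1)])
        # The chosen alternative may contain further nonterminals, so expand it too.
        return expand(choice, lookups, rng, max_depth - 1)

    return NONTERMINAL.sub(replace, template)


lookups = {
    "subject": ["a {adjective} {animal}", "an old {animal}"],
    "adjective": ["red", "sleepy"],
    "animal": ["cat", "fox"],
}
print(expand("{subject} in a meadow, {adjective} light", lookups, random.Random(42)))
```

Seeding the `random.Random` instance makes a given prompt reproducible, much like a generation seed.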


--------------------------------


### Image and Mask Composition Pack


**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so they can be used with the Seamless node to generate progressively, centering each pass on a different part of the seamless tiling.


This includes 4 nodes:

- *Text Mask (simple 2D)* - creates and positions a white-on-black (or black-on-white) line of text using any font locally available to Invoke.
- *Image Compositor* - takes a subject from an image with a flat backdrop and layers it on another image, using chroma-key or flood-select background removal.
- *Offset Latents* - offsets a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.
- *Offset Image* - offsets an image in the vertical and/or horizontal dimensions, wrapping it around.
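A wrap-around offset amounts to a circular shift. A minimal numpy sketch, assuming images are (H, W, C) arrays and latents are (B, C, H, W); with PyTorch tensors the equivalent call would be `torch.roll(latents, shifts=(y, x), dims=(2, 3))`. The function names are hypothetical.

```python
import numpy as np


def offset_image(pixels, x_offset, y_offset):
    """Shift an (H, W, C) image right by x_offset and down by y_offset, wrapping at the edges."""
    return np.roll(pixels, shift=(y_offset, x_offset), axis=(0, 1))


def offset_latents(latents, x_offset, y_offset):
    """Same wrap-around shift for a (B, C, H, W) latents array; offsets are in latent cells."""
    return np.roll(latents, shift=(y_offset, x_offset), axis=(2, 3))


image = np.arange(6).reshape(2, 3, 1)
# Each row moves one column to the right; the last column wraps around to the front.
print(offset_image(image, 1, 0)[:, :, 0])
```

Because Stable Diffusion's VAE downsamples by a factor of 8, an image offset of 64 pixels corresponds to a latent offset of 8 cells.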

**Description:** This is a set of nodes for calculating the size increments needed for upscaling workflows. Use the *Final Size & Orientation* node to enter your full-size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* to get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note that this node does not output the initial or full-size dimensions: its 1, 2, or 3 outputs are only the intermediate sizes.


A third node, *Random Switch (Integers)*, is also included; it is simply a generic version of *Final Size & Orientation* without the orientation selection.
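One plausible way to choose the intermediate sizes is geometric spacing, so that every upscale pass applies the same scale factor. The sketch below assumes that rule and rounds each side to a multiple of 8; the node's real stepping logic may differ, and `intermediate_sizes` is a hypothetical name. Like the node, it returns only the intermediate sizes, not the initial or final ones.

```python
def intermediate_sizes(initial, final, steps, multiple=8):
    """Return `steps` (width, height) pairs between the initial and final sizes."""
    if steps not in (1, 2, 3):
        raise ValueError("steps must be 1, 2, or 3")
    (w0, h0), (w1, h1) = initial, final
    sizes = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)
        # Geometric interpolation: every pass scales by the same factor.
        w = w0 * (w1 / w0) ** t
        h = h0 * (h1 / h0) ** t
        sizes.append((round(w / multiple) * multiple, round(h / multiple) * multiple))
    return sizes
```

For example, going from 512x512 to 2048x2048 with one intermediate step yields `[(1024, 1024)]`.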

**Description:** A text-to-image node for InvokeAI that renders text in a chosen font. It downloads the font to use (or uses it from the font cache if it is already there). The text is always resized to fit the image size, but this can be controlled with padding. An optional second line of text is supported.
