-

Notifications

You must be signed in to change notification settings - Fork 78

Description

@yuvipanda wrote:

https://archive.analytics.mybinder.org/ looks like it stopped last week

Inspection gave me that the pod was unhealthy and failing to start.

analytics-publisher 0/1 1 0 2y2d

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulled 32m (x2756 over 24d) kubelet Container image "jupyterhub/mybinder.org-analytics-publisher:2020.12.4-0.dev.git.4529.h99dd8b0" already present on machine

Warning BackOff 2m51s (x64858 over 9d) kubelet Back-off restarting failed container

The following logs were seen

Archiving today's events 2022-10-30

Finding blobs with prefix binderhub-events-text/2022/10/30

Traceback (most recent call last):

File "/srv/run.py", line 34, in <module>

archive_events(

File "/srv/archiver.py", line 54, in archive_events

event = json.loads(json.loads(line)["jsonPayload"]["message"])

KeyError: 'message'

Understanding what caused this

The line of code that fails

mybinder.org-deploy/images/analytics-publisher/archiver.py

Lines 38 to 54 in 9ef1ae2

| storage_client = storage.Client() | |

| src_bucket = storage.Bucket(storage_client, source_bucket) | |

| dest_bucket = storage.Bucket(storage_client, destination_bucket) | |

| prefix = log_name + "/" + date.strftime("%Y/%m/%d") | |

| print(f"Finding blobs with prefix {prefix}") | |

| src_blobs = src_bucket.list_blobs(prefix=prefix) | |

| count = 0 | |

| all_events = [] | |

| for src_blob in src_blobs: | |

| with tempfile.TemporaryFile(mode="wb+") as temp: | |

| src_blob.download_to_file(temp) | |

| temp.seek(0) | |

| for line in temp: | |

| event = json.loads(json.loads(line)["jsonPayload"]["message"]) |

The configuration of source bucket for prod

mybinder.org-deploy/config/prod.yaml

Lines 201 to 206 in 9ef1ae2

| analyticsPublisher: | |

| enabled: true | |

| project: binderhub-288415 | |

| destinationBucket: binder-events-archive | |

| events: | |

| sourceBucket: binder-events-raw-export |

The GCP bucket

The uploaded data are lines of JSON objects, where how each object looks has changed.

Before

{

"insertId": "d1iovxfoalkix",

"jsonPayload": {

"message": "{\"timestamp\": \"2022-10-16T00:10:39.415163Z\", \"schema\": \"binderhub.jupyter.org/launch\", \"version\": 5, \"provider\": \"GitHub\", \"spec\": \"spyder-ide/spyder/5.x\", \"ref\": \"a76b6f09d692ebf09049fb83d26c1e7e669fbaa6\", \"status\": \"success\", \"build_token\": true, \"origin\": \"ovh.mybinder.org\"}",

"python_logger": null

},

"logName": "projects/binderhub-288415/logs/binderhub-events-text",

"receiveTimestamp": "2022-10-16T00:10:39.482690331Z",

"resource": {

"labels": {

"project_id": "binderhub-288415"

},

"type": "global"

},

"severity": "INFO",

"timestamp": "2022-10-16T00:10:39.415195Z"

}where the "jsonPayload.message" field is a string of JSON that would parse like this:

{

"timestamp": "2022-10-16T00:10:39.415163Z",

"schema": "binderhub.jupyter.org/launch",

"version": 5,

"provider": "GitHub",

"spec": "spyder-ide/spyder/5.x",

"ref": "a76b6f09d692ebf09049fb83d26c1e7e669fbaa6",

"status": "success",

"build_token": true,

"origin": "ovh.mybinder.org"

}

After

With 2022-10-20 - 11:00 and onwards, a new format started showing that didn't include jsonPayload.message.

{

"insertId": "1de0btlfd1znx0",

"jsonPayload": {

"build_token": true,

"origin": "gesis.mybinder.org",

"provider": "GitLab",

"ref": "18b0c773b170ce45b23547f135e783174e81c18f",

"schema": "binderhub.jupyter.org/launch",

"spec": "comp2421-numerical-computation%2Fbook/master",

"status": "success",

"timestamp": "2022-10-30T00:00:37.591502Z",

"version": 5

},

"labels": {

"python_logger": "binderhub.events"

},

"logName": "projects/binderhub-288415/logs/binderhub-events-text",

"receiveTimestamp": "2022-10-30T00:00:37.641295756Z",

"resource": {

"labels": {

"project_id": "binderhub-288415"

},

"type": "global"

},

"severity": "INFO",

"sourceLocation": {

"file": "<string>",

"function": "emit",

"line": "25"

},

"timestamp": "2022-10-30T00:00:37.591546Z"

}Hypothesis concluded

After 2022-10-20 (about 11:00), the jsonPayload changed its structure, where jsonPayload.message is unpacked directly in jsonPayload it seems.

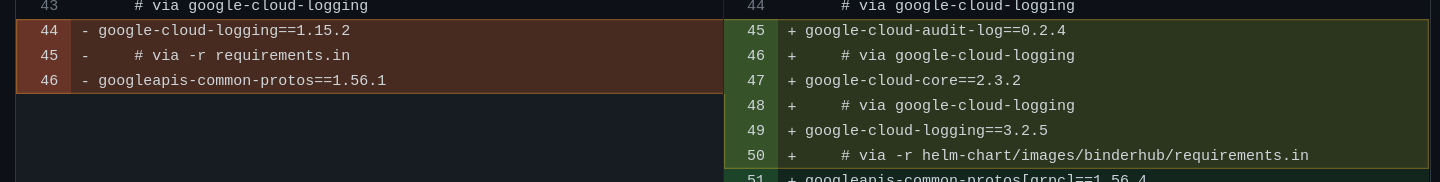

This coincides with #2369, which in turn includes this changes

That in turn comes from jupyterhub/binderhub#1536

Action points

- Open an issue about catching this failure on deployment to staging

mybinder.org-deploy upgrade succeeded even though analytics-publisher didn't startup #2391 - Resolve failure using one option below

- To adjust analytics-publisher parsing uploaded data

We could make analytics-publisher script adjust to the new format, or if needed, attempt to parse either.

analytics-publisher: fix for breaking binderhub change, refreeze dependencies, modernize Dockerfile #2393 - To adjust binderhub uploads data

We could make binderhub, using google's logger library, manage to retain the format we used previously somehow. - Downgrading software in binderhubs image

Using v1 caused a problem bound to happen sooner or later, so working around it would just give us some time at best - and be an effort to do by itself.

- To adjust analytics-publisher parsing uploaded data

I suggest resolving it with adjusting analytics-publisher's parsing of uploaded data in this location

mybinder.org-deploy/images/analytics-publisher/archiver.py

Lines 38 to 54 in 9ef1ae2

| storage_client = storage.Client() | |

| src_bucket = storage.Bucket(storage_client, source_bucket) | |

| dest_bucket = storage.Bucket(storage_client, destination_bucket) | |

| prefix = log_name + "/" + date.strftime("%Y/%m/%d") | |

| print(f"Finding blobs with prefix {prefix}") | |

| src_blobs = src_bucket.list_blobs(prefix=prefix) | |

| count = 0 | |

| all_events = [] | |

| for src_blob in src_blobs: | |

| with tempfile.TemporaryFile(mode="wb+") as temp: | |

| src_blob.download_to_file(temp) | |

| temp.seek(0) | |

| for line in temp: | |

| event = json.loads(json.loads(line)["jsonPayload"]["message"]) |