|

82 | 82 |

|

83 | 83 |  |

84 | 84 |

|

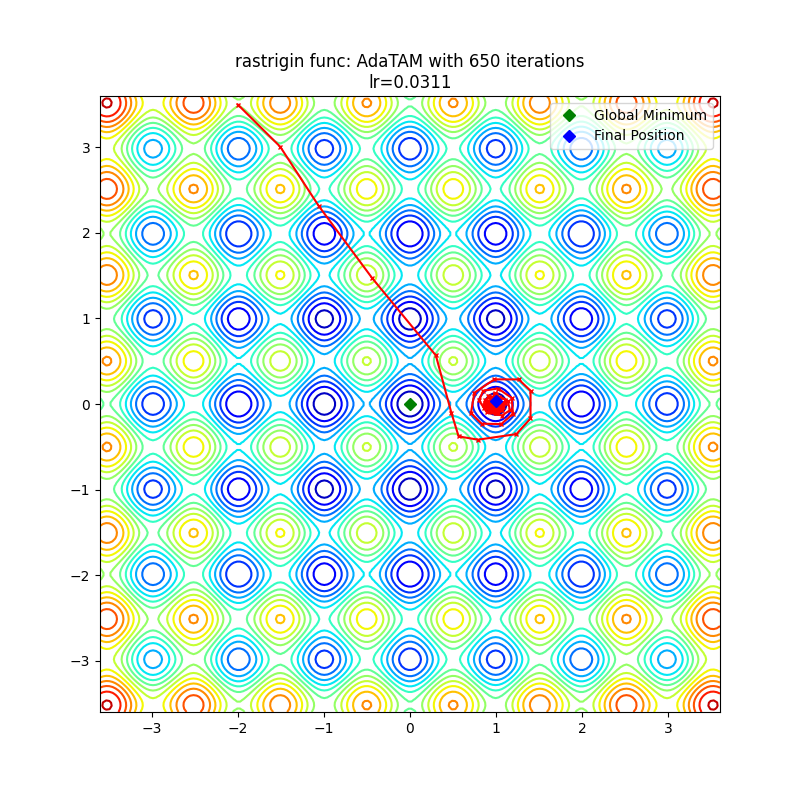

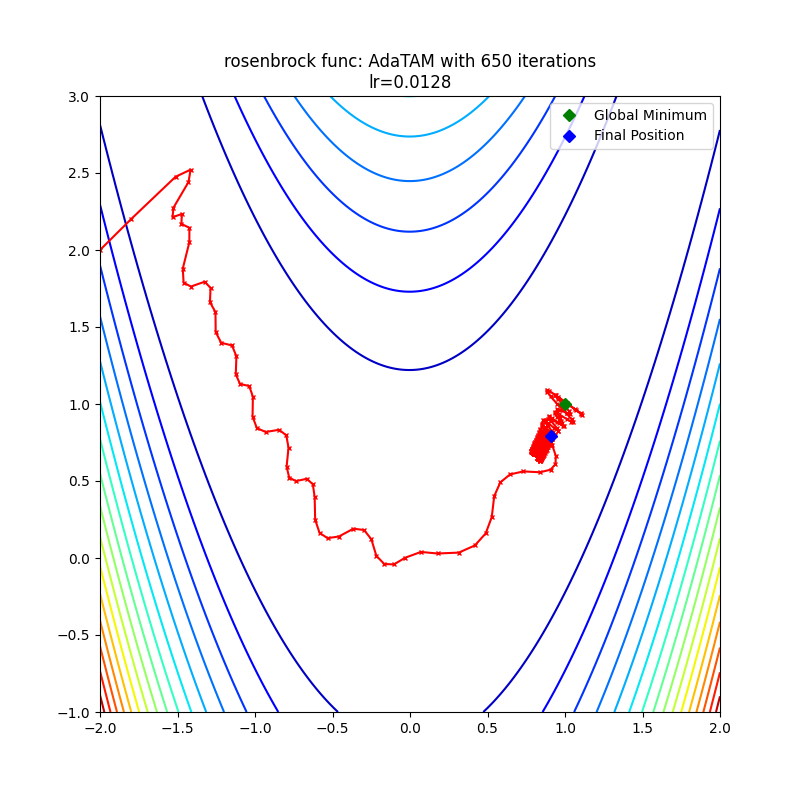

| 85 | +### AdaTAM |

| 86 | + |

| 87 | + |

| 88 | + |

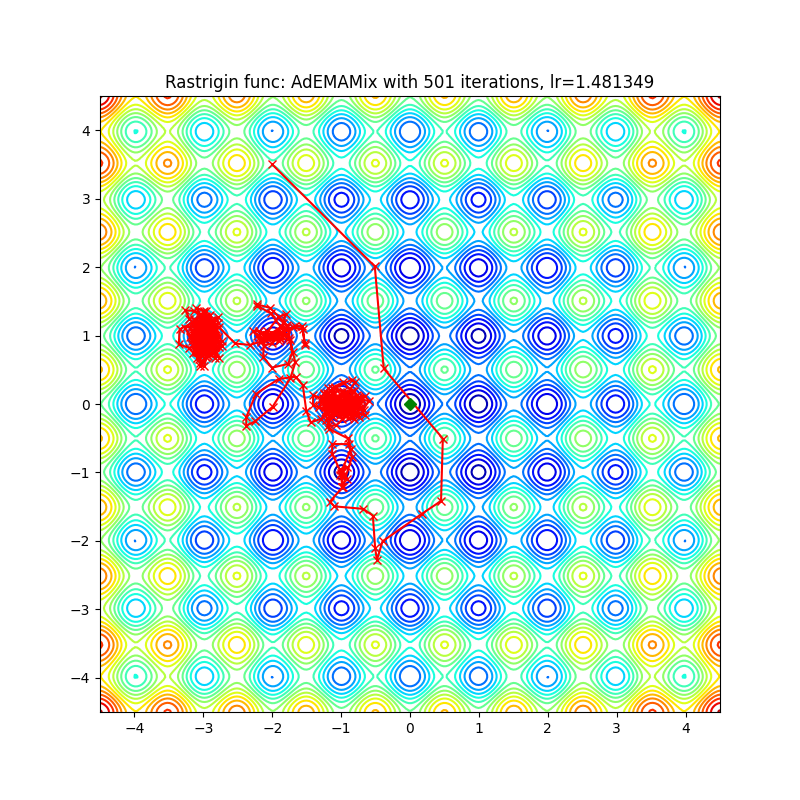

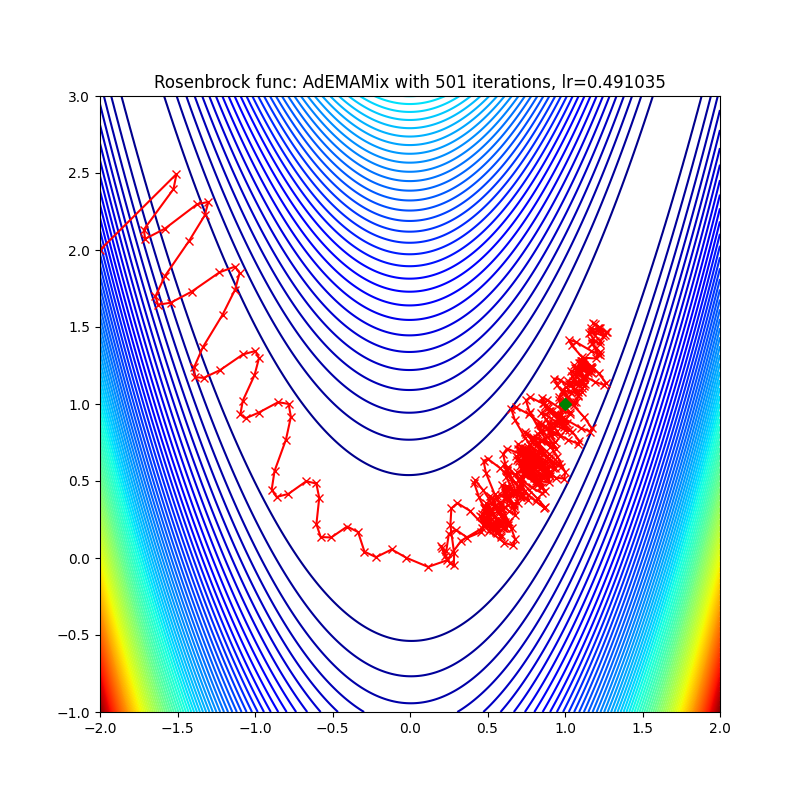

85 | 89 | ### AdEMAMix |

86 | 90 |

|

87 | 91 |  |

|

254 | 258 |

|

255 | 259 |  |

256 | 260 |

|

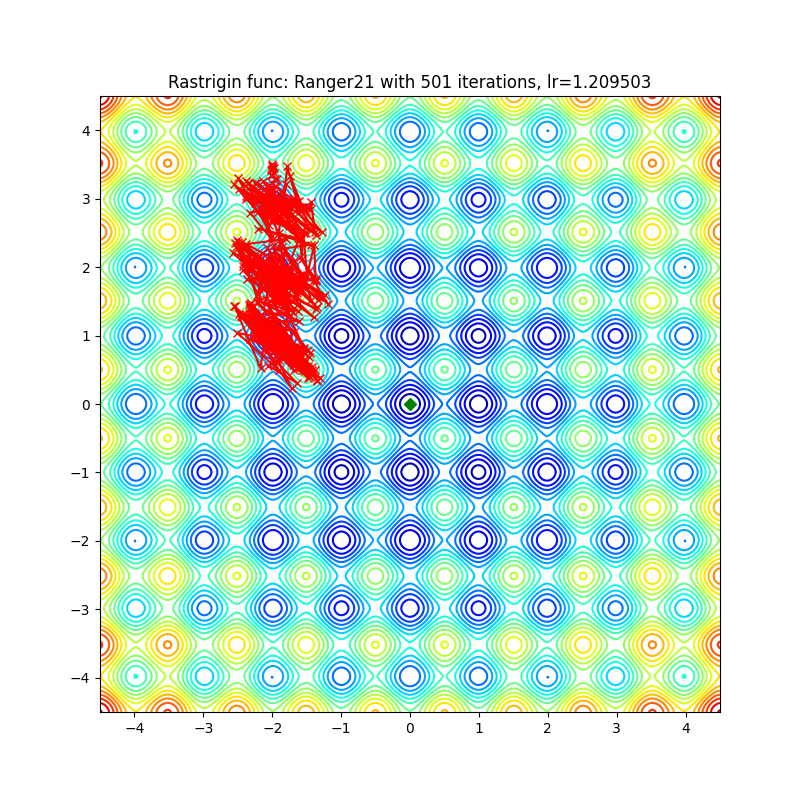

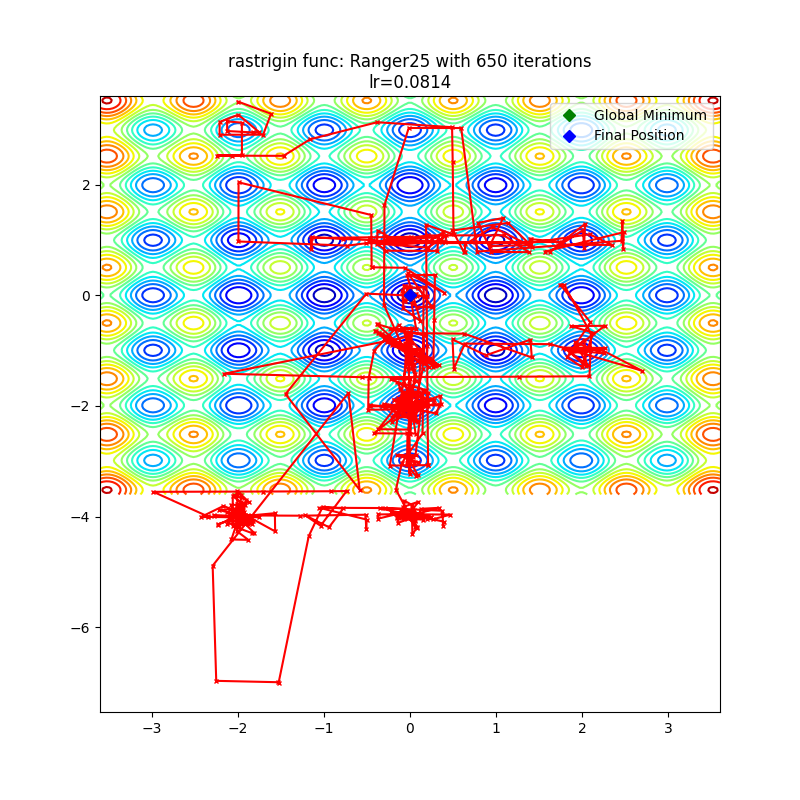

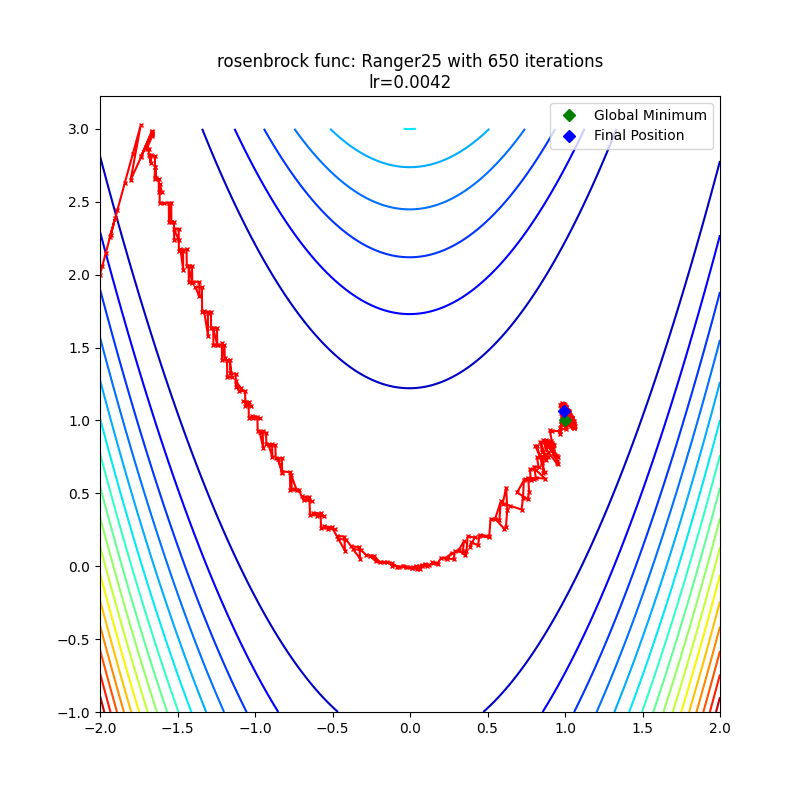

| 261 | +### Ranger25 |

| 262 | + |

| 263 | + |

| 264 | + |

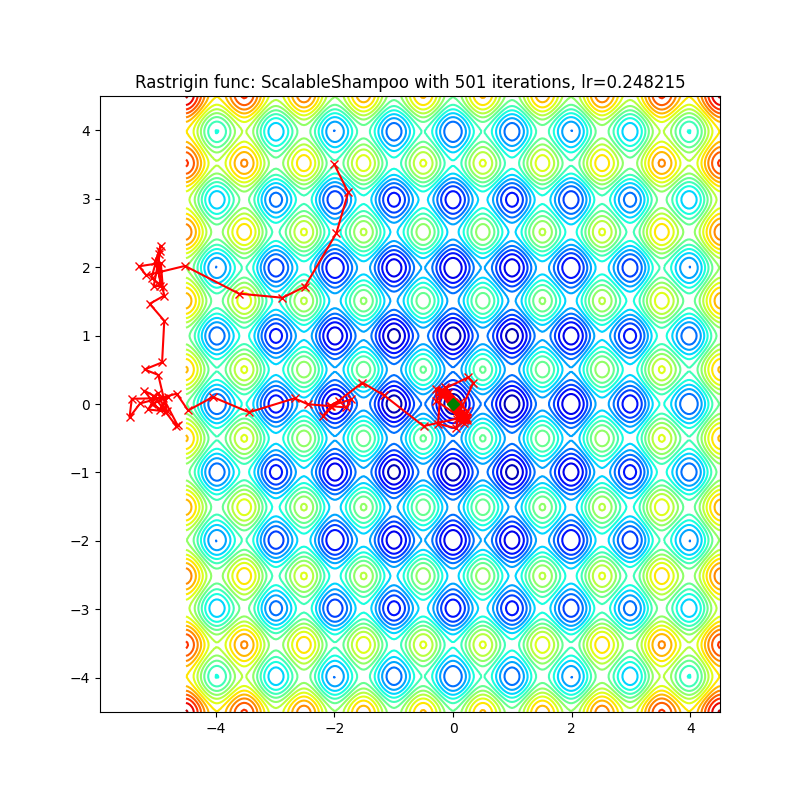

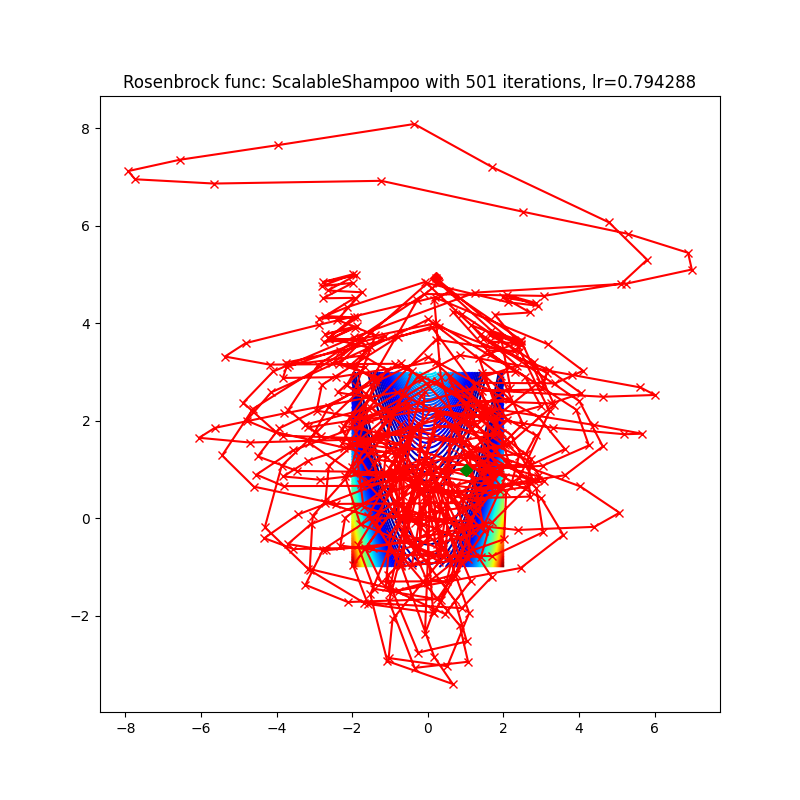

257 | 265 | ### ScalableShampoo |

258 | 266 |

|

259 | 267 |  |

|

306 | 314 |

|

307 | 315 |  |

308 | 316 |

|

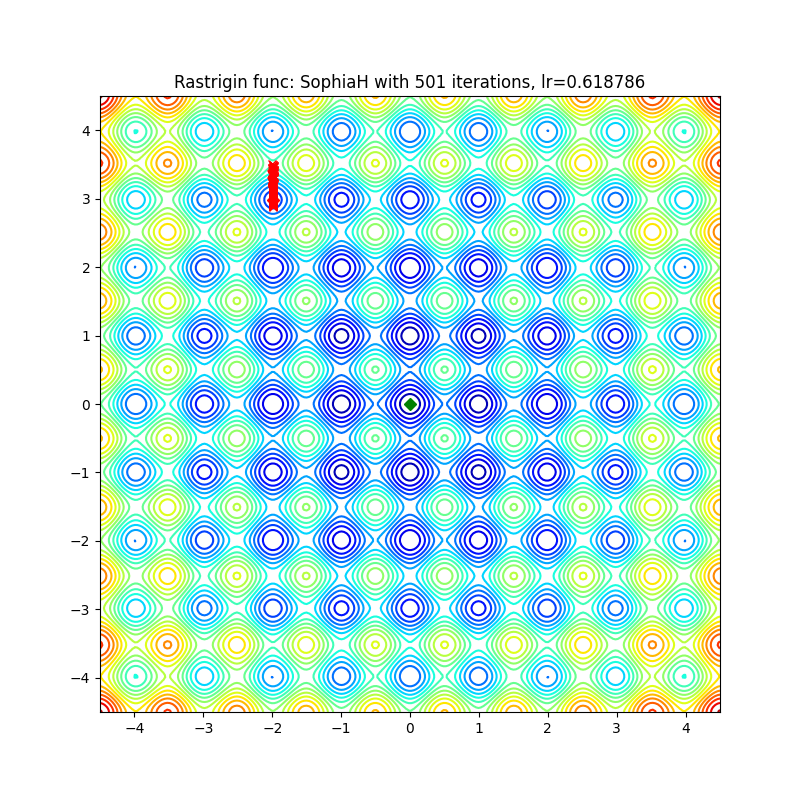

| 317 | +### SPAM |

| 318 | + |

| 319 | + |

| 320 | + |

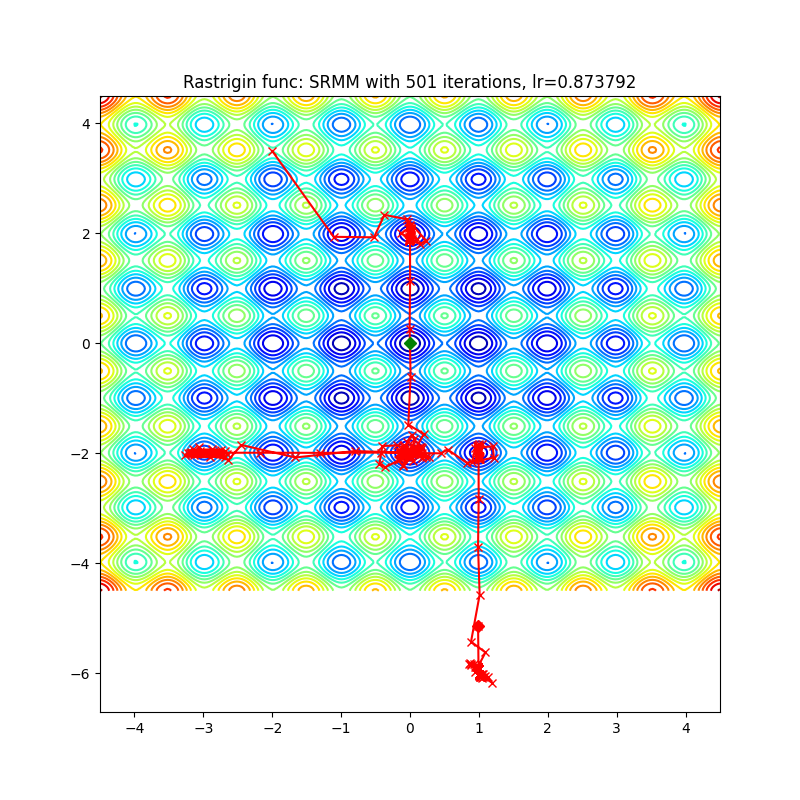

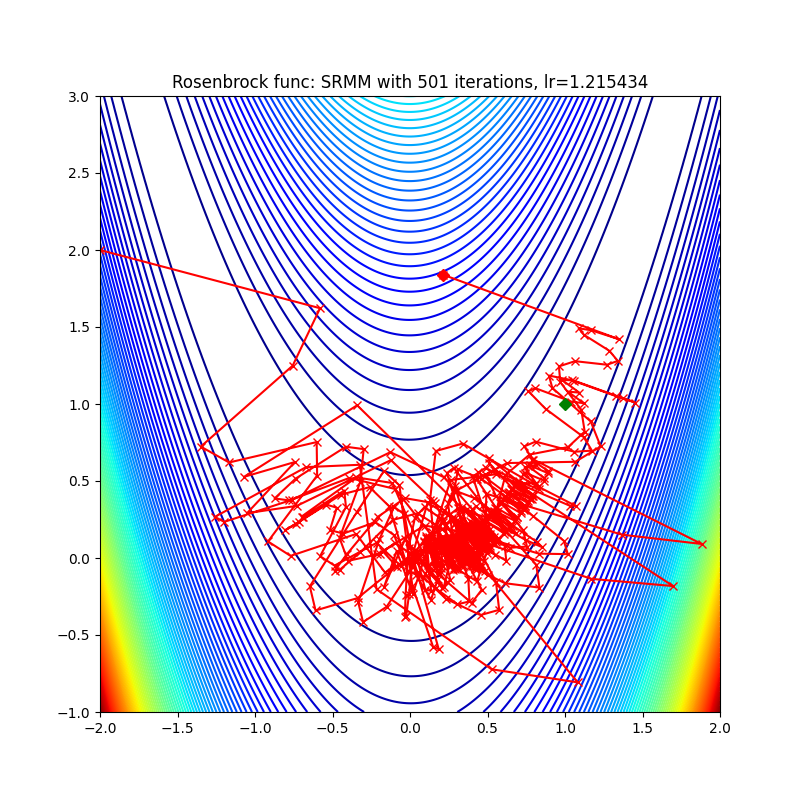

309 | 321 | ### SRMM |

310 | 322 |

|

311 | 323 |  |

|

318 | 330 |

|

319 | 331 |  |

320 | 332 |

|

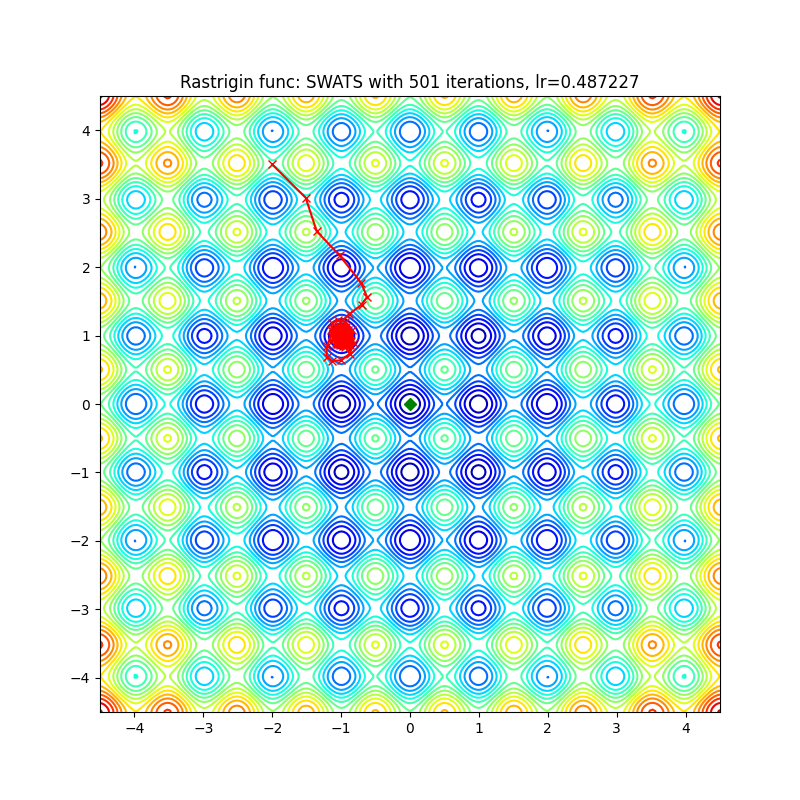

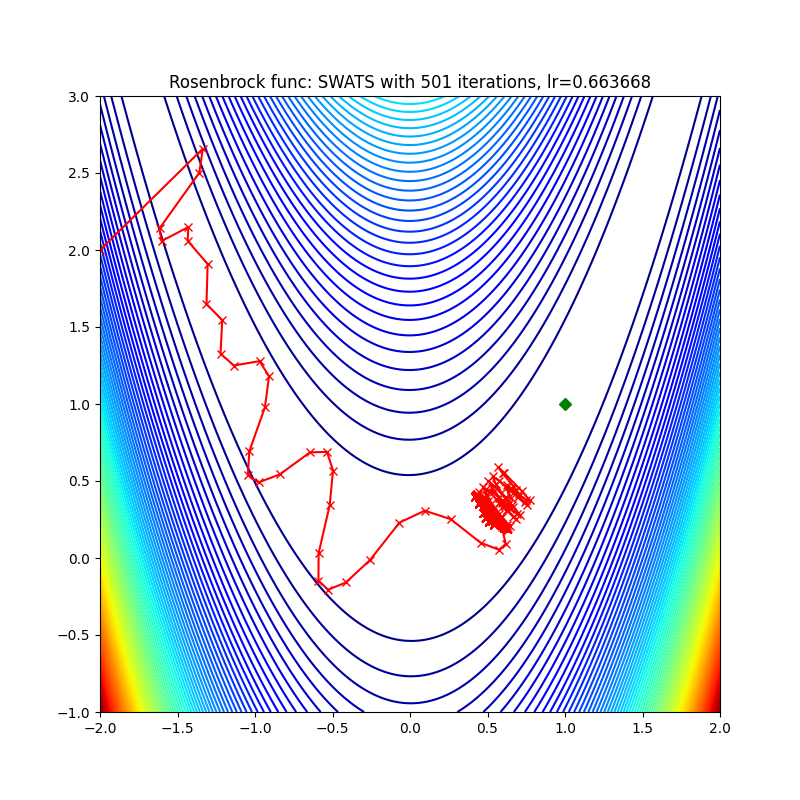

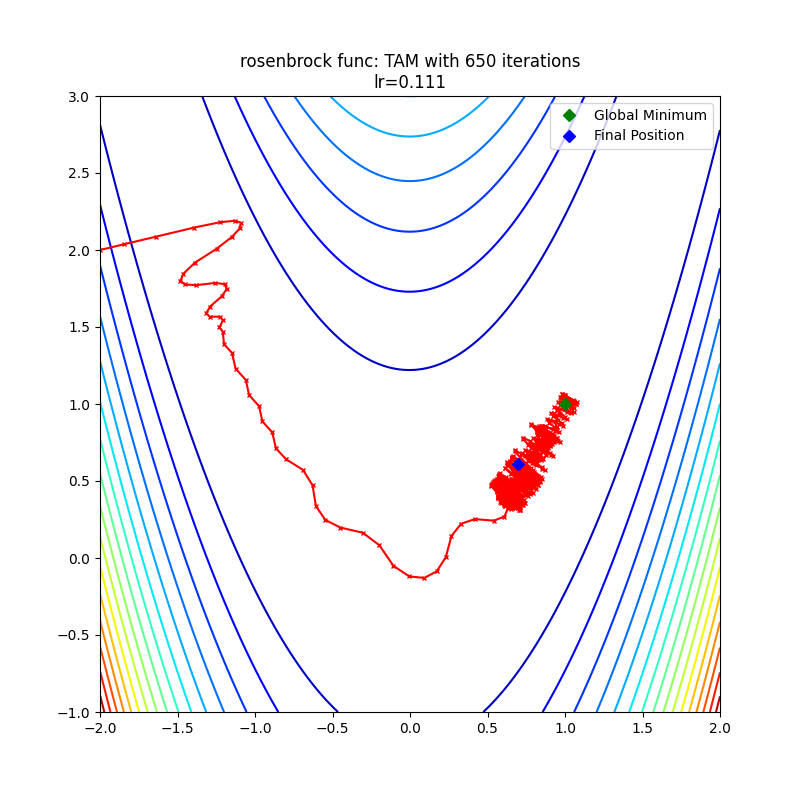

| 333 | +### TAM |

| 334 | + |

| 335 | + |

| 336 | + |

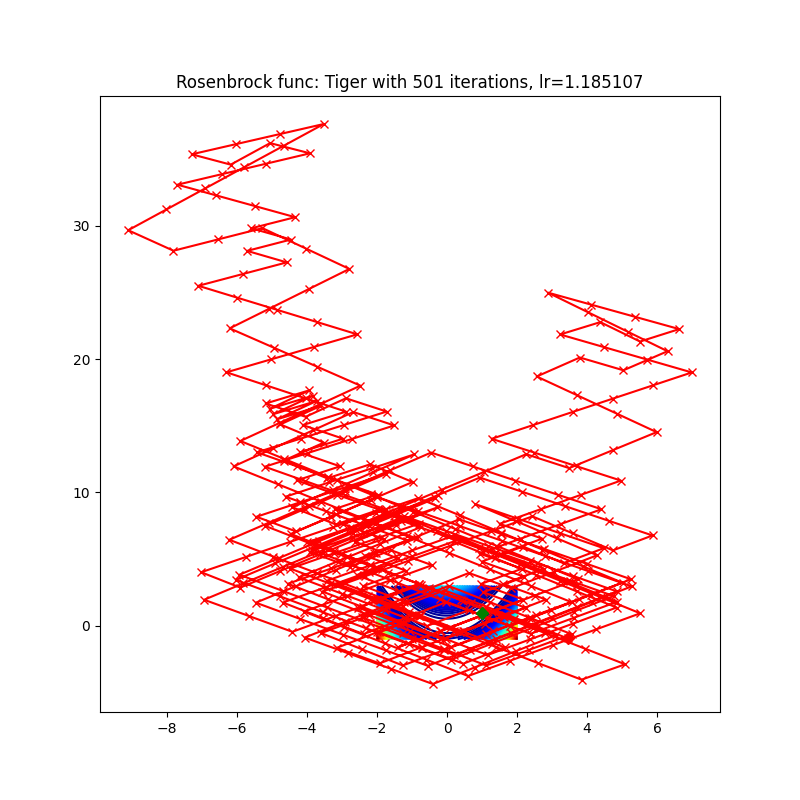

321 | 337 | ### Tiger |

322 | 338 |

|

323 | 339 |  |

|

408 | 424 |

|

409 | 425 |  |

410 | 426 |

|

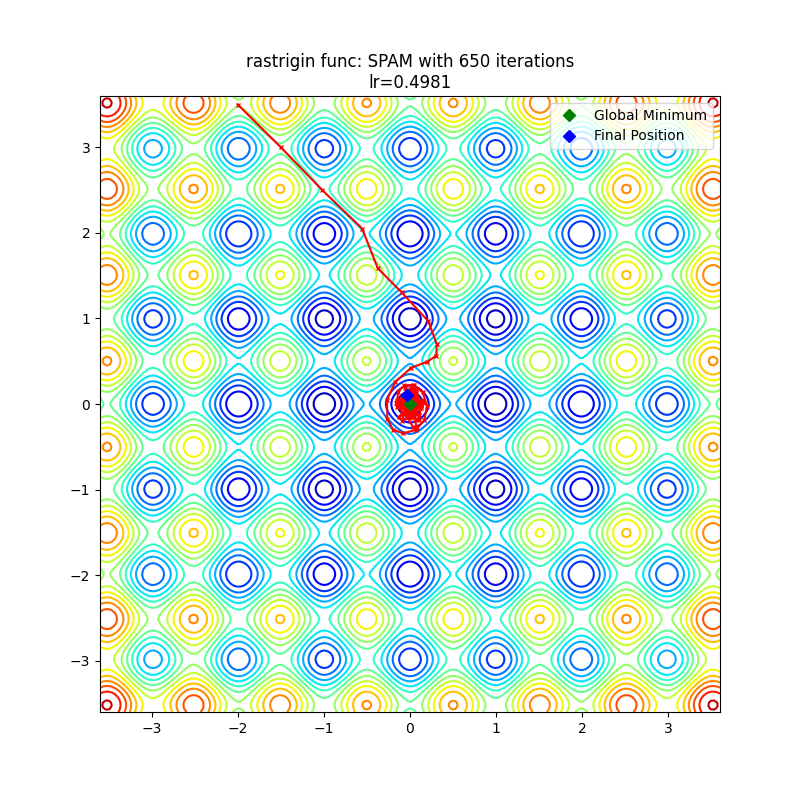

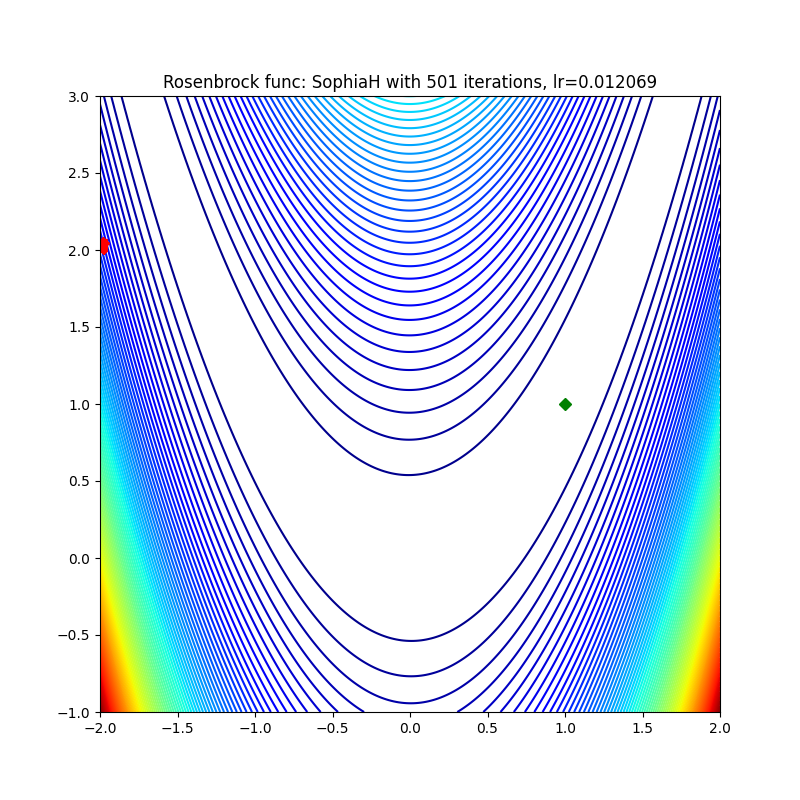

| 427 | +### AdaTAM |

| 428 | + |

| 429 | + |

| 430 | + |

411 | 431 | ### AdEMAMix |

412 | 432 |

|

413 | 433 |  |

|

580 | 600 |

|

581 | 601 |  |

582 | 602 |

|

| 603 | +### Ranger25 |

| 604 | + |

| 605 | + |

| 606 | + |

583 | 607 | ### ScalableShampoo |

584 | 608 |

|

585 | 609 |  |

|

632 | 656 |

|

633 | 657 |  |

634 | 658 |

|

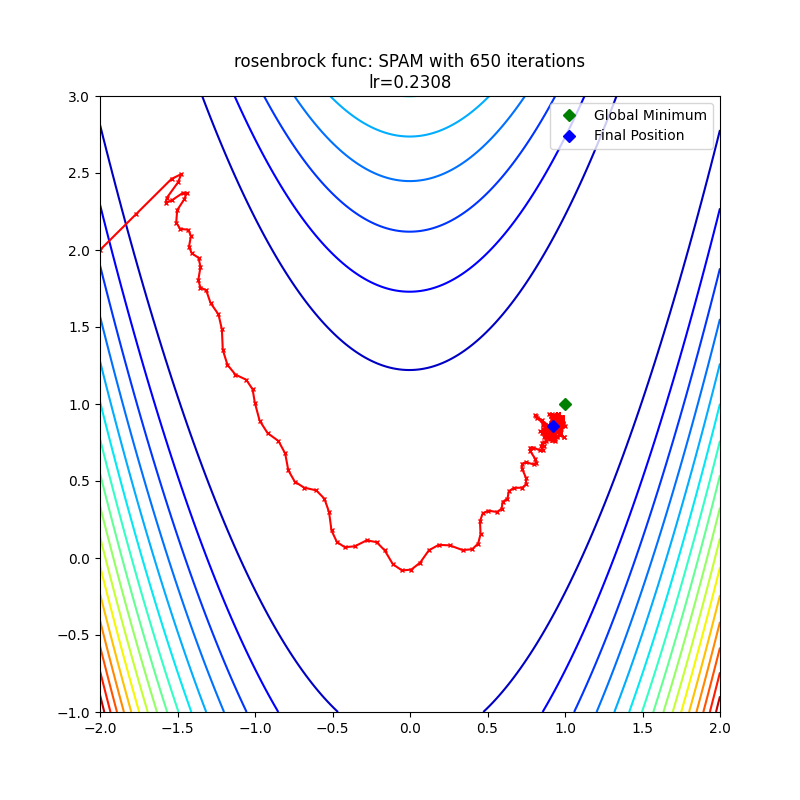

| 659 | +### SPAM |

| 660 | + |

| 661 | + |

| 662 | + |

635 | 663 | ### SRMM |

636 | 664 |

|

637 | 665 |  |

|

644 | 672 |

|

645 | 673 |  |

646 | 674 |

|

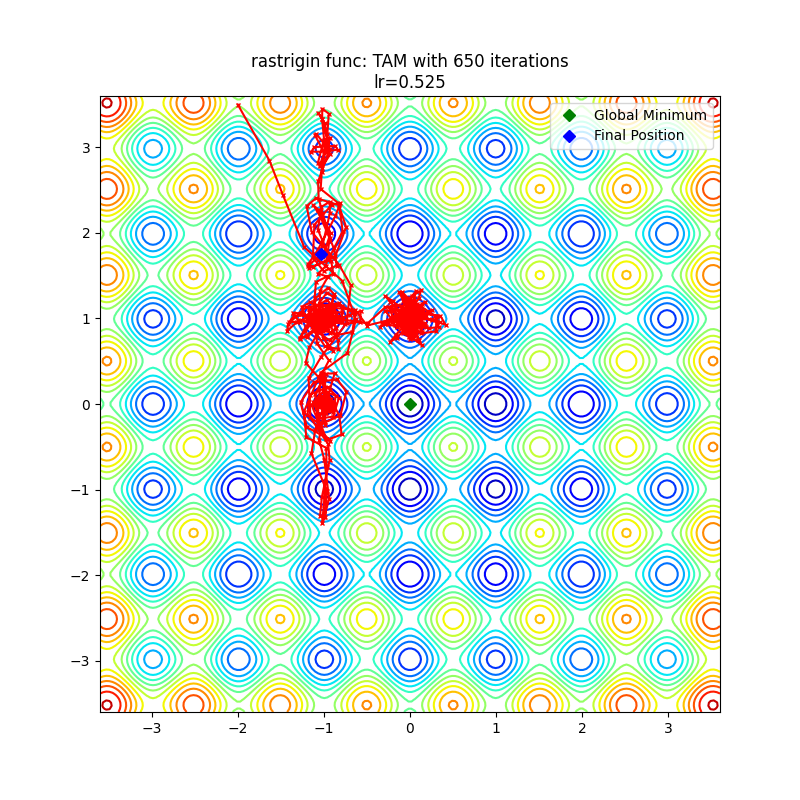

| 675 | +### TAM |

| 676 | + |

| 677 | + |

| 678 | + |

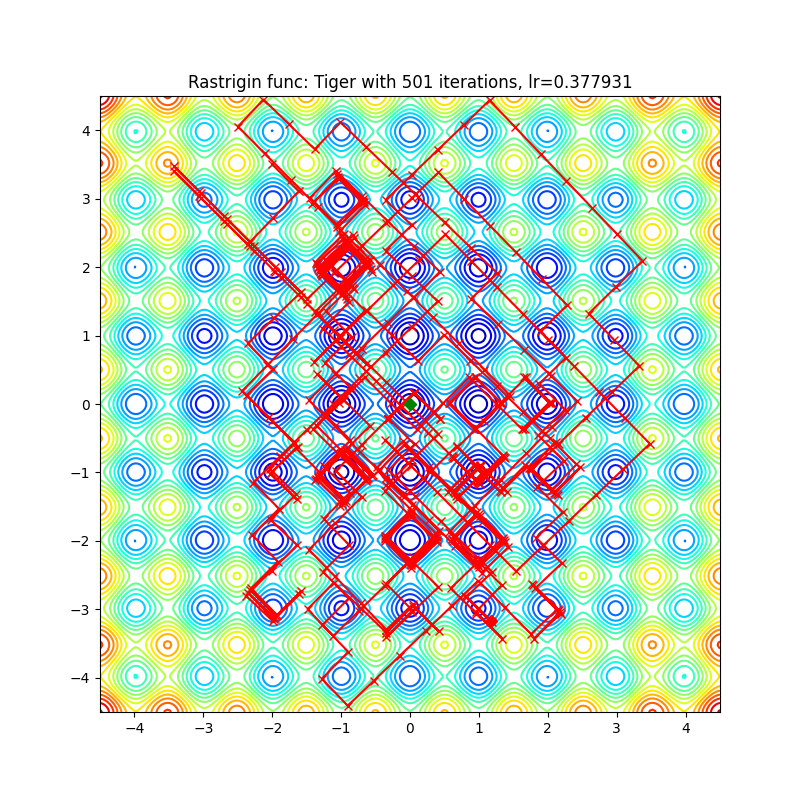

647 | 679 | ### Tiger |

648 | 680 |

|

649 | 681 |  |

|
