
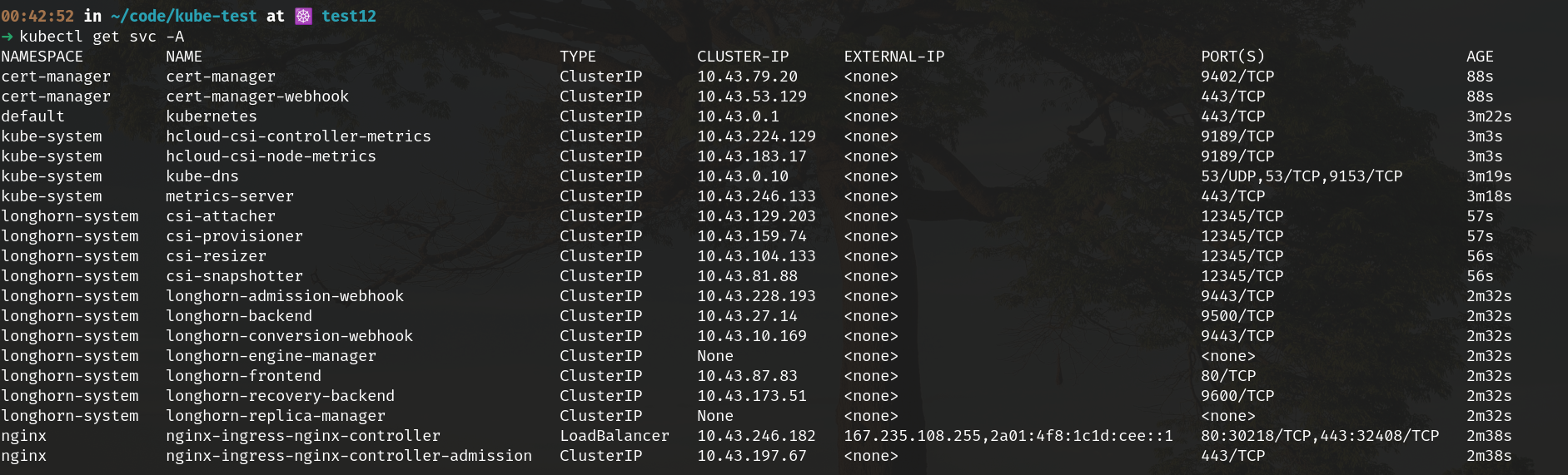

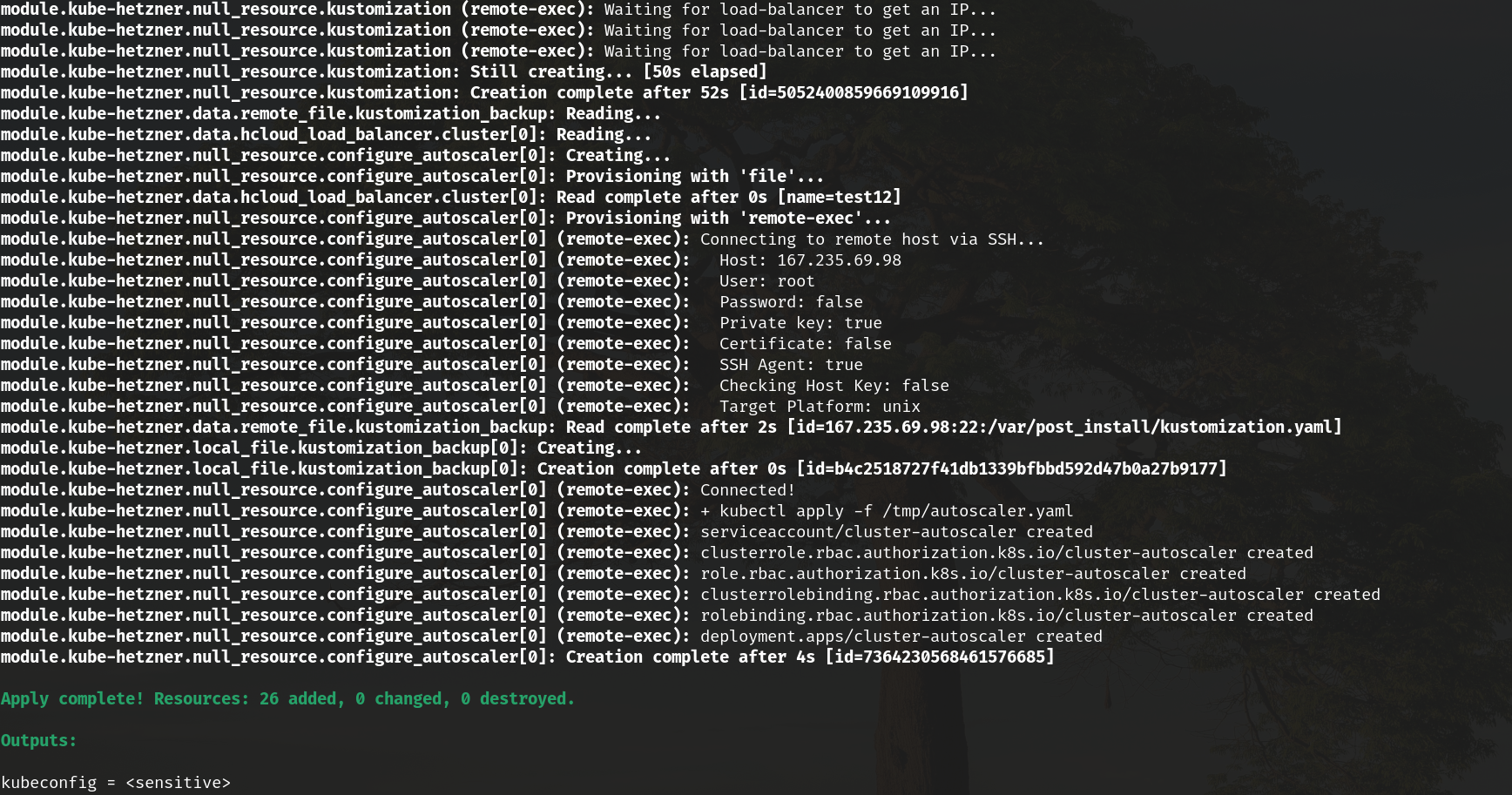

Description:

`terraform apply`

The LoadBalancer service is created in Kubernetes and is healthy in Hetzner. I saw another issue reporting the same problem: #539.

Kube.tf file:

```hcl
locals {
  # Fill first and foremost your Hetzner API token, found in your project, Security, API Token, of type Read & Write.
  hcloud_token = var.hcloud_token
}

module "kube-hetzner" {
  providers = {
    hcloud = hcloud
  }
  hcloud_token = local.hcloud_token

  source  = "kube-hetzner/kube-hetzner/hcloud"
  version = "2.1.5"

  ssh_public_key       = file("/Users/user/.ssh/id_ed25519.pub")
  ssh_private_key      = file("/Users/user/.ssh/id_ed25519")
  ssh_hcloud_key_label = "role=admin"

  network_region = "eu-central" # change to `us-east` if location is ash

  control_plane_nodepools = [
    {
      name        = "captain-nbg1",
      server_type = "cx21",
      location    = "nbg1",
      labels      = [],
      taints      = [],
      count       = 3
    },
  ]

  agent_nodepools = [
    {
      name        = "ship-small",
      server_type = "cx21",
      location    = "nbg1",
      labels      = [],
      taints      = [],
      count       = 0
    },
    {
      name        = "ship-large",
      server_type = "cx31",
      location    = "nbg1",
      labels      = [],
      taints      = [],
      count       = 8
    }
  ]

  load_balancer_type     = "lb11"
  load_balancer_location = "nbg1"

  etcd_s3_backup = {
    etcd-s3-access-key = var.aws_key
    etcd-s3-secret-key = var.aws_secret_key
    etcd-s3-bucket     = "k3s-etcd-snapshots"
    etcd-s3-region     = "eu-central-1"
  }

  ingress_controller = "nginx"
  cluster_name       = "galaxy"

  extra_firewall_rules = [
    {
      description     = "To allow access to resources via SSH"
      direction       = "out"
      protocol        = "tcp"
      port            = "22"
      source_ips      = [] # Won't be used for this rule
      destination_ips = ["0.0.0.0/0", "::/0"]
    }
  ]

  cni_plugin          = "cilium"
  enable_cert_manager = true

  use_control_plane_lb = true
  lb_hostname          = "cluster.io"

  enable_rancher   = false
  rancher_hostname = "rancher.cluster.io"
  rancher_values   = <<EOT
  ingress:
    tls:
      source: "letsEncrypt"
  hostname: "rancher.cluster.io"
  letsEncrypt:
    email: [email protected]
  replicas: 3
  bootstrapPassword: "password"
  EOT
}

provider "hcloud" {
  token = local.hcloud_token
}

terraform {
  required_version = ">= 1.3.3"
  required_providers {
    hcloud = {
      source  = "hetznercloud/hcloud"
      version = ">= 1.38.2"
    }
  }
}

output "kubeconfig" {
  value     = module.kube-hetzner.kubeconfig
  sensitive = true
}
```

Screenshots: No response

Platform: Mac
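The kube.tf in this report reads `var.hcloud_token`, `var.aws_key`, and `var.aws_secret_key`, but their declarations are not part of the report. A minimal sketch of what they might look like, assuming plain string variables (the `string` type and the `sensitive` flag are assumptions, not taken from the original setup):

```hcl
# Hypothetical declarations for the variables the kube.tf above references.
# They are not shown in the original report; types and flags are assumptions.
variable "hcloud_token" {
  type      = string
  sensitive = true # redacted as "(sensitive value)" in plan/apply output
}

variable "aws_key" {
  type      = string
  sensitive = true
}

variable "aws_secret_key" {
  type      = string
  sensitive = true
}
```

With these declared, the values can be supplied via environment variables such as `TF_VAR_hcloud_token` instead of being written into a file.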


I just had a look at init.tf. It searches for the service in the `nginx` namespace, but my service is located in `kube-system`.


I deployed everything with the Terraform module.


I just set up a new cluster; maybe Hetzner is the culprit.


Thanks for confirming, @aDingil! @Keyruu, everything looks fine on my end too: I just tried a test cluster with nginx and the LB is created just fine. Please note that there is an issue with your kube.tf file that could be indirectly causing this. Rancher is not yet compatible with the latest version of Kubernetes; please check their site (and read the explanation around the Rancher variables in kube.tf carefully, there are also links in there). A safe bet would be to set

Some screenshots below from my test just now.


Okay, I fixed it by removing the old Helm chart, which was called `ngx`, and manually applying the YAML templated by Terraform, so that a new Ingress is created. A new LoadBalancer comes along with it. This should probably be mentioned in the docs; I wasted many hours digging into this because the Terraform module is not easily upgradable.