|

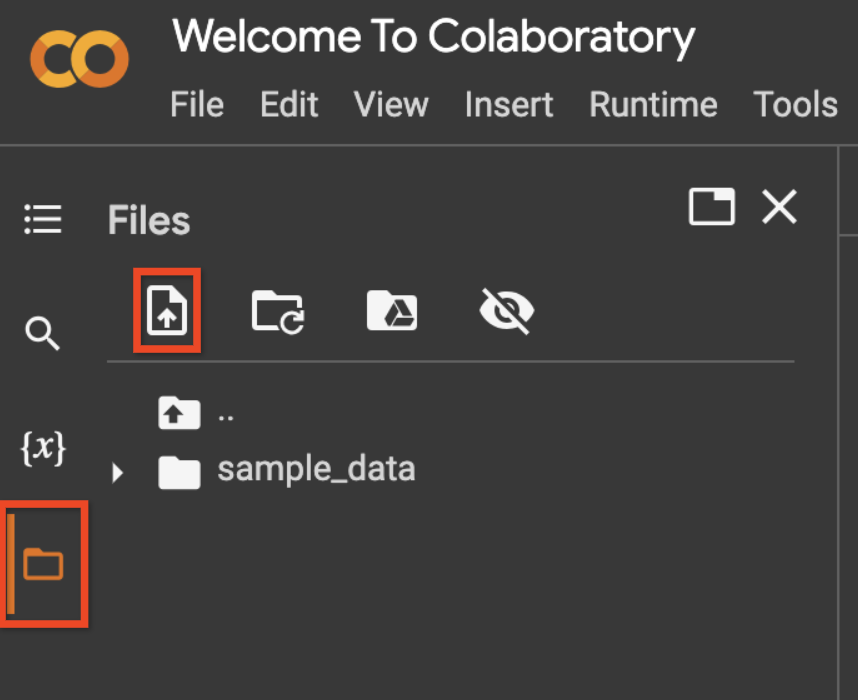

113 | 113 | "If you want to run this notebook in Colab start by uploading your\n", |

114 | 114 | "`client_secret*.json` file using the \"File > Upload\" option.\n", |

115 | 115 | "\n", |

116 | | - "" |

| 116 | + "<img width=400 src=\"https://developers.generativeai.google/tutorials/images/colab_upload.png\">" |

117 | 117 | ] |

118 | 118 | }, |

119 | 119 | { |

|

133 | 133 | ], |

134 | 134 | "source": [ |

135 | 135 | "!cp client_secret*.json client_secret.json\n", |

136 | | - "!ls" |

| 136 | + "!ls client_secret.json" |

137 | 137 | ] |

138 | 138 | }, |

139 | 139 | { |

|

174 | 174 | }, |

175 | 175 | { |

176 | 176 | "cell_type": "code", |

177 | | - "execution_count": null, |

| 177 | + "execution_count": 7, |

178 | 178 | "metadata": { |

179 | | - "id": "VYetBMbknUVp" |

| 179 | + "id": "cbcf72bcb56d" |

180 | 180 | }, |

181 | | - "outputs": [ |

182 | | - { |

183 | | - "name": "stdout", |

184 | | - "output_type": "stream", |

185 | | - "text": [ |

186 | | - "\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/122.9 kB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r", |

187 | | - "\u001b[2K \u001b[91m━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m\u001b[90m╺\u001b[0m\u001b[90m━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m71.7/122.9 kB\u001b[0m \u001b[31m1.9 MB/s\u001b[0m eta \u001b[36m0:00:01\u001b[0m\r", |

188 | | - "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m122.9/122.9 kB\u001b[0m \u001b[31m2.2 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n", |

189 | | - "\u001b[?25h\u001b[?25l \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m0.0/113.3 kB\u001b[0m \u001b[31m?\u001b[0m eta \u001b[36m-:--:--\u001b[0m\r", |

190 | | - "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m113.3/113.3 kB\u001b[0m \u001b[31m5.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n", |

191 | | - "\u001b[?25h" |

192 | | - ] |

193 | | - } |

194 | | - ], |

| 181 | + "outputs": [], |

195 | 182 | "source": [ |

196 | | - "!pip install google-generativeai" |

| 183 | + "!pip install -q google-generativeai" |

197 | 184 | ] |

198 | 185 | }, |

199 | 186 | { |

|

213 | 200 | }, |

214 | 201 | "outputs": [], |

215 | 202 | "source": [ |

216 | | - "import google.generativeai as palm\n", |

217 | | - "\n", |

218 | | - "import google.ai.generativelanguage as glm\n", |

219 | | - "import pprint" |

| 203 | + "import google.generativeai as palm\n" |

220 | 204 | ] |

221 | 205 | }, |

222 | 206 | { |

|

239 | 223 | "name": "stdout", |

240 | 224 | "output_type": "stream", |

241 | 225 | "text": [ |

242 | | - "['tunedModels/testnumbergenerator-fvitocr834l6',\n", |

243 | | - " 'tunedModels/my-display-name-81-9wpmc1m920vq',\n", |

244 | | - " 'tunedModels/number-generator-model-kctlevca1g3q',\n", |

245 | | - " 'tunedModels/my-display-name-81-r9wcuda14lyy']\n" |

| 226 | + "tunedModels/my-model-8527\n", |

| 227 | + "tunedModels/my-model-7092\n", |

| 228 | + "tunedModels/my-model-2778\n", |

| 229 | + "tunedModels/my-model-1298\n", |

| 230 | + "tunedModels/my-model-3883\n" |

246 | 231 | ] |

247 | 232 | } |

248 | 233 | ], |

249 | 234 | "source": [ |

250 | | - "tuned_models = list(palm.list_tuned_models())\n", |

251 | | - "pprint.pprint([m.name for m in tuned_models])" |

| 235 | + "for i, m in zip(range(5), palm.list_tuned_models()):\n", |

| 236 | + " print(m.name)" |

252 | 237 | ] |

253 | 238 | }, |

254 | 239 | { |

|

311 | 296 | "\n", |

312 | 297 | "name = f'generate-num-{random.randint(0,10000)}'\n", |

313 | 298 | "operation = palm.create_tuned_model(\n", |

| 299 | + " # You can use a tuned model here too. Set `source_model=\"tunedModels/...\"` \n", |

314 | 300 | " source_model=base_model.name,\n", |

315 | 301 | " training_data=[\n", |

316 | 302 | " {\n", |

|

387 | 373 | "name": "stdout", |

388 | 374 | "output_type": "stream", |

389 | 375 | "text": [ |

390 | | - "TunedModel(name='tunedModels/generate-num-4668',\n", |

391 | | - " source_model=None,\n", |

| 376 | + "TunedModel(name='tunedModels/generate-num-9028',\n", |

| 377 | + " source_model='tunedModels/generate-num-4110',\n", |

392 | 378 | " base_model='models/text-bison-001',\n", |

393 | 379 | " display_name='',\n", |

394 | 380 | " description='',\n", |

395 | 381 | " temperature=0.7,\n", |

396 | 382 | " top_p=0.95,\n", |

397 | 383 | " top_k=40,\n", |

398 | 384 | " state=<State.CREATING: 1>,\n", |

399 | | - " create_time=datetime.datetime(2023, 9, 19, 19, 3, 38, 22249, tzinfo=<UTC>),\n", |

400 | | - " update_time=datetime.datetime(2023, 9, 19, 19, 3, 38, 22249, tzinfo=<UTC>),\n", |

401 | | - " tuning_task=TuningTask(start_time=datetime.datetime(2023, 9, 19, 19, 3, 38, 562798, tzinfo=<UTC>),\n", |

| 385 | + " create_time=datetime.datetime(2023, 9, 29, 21, 37, 32, 188028, tzinfo=datetime.timezone.utc),\n", |

| 386 | + " update_time=datetime.datetime(2023, 9, 29, 21, 37, 32, 188028, tzinfo=datetime.timezone.utc),\n", |

| 387 | + " tuning_task=TuningTask(start_time=datetime.datetime(2023, 9, 29, 21, 37, 32, 734118, tzinfo=datetime.timezone.utc),\n", |

402 | 388 | " complete_time=None,\n", |

403 | 389 | " snapshots=[],\n", |

404 | 390 | " hyperparameters=Hyperparameters(epoch_count=100,\n", |

|

410 | 396 | "source": [ |

411 | 397 | "model = palm.get_tuned_model(f'tunedModels/{name}')\n", |

412 | 398 | "\n", |

413 | | - "pprint.pprint(model)" |

| 399 | + "model" |

414 | 400 | ] |

415 | 401 | }, |

416 | 402 | { |

417 | 403 | "cell_type": "code", |

418 | | - "execution_count": null, |

| 404 | + "execution_count": 40, |

419 | 405 | "metadata": { |

420 | 406 | "id": "EUodUwZkKPi-" |

421 | 407 | }, |

|

463 | 449 | { |

464 | 450 | "data": { |

465 | 451 | "text/plain": [ |

466 | | - "total_steps: 375\n", |

467 | | - "tuned_model: \"tunedModels/generate-num-4668\"" |

| 452 | + "tuned_model: \"tunedModels/generate-num-9028\"\n", |

| 453 | + "total_steps: 375" |

468 | 454 | ] |

469 | 455 | }, |

470 | | - "execution_count": 15, |

| 456 | + "execution_count": 41, |

471 | 457 | "metadata": {}, |

472 | 458 | "output_type": "execute_result" |

473 | 459 | } |

|

487 | 473 | }, |

488 | 474 | { |

489 | 475 | "cell_type": "code", |

490 | | - "execution_count": null, |

| 476 | + "execution_count": 42, |

491 | 477 | "metadata": { |

492 | 478 | "id": "SOUowIv1HgSE" |

493 | 479 | }, |

494 | 480 | "outputs": [ |

495 | 481 | { |

496 | 482 | "data": { |

497 | 483 | "application/vnd.jupyter.widget-view+json": { |

498 | | - "model_id": "c07d8bb1884d460cb7e7e40aa459c2ea", |

| 484 | + "model_id": "98e4b6958bfc43c98e6e77354f7bf315", |

499 | 485 | "version_major": 2, |

500 | 486 | "version_minor": 0 |

501 | 487 | }, |

|

509 | 495 | ], |

510 | 496 | "source": [ |

511 | 497 | "import time\n", |

| 498 | + "\n", |

512 | 499 | "for status in operation.wait_bar():\n", |

513 | 500 | " time.sleep(30)" |

514 | 501 | ] |

|

2250 | 2237 | "source": [ |

2251 | 2238 | "model = palm.get_tuned_model(f'tunedModels/{name}')\n", |

2252 | 2239 | "\n", |

2253 | | - "pprint.pprint(model)" |

| 2240 | + "model" |

2254 | 2241 | ] |

2255 | 2242 | }, |

2256 | 2243 | { |

|

2309 | 2296 | "\n", |

2310 | 2297 | "try:\n", |

2311 | 2298 | " m = palm.get_tuned_model(f'tunedModels/{name}')\n", |

2312 | | - " pprint.pprint(m)\n", |

| 2299 | + " print(m)\n", |

2313 | 2300 | "except Exception as e:\n", |

2314 | 2301 | " print(f\"{type(e)}: {e}\")" |

2315 | 2302 | ] |

|
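Note on the `zip(range(5), palm.list_tuned_models())` change in the hunk above: the sketch below shows, under stated assumptions, why this pattern prints only the first five names without materializing the whole listing. `fake_list_tuned_models` is a hypothetical stand-in for the real API call (it is not part of `google.generativeai`), and `itertools.islice` is shown only as an equivalent standard-library alternative, not as what the notebook uses.

```python
from itertools import islice


def fake_list_tuned_models():
    """Hypothetical stand-in for palm.list_tuned_models(): yields names lazily."""
    n = 0
    while True:  # endless on purpose, to show that only five items are consumed
        yield f"tunedModels/my-model-{n}"
        n += 1


# Pattern from the updated cell: zip stops as soon as range(5) is exhausted.
first_five_zip = [name for _, name in zip(range(5), fake_list_tuned_models())]

# Equivalent standard-library spelling that avoids the unused index.
first_five_islice = list(islice(fake_list_tuned_models(), 5))

for name in first_five_zip:
    print(name)
```

Because `range(5)` is the first argument, `zip` notices it is exhausted before asking the model iterator for a sixth item, so no extra element is fetched.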
