|

4 | 4 | "cell_type": "markdown", |

5 | 5 | "metadata": {}, |

6 | 6 | "source": [ |

7 | | - "<!-- NOTEBOOK_METADATA source: \"⚠️ Jupyter Notebook\" title: \"Monitor Parallel AI Tasks with Langfuse\" sidebarTitle: \"Parallel AI\" logo: \"/images/integrations/parallel_icon.svg\" description: \"Learn how to trace Parallel AI task execution using Langfuse to capture detailed observability data for your AI workflow operations.\" category: \"Integrations\" -->\n", |

| 7 | + "<!-- NOTEBOOK_METADATA source: \"⚠️ Jupyter Notebook\" title: \"Monitor Parallel Tasks with Langfuse\" sidebarTitle: \"Parallel\" logo: \"/images/integrations/parallel_icon.svg\" description: \"Learn how to trace Parallel task execution using Langfuse to capture detailed observability data for your AI workflow operations.\" category: \"Integrations\" -->\n", |

8 | 8 | "\n", |

9 | | - "# Parallel AI Integration\n", |

| 9 | + "# Parallel Integration\n", |

10 | 10 | "\n", |

11 | | - "In this guide, we'll show you how to integrate [Langfuse](https://langfuse.com) with [Parallel AI](https://parallel.ai/) to trace your AI task operations. By leveraging Langfuse's tracing capabilities, you can automatically capture details such as inputs, outputs, and execution times of your Parallel AI tasks.\n", |

| 11 | + "In this guide, we'll show you how to integrate [Langfuse](https://langfuse.com) with [Parallel](https://parallel.ai/) to trace your AI task operations. By leveraging Langfuse's tracing capabilities, you can automatically capture details such as inputs, outputs, and execution times of your Parallel tasks.\n", |

12 | 12 | "\n", |

13 | | - "> **What is Parallel AI?** [Parallel AI](https://parallel.ai/) is an API service that enables you to execute AI tasks in parallel, optimizing workflow efficiency. It provides a powerful task API that allows you to run multiple AI operations concurrently, making it ideal for building scalable AI applications.\n", |

| 13 | + "> **What is Parallel?** [Parallel](https://parallel.ai/) develops a suite of web search and web agent APIs that connect AI agents, applications, and workflows to the open internet, enabling programmable tasks from simple searches to complex knowledge work.\n", |

14 | 14 | "\n", |

15 | 15 | "> **What is Langfuse?** [Langfuse](https://langfuse.com) is an open source LLM engineering platform that helps teams trace API calls, monitor performance, and debug issues in their AI applications.\n", |

16 | 16 | "\n", |

|

33 | 33 | "cell_type": "markdown", |

34 | 34 | "metadata": {}, |

35 | 35 | "source": [ |

36 | | - "Next, configure your environment with your Parallel AI and Langfuse API keys. You can get these keys by signing up for a free [Langfuse Cloud](https://cloud.langfuse.com/) account or by [self-hosting Langfuse](https://langfuse.com/self-hosting) and from the [Parallel AI dashboard](https://parallel.ai/)." |

| 36 | + "Next, configure your environment with your Parallel and Langfuse API keys. You can get these keys by signing up for a free [Langfuse Cloud](https://cloud.langfuse.com/) account or by [self-hosting Langfuse](https://langfuse.com/self-hosting) and from the [Parallel dashboard](https://parallel.ai/)." |

37 | 37 | ] |

38 | 38 | }, |

39 | 39 | { |

|

50 | 50 | "os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 EU region\n", |

51 | 51 | "# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 US region\n", |

52 | 52 | "\n", |

53 | | - "# Your Parallel AI key\n", |

| 53 | + "# Your Parallel key\n", |

54 | 54 | "os.environ[\"PARALLEL_API_KEY\"] = \"...\"\n", |

55 | 55 | "\n", |

56 | 56 | "# Your openai key\n", |

|

122 | 122 | "\n", |

123 | 123 | "response = client.chat.completions.create(\n", |

124 | 124 | " model=\"speed\", # Parallel model name\n", |

125 | | - " name=\"Parallel AI Chat\",\n", |

| 125 | + " name=\"Parallel Chat\",\n", |

126 | 126 | " messages=[\n", |

127 | 127 | " {\"role\": \"user\", \"content\": \"What does Parallel Web Systems do?\"}\n", |

128 | 128 | " ],\n", |

|

161 | 161 | "source": [ |

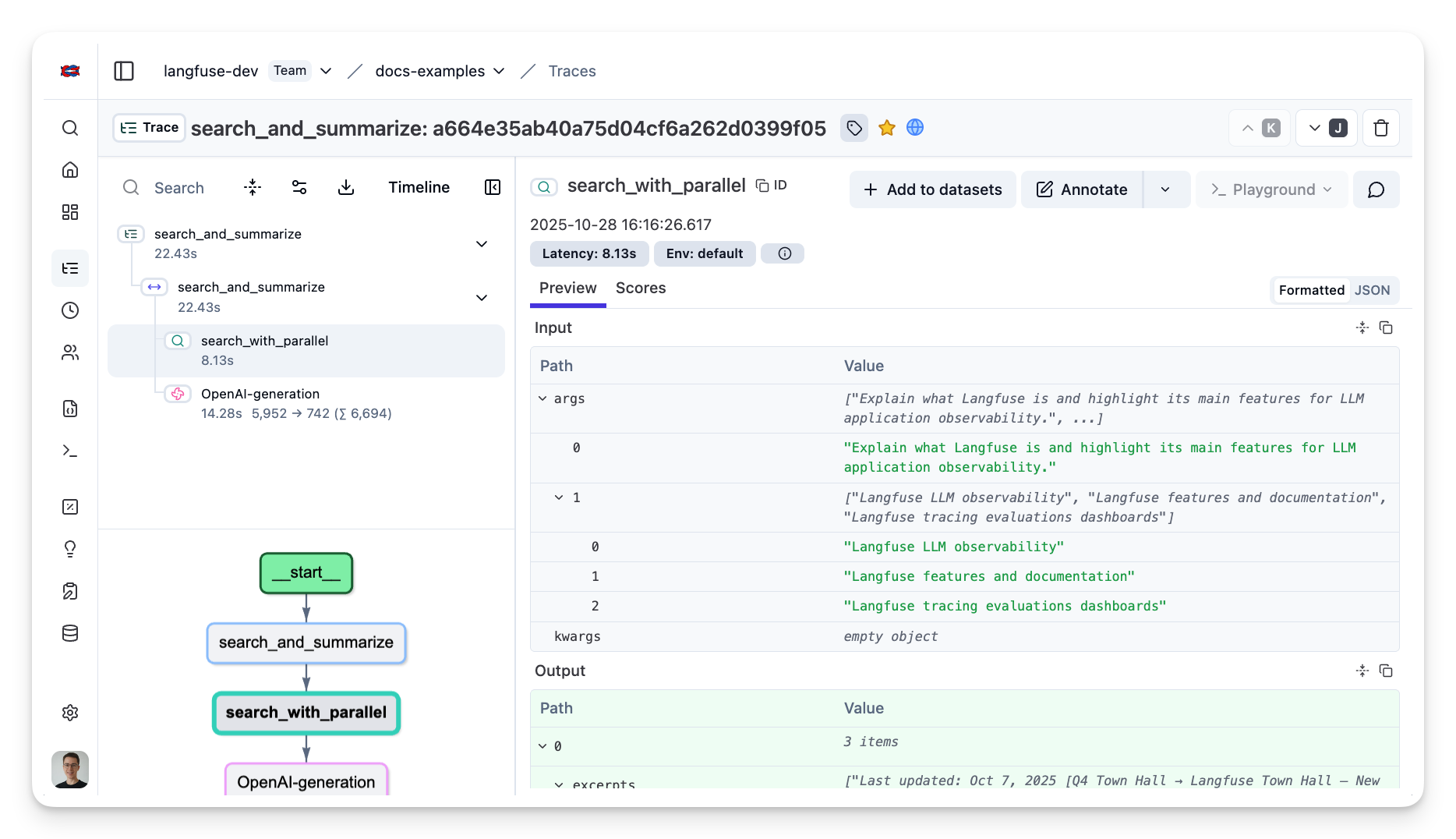

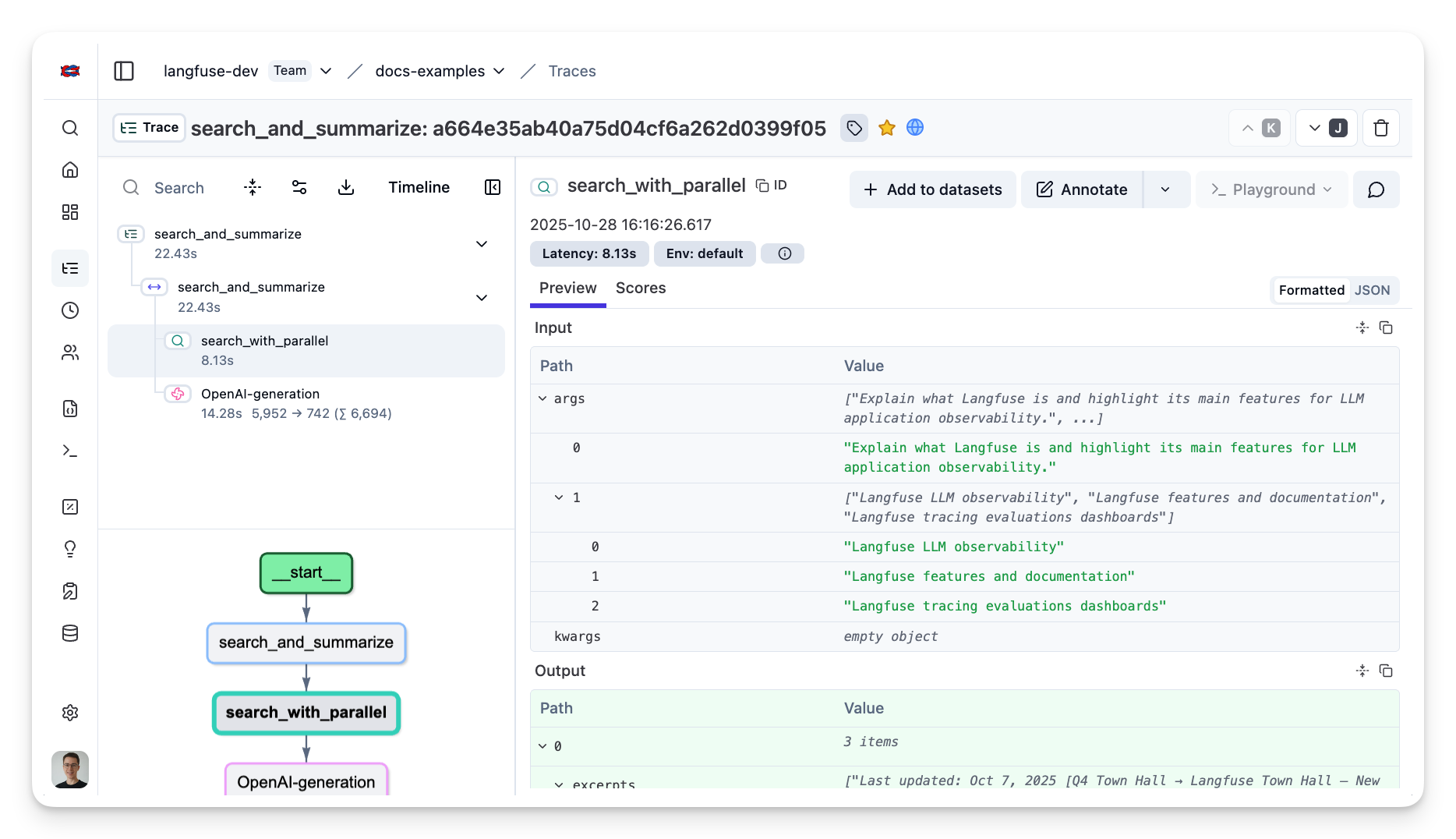

162 | 162 | "## Example 3: Parallel Search API and OpenAI\n", |

163 | 163 | "\n", |

164 | | - "You can also trace more complex workflows that involve summarizing the search results with OpenAI. Here we use the [Langfuse `@observe()` decorator](https://langfuse.com/docs/sdk/python/decorators) to group both the Parallel AI search and the OpenAI generation into one trace. " |

| 164 | + "You can also trace more complex workflows that involve summarizing the search results with OpenAI. Here we use the [Langfuse `@observe()` decorator](https://langfuse.com/docs/sdk/python/decorators) to group both the Parallel search and the OpenAI generation into one trace. " |

165 | 165 | ] |

166 | 166 | }, |

167 | 167 | { |

|

183 | 183 | "\n", |

184 | 184 | " @observe(as_type=\"retriever\")\n", |

185 | 185 | " def search_with_parallel(objective, search_queries, num_results: int = 5):\n", |

186 | | - " \"\"\"Search the web using Parallel AI and return results.\"\"\"\n", |

| 186 | + " \"\"\"Search the web using Parallel and return results.\"\"\"\n", |

187 | 187 | " search = parallel_client.beta.search(\n", |

188 | 188 | " objective=objective,\n", |

189 | 189 | " search_queries=search_queries,\n", |

|

231 | 231 | "- Input prompts and output results\n", |

232 | 232 | "- Performance metrics for each task\n", |

233 | 233 | "\n", |

234 | | - "\n", |

| 234 | + "\n", |

235 | 235 | "\n", |

236 | 236 | "[Example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/a664e35ab40a75d04cf6a262d0399f05?timestamp=2025-10-28T15%3A16%3A26.617Z&observation=13fc56583aafeb06)\n", |

237 | 237 | "\n", |

|
