|

21 | 21 | "source": [ |

22 | 22 | "## 1. Install Dependencies\n", |

23 | 23 | "\n", |

24 | | - "Below we install the `openai-agents` library (the OpenAI Agents SDK), and the `pydantic-ai[logfire]` OpenTelemetry instrumentation." |

| 24 | + "Below we install the `openai-agents` library (the OpenAI Agents SDK) and the [OpenInference OpenAI Agents instrumentation](https://github.com/Arize-ai/openinference/tree/main/python/instrumentation/openinference-instrumentation-openai-agents) library." |

25 | 25 | ] |

26 | 26 | }, |

27 | 27 | { |

|

30 | 30 | "metadata": {}, |

31 | 31 | "outputs": [], |

32 | 32 | "source": [ |

33 | | - "%pip install openai-agents langfuse nest_asyncio \"pydantic-ai[logfire]\"" |

| 33 | + "%pip install openai-agents langfuse nest_asyncio openinference-instrumentation-openai-agents" |

34 | 34 | ] |

35 | 35 | }, |

36 | 36 | { |

|

64 | 64 | "cell_type": "markdown", |

65 | 65 | "metadata": {}, |

66 | 66 | "source": [ |

67 | | - "## 3. Instrumenting the Agent\n", |

68 | | - "\n", |

69 | | - "Pydantic Logfire offers an instrumentation for the OpenAi Agent SDK. We use this to send traces to Langfuse." |

| 67 | + "## 3. Instrumenting the Agent" |

70 | 68 | ] |

71 | 69 | }, |

72 | 70 | { |

|

79 | 77 | "nest_asyncio.apply()" |

80 | 78 | ] |

81 | 79 | }, |

| 80 | + { |

| 81 | + "cell_type": "markdown", |

| 82 | + "metadata": {}, |

| 83 | + "source": [ |

| 84 | + "Next, we initialize the [OpenInference OpenAI Agents instrumentation](https://github.com/Arize-ai/openinference/tree/main/python/instrumentation/openinference-instrumentation-openai-agents). This third-party instrumentation automatically captures OpenAI Agents SDK operations as OpenTelemetry (OTel) spans, which are then exported to Langfuse." |

| 85 | + ] |

| 86 | + }, |

82 | 87 | { |

83 | 88 | "cell_type": "code", |

84 | | - "execution_count": 4, |

| 89 | + "execution_count": null, |

85 | 90 | "metadata": {}, |

86 | 91 | "outputs": [], |

87 | 92 | "source": [ |

88 | | - "import logfire\n", |

| 93 | + "from openinference.instrumentation.openai_agents import OpenAIAgentsInstrumentor\n", |

89 | 94 | "\n", |

90 | | - "# Configure logfire instrumentation.\n", |

91 | | - "logfire.configure(\n", |

92 | | - " service_name='my_agent_service',\n", |

93 | | - " send_to_logfire=False,\n", |

94 | | - ")\n", |

95 | | - "# This method automatically patches the OpenAI Agents SDK to send logs via OTLP to Langfuse.\n", |

96 | | - "logfire.instrument_openai_agents()" |

| 95 | + "OpenAIAgentsInstrumentor().instrument()" |

97 | 96 | ] |

98 | 97 | }, |

99 | 98 | { |

|

157 | 156 | "source": [ |

158 | 157 | "\n", |

159 | 158 | "\n", |

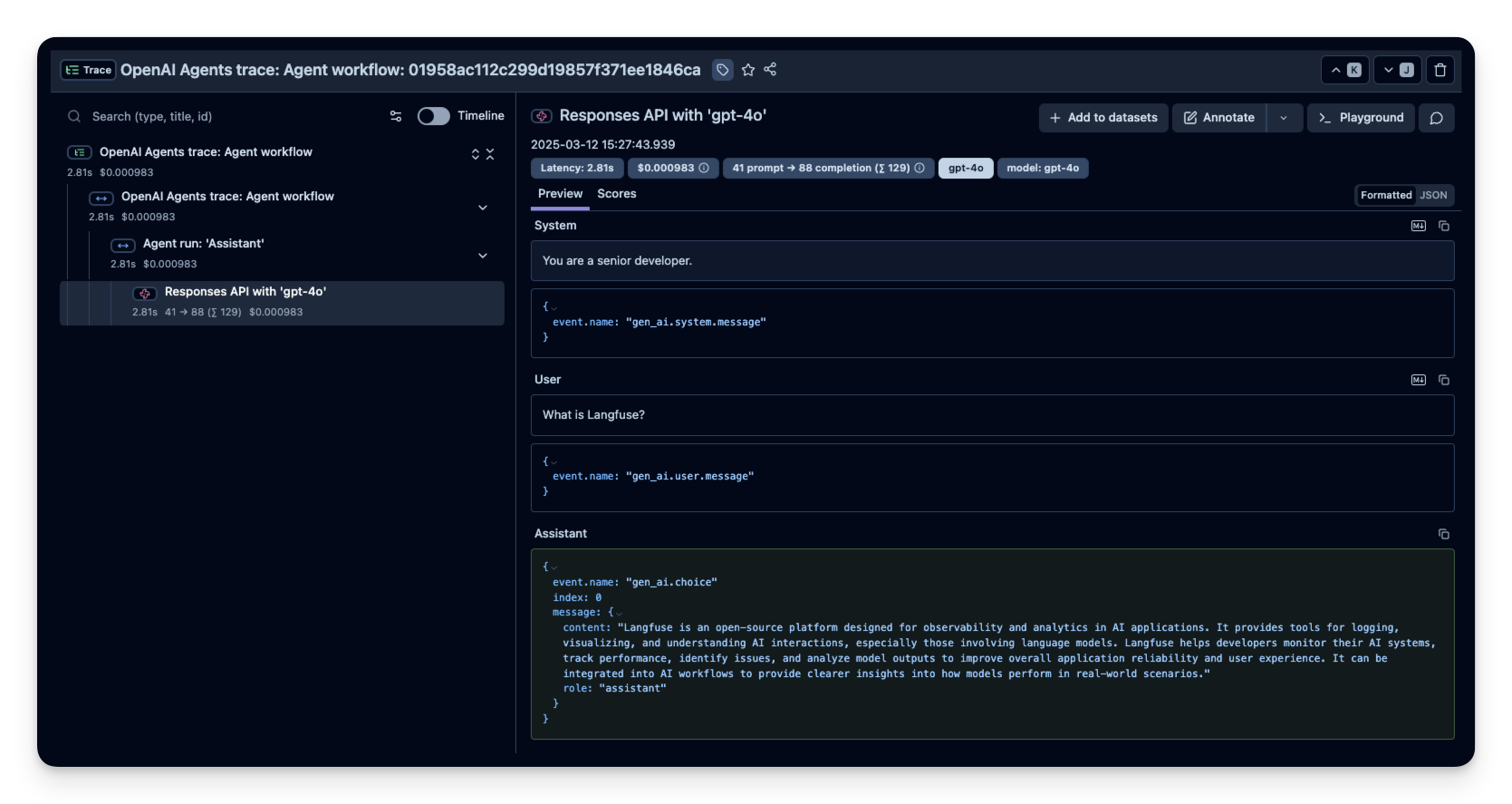

160 | | - "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/019593c7330da67c08219bd1c75b7a6d?timestamp=2025-03-14T08%3A31%3A00.365Z&observation=81e525d819153eed)\n", |

| 159 | + "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/483a2afb22b41ee49d7d1e5b41b4967a?timestamp=2025-09-30T09%3A01%3A29.194Z&observation=e3e3580728f3fec4)\n", |

161 | 160 | "\n", |

162 | 161 | "Clicking the link above (or your own project link) lets you view all sub-spans, token usage, latencies, etc., for debugging or optimization." |

163 | 162 | ] |

|

211 | 210 | "source": [ |

212 | 211 | "\n", |

213 | 212 | "\n", |

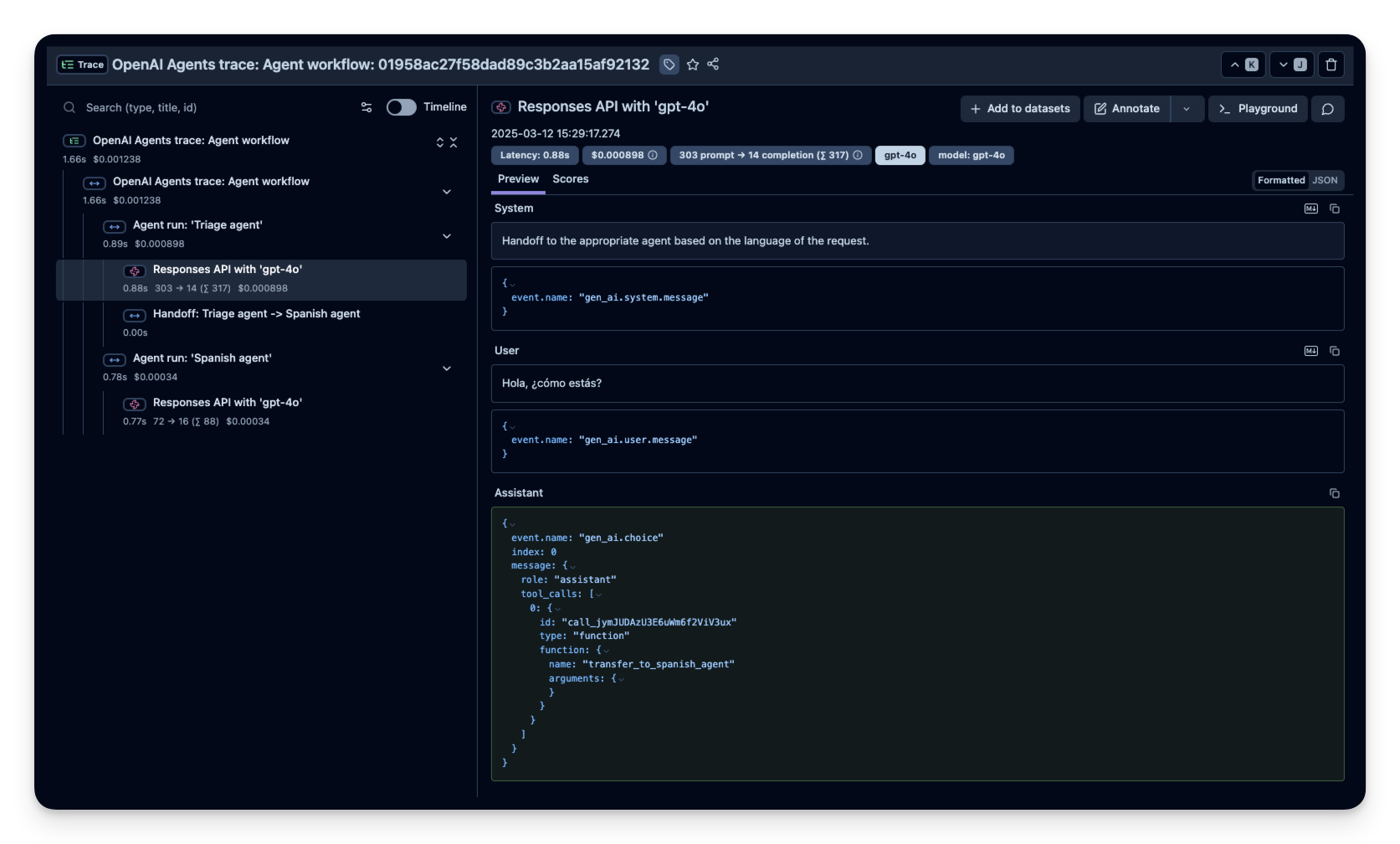

214 | | - "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/019593c74429a6d0489e9259703a1148?timestamp=2025-03-14T08%3A31%3A04.745Z&observation=e83609282c443b0d)" |

| 213 | + "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/c376e44920527b875add9d97b4ed9312?observation=94a1dc00d7067ae8&timestamp=2025-09-30T09%3A03%3A51.191Z)" |

215 | 214 | ] |

216 | 215 | }, |

217 | 216 | { |

|

257 | 256 | "source": [ |

258 | 257 | "\n", |

259 | 258 | "\n", |

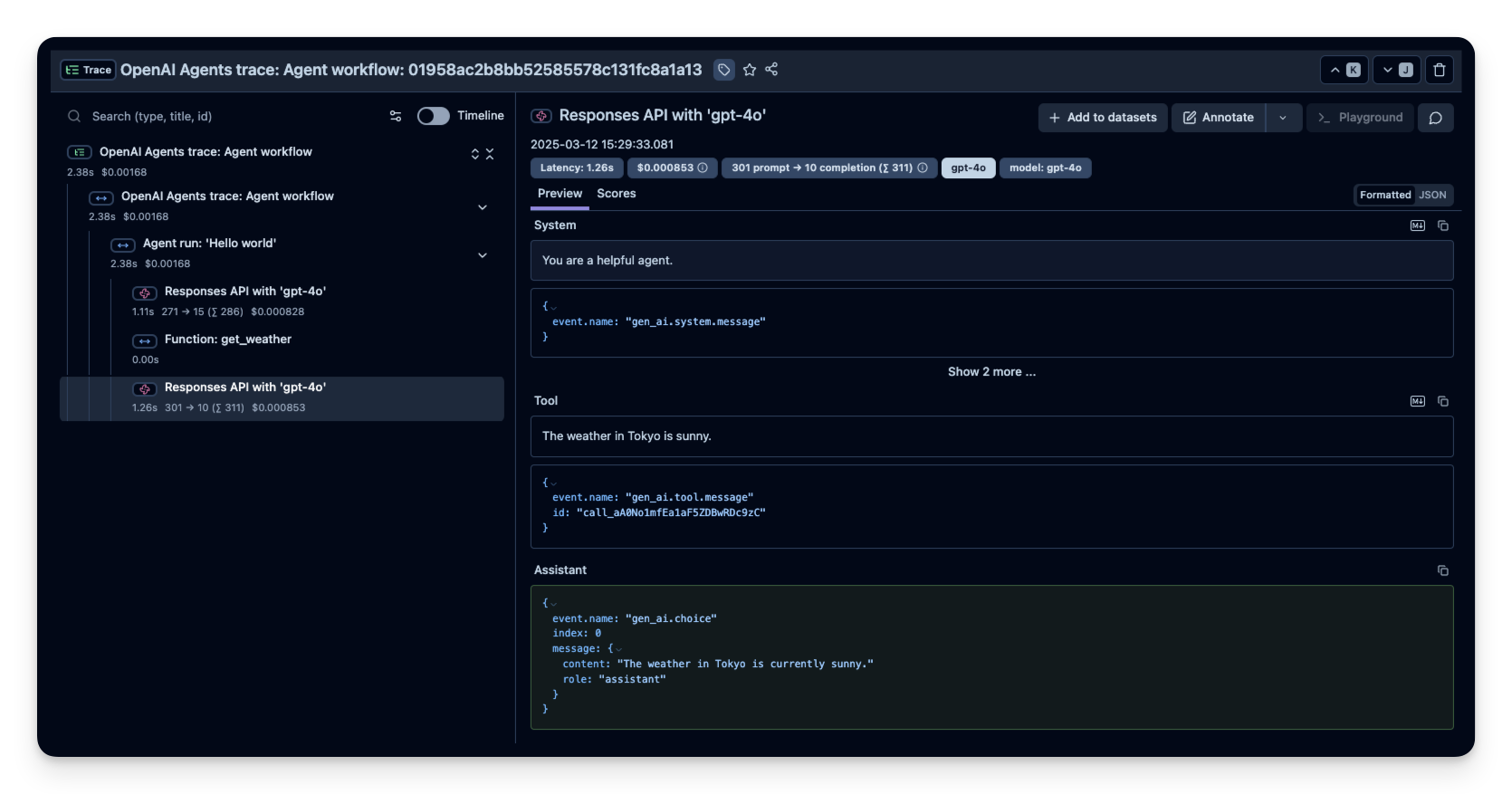

260 | | - "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/019593c74a162f93387d9261b01f9ca9?timestamp=2025-03-14T08%3A31%3A06.262Z&observation=0e2988966786cdf4)\n", |

| 259 | + "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/fee618f96dc31e0ca38b2f7b26eb8b29?timestamp=2025-09-30T09%3A03%3A59.962Z&observation=5b99c3e3411ed17b)\n", |

261 | 260 | "\n", |

262 | 261 | "When viewing the trace, you’ll see a span capturing the function call `get_weather` and the arguments passed." |

263 | 262 | ] |

|

299 | 298 | "source": [ |

300 | 299 | "\n", |

301 | 300 | "\n", |

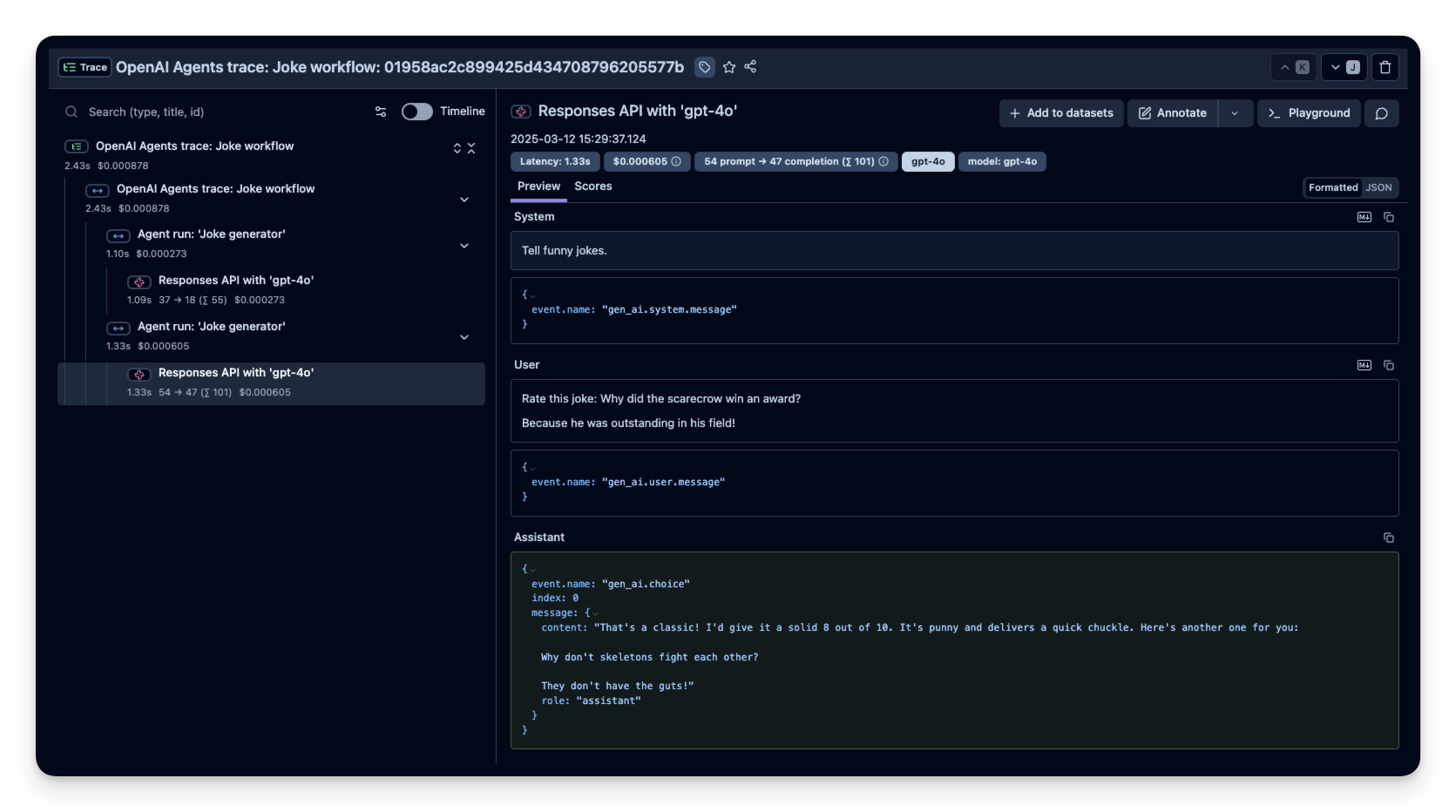

302 | | - "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/019593c7523686ff7667b85673d033bf?timestamp=2025-03-14T08%3A31%3A08.342Z&observation=d69e377f62b1d331)\n", |

| 301 | + "**Example**: [Langfuse Trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/afa1ae379b6c3a5333e2bab357f31cdc?timestamp=2025-09-30T09%3A04%3A06.678Z&observation=496efdc060a5399b)\n", |

303 | 302 | "\n", |

304 | 303 | "Each child call is represented as a sub-span under the top-level **Joke workflow** span, making it easy to see the entire conversation or sequence of calls." |

305 | 304 | ] |

|

325 | 324 | "source": [ |

326 | 325 | "from contextvars import ContextVar\n", |

327 | 326 | "from typing import Optional\n", |

328 | | - "from opentelemetry import context as context_api\n", |

| 327 | + "from opentelemetry import context as context_api, trace\n", |

| 328 | + "from opentelemetry.sdk.trace import TracerProvider\n", |

329 | 329 | "from opentelemetry.sdk.trace.export import Span, SpanProcessor\n", |

330 | 330 | "\n", |

331 | 331 | "prompt_info_var = ContextVar(\"prompt_info\", default=None)\n", |

332 | 332 | "\n", |

| 333 | + "# Set the prefix below to the name of the generation spans in your trace\n", |

333 | 334 | "class LangfuseProcessor(SpanProcessor):\n", |

334 | | - "    def on_start(\n", |

335 | | - "        self,\n", |

336 | | - "        span: 'Span',\n", |

337 | | - "        parent_context: Optional[context_api.Context] = None,\n", |

338 | | - "    ) -> None:\n", |

339 | | - "        if span.name.startswith('Responses API'): # The name of the generation spans in your OpenAI Agent trace \n", |

| 335 | + "    def on_start(self, span: \"Span\", parent_context: Optional[context_api.Context] = None) -> None:\n", |

| 336 | + "        if span.name.startswith(\"response\"):  # Name prefix of the generation spans in your trace\n", |

340 | 337 | "            prompt_info = prompt_info_var.get()\n", |

341 | 338 | "            if prompt_info:\n", |

342 | | - "                span.set_attribute('langfuse.prompt.name', prompt_info.get(\"name\"))\n", |

343 | | - "                span.set_attribute('langfuse.prompt.version', prompt_info.get(\"version\"))" |

| 339 | + "                span.set_attribute(\"langfuse.prompt.name\", prompt_info.get(\"name\"))\n", |

| 340 | + "                span.set_attribute(\"langfuse.prompt.version\", prompt_info.get(\"version\"))\n", |

| 341 | + "\n", |

| 342 | + "# 1) Set an SDK TracerProvider, then register your processor BEFORE instantiating Langfuse\n", |

| 343 | + "trace.set_tracer_provider(TracerProvider())\n", |

| 344 | + "trace.get_tracer_provider().add_span_processor(LangfuseProcessor())\n", |

| 345 | + "\n", |

| 346 | + "# 2) Now bring up Langfuse (it will attach its own span processor/exporter to the same provider)\n", |

| 347 | + "from langfuse import Langfuse, get_client\n", |

| 348 | + "langfuse = get_client()" |

344 | 349 | ] |

345 | 350 | }, |

346 | 351 | { |

|

349 | 354 | "metadata": {}, |

350 | 355 | "outputs": [], |

351 | 356 | "source": [ |

352 | | - "import logfire\n", |

353 | | - "from langfuse import get_client\n", |

354 | | - "\n", |

355 | | - "logfire.configure(\n", |

356 | | - " service_name='my_agent_service',\n", |

357 | | - " additional_span_processors=[LangfuseProcessor()], # Passing the LangfuseProcessor to the logfire configuration will automatically link the prompt to the trace\n", |

358 | | - " send_to_logfire=False,\n", |

359 | | - ")\n", |

| 357 | + "from openinference.instrumentation.openai_agents import OpenAIAgentsInstrumentor\n", |

360 | 358 | "\n", |

361 | | - "logfire.instrument_openai_agents()\n", |

362 | | - "langfuse = get_client()" |

| 359 | + "OpenAIAgentsInstrumentor().instrument()" |

363 | 360 | ] |

364 | 361 | }, |

365 | 362 | { |
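The cells above do not show where `prompt_info_var` gets populated. `LangfuseProcessor.on_start` only reads it, expecting a dict with `name` and `version` keys, so whatever sets it must be active while the generation spans start. One minimal sketch, assuming a hypothetical `use_prompt` helper (not part of Langfuse or the Agents SDK), scopes the value with `ContextVar.set`/`reset` so that spans created afterwards are not tagged. It uses only the standard library; `generation_step` is a hypothetical stand-in for the point where a generation span starts.

```python
import asyncio
from contextlib import contextmanager
from contextvars import ContextVar
from typing import Iterator, Optional, Tuple

# Same variable the LangfuseProcessor reads in on_start.
prompt_info_var = ContextVar("prompt_info", default=None)


@contextmanager
def use_prompt(name: str, version: int) -> Iterator[None]:
    """Hypothetical helper: expose prompt metadata to span processors within a block."""
    token = prompt_info_var.set({"name": name, "version": version})
    try:
        yield
    finally:
        # Restore the previous value so later, unrelated spans are not tagged.
        prompt_info_var.reset(token)


async def generation_step() -> Optional[dict]:
    # Stand-in for the moment a generation span starts and on_start reads the variable.
    return prompt_info_var.get()


async def main() -> Tuple[Optional[dict], Optional[dict]]:
    with use_prompt("movie-critic", 1):
        # asyncio tasks run in a copy of the context current at creation time,
        # so the task sees the prompt info set above.
        inside = await asyncio.create_task(generation_step())
    outside = await generation_step()
    return inside, outside


inside, outside = asyncio.run(main())
print(inside)   # {'name': 'movie-critic', 'version': 1}
print(outside)  # None
```

Scoping with a context manager keeps the prompt link tied to one agent run; setting the variable once at module level would instead tag every later `response` span with the same prompt.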

|
