TCP Connection behaviour / re-use between proxy and app #9381

-

|

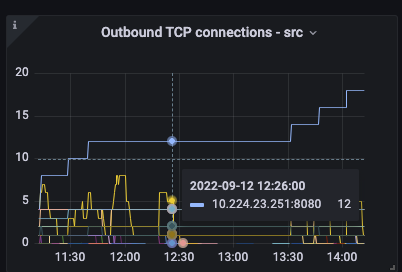

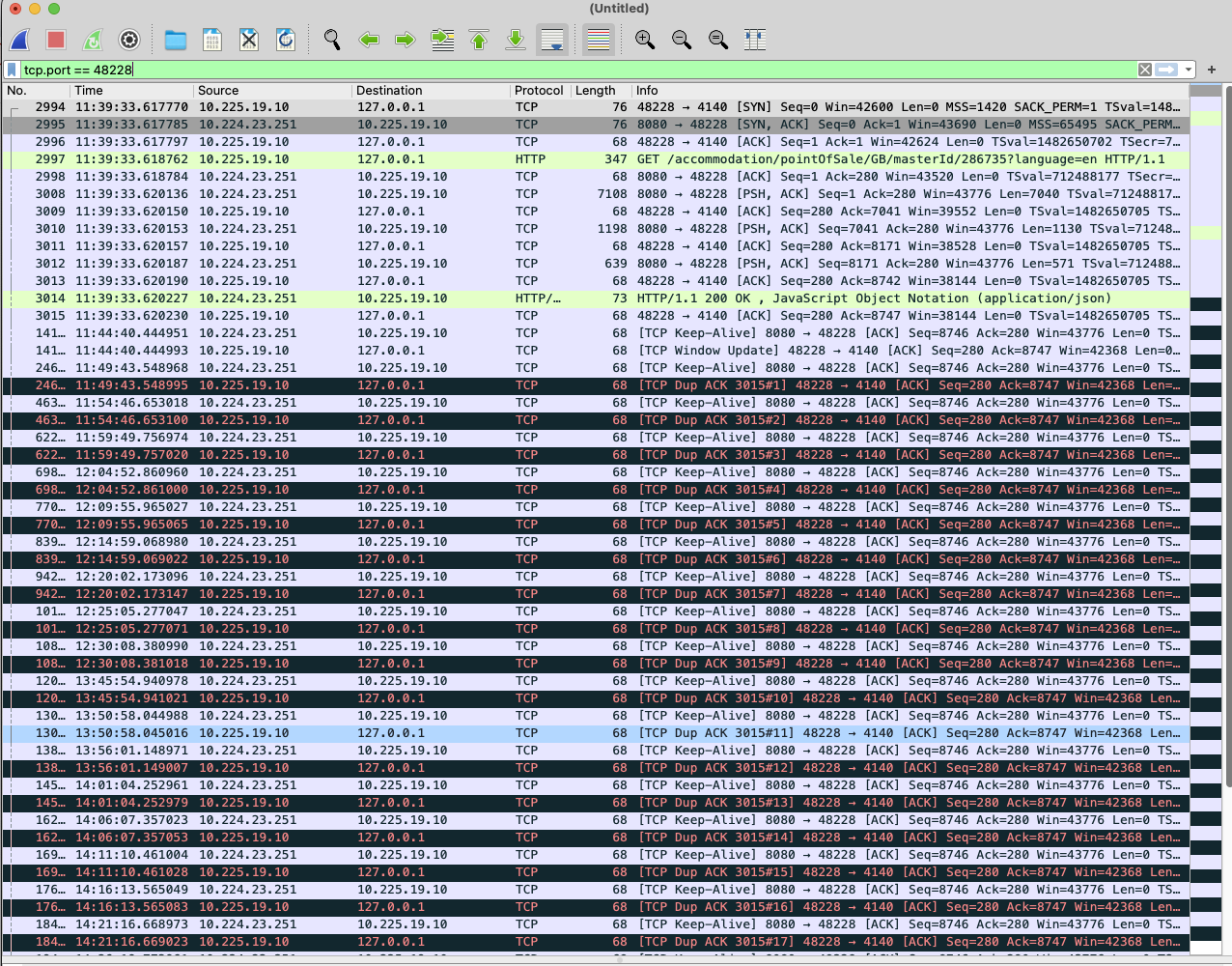

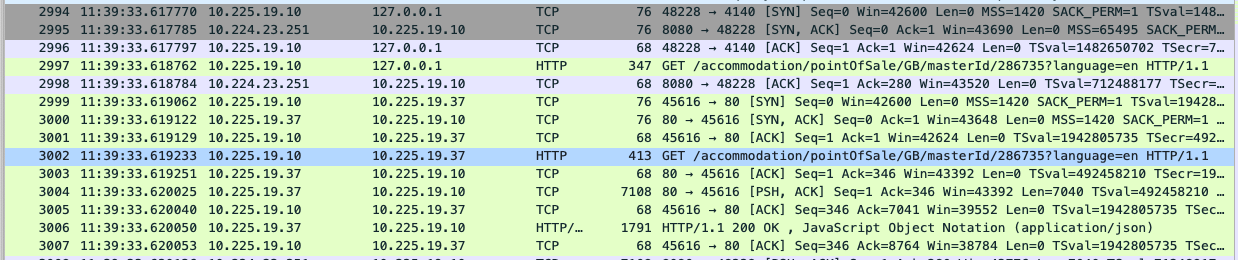

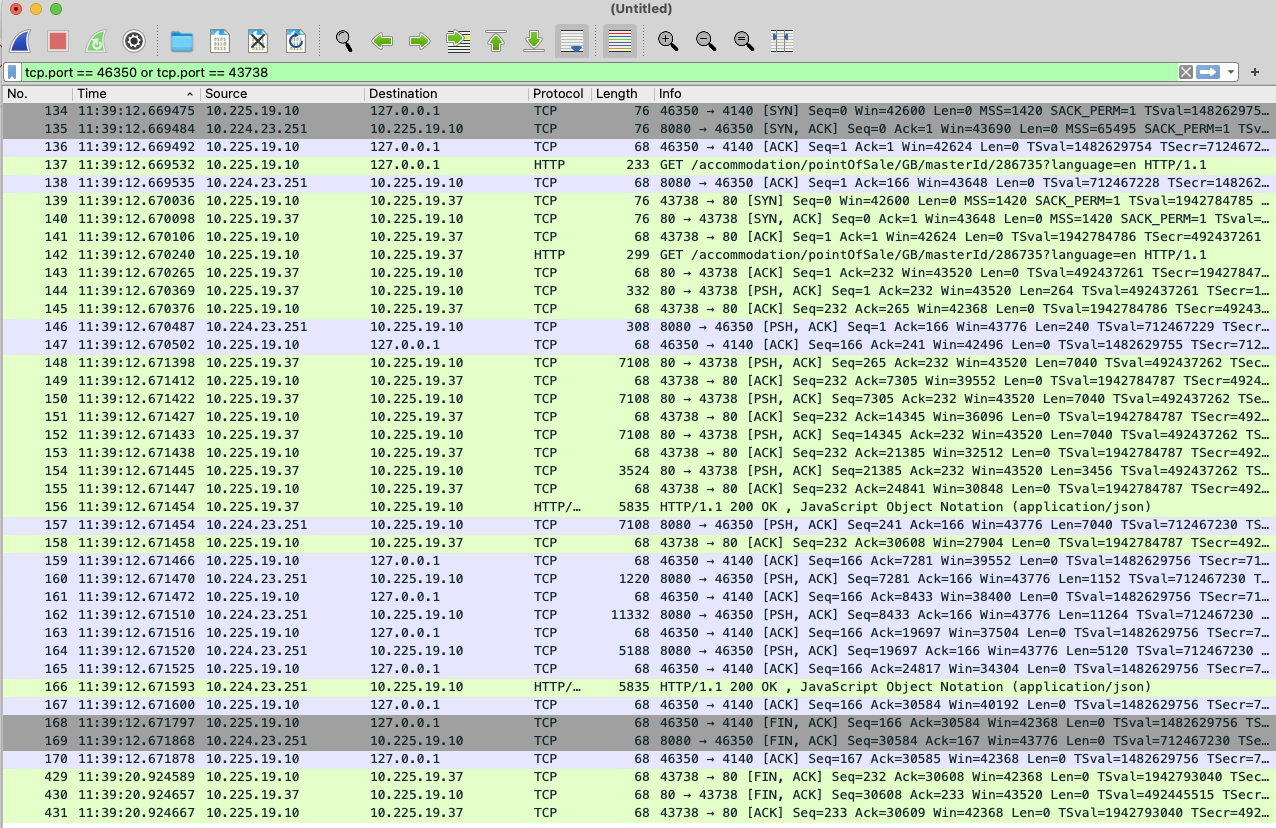

Hi all,

We have observed an issue with two applications onboarded into Linkerd (2.12.0) where the proxy is being OOMKilled because connections remain open. The receiving end of these requests is a ClusterIP service in front of a StatefulSet which, to simplify, runs nginx and returns JSON documents. Nothing complicated.

The metric for This connection count doesn't decrease until the pod is terminated. The value of In our staging environment the number of requests is very small, but in production we easily ramp up to over 2000 open connections in a short space of time before the proxy is OOMKilled.

We have a memory limit of 512 MB on the proxy. I could increase the memory, but this feels to me like an underlying problem, so I've unmeshed the application rather than raising the limit.

Using tcpdump / Wireshark, if we filter for a port used for an outgoing request, we can see that the connection doesn't terminate. In these images:

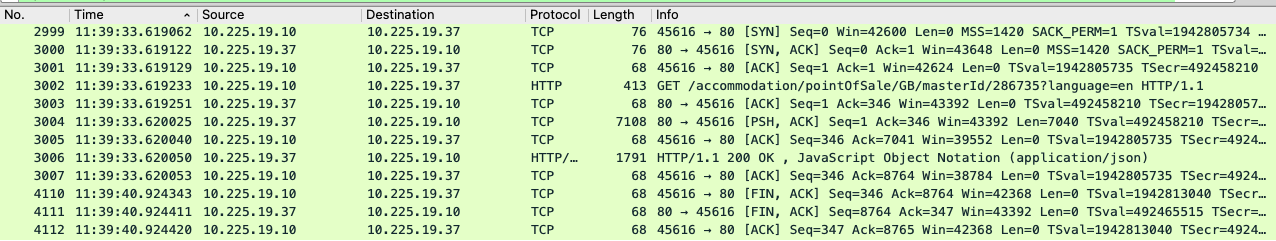

This first image shows packets for an outgoing port, 48228 (note: I stopped capturing with tcpdump between 12:30 and 13:45, then merged the .pcap files for this screenshot). The keep-alives every 5 minutes 3 seconds, supposedly from the ClusterIP service IP, are mighty curious!

From what I can tell, the traffic for this particular request goes application (48228) -> linkerd proxy (4140 in, 45616 out) -> target port 80 (is this understanding correct?).

The request from the proxy to the target app on port 45616 does terminate, which leaves the connection from the app to the proxy on port 48228 open. From what we have observed, this connection remains open until the pod terminates, which is how we end up with thousands of open connections.

Out of interest, I manually made a curl request to the same service / endpoint: both the connection to the proxy and the connection to the application terminate correctly.

This leads me to believe there is some sort of issue between whatever HTTP client / settings the Java application is using and the proxy (if I unmesh the application, the problem goes away). Before digging further, I wanted to verify that the former is not the expected behaviour, and that it's not just that I need to give the proxy more than 512 MB of memory. |
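For anyone wanting to watch the open-connection count without a packet capture, here is a minimal sketch that counts established TCP sockets on a given local port by parsing text in the Linux `/proc/net/tcp` layout. The function name `count_established` and the sample data are made up for illustration. Whether the app-to-proxy connections actually appear under local port 4140 in a given pod depends on how traffic is redirected there, so treat the port as an assumption to check.

```python
ESTABLISHED = "01"  # state code for ESTABLISHED in /proc/net/tcp


def count_established(proc_net_tcp: str, local_port: int) -> int:
    """Count ESTABLISHED sockets whose local port matches local_port.

    proc_net_tcp is the full text of a file in /proc/net/tcp format:
    one header line, then one socket per line with hex "ADDR:PORT" fields.
    """
    count = 0
    for line in proc_net_tcp.splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) < 4:
            continue  # ignore blank or truncated lines
        local_address, state = fields[1], fields[3]
        port = int(local_address.rsplit(":", 1)[1], 16)  # port is hexadecimal
        if state == ESTABLISHED and port == local_port:
            count += 1
    return count


# Hypothetical sample: two established sockets on port 4140 (0x102C),
# one listening socket on 4140, and one established socket on another port.
SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue
   0: 0100007F:102C 00000000:0000 0A 00000000:00000000
   1: 0100007F:102C 0100007F:BC64 01 00000000:00000000
   2: 0100007F:102C 0100007F:BC66 01 00000000:00000000
   3: 0100007F:BC64 0100007F:102C 01 00000000:00000000
"""
```

Inside the pod's network namespace this could be called as `count_established(open("/proc/net/tcp").read(), 4140)` and polled over time. A count that only ever grows would match the behaviour described above.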


Replies: 3 comments 2 replies

-

|

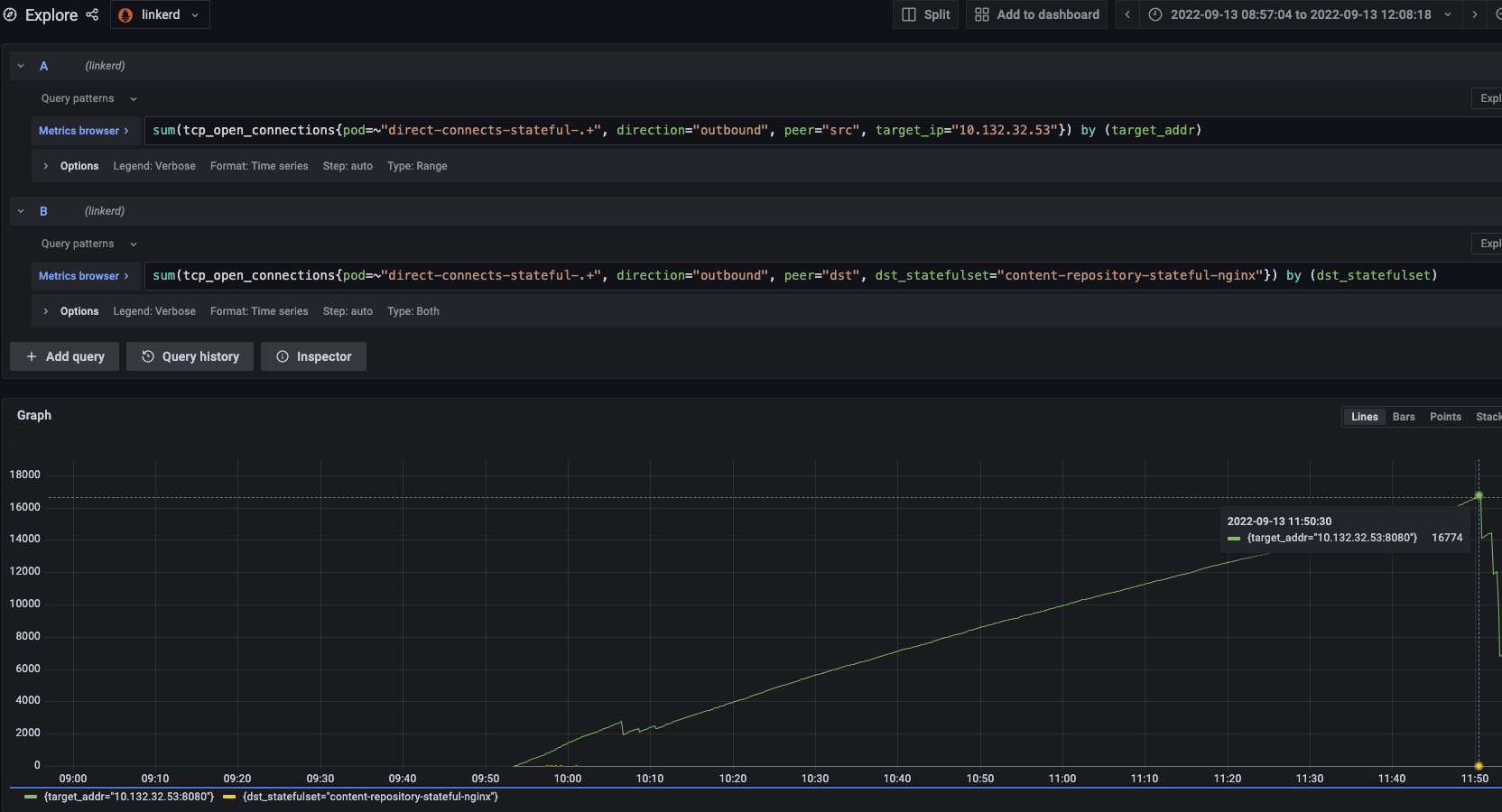

I re-onboarded the application yesterday and left it running for about three hours to monitor it. The graph shows the connection difference between src and dst to the same IP. After 3 hours we were hitting ~16k connections in total, roughly 3k connections per pod. You can see that the connection from the app to the proxy never closes and the count never decreases.

Our application is a Java app using the Reactive WebClient based on Reactor Netty.

This does look to be an issue stemming from the Java app, but the problem only occurs with Linkerd present. The application doesn't retain connections when unmeshed. Short of digging through parameters for Reactor Netty / WebClient (not something I'm familiar with), I'm out of ideas on how to make progress with this. Any thoughts, suggestions or even just confirmation that this isn't expected behaviour? |


-

|

Hi all.

We are still seeing this issue with connections leaking between the application and the proxy. A colleague has been attempting to reproduce this outside of the above application, to rule out Java idiosyncrasies. We have reproduced a few scenarios where we can leak connections using just a Python script and an nginx server, but before sharing I would appreciate it if someone could explain or confirm the exact, expected flow of traffic between app <> proxy <> server. My understanding is:

We are observing up to #4, where connections to/from the target server FIN correctly, but the connection to the application remains open indefinitely. What is supposed to happen to close this connection? |


-

|

Hi all,

As a follow-up, we finally got to the bottom of this. We have Java apps using Reactor Netty and otel-agent version 1.9.0. It turns out there is a bug in otel-agent that caused a new client pool to be opened with every request, essentially leaving all TCP connections open permanently. The fix was simply to upgrade otel-agent: open-telemetry/opentelemetry-java-instrumentation#4862 |
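To illustrate why a new client pool per request shows up as an ever-growing connection count on the receiving side, here is a rough analogy using plain Python sockets. It is not the otel-agent or Reactor Netty code, and `CountingServer`, `leaky_requests` and `pooled_requests` are hypothetical names. The local echo server only stands in for whatever accepts the application's connections.

```python
import socket
import threading


class CountingServer:
    """Local echo server that tracks how many client connections are open."""

    def __init__(self):
        self._listener = socket.create_server(("127.0.0.1", 0))
        self.port = self._listener.getsockname()[1]
        self._open = set()
        self._lock = threading.Lock()
        threading.Thread(target=self._accept_loop, daemon=True).start()

    def open_connections(self) -> int:
        with self._lock:
            return len(self._open)

    def _accept_loop(self):
        while True:
            conn, _ = self._listener.accept()
            with self._lock:
                self._open.add(conn)  # registered before any reply is sent
            threading.Thread(target=self._echo, args=(conn,), daemon=True).start()

    def _echo(self, conn):
        with conn:
            while data := conn.recv(1024):  # empty bytes means the peer closed
                conn.sendall(data)
        with self._lock:
            self._open.discard(conn)


def _request(sock):
    sock.sendall(b"ping")
    return sock.recv(1024)


def leaky_requests(port, n):
    """Open a fresh connection for every request and never close it."""
    leaked = []
    for _ in range(n):
        sock = socket.create_connection(("127.0.0.1", port))
        _request(sock)
        leaked.append(sock)  # still referenced, so it stays open
    return leaked


def pooled_requests(port, n):
    """Send every request over one long-lived connection."""
    sock = socket.create_connection(("127.0.0.1", port))
    for _ in range(n):
        _request(sock)
    return sock
```

With this sketch, the leaky variant leaves one open connection on the server per request, while the pooled variant holds a single connection no matter how many requests are sent. That is the same shape as the per-pod connection growth reported earlier in the thread.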
