
| 1 | +# Exercise 6: Image translation - Part 1 |

| 2 | + |

| 3 | +This demo script was developed for the DL@MBL 2024 course by Eduardo Hirata-Miyasaki, Ziwen Liu, and Shalin Mehta, with substantial input and bug fixes from [Morgan Schwartz](https://github.com/msschwartz21), [Caroline Malin-Mayor](https://github.com/cmalinmayor), and [Peter Park](https://github.com/peterhpark). |

| 4 | + |

| 5 | + |

| 6 | +# Image translation (Virtual Staining) |

| 7 | + |

| 8 | +Written by Eduardo Hirata-Miyasaki, Ziwen Liu, and Shalin Mehta, CZ Biohub San Francisco. |

| 9 | + |

| 10 | +## Overview |

| 11 | + |

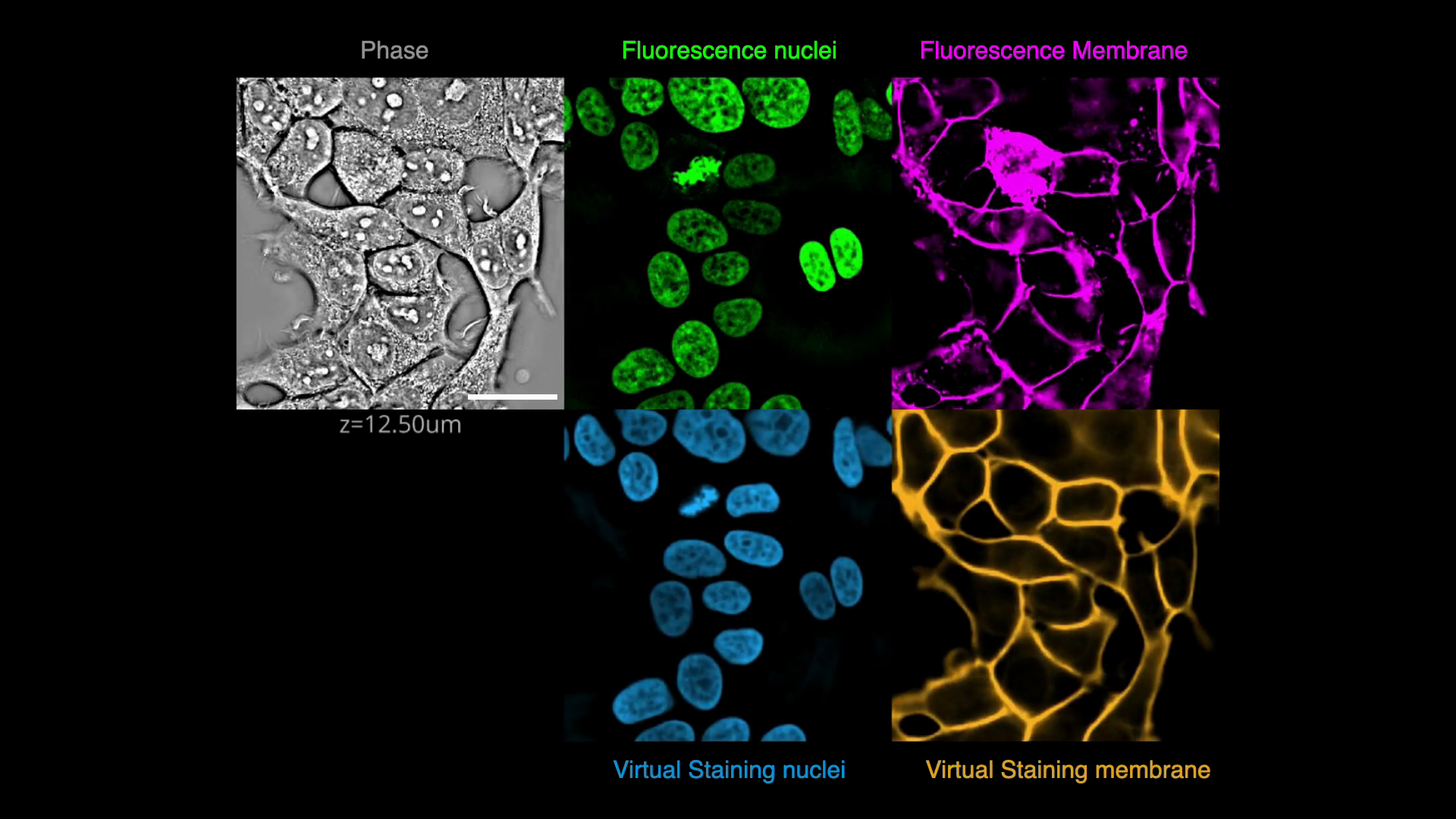

| 12 | +In this exercise, we will predict fluorescence images of nuclei and plasma membrane markers from quantitative phase images of cells, i.e., we will _virtually stain_ the nuclei and plasma membrane visible in the phase image. |

| 13 | +This is an example of an image translation task. We will apply spatial and intensity augmentations to train robust models and evaluate their performance. Finally, we will explore the opposite process of predicting a phase image from a fluorescence membrane label. |

| 14 | + |

| 15 | +[](https://github.com/mehta-lab/VisCy/assets/67518483/d53a81eb-eb37-44f3-b522-8bd7bddc7755) |

| 16 | +(Click on image to play video) |

| 17 | + |

| 18 | +## Goals |

| 19 | + |

| 20 | +### Part 1: Learn to use iohub (I/O library), VisCy dataloaders, and TensorBoard. |

| 21 | + |

| 22 | + - Use an OME-Zarr dataset of 34 FOVs of adenocarcinomic human alveolar basal epithelial cells (A549). |

| 23 | + Each FOV has 3 channels (phase, nuclei, and cell membrane). |

| 24 | + The nuclei were stained with DAPI and the cell membrane with CellMask. |

| 25 | + - Explore OME-Zarr using [iohub](https://czbiohub-sf.github.io/iohub/main/index.html) |

| 26 | + and the high-content-screen (HCS) format. |

| 27 | + - Use [MONAI](https://monai.io/) to implement data augmentations. |
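
To make the idea of spatial and intensity augmentations concrete, here is a minimal sketch in plain NumPy (deliberately not MONAI, so it runs outside the course environment). The function name `augment_pair`, the flip probability, and the scale and noise ranges are illustrative assumptions, not values taken from the exercise. The sketch also assumes that intensity changes are applied to the phase input only, while the spatial flip is applied to both images so that input and target stay aligned.

```python
import numpy as np


def augment_pair(phase, fluorescence, rng):
    """Apply one random augmentation to a (phase, fluorescence) image pair.

    Hypothetical helper for illustration; not part of VisCy or MONAI.
    """
    # Spatial augmentation: the same flip goes to both images so that
    # every pixel of the input still lines up with its target.
    if rng.random() < 0.5:
        phase = np.flip(phase, axis=-1)
        fluorescence = np.flip(fluorescence, axis=-1)

    # Intensity augmentation: rescale and add Gaussian noise to the input.
    # Assumption: the fluorescence target is left untouched.
    scale = rng.uniform(0.8, 1.2)
    noise = rng.normal(0.0, 0.01, size=phase.shape)
    phase = phase * scale + noise
    return phase, fluorescence


# Synthetic stand-ins for one phase image and one fluorescence image.
rng = np.random.default_rng(seed=0)
phase = rng.normal(size=(64, 64))
fluorescence = rng.random(size=(64, 64))

aug_phase, aug_fluorescence = augment_pair(phase, fluorescence, rng)
print(aug_phase.shape, aug_fluorescence.shape)
```

In the exercise itself, the equivalent transforms come from MONAI and operate on dictionaries of named channels; the point of the sketch is only the pairing logic.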

| 28 | + |

| 29 | +### Part 2: Train and evaluate the model to translate phase into fluorescence, and vice versa. |

| 30 | + - Train a 2D UNeXt2 model to predict nuclei and membrane from phase images. |

| 31 | + - Compare the performance of the trained model and a pre-trained model. |

| 32 | + - Evaluate the model using pixel-level and instance-level metrics. |
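
As a rough illustration of a pixel-level metric, the sketch below computes the Pearson correlation between a predicted and a target image using NumPy. This is one common choice and an assumption on our part; this README does not state which metrics the exercise uses. The function name `pearson_correlation` and the synthetic images are hypothetical. Instance-level metrics, which compare segmented objects rather than pixels, are not covered here.

```python
import numpy as np


def pearson_correlation(prediction, target):
    """Pearson correlation between two images, computed over all pixels."""
    p = prediction.ravel().astype(np.float64)
    t = target.ravel().astype(np.float64)
    # Center both images so the result is insensitive to intensity offsets.
    p = p - p.mean()
    t = t - t.mean()
    denominator = np.sqrt((p**2).sum() * (t**2).sum())
    # The correlation is undefined when either image is constant.
    if denominator == 0:
        return float("nan")
    return float((p * t).sum() / denominator)


# Synthetic stand-ins: a target image and a noisy "prediction" of it.
rng = np.random.default_rng(seed=0)
target = rng.random(size=(64, 64))
noisy_prediction = target + rng.normal(0.0, 0.1, size=target.shape)

print(pearson_correlation(target, target))
print(pearson_correlation(noisy_prediction, target))
```

A perfect prediction scores 1, and the score drops as the prediction deviates from the target. Because the images are centered and normalized, the metric ignores global offsets and scaling, which is useful when predicted and measured fluorescence are on different intensity scales.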

| 33 | + |

| 34 | + |

| 35 | +Check out [VisCy](https://github.com/mehta-lab/VisCy/tree/main/examples/demos), |

| 36 | +our deep learning pipeline for training and deploying computer vision models |

| 37 | +for image-based phenotyping, including the robust virtual staining of landmark organelles. |

| 38 | +VisCy exploits recent advances in data and metadata formats |

| 39 | +([OME-Zarr](https://www.nature.com/articles/s41592-021-01326-w)) and DL frameworks, |

| 40 | +[PyTorch Lightning](https://lightning.ai/) and [MONAI](https://monai.io/). |

| 41 | + |

| 42 | +## Setup |

| 43 | + |

| 44 | +Make sure that you are inside the `image_translation` folder; use the `cd` command to change directories if needed. |

| 45 | + |

| 46 | +Make sure that you can use conda to switch environments. |

| 47 | + |

| 48 | +```bash |

| 49 | +conda init |

| 50 | +``` |

| 51 | + |

| 52 | +**Close your shell and log in again.** |

| 53 | + |

| 54 | +Run the setup script to create the environment for this exercise and download the dataset. |

| 55 | +```bash |

| 56 | +sh setup.sh |

| 57 | +``` |

| 58 | +Activate your environment: |

| 59 | +```bash |

| 60 | +conda activate 06_image_translation |

| 61 | +``` |

| 62 | + |

| 63 | +## Use VS Code |

| 64 | + |

| 65 | +Install VS Code, install the Jupyter extension inside VS Code, and set up [cell mode](https://code.visualstudio.com/docs/python/jupyter-support-py). Then open [solution.py](solution.py) and run the script interactively. |

| 66 | + |

| 67 | +## Use Jupyter Notebook |

| 68 | + |

| 69 | +The matching exercise and solution notebooks are available in the [course repository](https://github.com/dlmbl/image_translation/tree/28e0e515b4a8ad3f392a69c8341e105f730d204f). |

| 70 | + |

| 71 | +Launch a Jupyter environment: |

| 72 | + |

| 73 | +```bash |

| 74 | +jupyter notebook |

| 75 | +``` |

| 76 | + |

| 77 | +...and continue with the instructions in the notebook. |

| 78 | + |

| 79 | +If `06_image_translation` is not available as a kernel in Jupyter, run: |

| 80 | + |

| 81 | +```bash |

| 82 | +python -m ipykernel install --user --name=06_image_translation |

| 83 | +``` |

| 84 | + |

| 85 | +### References |

| 86 | + |

| 87 | +- [Liu, Z. and Hirata-Miyasaki, E. et al. (2024) Robust Virtual Staining of Cellular Landmarks](https://www.biorxiv.org/content/10.1101/2024.05.31.596901v2.full.pdf) |

| 88 | +- [Guo et al. (2020) Revealing architectural order with quantitative label-free imaging and deep learning. eLife](https://elifesciences.org/articles/55502) |
