You signed in with another tab or window. Reload to refresh your session.You signed out in another tab or window. Reload to refresh your session.You switched accounts on another tab or window. Reload to refresh your session.Dismiss alert

Copy file name to clipboardExpand all lines: 1-Introduction/01-defining-data-science/README.md

+2-2Lines changed: 2 additions & 2 deletions

Display the source diff

Display the rich diff

Original file line number

Diff line number

Diff line change

@@ -67,11 +67,11 @@ Vast amounts of data are incomprehensible for a human being, but once we create

67

67

68

68

## Types of Data

69

69

70

-

As we have already mentioned, data is everywhere. We just need to capture it in the right way! It is useful to distinguish between **structured** and **unstructured** data. The former is typically represented in some well-structured form, often as a table or number of tables, while the latter is just a collection of files. Sometimes we can also talk about **semistructured** data, that have some sort of a structure that may vary greatly.

70

+

As we have already mentioned, data is everywhere. We just need to capture it in the right way! It is useful to distinguish between **structured** and **unstructured** data. The former is typically represented in some well-structured form, often as a table or number of tables, while the latter is just a collection of files. Sometimes we can also talk about **semi-structured** data, that have some sort of a structure that may vary greatly.

| List of people with their phone numbers | Wikipedia pages with links | Text of Encyclopaedia Britannica |

74

+

| List of people with their phone numbers | Wikipedia pages with links | Text of Encyclopedia Britannica |

75

75

| Temperature in all rooms of a building at every minute for the last 20 years | Collection of scientific papers in JSON format with authors, data of publication, and abstract | File share with corporate documents |

76

76

| Data for age and gender of all people entering the building | Internet pages | Raw video feed from surveillance camera |

Copy file name to clipboardExpand all lines: 1-Introduction/02-ethics/README.md

+2-2Lines changed: 2 additions & 2 deletions

Display the source diff

Display the rich diff

Original file line number

Diff line number

Diff line change

@@ -12,7 +12,7 @@ Market trends tell us that by 2022, 1-in-3 large organizations will buy and sell

12

12

13

13

Trends also indicate that we will create and consume over [180 zettabytes](https://www.statista.com/statistics/871513/worldwide-data-created/) of data by 2025. As **Data Scientists**, this gives us unprecedented levels of access to personal data. This means we can build behavioral profiles of users and influence decision-making in ways that create an [illusion of free choice](https://www.datasciencecentral.com/profiles/blogs/the-illusion-of-choice) while potentially nudging users towards outcomes we prefer. It also raises broader questions on data privacy and user protections.

14

14

15

-

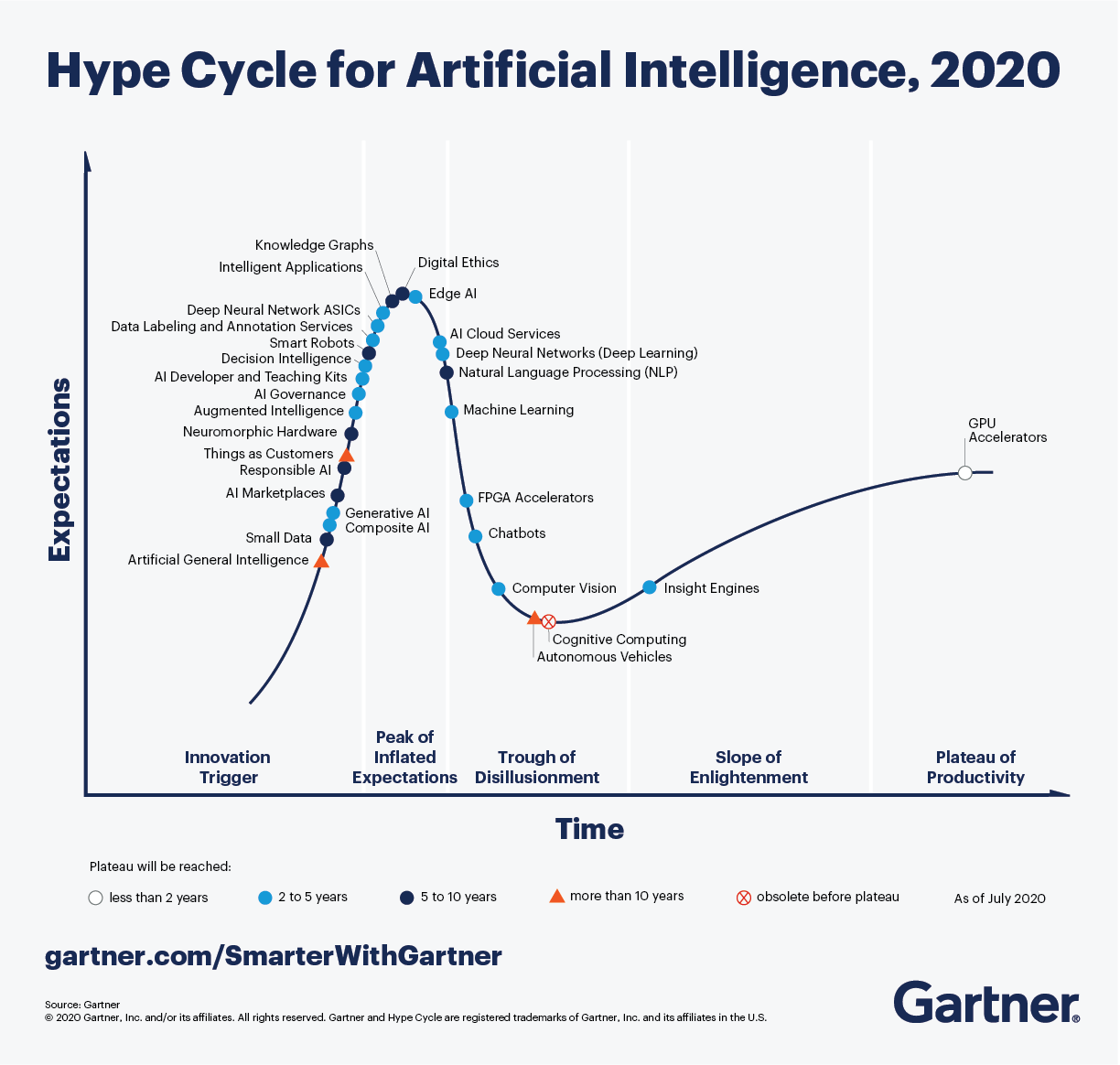

Data ethics are now _necessary guardrails_ for data science and engineering, helping us minimize potential harms and unintended consequences from our data-driven actions. The [Gartner Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/) identifies relevant trends in digital ethics, responsible AI ,and AI governances as key drivers for larger megatrends around _democratization_ and _industrialization_ of AI.

15

+

Data ethics are now _necessary guardrails_ for data science and engineering, helping us minimize potential harms and unintended consequences from our data-driven actions. The [Gartner Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/) identifies relevant trends in digital ethics, responsible AI ,and AI governance as key drivers for larger megatrends around _democratization_ and _industrialization_ of AI.

16

16

17

17

18

18

@@ -179,7 +179,7 @@ Here are a few examples:

179

179

180

180

| Ethics Challenge | Case Study |

181

181

|--- |--- |

182

-

|**Informed Consent**| 1972 - [Tuskegee Syphillis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) - African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died & partners or children were affected; the study lasted 40 years. |

182

+

|**Informed Consent**| 1972 - [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) - African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died & partners or children were affected; the study lasted 40 years. |

183

183

|**Data Privacy**| 2007 - The [Netflix data prize](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) provided researchers with _10M anonymized movie rankings from 50K customers_ to help improve recommendation algorithms. However, researchers were able to correlate anonymized data with personally-identifiable data in _external datasets_ (e.g., IMDb comments) - effectively "de-anonymizing" some Netflix subscribers.|

184

184

|**Collection Bias**| 2013 - The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. However, [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to _equitable access and digital divides_ issues for fairness. |

185

185

|**Algorithmic Fairness**| 2018 - The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. A [2019 Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) seemed to offer less credit to women than men. Both illustrated issues in algorithmic bias leading to socio-economic harms.|

Copy file name to clipboardExpand all lines: 1-Introduction/04-stats-and-probability/README.md

+1-1Lines changed: 1 addition & 1 deletion

Display the source diff

Display the rich diff

Original file line number

Diff line number

Diff line change

@@ -250,7 +250,7 @@ Use the sample code in the notebook to test other hypothesis that:

250

250

251

251

Probability and statistics is such a broad topic that it deserves its own course. If you are interested to go deeper into theory, you may want to continue reading some of the following books:

252

252

253

-

1.[Carlos Fernanderz-Granda](https://cims.nyu.edu/~cfgranda/) from New York University has great lecture notes [Probability and Statistics for Data Science](https://cims.nyu.edu/~cfgranda/pages/stuff/probability_stats_for_DS.pdf) (available online)

253

+

1.[Carlos Fernandez-Granda](https://cims.nyu.edu/~cfgranda/) from New York University has great lecture notes [Probability and Statistics for Data Science](https://cims.nyu.edu/~cfgranda/pages/stuff/probability_stats_for_DS.pdf) (available online)

254

254

1.[Peter and Andrew Bruce. Practical Statistics for Data Scientists.](https://www.oreilly.com/library/view/practical-statistics-for/9781491952955/)[[sample code in R](https://github.com/andrewgbruce/statistics-for-data-scientists)].

255

255

1.[James D. Miller. Statistics for Data Science](https://www.packtpub.com/product/statistics-for-data-science/9781788290678)[[sample code in R](https://github.com/PacktPublishing/Statistics-for-Data-Science)]

0 commit comments