From 6570a0503968c647b2f4b9269ae7502b230abe14 Mon Sep 17 00:00:00 2001

From: pandora-s-git <128635000+pandora-s-git@users.noreply.github.com>

Date: Tue, 14 Oct 2025 12:19:17 +0000

Subject: [PATCH] chore(docs): auto-update llms.txt & llms-full.txt

---

static/llms-full.txt | 179 +++++++++++++------------------------------

static/llms.txt | 98 +++++++++++------------

2 files changed, 101 insertions(+), 176 deletions(-)

diff --git a/static/llms-full.txt b/static/llms-full.txt

index 5b9f435..a54b343 100644

--- a/static/llms-full.txt

+++ b/static/llms-full.txt

@@ -3117,7 +3117,7 @@ Now that we have our document library agent ready, we can search them on demand

```py

response = client.beta.conversations.start(

- agent_id=image_agent.id,

+ agent_id=library_agent.id,

inputs="How does the vision encoder for pixtral 12b work"

)

```

@@ -11711,11 +11711,11 @@ Currently we have two reasoning models:

- `magistral-medium-latest`: Our more powerful reasoning model balancing performance and cost.

:::info

-Currently, `-latest` points to `-2507`, our most recent version of our reasoning models. If you were previously using `-2506`, a **migration** regarding the thinking chunks is required.

-- `-2507` **(new)**: Uses tokenized thinking chunks via control tokens, providing the thinking traces in different types of content chunks.

+Currently, `-latest` points to `-2509`, our most recent version of our reasoning models. If you were previously using `-2506`, a **migration** regarding the thinking chunks is required.

+- `-2507` & `-2509` **(new)**: Uses tokenized thinking chunks via control tokens, providing the thinking traces in different types of content chunks.

- `-2506` **(old)**: Used `<think>\n` and `\n</think>\n` tags as strings to encapsulate the thinking traces for input and output within the same content type.

-

+

```json

[

{

@@ -11794,7 +11794,33 @@ To have the best performance out of our models, we recommend having the followin

System Prompt

-

+

+```json

+{

+ "role": "system",

+ "content": [

+ {

+ "type": "text",

+ "text": "# HOW YOU SHOULD THINK AND ANSWER\n\nFirst draft your thinking process (inner monologue) until you arrive at a response. Format your response using Markdown, and use LaTeX for any mathematical equations. Write both your thoughts and the response in the same language as the input.\n\nYour thinking process must follow the template below:"

+ },

+ {

+ "type": "thinking",

+ "thinking": [

+ {

+ "type": "text",

+ "text": "Your thoughts or/and draft, like working through an exercise on scratch paper. Be as casual and as long as you want until you are confident to generate the response to the user."

+ }

+ ]

+ },

+ {

+ "type": "text",

+ "text": "Here, provide a self-contained response."

+ }

+ ]

+}

+```

+

+

```json

{

"role": "system",

@@ -11915,7 +11941,7 @@ curl --location "https://api.mistral.ai/v1/chat/completions" \

-

+

The output of the model will include different chunks of content, but mostly a `thinking` type with the reasoning traces and a `text` type with the answer like so:

```json

"content": [

@@ -13101,10 +13127,12 @@ Vision capabilities enable models to analyze images and provide insights based o

For more specific use cases regarding document parsing and data extraction we recommend taking a look at our Document AI stack [here](../document_ai/document_ai_overview).

## Models with Vision Capabilities:

+- Mistral Medium 3.1 2508 (`mistral-medium-latest`)

+- Mistral Small 3.2 2506 (`mistral-small-latest`)

+- Magistral Small 1.2 2509 (`magistral-small-latest`)

+- Magistral Medium 1.2 2509 (`magistral-medium-latest`)

- Pixtral 12B (`pixtral-12b-latest`)

- Pixtral Large 2411 (`pixtral-large-latest`)

-- Mistral Medium 2505 (`mistral-medium-latest`)

-- Mistral Small 2503 (`mistral-small-latest`)

## Passing an Image URL

If the image is hosted online, you can simply provide the URL of the image in the request. This method is straightforward and does not require any encoding.

@@ -13136,7 +13164,7 @@ messages = [

},

{

"type": "image_url",

- "image_url": "https://tripfixers.com/wp-content/uploads/2019/11/eiffel-tower-with-snow.jpeg"

+ "image_url": "https://docs.mistral.ai/img/eiffel-tower-paris.jpg"

}

]

}

@@ -13171,7 +13199,7 @@ const chatResponse = await client.chat.complete({

{ type: "text", text: "What's in this image?" },

{

type: "image_url",

- imageUrl: "https://tripfixers.com/wp-content/uploads/2019/11/eiffel-tower-with-snow.jpeg",

+ imageUrl: "https://docs.mistral.ai/img/eiffel-tower-paris.jpg",

},

],

},

@@ -13200,7 +13228,7 @@ curl https://api.mistral.ai/v1/chat/completions \

},

{

"type": "image_url",

- "image_url": "https://tripfixers.com/wp-content/uploads/2019/11/eiffel-tower-with-snow.jpeg"

+ "image_url": "https://docs.mistral.ai/img/eiffel-tower-paris.jpg"

}

]

}

@@ -13394,51 +13422,6 @@ The chart is a bar chart titled 'France's Social Divide,' comparing socio-econom

-

-Compare images

-

-

-

-

-

-```bash

-curl https://api.mistral.ai/v1/chat/completions \

- -H "Content-Type: application/json" \

- -H "Authorization: Bearer $MISTRAL_API_KEY" \

- -d '{

- "model": "pixtral-12b-2409",

- "messages": [

- {

- "role": "user",

- "content": [

- {

- "type": "text",

- "text": "what are the differences between two images?"

- },

- {

- "type": "image_url",

- "image_url": "https://tripfixers.com/wp-content/uploads/2019/11/eiffel-tower-with-snow.jpeg"

- },

- {

- "type": "image_url",

- "image_url": {

- "url": "https://assets.visitorscoverage.com/production/wp-content/uploads/2024/04/AdobeStock_626542468-min-1024x683.jpeg"

- }

- }

- ]

- }

- ],

- "max_tokens": 300

- }'

-```

-

-Model output:

-```

-The first image features the Eiffel Tower surrounded by snow-covered trees and pathways, with a clear view of the tower's intricate iron lattice structure. The second image shows the Eiffel Tower in the background of a large, outdoor stadium filled with spectators, with a red tennis court in the center. The most notable differences are the setting - one is a winter scene with snow, while the other is a summer scene with a crowd at a sporting event. The mood of the first image is serene and quiet, whereas the second image conveys a lively and energetic atmosphere. These differences highlight the versatility of the Eiffel Tower as a landmark that can be enjoyed in various contexts and seasons.

-```

-

-

-

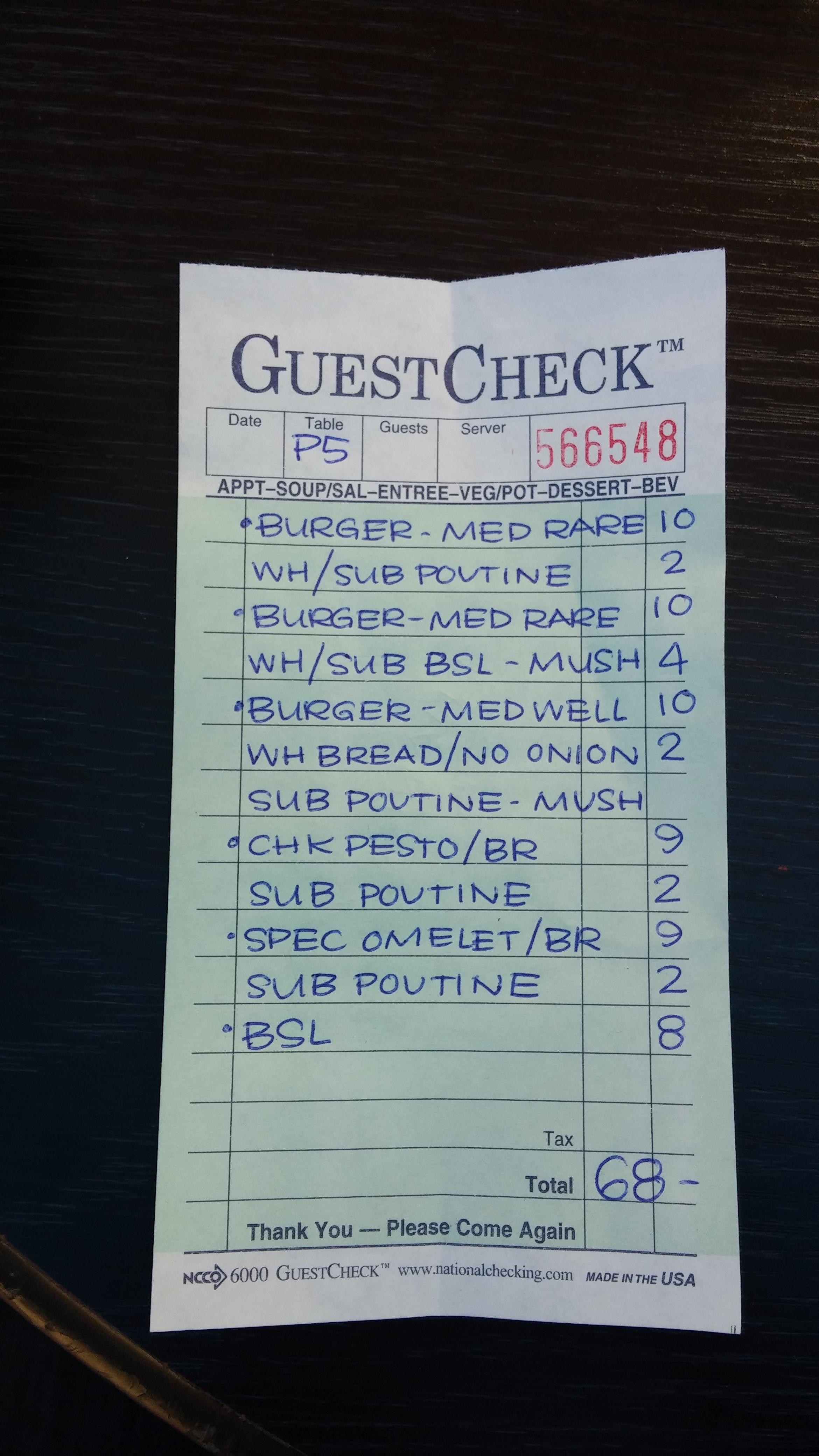

Transcribe receipts

@@ -13514,68 +13497,6 @@ Model output:

-

-OCR with structured output

-

-

-

-```bash

-curl https://api.mistral.ai/v1/chat/completions \

- -H "Content-Type: application/json" \

- -H "Authorization: Bearer $MISTRAL_API_KEY" \

- -d '{

- "model": "pixtral-12b-2409",

- "messages": [

- {

- "role": "system",

- "content": [

- {"type": "text",

- "text" : "Extract the text elements described by the user from the picture, and return the result formatted as a json in the following format : {name_of_element : [value]}"

- }

- ]

- },

- {

- "role": "user",

- "content": [

- {

- "type": "text",

- "text": "From this restaurant bill, extract the bill number, item names and associated prices, and total price and return it as a string in a Json object"

- },

- {

- "type": "image_url",

- "image_url": "https://i.imghippo.com/files/kgXi81726851246.jpg"

- }

- ]

- }

- ],

- "response_format":

- {

- "type": "json_object"

- }

- }'

-

-```

-

-Model output:

-```json

-{'bill_number': '566548',

- 'items': [{'item_name': 'BURGER - MED RARE', 'price': 10},

- {'item_name': 'WH/SUB POUTINE', 'price': 2},

- {'item_name': 'BURGER - MED RARE', 'price': 10},

- {'item_name': 'WH/SUB BSL - MUSH', 'price': 4},

- {'item_name': 'BURGER - MED WELL', 'price': 10},

- {'item_name': 'WH BREAD/NO ONION', 'price': 2},

- {'item_name': 'SUB POUTINE - MUSH', 'price': 2},

- {'item_name': 'CHK PESTO/BR', 'price': 9},

- {'item_name': 'SUB POUTINE', 'price': 2},

- {'item_name': 'SPEC OMELET/BR', 'price': 9},

- {'item_name': 'SUB POUTINE', 'price': 2},

- {'item_name': 'BSL', 'price': 8}],

- 'total_price': 68}

-```

-

-

-

## FAQ

- **What is the price per image?**

@@ -13588,6 +13509,8 @@ Model output:

| Model | Max Resolution | ≈ Formula | ≈ N Max Tokens |

| - | - | - | - |

+ | Magistral Medium 1.2 | 1540x1540 | `≈ (ResolutionX * ResolutionY) / 784` | ≈ 3025 |

+ | Magistral Small 1.2 | 1540x1540 | `≈ (ResolutionX * ResolutionY) / 784` | ≈ 3025 |

| Mistral Small 3.2 | 1540x1540 | `≈ (ResolutionX * ResolutionY) / 784` | ≈ 3025 |

| Mistral Medium 3 | 1540x1540 | `≈ (ResolutionX * ResolutionY) / 784` | ≈ 3025 |

| Mistral Small 3.1 | 1540x1540 | `≈ (ResolutionX * ResolutionY) / 784` | ≈ 3025 |

@@ -14601,7 +14524,7 @@ We offer two types of rate limits:

Key points to note:

-- Rate limits are set at the workspace level.

+- Rate limits are set at the organization level.

- Limits are defined by usage tier, where each tier is associated with a different set of rate limits.

- In case you need to raise your usage limits, please feel free to contact us by utilizing the support button, providing details about your specific use case.

@@ -16214,17 +16137,16 @@ Mistral provides two types of models: open models and premier models.

| Model | Weight availability|Available via API| Description | Max Tokens| API Endpoints|Version|

|--------------------|:--------------------:|:--------------------:|:--------------------:|:--------------------:|:--------------------:|:--------------------:|

| Mistral Medium 3.1 | | :heavy_check_mark: | Our frontier-class multimodal model released August 2025. Improving tone and performance. Read more about Medium 3 in our [blog post](https://mistral.ai/news/mistral-medium-3/) | 128k | `mistral-medium-2508` | 25.08|

-| Magistral Medium 1.1 | | :heavy_check_mark: | Our frontier-class reasoning model released July 2025. | 40k | `magistral-medium-2507` | 25.07|

+| Magistral Medium 1.2 | | :heavy_check_mark: | Our frontier-class reasoning model update released September 2025 with vision support. | 128k | `magistral-medium-2509` | 25.09|

| Codestral 2508 | | :heavy_check_mark: | Our cutting-edge language model for coding released end of July 2025, Codestral specializes in low-latency, high-frequency tasks such as fill-in-the-middle (FIM), code correction and test generation. Learn more in our [blog post](https://mistral.ai/news/codestral-25-08/) | 256k | `codestral-2508` | 25.08|

| Voxtral Mini Transcribe | | :heavy_check_mark: | An efficient audio input model, fine-tuned and optimized for transcription purposes only. | | `voxtral-mini-2507` via `audio/transcriptions` | 25.07|

| Devstral Medium | | :heavy_check_mark: | An enterprise grade text model, that excels at using tools to explore codebases, editing multiple files and power software engineering agents. Learn more in our [blog post](https://mistral.ai/news/devstral-2507) | 128k | `devstral-medium-2507` | 25.07|

| Mistral OCR 2505 | | :heavy_check_mark: | Our OCR service powering our Document AI stack that enables our users to extract interleaved text and images | | `mistral-ocr-2505` | 25.05|

-| Magistral Medium 1 | | :heavy_check_mark: | Our first frontier-class reasoning model released June 2025. Learn more in our [blog post](https://mistral.ai/news/magistral/) | 40k | `magistral-medium-2506` | 25.06|

| Ministral 3B | | :heavy_check_mark: | World’s best edge model. Learn more in our [blog post](https://mistral.ai/news/ministraux/) | 128k | `ministral-3b-2410` | 24.10|

| Ministral 8B | :heavy_check_mark:

[Mistral Research License](https://mistral.ai/licenses/MRL-0.1.md)| :heavy_check_mark: |Powerful edge model with extremely high performance/price ratio. Learn more in our [blog post](https://mistral.ai/news/ministraux/) | 128k | `ministral-8b-2410` | 24.10|

| Mistral Medium 3 | | :heavy_check_mark: | Our frontier-class multimodal model released May 2025. Learn more in our [blog post](https://mistral.ai/news/mistral-medium-3/) | 128k | `mistral-medium-2505` | 25.05|

-| Codestral 2501 | | :heavy_check_mark: | Our cutting-edge language model for coding with the second version released January 2025, Codestral specializes in low-latency, high-frequency tasks such as fill-in-the-middle (FIM), code correction and test generation. Learn more in our [blog post](https://mistral.ai/news/codestral-2501/) | 256k | `codestral-2501` | 25.01|

| Mistral Large 2.1 |:heavy_check_mark:

[Mistral Research License](https://mistral.ai/licenses/MRL-0.1.md)| :heavy_check_mark: | Our top-tier large model for high-complexity tasks with the latest version released November 2024. Learn more in our [blog post](https://mistral.ai/news/pixtral-large/) | 128k | `mistral-large-2411` | 24.11|

+| Codestral 2501 | | :heavy_check_mark: | Our cutting-edge language model for coding released in January 2025, Codestral specializes in low-latency, high-frequency tasks such as fill-in-the-middle (FIM), code correction and test generation. Learn more in our [blog post](https://mistral.ai/news/codestral-2501) | 256k | `codestral-2501` | 25.01|

| Pixtral Large |:heavy_check_mark:

[Mistral Research License](https://mistral.ai/licenses/MRL-0.1.md)| :heavy_check_mark: | Our first frontier-class multimodal model released November 2024. Learn more in our [blog post](https://mistral.ai/news/pixtral-large/) | 128k | `pixtral-large-2411` | 24.11|

| Mistral Small 2| :heavy_check_mark:

[Mistral Research License](https://mistral.ai/licenses/MRL-0.1.md) | :heavy_check_mark: | Our updated small version, released September 2024. Learn more in our [blog post](https://mistral.ai/news/september-24-release) | 32k | `mistral-small-2407` | 24.07|

| Mistral Embed | | :heavy_check_mark: | Our state-of-the-art semantic model for extracting representations of text extracts | 8k | `mistral-embed` | 23.12|

@@ -16235,15 +16157,13 @@ Mistral provides two types of models: open models and premier models.

| Model | Weight availability|Available via API| Description | Max Tokens| API Endpoints|Version|

|--------------------|:--------------------:|:--------------------:|:--------------------:|:--------------------:|:--------------------:|:--------------------:|

-| Magistral Small 1.1 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | Our small reasoning model released July 2025. | 40k | `magistral-small-2507` | 25.07|

+| Magistral Small 1.2 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | Our small reasoning model released September 2025 with vision support. | 128k | `magistral-small-2509` | 25.09|

| Voxtral Small | :heavy_check_mark:

Apache2 | :heavy_check_mark: | Our first model with audio input capabilities for instruct use cases. | 32k | `voxtral-small-2507` | 25.07|

| Voxtral Mini | :heavy_check_mark:

Apache2 | :heavy_check_mark: | A mini version of our first audio input model. | 32k | `voxtral-mini-2507` | 25.07|

| Mistral Small 3.2 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | An update to our previous small model, released June 2025. | 128k | `mistral-small-2506` | 25.06|

-| Magistral Small 1 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | Our first small reasoning model released June 2025. Learn more in our [blog post](https://mistral.ai/news/magistral/) | 40k | `magistral-small-2506` | 25.06|

| Devstral Small 1.1 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | An update to our open source model that excels at using tools to explore codebases, editing multiple files and power software engineering agents. Learn more in our [blog post](https://mistral.ai/news/devstral-2507) | 128k | `devstral-small-2507` | 25.07|

| Mistral Small 3.1 | :heavy_check_mark:

Apache2 | :heavy_check_mark: | A new leader in the small models category with image understanding capabilities, released March 2025. Learn more in our [blog post](https://mistral.ai/news/mistral-small-3-1/) | 128k | `mistral-small-2503` | 25.03|

| Mistral Small 3| :heavy_check_mark:

Apache2 | :heavy_check_mark: | A new leader in the small models category, released January 2025. Learn more in our [blog post](https://mistral.ai/news/mistral-small-3) | 32k | `mistral-small-2501` | 25.01|

-| Devstral Small 1| :heavy_check_mark:

Apache2 | :heavy_check_mark: | A 24B text model, open source model that excels at using tools to explore codebases, editing multiple files and power software engineering agents. Learn more in our [blog post](https://mistral.ai/news/devstral/) | 128k | `devstral-small-2505` | 25.05|

| Pixtral 12B | :heavy_check_mark:

Apache2 | :heavy_check_mark: | A 12B model with image understanding capabilities in addition to text. Learn more in our [blog post](https://mistral.ai/news/pixtral-12b/)| 128k | `pixtral-12b-2409` | 24.09|

| Mistral Nemo 12B | :heavy_check_mark:

Apache2 | :heavy_check_mark: | Our best multilingual open source model released July 2024. Learn more in our [blog post](https://mistral.ai/news/mistral-nemo/) | 128k | `open-mistral-nemo`| 24.07|

@@ -16255,8 +16175,8 @@ it is recommended to use the dated versions of the Mistral AI API.

Additionally, be prepared for the deprecation of certain endpoints in the coming months.

Here are the details of the available versions:

-- `magistral-medium-latest`: currently points to `magistral-medium-2507`.

-- `magistral-small-latest`: currently points to `magistral-small-2507`.

+- `magistral-medium-latest`: currently points to `magistral-medium-2509`.

+- `magistral-small-latest`: currently points to `magistral-small-2509`.

- `mistral-medium-latest`: currently points to `mistral-medium-2508`.

- `mistral-large-latest`: currently points to `mistral-medium-2508`, previously `mistral-large-2411`.

- `pixtral-large-latest`: currently points to `pixtral-large-2411`.

@@ -16300,6 +16220,11 @@ To prepare for model retirements and version upgrades, we recommend that custome

| Mistral Saba 2502 | | `mistral-saba-2502` | 25.02| 2025/06/10|2025/09/30| `mistral-small-latest`|

| Mathstral 7B | :heavy_check_mark:

Apache2 | | v0.1| || `magistral-small-latest`|

| Codestral Mamba | :heavy_check_mark:

Apache2 |`open-codestral-mamba` | v0.1|2025/06/06 |2025/06/06| `codestral-latest`|

+| Devstral Small 1.0 | :heavy_check_mark:

Apache2 | `devstral-small-2505` | 25.05|2025/10/31|2025/11/30| `devstral-small-latest`|

+| Magistral Small 1.0 | :heavy_check_mark:

Apache2 | `magistral-small-2506` | 25.06|2025/10/31|2025/11/30| `magistral-small-latest`|

+| Magistral Medium 1.0 | | `magistral-medium-2506` | 25.06|2025/10/31|2025/11/30| `magistral-medium-latest`|

+| Magistral Small 1.1 | :heavy_check_mark:

Apache2 | `magistral-small-2507` | 25.07|2025/10/31|2025/11/30| `magistral-small-latest`|

+| Magistral Medium 1.1 | | `magistral-medium-2507` | 25.07|2025/10/31|2025/11/30| `magistral-medium-latest`|

[Model weights]

diff --git a/static/llms.txt b/static/llms.txt

index 144e1d7..de5415f 100644

--- a/static/llms.txt

+++ b/static/llms.txt

@@ -3,49 +3,49 @@

## Docs

[Agents & Conversations](https://docs.mistral.ai/docs/agents/agents_and_conversations.md): Agents, Conversations, and Entries enhance API interactions with tools, history, and flexible event representation

-[Agents Function Calling](https://docs.mistral.ai/docs/agents/agents_function_calling.md): Agents use function calling to execute tools and workflows, with built-in and customizable JSON schema support

-[Agents Introduction](https://docs.mistral.ai/docs/agents/agents_introduction.md): AI agents are autonomous systems powered by LLMs that plan, use tools, and execute tasks, with APIs for multimodal models, persistent state, and workflow collaboration

-[Code Interpreter](https://docs.mistral.ai/docs/agents/connectors/code_interpreter.md): Code Interpreter enables secure, on-demand code execution for data analysis, graphing, and more in isolated containers

+[Agents Function Calling](https://docs.mistral.ai/docs/agents/agents_function_calling.md): Agents use function calling to execute tools and workflows, with built-in connectors and custom JSON schema support

+[Agents Introduction](https://docs.mistral.ai/docs/agents/agents_introduction.md): AI agents are autonomous systems powered by LLMs that plan, use tools, and execute tasks to achieve goals, with APIs for multimodal models, persistent state, and collaboration

+[Code Interpreter](https://docs.mistral.ai/docs/agents/connectors/code_interpreter.md): Code Interpreter enables safe, on-demand code execution in isolated containers for data analysis, graphing, and more

[Connectors Overview](https://docs.mistral.ai/docs/agents/connectors/connectors_overview.md): Connectors enable Agents and users to access tools like websearch, code interpreter, and more for on-demand answers

-[Document Library](https://docs.mistral.ai/docs/agents/connectors/document_library.md): Document Library is a built-in RAG tool for agents to access and manage uploaded documents via Mistral Cloud

-[Image Generation](https://docs.mistral.ai/docs/agents/connectors/image_generation.md): Image Generation tool enables agents to create images on demand

-[Websearch](https://docs.mistral.ai/docs/agents/connectors/websearch.md): Websearch enables models to browse the web for real-time, up-to-date information or access specific URLs

-[Agents Handoffs](https://docs.mistral.ai/docs/agents/handoffs.md): Agents Handoffs enable seamless task delegation and conversation handover between multiple agents in automated workflows

-[MCP](https://docs.mistral.ai/docs/agents/mcp.md): MCP standardizes AI model integration with data sources for seamless, secure, and efficient contextual access

-[Audio & Transcription](https://docs.mistral.ai/docs/capabilities/audio_and_transcription.md): Audio & Transcription: Models for chat and transcription with audio input support

-[Batch Inference](https://docs.mistral.ai/docs/capabilities/batch_inference.md): Prepare and upload batch requests, then create a job to process them with specified models and endpoints

-[Citations and References](https://docs.mistral.ai/docs/capabilities/citations_and_references.md): Citations and references enable models to ground responses with sources, enhancing accuracy in RAG and agentic applications

-[Coding](https://docs.mistral.ai/docs/capabilities/coding.md): LLMs for coding: Codestral for code generation, Devstral for agentic tool use, with FIM and chat endpoints

-[Annotations](https://docs.mistral.ai/docs/capabilities/document_ai/annotations.md): Mistral Document AI API enhances OCR with structured JSON annotations for bbox and document data extraction

-[Basic OCR](https://docs.mistral.ai/docs/capabilities/document_ai/basic_ocr.md): Extract text and structure from PDFs with Mistral's Document AI OCR processor." (99 characters)

+[Document Library](https://docs.mistral.ai/docs/agents/connectors/document_library.md): Document Library is a built-in RAG tool for agents to access and manage uploaded documents in Mistral Cloud

+[Image Generation](https://docs.mistral.ai/docs/agents/connectors/image_generation.md): Built-in tool for agents to generate images on demand

+[Websearch](https://docs.mistral.ai/docs/agents/connectors/websearch.md): Websearch enables models to browse the web for real-time info, overcoming outdated training data limitations

+[Agents Handoffs](https://docs.mistral.ai/docs/agents/handoffs.md): Agents Handoffs enable seamless task delegation and conversation transfers between multiple agents in automated workflows

+[MCP](https://docs.mistral.ai/docs/agents/mcp.md): MCP standardizes AI model integration with data sources for seamless, secure, and efficient contextual connections

+[Audio & Transcription](https://docs.mistral.ai/docs/capabilities/audio_and_transcription.md): Audio & Transcription: Models for real-time chat and efficient transcription via audio input

+[Batch Inference](https://docs.mistral.ai/docs/capabilities/batch_inference.md): Prepare and upload JSONL batch files, then create a job with model, endpoint, and optional metadata

+[Citations and References](https://docs.mistral.ai/docs/capabilities/citations_and_references.md): Citations and references enable models to ground responses with sources, enhancing accuracy for RAG and agentic applications

+[Coding](https://docs.mistral.ai/docs/capabilities/coding.md): LLMs for coding: Codestral for code generation, Devstral for agentic tool use, and Codestral Embed for semantic search

+[Annotations](https://docs.mistral.ai/docs/capabilities/document_ai/annotations.md): Mistral Document AI API adds structured JSON annotations for OCR, including bbox and document annotations for efficient data extraction

+[Basic OCR](https://docs.mistral.ai/docs/capabilities/document_ai/basic_ocr.md): Extract text, structure, and images from PDFs and images with Mistral's OCR API

[Document AI](https://docs.mistral.ai/docs/capabilities/document_ai/document_ai_overview.md): Mistral Document AI offers enterprise-level OCR and structured data extraction with multilingual support and scalable workflows

-[Document QnA](https://docs.mistral.ai/docs/capabilities/document_ai/document_qna.md): Document AI QnA combines OCR and LLMs to enable natural language queries for document insights and analysis

-[Code Embeddings](https://docs.mistral.ai/docs/capabilities/embeddings/code_embeddings.md): Code embeddings enable powerful retrieval, clustering, and analytics for code databases and assistants

-[Embeddings Overview](https://docs.mistral.ai/docs/capabilities/embeddings/embeddings_overview.md): Mistral AI's Embeddings API provides advanced vector representations for text and code, enabling NLP tasks like retrieval, clustering, and search

+[Document QnA](https://docs.mistral.ai/docs/capabilities/document_ai/document_qna.md): Document AI QnA combines OCR and LLMs for natural language interaction with document content, enabling question answering and insights

+[Code Embeddings](https://docs.mistral.ai/docs/capabilities/embeddings/code_embeddings.md): Code embeddings enable advanced code analysis, retrieval, and AI assistant capabilities for enterprise applications

+[Embeddings Overview](https://docs.mistral.ai/docs/capabilities/embeddings/embeddings_overview.md): Vector representations of text and code for NLP tasks like search, clustering, and classification

[Text Embeddings](https://docs.mistral.ai/docs/capabilities/embeddings/text_embeddings.md): Generate 1024-dimension text embeddings using Mistral AI's embeddings API for NLP applications

[Classifier Factory](https://docs.mistral.ai/docs/capabilities/finetuning/classifier-factory.md): Classifier Factory: Tools for moderation, intent detection, and sentiment analysis to enhance efficiency and user experience

-[Fine-tuning Overview](https://docs.mistral.ai/docs/capabilities/finetuning/finetuning_overview.md): Learn about fine-tuning costs, benefits vs. prompting, and when to use each method for AI models

-[Text & Vision Fine-tuning](https://docs.mistral.ai/docs/capabilities/finetuning/text-vision-finetuning.md): Fine-tune text and vision models for domain-specific tasks or unique conversational styles using your dataset

+[Fine-tuning Overview](https://docs.mistral.ai/docs/capabilities/finetuning/finetuning_overview.md): Learn about fine-tuning costs, benefits vs. prompting, and when to use each for AI model optimization

+[Text & Vision Fine-tuning](https://docs.mistral.ai/docs/capabilities/finetuning/text-vision-finetuning.md): Fine-tune text and vision models for domain-specific tasks or conversational styles using JSONL datasets

[Function calling](https://docs.mistral.ai/docs/capabilities/function-calling.md): Mistral models enable function calling to integrate external tools for enhanced application development

[Moderation](https://docs.mistral.ai/docs/capabilities/moderation.md): New moderation API with Mistral model for detecting harmful text in raw and conversational content

[Predicted outputs](https://docs.mistral.ai/docs/capabilities/predicted-outputs.md): Optimizes response time by predefining predictable content for faster, high-quality outputs

-[Reasoning](https://docs.mistral.ai/docs/capabilities/reasoning.md): Reasoning enhances CoT by generating logical steps before final answers, improving problem-solving depth and accuracy

-[Custom Structured Output](https://docs.mistral.ai/docs/capabilities/structured-output/custom.md): Define structured JSON outputs using Pydantic models with Mistral AI for consistent, typed responses

-[JSON mode](https://docs.mistral.ai/docs/capabilities/structured-output/json-mode.md): Enable JSON mode by setting `response_format` to `{\"type\": \"json_object\"}` in API requests

-[Structured Output](https://docs.mistral.ai/docs/capabilities/structured-output/overview.md): Learn to generate structured outputs (JSON or custom) for LLM agents and pipelines with reliability tips

+[Reasoning](https://docs.mistral.ai/docs/capabilities/reasoning.md): Reasoning enhances CoT by generating logical steps before final answers, improving problem-solving through deeper exploration

+[Custom Structured Output](https://docs.mistral.ai/docs/capabilities/structured-output/custom.md): Define JSON schemas for consistent structured outputs using Pydantic and Mistral AI

+[JSON mode](https://docs.mistral.ai/docs/capabilities/structured-output/json-mode.md): Enable JSON mode in API responses by setting `response_format` to `{"type": "json_object"}`

+[Structured Output](https://docs.mistral.ai/docs/capabilities/structured-output/overview.md): Learn to generate structured outputs like JSON or custom formats for reliable LLM agent workflows

[Text and Chat Completions](https://docs.mistral.ai/docs/capabilities/text_and_chat_completions.md): Mistral models enable chat and text completions via natural language prompts, with flexible API options for streaming and async responses

-[Vision](https://docs.mistral.ai/docs/capabilities/vision.md): Vision capabilities enable models to analyze images and text for multimodal insights, with support for URL and base64 image inputs

-[AWS Bedrock](https://docs.mistral.ai/docs/deployment/cloud/aws.md): Deploy Mistral AI models on AWS Bedrock as fully managed, serverless endpoints." (99 characters)

-[Azure AI](https://docs.mistral.ai/docs/deployment/cloud/azure.md): Deploy Mistral AI models on Azure AI with pay-as-you-go or GPU-based real-time endpoints

-[IBM watsonx.ai](https://docs.mistral.ai/docs/deployment/cloud/ibm-watsonx.md): Mistral AI's Large model on IBM watsonx.ai for managed and on-premise deployments with API access setup

+[Vision](https://docs.mistral.ai/docs/capabilities/vision.md): Vision capabilities enable models to analyze images and text for multimodal insights, with support for URL or Base64 image inputs

+[AWS Bedrock](https://docs.mistral.ai/docs/deployment/cloud/aws.md): Deploy Mistral AI models on AWS Bedrock as fully managed, serverless endpoints

+[Azure AI](https://docs.mistral.ai/docs/deployment/cloud/azure.md): Deploy Mistral AI models on Azure AI with pay-as-you-go or real-time GPU-based endpoints

+[IBM watsonx.ai](https://docs.mistral.ai/docs/deployment/cloud/ibm-watsonx.md): Mistral AI's Large model on IBM watsonx.ai: SaaS & on-premise deployment with setup & query guidance

[Outscale](https://docs.mistral.ai/docs/deployment/cloud/outscale.md): Deploy and query Mistral AI models on Outscale via managed VMs and GPUs

[Cloud](https://docs.mistral.ai/docs/deployment/cloud/overview.md): Access Mistral AI models via Azure, AWS, Google Cloud, Snowflake, IBM, and Outscale using cloud credits

-[Snowflake Cortex](https://docs.mistral.ai/docs/deployment/cloud/sfcortex.md): Access Mistral AI models on Snowflake Cortex as fully managed, serverless endpoints

-[Vertex AI](https://docs.mistral.ai/docs/deployment/cloud/vertex.md): Deploy and query Mistral AI models on Google Cloud's serverless Vertex AI platform." (99 characters)

-[Workspaces](https://docs.mistral.ai/docs/deployment/laplateforme/organization.md): La Plateforme workspaces enable team collaboration, access management, and shared fine-tuned models." (99 characters)

-[La Plateforme](https://docs.mistral.ai/docs/deployment/laplateforme/overview.md): Mistral AI's pay-as-you-go API platform for accessing latest large language models." (99 characters)

-[Pricing](https://docs.mistral.ai/docs/deployment/laplateforme/pricing.md): Check the pricing page for detailed API cost information." (99 characters)

-[Rate limit and usage tiers](https://docs.mistral.ai/docs/deployment/laplateforme/tier.md): Learn about Mistral's API rate limits, usage tiers, and how to check or upgrade your workspace limits

+[Snowflake Cortex](https://docs.mistral.ai/docs/deployment/cloud/sfcortex.md): Access Mistral AI models on Snowflake Cortex as serverless, fully managed endpoints

+[Vertex AI](https://docs.mistral.ai/docs/deployment/cloud/vertex.md): Deploy and query Mistral AI models on Google Cloud's serverless Vertex AI platform

+[Workspaces](https://docs.mistral.ai/docs/deployment/laplateforme/organization.md): La Plateforme workspaces enable team collaboration, access control, and shared fine-tuned models

+[La Plateforme](https://docs.mistral.ai/docs/deployment/laplateforme/overview.md): Mistral AI's pay-as-you-go API platform for accessing the latest models

+[Pricing](https://docs.mistral.ai/docs/deployment/laplateforme/pricing.md): Check the pricing page for detailed API cost information

+[Rate limit and usage tiers](https://docs.mistral.ai/docs/deployment/laplateforme/tier.md): Learn about Mistral's API rate limits, usage tiers, and how to check or adjust your workspace limits

[Deploy with Cerebrium](https://docs.mistral.ai/docs/deployment/self-deployment/cerebrium.md): Deploy AI apps effortlessly with Cerebrium's serverless GPU infrastructure, auto-scaling and pay-per-use

[Deploy with Cloudflare Workers AI](https://docs.mistral.ai/docs/deployment/self-deployment/cloudflare.md): Deploy AI models on Cloudflare's global network with Workers AI for serverless GPU-powered LLMs

[Self-deployment](https://docs.mistral.ai/docs/deployment/self-deployment/overview.md): Deploy Mistral AI models on your infrastructure using vLLM, TensorRT-LLM, TGI, or tools like SkyPilot and Cerebrium

@@ -53,27 +53,27 @@

[Text Generation Inference](https://docs.mistral.ai/docs/deployment/self-deployment/tgi.md): TGI is a high-performance toolkit for deploying and serving open-access LLMs with features like quantization and streaming

[TensorRT](https://docs.mistral.ai/docs/deployment/self-deployment/trt.md): Guide to building and deploying TensorRT-LLM engines for Mistral and Mixtral models using Triton Inference Server

[vLLM](https://docs.mistral.ai/docs/deployment/self-deployment/vllm.md): vLLM is an open-source LLM inference engine optimized for deploying Mistral models on-premise

-[SDK Clients](https://docs.mistral.ai/docs/getting-started/clients.md): SDK clients for Python, Typescript, and community-supported languages

-[Bienvenue to Mistral AI Documentation](https://docs.mistral.ai/docs/getting-started/docs_introduction.md): Mistral AI offers open-source and commercial LLMs for developers, with premier models like Mistral Medium and Codestral

-[Glossary](https://docs.mistral.ai/docs/getting-started/glossary.md): Glossary of key terms related to large language models, text generation, and AI concepts

-[Model customization](https://docs.mistral.ai/docs/getting-started/model_customization.md): Guide to building applications with custom LLMs for deployment, emphasizing iterative development and user feedback

+[SDK Clients](https://docs.mistral.ai/docs/getting-started/clients.md): SDK clients available in Python, TypeScript, and community-supported languages

+[Bienvenue to Mistral AI Documentation](https://docs.mistral.ai/docs/getting-started/docs_introduction.md): Mistral AI offers open-source and commercial LLMs for developers, featuring multilingual, coding, and reasoning capabilities

+[Glossary](https://docs.mistral.ai/docs/getting-started/glossary.md): Glossary of key terms related to large language models (LLMs), text generation, and tokens

+[Model customization](https://docs.mistral.ai/docs/getting-started/model_customization.md): Learn how to build applications with custom LLMs for iterative, user-driven AI development

[Models Benchmarks](https://docs.mistral.ai/docs/getting-started/models/benchmark.md): Standardized tests evaluating LLM performance, including Mistral's top-tier reasoning and multilingual benchmarks

-[Model selection](https://docs.mistral.ai/docs/getting-started/models/model_selection.md): Guide to selecting Mistral models based on performance, cost, and use-case complexity

-[Models Overview](https://docs.mistral.ai/docs/getting-started/models/overview.md): Mistral offers open and premier models, including multimodal and reasoning options with API access

-[Model weights](https://docs.mistral.ai/docs/getting-started/models/weights.md): Open-source pre-trained and instruction-tuned models with varying licenses; commercial options available

-[Quickstart](https://docs.mistral.ai/docs/getting-started/quickstart.md): Quickstart guide for setting up a Mistral AI account, managing billing, and generating API keys

-[Basic RAG](https://docs.mistral.ai/docs/guides/basic-RAG.md): RAG combines LLMs with retrieval systems to generate answers using external knowledge." (99 characters)

-[Ambassador](https://docs.mistral.ai/docs/guides/contribute/ambassador.md): Join Mistral AI's Ambassador Program to advocate for AI models, share expertise, and support the community. Apply by July 1, 2025

+[Model selection](https://docs.mistral.ai/docs/getting-started/models/model_selection.md): Guide to selecting Mistral models based on task complexity, performance, and cost for optimal use cases

+[Models Overview](https://docs.mistral.ai/docs/getting-started/models/overview.md): Mistral offers open and premier models, including multimodal and reasoning options with API access and commercial licensing

+[Model weights](https://docs.mistral.ai/docs/getting-started/models/weights.md): Open-source pre-trained and instruction-tuned models under various licenses, with download links and usage guidelines

+[Quickstart](https://docs.mistral.ai/docs/getting-started/quickstart.md): Quickstart guide to setting up a Mistral account, configuring billing, and generating API keys for Mistral AI

+[Basic RAG](https://docs.mistral.ai/docs/guides/basic-RAG.md): RAG combines LLMs with retrieval systems to generate answers using external knowledge, involving retrieval and generation steps

+[Ambassador](https://docs.mistral.ai/docs/guides/contribute/ambassador.md): Join Mistral AI's Ambassador Program to advocate for AI, share expertise, and support the community. Apply by July 1, 2025

[Contribute](https://docs.mistral.ai/docs/guides/contribute/overview.md): Learn how to contribute to Mistral AI through docs, code, or the Ambassador Program

-[Evaluation](https://docs.mistral.ai/docs/guides/evaluation.md): Guide to evaluating LLMs for specific use cases with metrics, LLM, and human-based methods." (99 characters)

+[Evaluation](https://docs.mistral.ai/docs/guides/evaluation.md): Guide to evaluating LLMs for specific use cases with metrics, LLM, and human-based methods

[Fine-tuning](https://docs.mistral.ai/docs/guides/finetuning.md): Fine-tuning models incurs a $2 monthly storage fee; see pricing for details

-[ 01 Intro Basics](https://docs.mistral.ai/docs/guides/finetuning_sections/_01_intro_basics.md): Learn the basics of fine-tuning LLMs to optimize performance for specific tasks using Mistral AI's tools

-[ 02 Prepare Dataset](https://docs.mistral.ai/docs/guides/finetuning_sections/_02_prepare_dataset.md): Prepare training data for fine-tuning models with specific use cases and examples

-[download the validation and reformat script](https://docs.mistral.ai/docs/guides/finetuning_sections/_03_e2e_examples.md): Download the reformat_data.py script to validate and reformat Mistral API fine-tuning datasets

+[ 01 Intro Basics](https://docs.mistral.ai/docs/guides/finetuning_sections/_01_intro_basics.md): Learn the basics of fine-tuning LLMs to optimize performance for specific tasks using Mistral AI's API and open-source tools

+[ 02 Prepare Dataset](https://docs.mistral.ai/docs/guides/finetuning_sections/_02_prepare_dataset.md): Prepare training data for fine-tuning models with specific use cases and examples

+[download the validation and reformat script](https://docs.mistral.ai/docs/guides/finetuning_sections/_03_e2e_examples.md): Download and use the reformat_data.py script to validate and reformat Mistral API fine-tuning datasets

[get data from hugging face](https://docs.mistral.ai/docs/guides/finetuning_sections/_04_faq.md): Learn how to fetch, validate, and format data from Hugging Face for Mistral models

[Observability](https://docs.mistral.ai/docs/guides/observability.md): Observability ensures visibility, debugging, and continuous improvement for LLM systems in production

[Other resources](https://docs.mistral.ai/docs/guides/other-resources.md): Explore Mistral AI Cookbook for code examples, community contributions, and third-party tool integrations

-[Prefix](https://docs.mistral.ai/docs/guides/prefix.md): Prefixes enhance instruction adherence and response control for various use cases." (99 characters)

+[Prefix](https://docs.mistral.ai/docs/guides/prefix.md): Prefixes enhance instruction following and response control for models in various use cases

[Prompting capabilities](https://docs.mistral.ai/docs/guides/prompting-capabilities.md): Learn how to craft effective prompts for classification, summarization, personalization, and evaluation with Mistral models

[Sampling](https://docs.mistral.ai/docs/guides/sampling.md): Learn how to adjust LLM sampling parameters like Temperature, Top P, and penalties for better output control

-[Tokenization](https://docs.mistral.ai/docs/guides/tokenization.md): Tokenization breaks text into subword units for LLM processing, with Mistral AI's open-source tools and Python integration

\ No newline at end of file

+[Tokenization](https://docs.mistral.ai/docs/guides/tokenization.md): Tokenization breaks text into tokens for LLM processing, with Mistral AI's open-source tools for Python implementation

\ No newline at end of file