Cannot backprop gradients from PyTorch model loss to Mitsuba3 #350

Unanswered

matthewdhull asked this question in Q&A

Replies: 1 comment 4 replies


Could you make sure you get some gradients using a simple loss function implemented in Dr.Jit? Also, I would recommend you try combining both AD wrappers into one; maybe having two of them is causing some issues here?


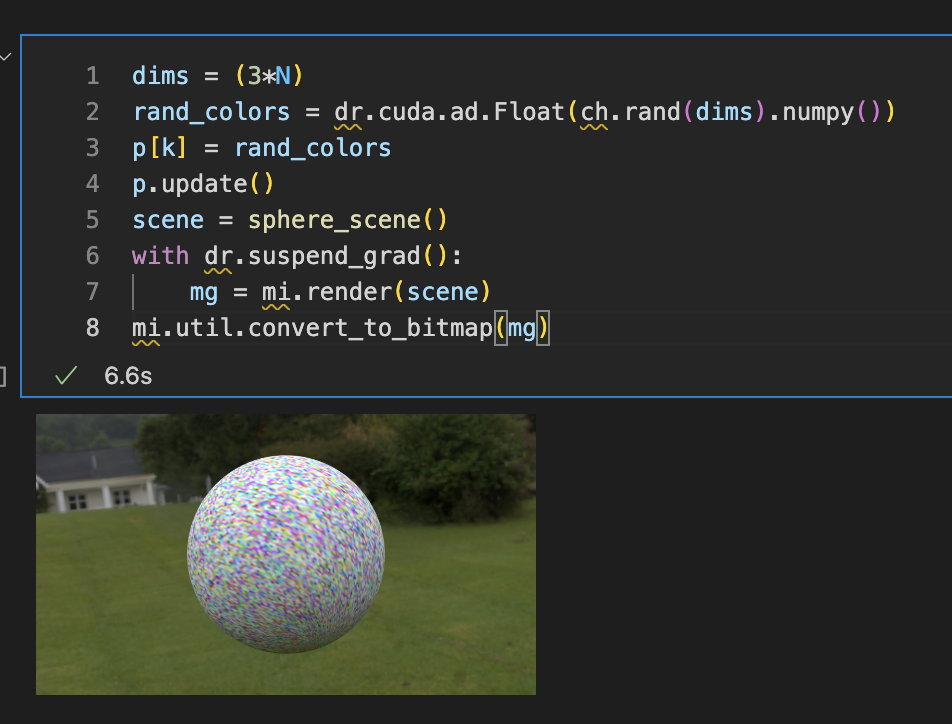

I am making use of `drjit.wrap_ad` decorators with the intent of taking the cross-entropy loss of a PyTorch image classifier and backpropagating the gradient of the loss through Mitsuba to get the gradient of the loss w.r.t. the vertex colors of a mesh. I cannot get gradients for a scene parameter with my current approach.

I have a mesh in my scene with a differentiable `sphere_color.vertex_color` param.

I have the following wrapped funcs to classify the rendered image and calculate the loss:

In my custom optimization loop, I render the image, pass it to the model, obtain classification logits and loss, and then call `dr.backward(loss)`, but the gradient is always 0.0 for each vertex. Minimal example of my loop:

Thank you for any assistance you can provide in resolving the gradient issue.
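One way to narrow down a zero gradient like this is to check the PyTorch half in isolation: if the cross-entropy loss has no gradient w.r.t. the input image, nothing can flow back into the renderer. The sketch below uses only PyTorch. The tiny `model`, the random `rendered` tensor, and the `classification_loss` helper are hypothetical stand-ins, not the classifier or wrapped functions from the question.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for the image classifier (not the model from the question).
model = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3, padding=1),
    nn.Tanh(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(4, 10),
).eval()

# Hypothetical stand-in for the rendered image in (H, W, C) layout.
rendered = torch.rand(32, 32, 3, requires_grad=True)

def classification_loss(img_hwc, target_class):
    # permute/unsqueeze stay inside autograd; a round trip through NumPy
    # or a call to .detach() here would cut the graph.
    batch = img_hwc.permute(2, 0, 1).unsqueeze(0)  # (1, C, H, W)
    logits = model(batch)
    target = torch.tensor([target_class])
    return F.cross_entropy(logits, target)

loss = classification_loss(rendered, target_class=3)
loss.backward()

# L1 norm of the gradient of the loss w.r.t. the input image.
grad_norm = rendered.grad.abs().sum().item()
print(f"loss={loss.item():.4f}  grad L1 norm={grad_norm:.6f}")
```

If the gradient is non-zero here but still zero in the full pipeline, the break is more likely in the conversion between Dr.Jit and PyTorch than in the model or the loss.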
