
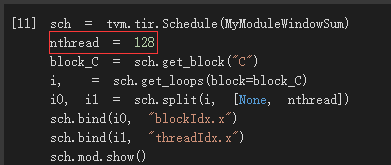

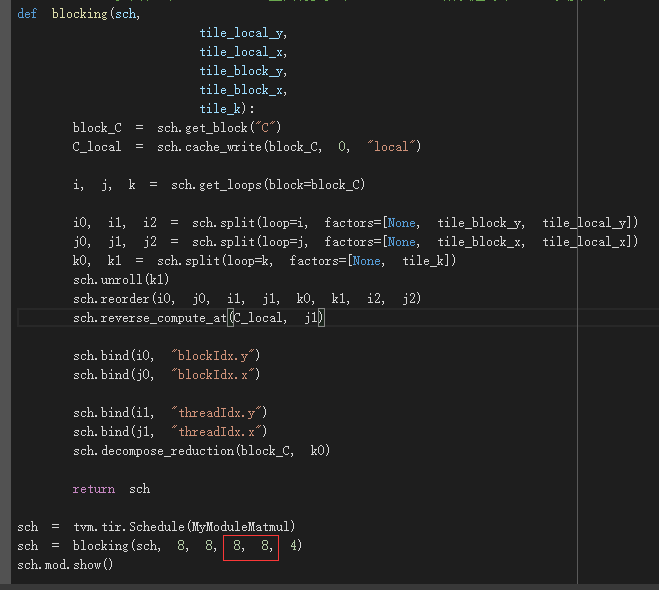

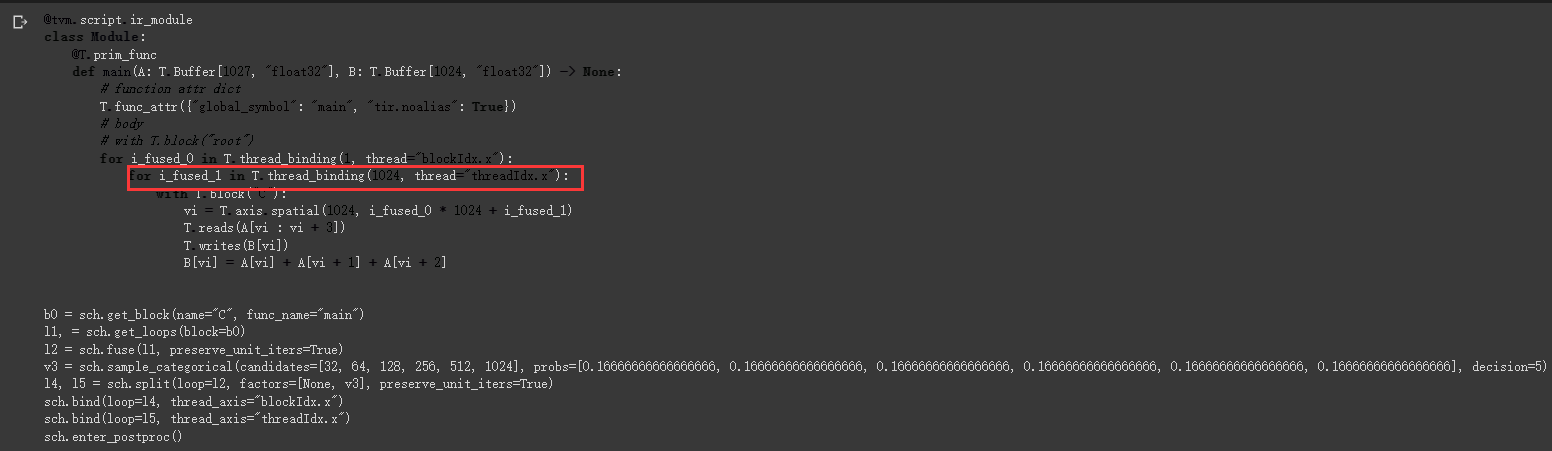

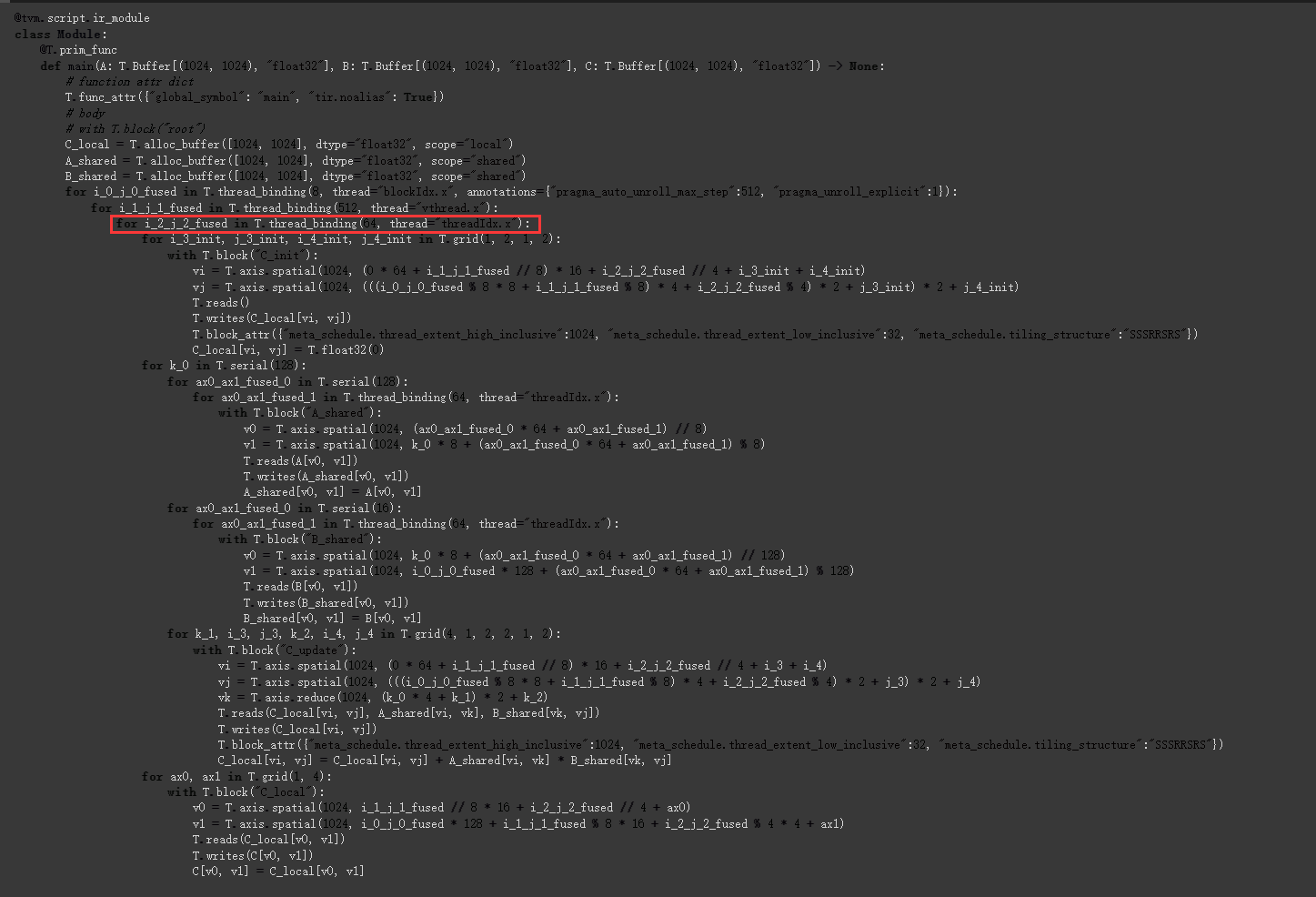

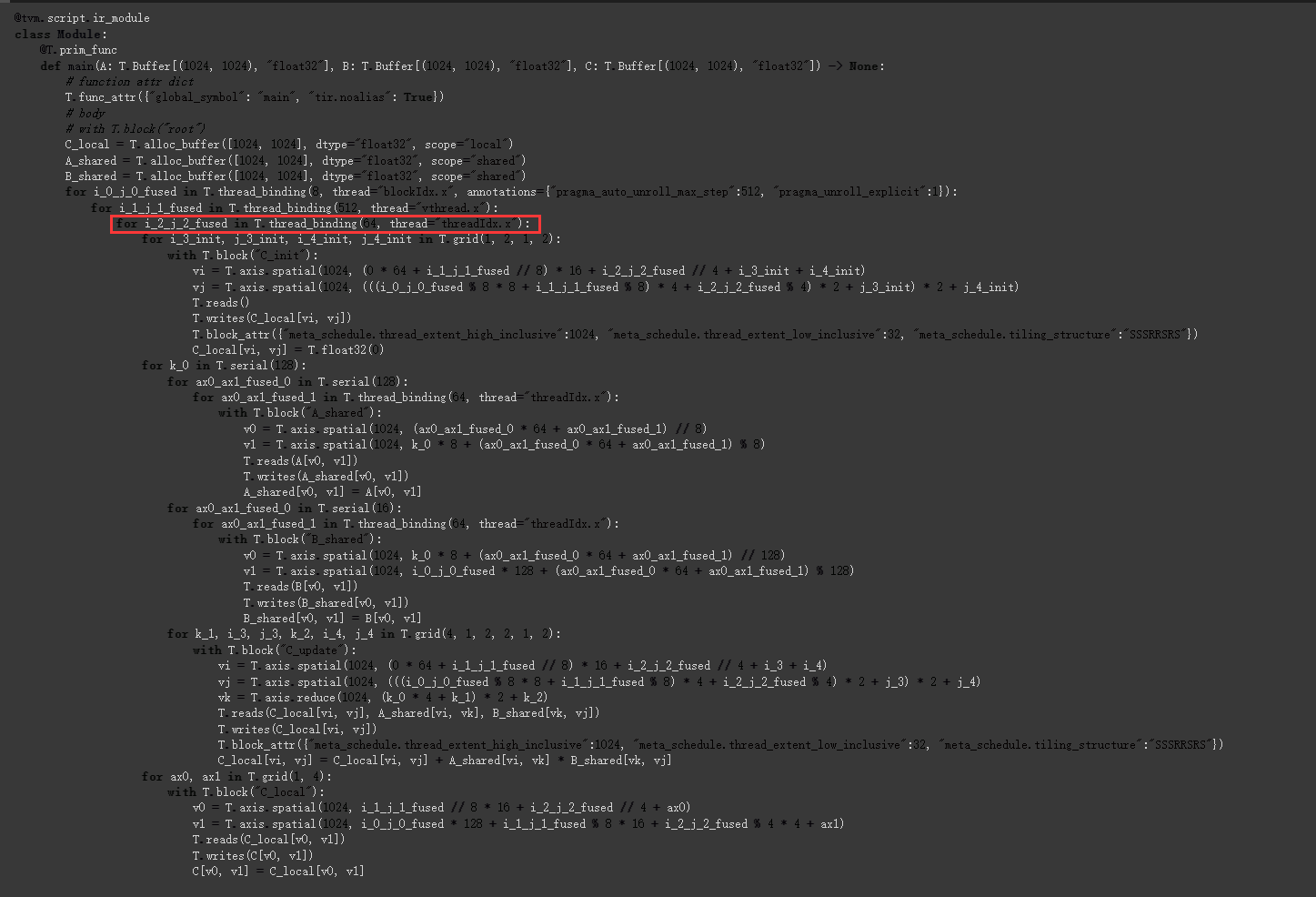

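In 7_GPU_and_Specialized_Hardware I noticed that the thread num is set differently for different models. My question: to increase parallelism, shouldn't we use as many of the threads in a thread block (e.g. streaming multiprocessors) as possible? Why does the thread num differ? For … For … If using …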



For GPUs, a warp consists of 32 threads (see https://docs.nvidia.com/cuda/cuda-c-best-practices-guide/index.html#differences-between-host-and-device), so threads beyond those 32 are executed serially, in additional warps.
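As a minimal sketch, assuming a 1-D thread block and the 32-thread warp size cited above, the helpers below show how a block's threads are grouped into warps. `warp_layout` and `warp_and_lane` are hypothetical names for illustration, not a CUDA API. For 2-D or 3-D blocks, CUDA first linearizes the thread index (x varying fastest) and then groups it the same way.

```python
WARP_SIZE = 32  # threads per warp, per the CUDA documentation cited above

def warp_layout(threads_per_block):
    """Return (number of warps, active threads in the last warp) for a 1-D block."""
    # Integer ceiling division: a partially filled warp still occupies a whole warp.
    num_warps = (threads_per_block + WARP_SIZE - 1) // WARP_SIZE
    active_in_last = threads_per_block - (num_warps - 1) * WARP_SIZE
    return num_warps, active_in_last

def warp_and_lane(thread_idx):
    """Map a 1-D threadIdx.x to (warp index, lane index within that warp)."""
    return thread_idx // WARP_SIZE, thread_idx % WARP_SIZE

if __name__ == "__main__":
    for n in (32, 100, 256):
        print(n, "threads ->", warp_layout(n))
    print("thread 70 ->", warp_and_lane(70))
```

For example, a block of 100 threads becomes 4 warps with only 4 active threads in the last one, which is one reason block sizes are usually chosen as multiples of 32.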



A thread block can contain multiple warps, and the GPU's warp scheduler schedules the execution of these warps (see https://www.cs.ucr.edu/~nael/217-f19/lectures/WarpScheduling.pptx).
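To illustrate why having several warps per block helps, here is a deliberately simplified toy model. It is an assumption for illustration, not how any real NVIDIA warp scheduler is implemented: each cycle the scheduler issues one instruction from the next ready warp in round-robin order, and a warp that has just issued must wait a fixed `latency` cycles before it can issue again. `simulate_warps` is a hypothetical name.

```python
def simulate_warps(num_warps, instrs_per_warp, latency):
    """Toy model: return the cycles needed to issue every instruction of every warp."""
    ready_at = [0] * num_warps                # earliest cycle each warp may issue again
    remaining = [instrs_per_warp] * num_warps  # instructions left per warp
    next_warp = 0                             # round-robin starting point
    cycle = 0
    while any(remaining):
        for offset in range(num_warps):
            w = (next_warp + offset) % num_warps
            if remaining[w] > 0 and ready_at[w] <= cycle:
                remaining[w] -= 1               # issue one instruction from warp w
                ready_at[w] = cycle + latency   # warp w stalls until its result is ready
                next_warp = (w + 1) % num_warps
                break                           # at most one issue per cycle
        cycle += 1                              # if no warp was ready, this cycle is idle
    return cycle

if __name__ == "__main__":
    for warps in (1, 2, 4, 8):
        cycles = simulate_warps(warps, instrs_per_warp=4, latency=4)
        print(f"{warps} warp(s): {warps * 4} instructions in {cycles} cycles")
```

With `latency=4` and 4 instructions per warp, a single warp needs 13 cycles for its 4 instructions, while 4 warps finish 16 instructions in 16 cycles: in this model the scheduler hides one warp's stall by issuing from the others.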

