Hello, the MLC course uses the two schedule primitives compute_at and reverse_compute_at many times. However, the documentation for both is abstract and hard to follow. What is the difference between reverse_compute_at and compute_at? When should I use reverse_compute_at, and when should I use compute_at?

    # This is needed for deferring annotation parsing in TVMScript
    from __future__ import annotations

    import tvm
    from tvm.script import tir as T


    @tvm.script.ir_module
    class MatmulModule:
        @T.prim_func
        def main(
            A: T.Buffer[(1024, 1024), "float32"],
            B: T.Buffer[(1024, 1024), "float32"],
            C: T.Buffer[(1024, 1024), "float32"],
        ) -> None:
            T.func_attr({"global_symbol": "main", "tir.noalias": True})
            for i, j, k in T.grid(1024, 1024, 1024):
                with T.block("matmul"):
                    # i and j are spatial axes, k is the reduction axis
                    vi, vj, vk = T.axis.remap("SSR", [i, j, k])
                    with T.init():
                        C[vi, vj] = T.float32(0)
                    C[vi, vj] += A[vi, vk] * B[vj, vk]


    sch = tvm.tir.Schedule(MatmulModule)
    block_mm = sch.get_block("matmul")
    i, j, k = sch.get_loops(block=block_mm)
    # Stage the two inputs of the matmul block through cache buffers
    A_reg = sch.cache_read(block_mm, 0, storage_scope="global.A_reg")
    B_reg = sch.cache_read(block_mm, 1, storage_scope="global.B_reg")

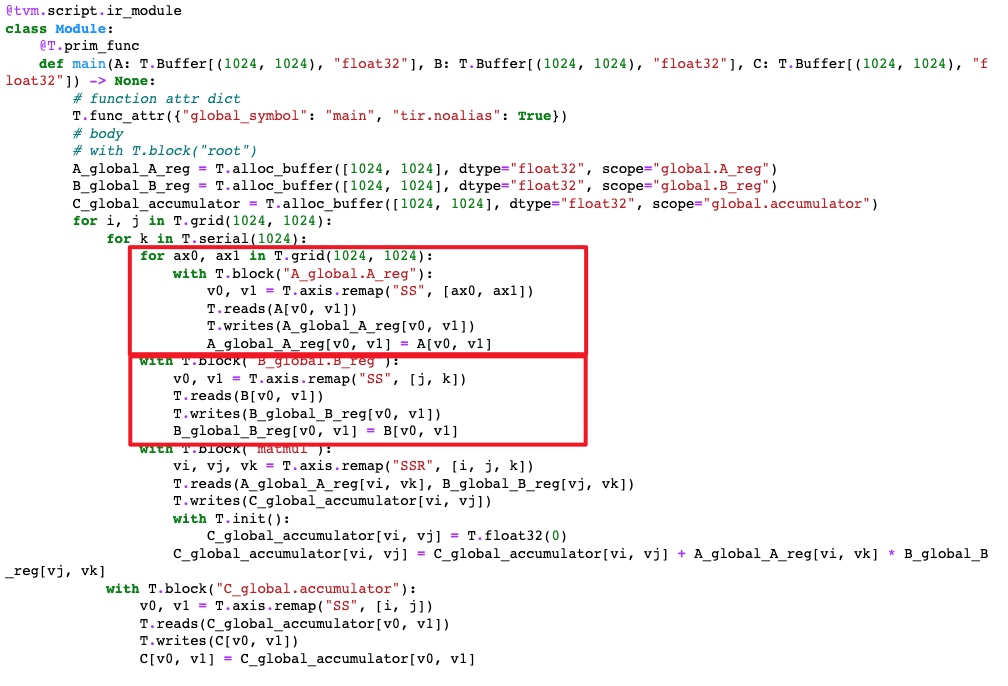

sch.reverse_compute_at(A_reg, k) # use reverse_compute_at here, in order to compare

sch.compute_at(B_reg, k)

write_back_block = sch.cache_write(block_mm, 0, storage_scope="global.accumulator")

sch.reverse_compute_at(write_back_block, j)

sch.mod.show()红框是使用reverse_compute_at和compute_at的对比结果,但是仍然不明白两者的本质区别和使用场景。 |

Answered by Hzfengsy, Aug 29, 2022

Answer selected by wzzju

compute_at moves a producer block under a loop of its consumer; reverse_compute_at moves a consumer block under a loop of its producer.
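To make that rule concrete, here is a minimal plain-Python sketch of the loop nests involved. It does not call TVM. The two-stage pipeline (producer `B = A + 1`, consumer `C = B * 2`), the sizes `N` and `TILE`, and all function names are hypothetical and only serve as illustration. In terms of the question's snippet, a block created by cache_read is a producer of the matmul block, and a block created by cache_write is a consumer of it.

```python
# Hypothetical sizes, for illustration only.
N, TILE = 8, 4


def unscheduled(A):
    """Producer and consumer each live in their own loop nest."""
    B = [0] * N
    C = [0] * N
    for i in range(N):  # producer block: B = A + 1
        B[i] = A[i] + 1
    for i in range(N):  # consumer block: C = B * 2
        C[i] = B[i] * 2
    return C


def after_compute_at(A):
    """Shape of the result of compute_at(producer, io).

    Here `io` is a loop that belongs to the CONSUMER. The producer is
    moved under it, and its own loop is regenerated so that it computes
    only the tile of B that this iteration of `io` consumes.
    """
    B = [0] * N
    C = [0] * N
    for io in range(N // TILE):  # consumer's outer loop (the target)
        for ii in range(TILE):  # regenerated producer loop
            B[io * TILE + ii] = A[io * TILE + ii] + 1
        for ii in range(TILE):  # consumer's original inner loop
            C[io * TILE + ii] = B[io * TILE + ii] * 2
    return C


def after_reverse_compute_at(A):
    """Shape of the result of reverse_compute_at(consumer, io).

    Here `io` is a loop that belongs to the PRODUCER. The consumer is
    moved under it, and its own loop is regenerated so that it covers
    only the tile of B that this iteration of `io` has just produced.
    """
    B = [0] * N
    C = [0] * N
    for io in range(N // TILE):  # producer's outer loop (the target)
        for ii in range(TILE):  # producer's original inner loop
            B[io * TILE + ii] = A[io * TILE + ii] + 1
        for ii in range(TILE):  # regenerated consumer loop
            C[io * TILE + ii] = B[io * TILE + ii] * 2
    return C
```

In this simple elementwise case the two fused nests look the same, and that is the point: the primitives differ in which block gets moved and whose loop is the target, not in the kind of loop nest they aim for. As a rule of thumb, if the loops you have already tiled belong to the consumer, pull the producer in with compute_at; if they belong to the producer, push the consumer in with reverse_compute_at.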