Optional Server/Client Architecture #36

Replies: 11 comments

-


I agree that we should probably start looking at this sooner rather than later; I was hoping to get networking in after hotloading is complete, though obviously it's best that the design for it is thoroughly thought through beforehand. Ideally I'd like to make it so that games can use a dedicated "singleplayer mode". However, maintaining this would probably be an unnecessary pain since there'd be little to no difference in terms of development, so maybe the best recourse here is to just "use multiplayer everywhere", with singleplayer games just being single-slot servers. There are a lot of different design points that need thinking over:

API

I've thought through two different high-level ways we can represent networking.

Attribute-based

Ideally we'd have properties marked with attributes, and then code generation will handle backing fields and functions and such, with the engine handling these effectively automatically. We'd be able to do Client <--> Server RPCs with attributes too.

[Net, Predicted] public float NetworkedFloat { get; set; }

[Rpc.Server]

public void RpcDropItem( Item item )

{

// ...

}

[Rpc.Client]

public void RpcPlayerKilled( Player player )

{

// ...

}

Message-based

I suspect this will be the less popular choice because it's more manual, but we could also go the alternative route and send messages, with no clientside and no prediction.

public void SendDropItem( Item item )

{

var message = new DropItemMessage { Item = item };

Send( message );

}

public void Handle( DropItemMessage message )

{

// ...

}

public void SendPlayerKilled( Player player )

{

var message = new PlayerKilledMessage { Player = player };

Send( message );

}

public void Handle( PlayerKilledMessage message )

{

// ...

}

This allows for more fine-grained control over networked functionality, but also requires more manual coding and testing; a developer would need to handle things like message serialization and handling. It's also not as easy to get right: it's easy to fall into the trap of sending too much data too often, or sending the wrong data. It's also harder to debug, as the developer needs to track and understand the flow of messages in the game.

Conclusion

As the engine grows, it's becoming increasingly important to consider the implementation of multiplayer support and network architecture early on. The attribute-based approach offers a more convenient and efficient way for developers to handle networked properties and RPCs, while the message-based approach offers more fine-grained control and flexibility. I know @peter-r-g has a strong interest in networking, so it'd be great to hear his insights on this!
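To make the "message serialization and handling" cost of the message-based option a bit more concrete, here's a rough sketch of what a game dev might end up writing by hand. Everything in it (INetMessage, MessageRouter, the one-byte message id, sending an ItemId instead of an Item) is made up for illustration and isn't an existing engine API.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical message contract; not an existing engine API.
public interface INetMessage
{
    void Write( BinaryWriter writer );
    void Read( BinaryReader reader );
}

public sealed class DropItemMessage : INetMessage
{
    public int ItemId; // assumption: send an id rather than the whole Item object

    public void Write( BinaryWriter writer ) => writer.Write( ItemId );
    public void Read( BinaryReader reader ) => ItemId = reader.ReadInt32();
}

public sealed class MessageRouter
{
    private readonly Dictionary<byte, Func<INetMessage>> _factories = new();
    private readonly Dictionary<Type, byte> _ids = new();
    private readonly Dictionary<Type, Action<INetMessage>> _handlers = new();

    // Register a message type under a one-byte id along with its handler.
    public void Register<T>( byte id, Action<T> handler ) where T : INetMessage, new()
    {
        _factories[id] = () => new T();
        _ids[typeof( T )] = id;
        _handlers[typeof( T )] = message => handler( (T)message );
    }

    // Serialize as [id][payload]; a transport would send these bytes.
    public byte[] Serialize( INetMessage message )
    {
        using var stream = new MemoryStream();
        using var writer = new BinaryWriter( stream );
        writer.Write( _ids[message.GetType()] );
        message.Write( writer );
        writer.Flush();
        return stream.ToArray();
    }

    // Rebuild the message from received bytes and invoke its handler.
    public void Dispatch( byte[] data )
    {
        using var reader = new BinaryReader( new MemoryStream( data ) );
        var message = _factories[reader.ReadByte()]();
        message.Read( reader );
        _handlers[message.GetType()]( message );
    }
}
```

With the attribute-based approach, the equivalent of Write, Read and the registration call would presumably be generated rather than written per message.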


-

Yeah, considering the extra workload on the engine side of maintaining two ways of doing it, and the fact that a multiplayer API is really not that much to deal with even in a singleplayer game once it's abstracted well enough, I'm personally inclined to agree.

Design points

Slightly out of order, makes more sense this way.

Transport

There are indeed reliable UDP solutions! Definitely a good idea to go for that. There are old ones like ENet that I have experience with, but the one I've been eyeing for a long time is Valve's GameNetworkingSockets library (also on vcpkg). It has modern features and it's also a subset of Steamworks, so if we ever want Steam support then we can just swap over, or do #defines for it, or whatever. There are also existing C# bindings for it, so we don't have to make our own if we don't want to: https://github.com/nxrighthere/ValveSockets-CSharp

Architecture

Honestly, I wonder if we couldn't abstract it all away to a certain extent and allow both, do a sort of hybrid approach. Tell me what you think, maybe I'm overengineering everything. First let's maybe define some terms (I'm not attached to them though, switch them up if it's confusing):

This is because, well, the major pain point in my mind is I really just can't fathom how you could easily and accurately sync game state across, say, 30 different instances of the game all in direct communication with each other. So for everyone's sanity it's probably best that just one of the instances becomes a host, and the rest use that as an authority on the game state. At the low level, you can send data over the network to any instance you have a connection with.

Peer-to-peer

Every peer maps to an instance.

Server / client

This is for dedicated servers. The server gets abstracted to a host instance, and clients get abstracted to regular instances.

Development

From the game dev's standpoint, it should hopefully be just the same as developing for server / client, except now it's host / instance, and you get the benefits of peer-to-peer for free. At the engine level, my initial thoughts for handling player input are as such: If it's not a dedicated host, the instance prepares user messages for the input, then checks if it's the host. If it's not the host, it sends it to the instance that is, and if it is the host, it instantly processes it locally. The idea here is to hopefully avoid running two instances of the exact same game world on the same machine, but we'll have to see how that works out.

If allowing for true peer-to-peer ends up being too complex, then I say we should go for a traditional server / client model, and let some of the clients host their own servers (listen servers). It's not quite as good as it still involves single points of failure (and fixing that would be more difficult), but it's better than forcing everyone to host their own dedicated server, and I imagine more people will use server / client to begin with?

Dedicated servers

Ideally if things are decoupled well enough we should be able to run the simulation (ticks) headlessly without issue, and it would be structured more or less the same as a client, just with some bits missing/changed. For instance, we'll probably need a slightly more generic way of checking for close events (currently it's tied to the SDL window quit event).

Synchronisation & Latency compensation

Latency compensation and prediction are tied together. I say we handle this in the same way as Source (and I think Quake 3 too) - we know it works, why reinvent the wheel? I'll need to think some more about synchronisation...

API

Quite honestly, RPCs and message-based networking don't look all that different to me. One could do all of their message-based networking through RPCs if they wanted to. That together with my negative experiences in purely message-based networking makes me lean heavily towards the attribute-based API you laid out.
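Going back to the input handling described under Development: the routing rule (dedicated host does nothing, host processes locally, everyone else forwards to the host) could look roughly like this. The Instance and InputMessage types and the sendToHost callback are placeholders made up for the sketch, and the actual network hop is stubbed out as a delegate.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types for illustration only.
public readonly record struct InputMessage( int PlayerId, float MoveX, float MoveY );

public sealed class Instance
{
    private readonly Action<InputMessage> _sendToHost;

    public bool IsHost { get; }
    public bool IsDedicated { get; }
    public List<InputMessage> Processed { get; } = new();

    public Instance( bool isHost, bool isDedicated, Action<InputMessage> sendToHost )
    {
        IsHost = isHost;
        IsDedicated = isDedicated;
        _sendToHost = sendToHost;
    }

    // Called with the local player's input for the current tick.
    public void SubmitLocalInput( InputMessage input )
    {
        if ( IsDedicated )
            return; // a dedicated host has no local player

        if ( IsHost )
            ProcessInput( input ); // host: apply immediately, no network hop
        else
            _sendToHost( input ); // regular instance: forward to the authority
    }

    // Host-side entry point, used for local and remote input alike.
    public void ProcessInput( InputMessage input ) => Processed.Add( input );
}
```

Because local and remote input both end up in ProcessInput, the host only ever simulates one copy of the world, which is the point of the idea above.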


-

I think client/server architecture would benefit most people. @MuffinTastic's idea of supporting both does sound plausible as an abstraction layer for the transport, but in general peer-to-peer will fall apart much faster than a client/server setup when player counts increase. I would say it is worth looking into, but overall it should not be a necessity to support first-party.

I have personally never messed with prediction implementations but as @MuffinTastic pointed out we do not need to reinvent the wheel here. When it comes to synchronizing state: for lag compensation purposes, the server should maintain a backlog of previous world states. With that backlog, in the event that a client goes out of sync, they can request a full world state from the server and get back on track. For general per-tick networking, it would be beneficial to only send partial world updates. Full-world updates should only be resorted to for out-of-sync correction. If we plan on limiting what entities get networked to clients, that would definitely need a more complex solution. Perhaps serializing all changes of networkable entities once and then building all the combinations to then be sent out to clients.
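A minimal sketch of the backlog and partial-update idea, under some loud assumptions: entity state is reduced to a single int per entity id, the backlog is a fixed-size queue, and removed entities are not handled. WorldSnapshot and SnapshotHistory are invented names, not engine types.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical snapshot: entity id -> serialized state (a plain int here for brevity).
public sealed class WorldSnapshot
{
    public int Tick { get; }
    public IReadOnlyDictionary<int, int> Entities { get; }

    public WorldSnapshot( int tick, IDictionary<int, int> entities )
    {
        Tick = tick;
        Entities = new Dictionary<int, int>( entities ); // copy so history stays immutable
    }
}

public sealed class SnapshotHistory
{
    private readonly Queue<WorldSnapshot> _history = new();
    private readonly int _capacity;

    public SnapshotHistory( int capacity ) => _capacity = capacity;

    public void Push( WorldSnapshot snapshot )
    {
        _history.Enqueue( snapshot );
        if ( _history.Count > _capacity )
            _history.Dequeue(); // drop the oldest state
    }

    // Returns the stored snapshot for a tick, or null if it has aged out.
    public WorldSnapshot? Find( int tick )
    {
        foreach ( var snapshot in _history )
            if ( snapshot.Tick == tick )
                return snapshot;
        return null;
    }

    // Partial update: only entities whose state differs from the baseline.
    // With no baseline (client out of sync) this degrades to a full update.
    public static Dictionary<int, int> Delta( WorldSnapshot? baseline, WorldSnapshot current )
    {
        var changed = new Dictionary<int, int>();
        foreach ( var (id, state) in current.Entities )
        {
            if ( baseline is null || !baseline.Entities.TryGetValue( id, out var old ) || old != state )
                changed[id] = state;
        }
        return changed;
    }
}
```

The server would look up the last tick a client acknowledged with Find, and fall back to a full update when that tick is no longer in the backlog.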

Reliable UDP or TCP would be best. The GameNetworkingSockets library @MuffinTastic mentioned looks promising for this. I believe @xezno in the past mentioned wanting to support Steam or at least its networking out of the box. With that in mind Facepunch.Steamworks really is the best option out there for Steam-related services in C#.

In theory, it should just be an instance of Mocha running without any of the rendering pipelines. Just give it a console interface and call it a day. That way it maintains all of the expected functionality while only using what it really needs. In regards to code-splitting that might become a bit more of a complex process. Maybe create attributes we can mark as "ServerOnly"/"ClientOnly" and have an analyzer run over it and strip it where necessary. I have never actually looked into this before so I am not sure how possible it could be.
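As a starting point for the "ServerOnly"/"ClientOnly" idea, the marker attributes themselves are easy to sketch. The stripping step is the hard part and is not shown here: the snippet below only uses reflection to list what a client build would want removed, as a stand-in for whatever analyzer or build step ends up doing the real work. RealmScanner and Door are made-up names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical marker attributes; names taken from the suggestion above.
[AttributeUsage( AttributeTargets.Method | AttributeTargets.Class )]
public sealed class ServerOnlyAttribute : Attribute { }

[AttributeUsage( AttributeTargets.Method | AttributeTargets.Class )]
public sealed class ClientOnlyAttribute : Attribute { }

public static class RealmScanner
{
    // Lists the methods declared on a type that are marked [ServerOnly].
    public static IEnumerable<string> ServerOnlyMethods( Type type ) =>
        type.GetMethods( BindingFlags.Instance | BindingFlags.Static |
                         BindingFlags.Public | BindingFlags.NonPublic |
                         BindingFlags.DeclaredOnly )
            .Where( m => m.GetCustomAttribute<ServerOnlyAttribute>() is not null )
            .Select( m => m.Name );
}

// Example game class mixing both realms.
public class Door
{
    [ServerOnly] public void ValidateUnlock() { }
    [ClientOnly] public void PlayOpenSound() { }
    public void Open() { }
}
```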

Covered it briefly in Synchronisation.

I don't see why we cannot support both of those solutions. Our networking setup will most likely follow a message-based process. Just allow the end-user to hook into that system with their own custom message classes. If it came down to only supporting one method for the end-user, I would much prefer the attribute approach. The only issue I see with @xezno's code example is making use of the basic C# float type. If we plan on allowing people to network just basic types in C#, that might become a nightmare to manage. It seems Facepunch's S&box struggles with it quite a bit when it comes to more complex types like lists, dictionaries, etc. Personally, I am more of a fan of creating dedicated types for networking items so that it is more apparent what is being networked and it can be better managed on the library side when it comes to changes in the type's instance.


-

Right, perhaps I should also put the idea more succinctly: It's basically using peer-to-peer in a limited fashion, pretending one of the peers is a listen server, with the benefit of it being a lot easier to change "who" the server is. I suppose the downside of that is that you could definitely say it's not true peer-to-peer. Halo 3 seems to use something analogous to this model for its multiplayer, and it does pretty well for itself. The page only mentions that hosts can "migrate" and doesn't mention exactly how, so maybe there's some other way than masquerading as peer-to-peer, but I'm not sure what it would be at the moment.

Anyways, I'm not exactly sure what you mean by peer-to-peer falling apart, but if it has to do with lower bandwidth or less powerful CPUs on home computers, then with a Halo-like approach it's just about as susceptible to it as listen servers are. I don't think that's so bad, as the average player tends to understand that some people's PCs are better suited than others to hosting games.

If it's true peer-to-peer with a lockstep model though, as described on that page for Halo's campaign coop, I could definitely see it getting pretty nasty. Any framerate stutters on any of those 30 players' instances would cause a hiccup and freeze the game for everyone, not good! You could do away with lockstep in peer-to-peer, but that invites more avenues of cheating... unlike Facepunch, it's my opinion we should take that into heavy consideration and at least try to limit the effects of cheats when possible, due to having spent years of my life manually banning cheaters in a game that did not try.


-

If we're doing client-server then supporting listen servers is a good idea, mainly to make it so that developers don't have to manually boot up a dedicated server next to the engine, but we could also support it as a "client server" solution for developers that want to make games using that model.

My main point / questions regarding synchronisation were more "where do we do this from" rather than "how do we do it", I should have been clearer on that front; do we want networking entirely in C# or should we do it in C++? In my mind it'd be way easier to do it all in C#, but maybe there's something I'm not thinking about. There's also the benefit of C# being easier to edit, so if someone did want to use full peer-to-peer networking, they could swap out the default networking solution for their own. I do agree that we should take as much info as we can from good existing solutions (Source, id Tech, etc). The Valve wiki networking resources are amazing but I'll throw these in here too: https://fabiensanglard.net/quake3/network.php, https://www.jfedor.org/quake3/

Yeah I did originally think it'd be best to use GameNetworkingSockets since Facepunch.Steamworks has a really nice wrapper around them: https://wiki.facepunch.com/steamworks/Creating_A_Socket_Server. We should ideally abstract this away so that, if we wanted to add or switch transport layers in future, we can do that.
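One possible shape for that abstraction, purely as a sketch: the engine talks to a small interface, and GameNetworkingSockets (or anything else) lives behind it. ITransport, its Send/Poll members and LoopbackTransport are all invented here and don't mirror any real library's API; the loopback version just queues packets in memory, which also happens to fit the "singleplayer is a single-slot server" idea.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction; a real backend would wrap an actual socket library.
public interface ITransport
{
    void Send( int connectionId, ReadOnlySpan<byte> data, bool reliable );

    // Drains received packets, invoking the callback once per packet.
    void Poll( Action<int, byte[]> onReceive );
}

// In-memory transport: nothing leaves the process.
public sealed class LoopbackTransport : ITransport
{
    private readonly Queue<(int ConnectionId, byte[] Data)> _inbox = new();

    public void Send( int connectionId, ReadOnlySpan<byte> data, bool reliable )
    {
        // Copy, because the caller may reuse its buffer after Send returns.
        _inbox.Enqueue( (connectionId, data.ToArray()) );
    }

    public void Poll( Action<int, byte[]> onReceive )
    {
        while ( _inbox.Count > 0 )
        {
            var (connectionId, data) = _inbox.Dequeue();
            onReceive( connectionId, data );
        }
    }
}
```

Swapping transports later would then mean writing another ITransport implementation rather than touching game-facing code.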

As for types I think having separate networkable types could be a good idea. As long as it's done right and they support all the same operations as basic C# types, it shouldn't be any more friction than adding an attribute in the first place, so instead of doing

[Net] public float MyFloat { get; set; }

it'd be

public NetFloat MyFloat { get; set; }

and then we'd obviously have a bunch of types that would cover as many use cases as possible (primitive values, collections). We could even expose the functionality required to make custom networkable types this way, then if game devs need anything specific that we haven't added, they can do it entirely within their games rather than having to go through engine code.
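Here's roughly what a NetFloat that "supports all the same operations" might look like, assuming implicit conversions are enough to make it behave like a float at call sites. INetworkable is a hypothetical contract for custom networkable types; neither it nor this NetFloat exists in the engine today.

```csharp
using System;
using System.IO;

// Hypothetical contract a game could implement for its own networkable types.
public interface INetworkable
{
    void Write( BinaryWriter writer );
    void Read( BinaryReader reader );
}

public struct NetFloat : INetworkable
{
    private float _value;

    public NetFloat( float value ) => _value = value;

    // Implicit conversions keep call sites looking like a plain float.
    public static implicit operator float( NetFloat net ) => net._value;
    public static implicit operator NetFloat( float value ) => new NetFloat( value );

    public void Write( BinaryWriter writer ) => writer.Write( _value );
    public void Read( BinaryReader reader ) => _value = reader.ReadSingle();

    public override string ToString() => _value.ToString();
}

public class Player
{
    public NetFloat Health { get; set; } = 100f;
}
```

Arithmetic like `player.Health = player.Health - 25f;` goes through the float conversions, so game code reads the same as it would with a plain float. One catch with a struct: calling Read on a property value mutates a copy, so the engine would need to read into a local and assign it back.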

I haven't watched all of this talk but there's a nice GDC talk on Halo Reach's networking here which also covers some of this.


-

I am not very familiar with C++ so I am biased but I think C# would fit well. If we want to allow someone to roll their own networking solution we can just create a DLL project that has the basics implemented and the rest can be expanded on in its derivatives.

What you mentioned in Discord here with having the C# type then the generator using the networked type would be the best of both worlds. We would just need to enforce that there is an implicit conversion between the types and a way to find said networked type based on the C# type. Another thing we need to consider is the behavior of multiplayer with the project system and hot loads. Do we want to send the whole project to any other clients joining? If a hot load occurs, how do we react to that?


-

Agreed, I'd rather write it in C# too. We get loads of benefits that way (including hotloading).

We can get away with just sending the raw data for the assembly after the server compiles it, and then have clients swap that in. At first this sounds like a security nightmare, because you're receiving a dll from some remote server and then loading that in... but then the alternative is sending the code over and having clients compile that themselves, which would just be the same thing but with extra steps. I want to try and avoid a whitelist as much as possible with this because I believe that a game engine should just let developers do whatever, but I also want to avoid malicious code where possible. (I believe Windows does an anti-malware scan whenever we load an assembly in anyway through AMSI, so maybe this isn't an issue... if not we should be able to take control of AMSI and do it ourselves.)
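On the client side, "swap that in" could be as small as the sketch below, assuming the raw assembly bytes have already arrived over the network. It uses .NET's AssemblyLoadContext with a collectible context so the old assembly can be unloaded on the next hotload. RemoteAssemblyLoader and the "RemoteGame" context name are made up, and this does nothing at all about the security concerns above: the received code still runs with full trust.

```csharp
using System;
using System.IO;
using System.Reflection;
using System.Runtime.Loader;

public static class RemoteAssemblyLoader
{
    // Loads a compiled game assembly received from the server.
    // A collectible context lets the client unload it again on the next hotload.
    public static (AssemblyLoadContext Context, Assembly Assembly) Load( byte[] assemblyBytes )
    {
        var context = new AssemblyLoadContext( name: "RemoteGame", isCollectible: true );
        using var stream = new MemoryStream( assemblyBytes );
        var assembly = context.LoadFromStream( stream );
        return (context, assembly);
    }
}
```

When a new build arrives, the client would drop its references to the old assembly, call Unload on the old context, and call Load again with the new bytes.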


-

In distribution builds, I would assume all of the hot-loading features would be disabled anyway so security shouldn't be much of an issue. You should only be working with people you trust to be honest.


-

|

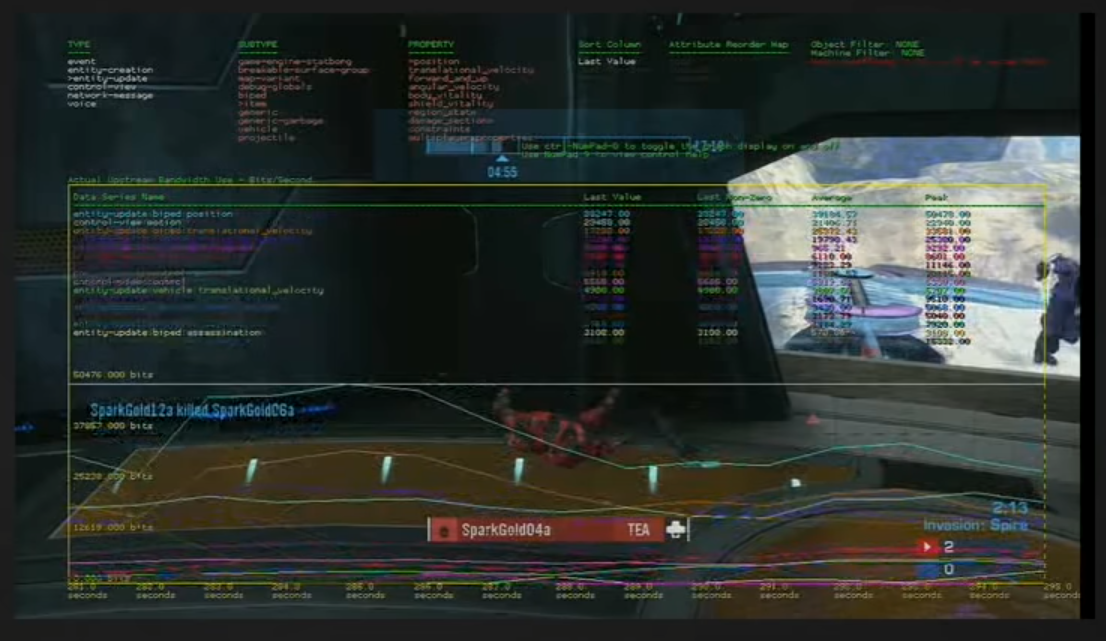

We should also consider what kind of tools we want to have available to the end developer. Watching the GDC talk that @xezno linked there was a neat graph tool shown in it. Sadly I cannot find any of the source videos used in the talk so I can only provide a very low-resolution screenshot from the presentation: The main points to replicate would be being able to collect all of the types of data that are being networked and track their size with a timestamp. That way it can easily be graphed for better visibility. Later on, we could implement filters on a per-client send-and-receive or entity basis. You can find the part where he is talking about inspection tools and the profiler at 1:00:07 in the video. This link here should take you straight to that part. |
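The data collection side of such a tool is small. A minimal sketch, assuming the engine can call a Record hook every time it serializes something: each sample stores the networked type, its size, and a timestamp, and a query sums bytes per type over a time window for graphing. NetSample and NetProfiler are hypothetical names, and the per-client and per-entity filters are left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical profiler sample: what was networked, how big it was, and when.
public readonly record struct NetSample( string TypeName, int Bytes, TimeSpan Time );

public sealed class NetProfiler
{
    private readonly List<NetSample> _samples = new();

    public void Record( string typeName, int bytes, TimeSpan time ) =>
        _samples.Add( new NetSample( typeName, bytes, time ) );

    // Total bytes per networked type within [from, to), ready to plot.
    public Dictionary<string, int> BytesByType( TimeSpan from, TimeSpan to ) =>
        _samples
            .Where( s => s.Time >= from && s.Time < to )
            .GroupBy( s => s.TypeName )
            .ToDictionary( g => g.Key, g => g.Sum( s => s.Bytes ) );
}
```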


-


It might be worth enabling discussions for this repo and moving to that from here on out. There are many facets to this change that are each worthy of their own conversations; having them all mixed together in each post is a bit confusing in my opinion.


-


Can do, I'll move this to a discussion too.


-

One of the big pros of using engines like Source and Unreal is their built-in server/client architectures. As opposed to constantly having to roll your own networking like in engines like Unity, those engines handle 90% of the networking for you, and all you have to do as the game programmer is tell the engine what values you want replicated to clients and when. Having the server share the same codebase (both the game code and the code for the APIs it uses) reduces headaches for game developers on many levels.

If this is something desirable for this engine, it's probably best to consider it before it grows too big. It would likely be possible to retrofit networking onto it later, but the more code one has to modify, the more painful that's gonna be.

I say "optional" because it's not like everyone wants to make a multiplayer game, so we'll need to find ways of ensuring singleplayer and multiplayer logic can co-exist so to speak. Or we could just make every singleplayer game technically multiplayer and the player would never know, but that feels awkward.
