+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)<br>+ [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)<br>+ [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html) |
+| *Multi-step agentic RL* | + [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)<br>+ [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)<br>+ [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html)<br>+ [Example: train a web-search agent](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) |
+| *Full-lifecycle data pipelines* | + [Rollout task mixing and selection](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)<br>+ [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [paper](https://arxiv.org/pdf/2510.26374))<br>+ [Research project: learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [paper](https://arxiv.org/pdf/2510.25441))<br>+ [Experience replay with prioritization](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) |
+| *Algorithm development* | + [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) (📝 [paper](https://arxiv.org/pdf/2508.11408))<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [paper](https://arxiv.org/abs/2509.24203))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) |
+| *Going deeper into Trinity-RFT* | + [Full configurations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)<br>+ [Benchmark toolkit for quick verification and experimentation](./benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html) |
+
+
+> [!NOTE]
+> For more tutorials, please refer to the [Trinity-RFT documentation](https://modelscope.github.io/Trinity-RFT/).
-* 📊 For data engineers. [[tutorial]](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_operator.html)
-  - Create datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.
-  - Example: [Data Processing Foundations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html), [Online Task Curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots)
 ## 🌟 Key Features
@@ -46,14 +67,14 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni
 * **Agentic RL Support:**
   - Supports both concatenated and general multi-step agentic workflows.
-  - Able to directly train agent applications developed using agent frameworks like AgentScope.
+  - Able to directly train agent applications developed using agent frameworks like [AgentScope](https://github.com/agentscope-ai/agentscope).

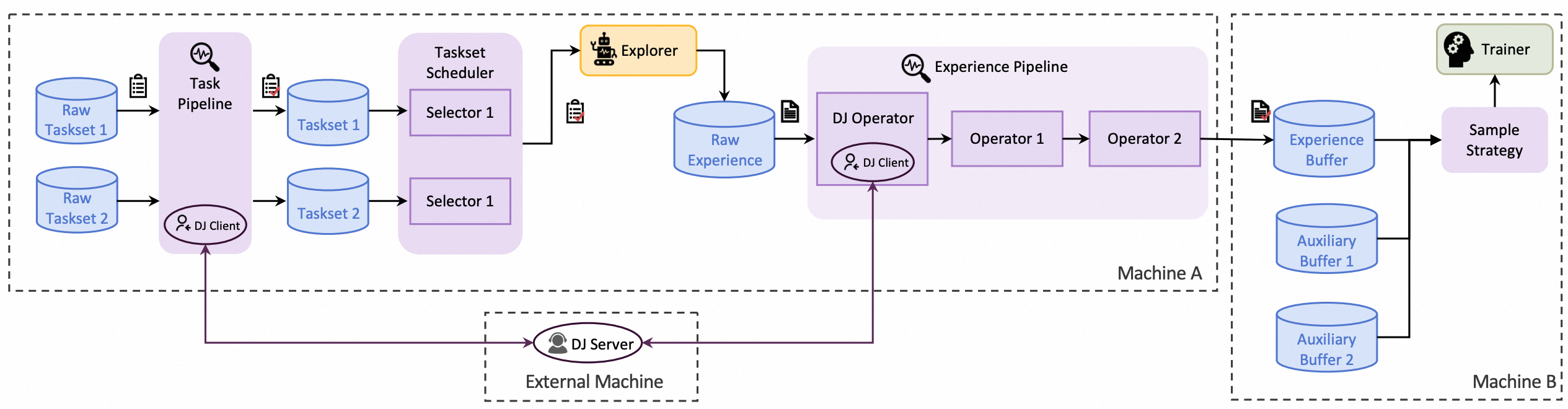

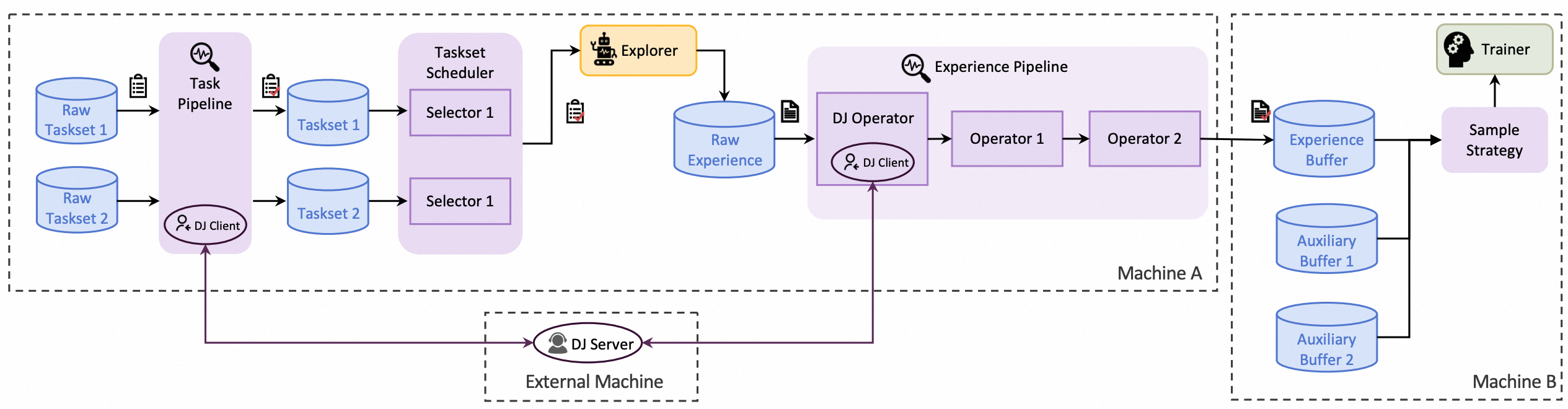

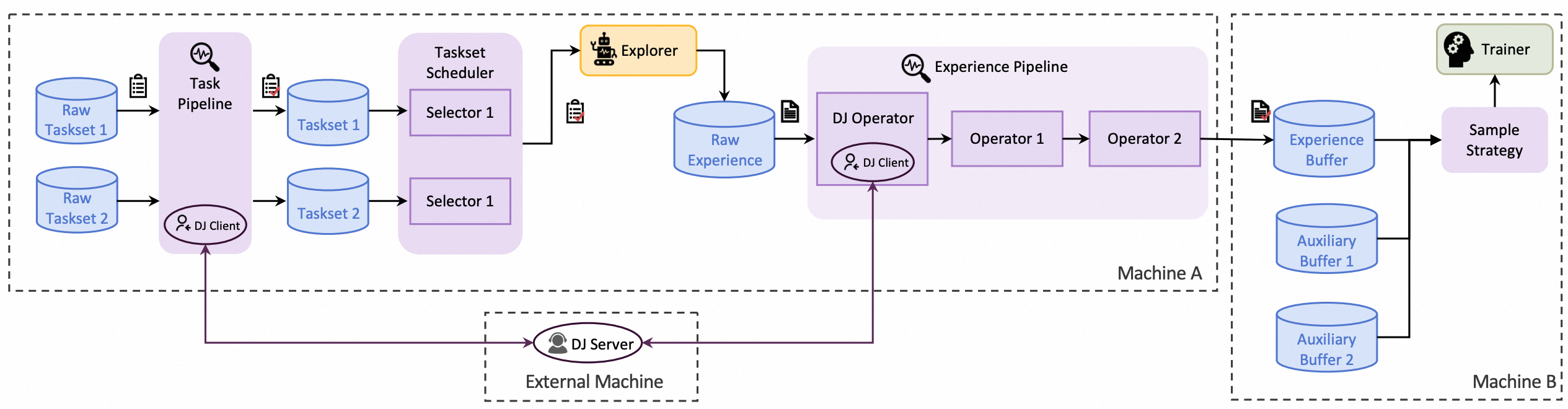

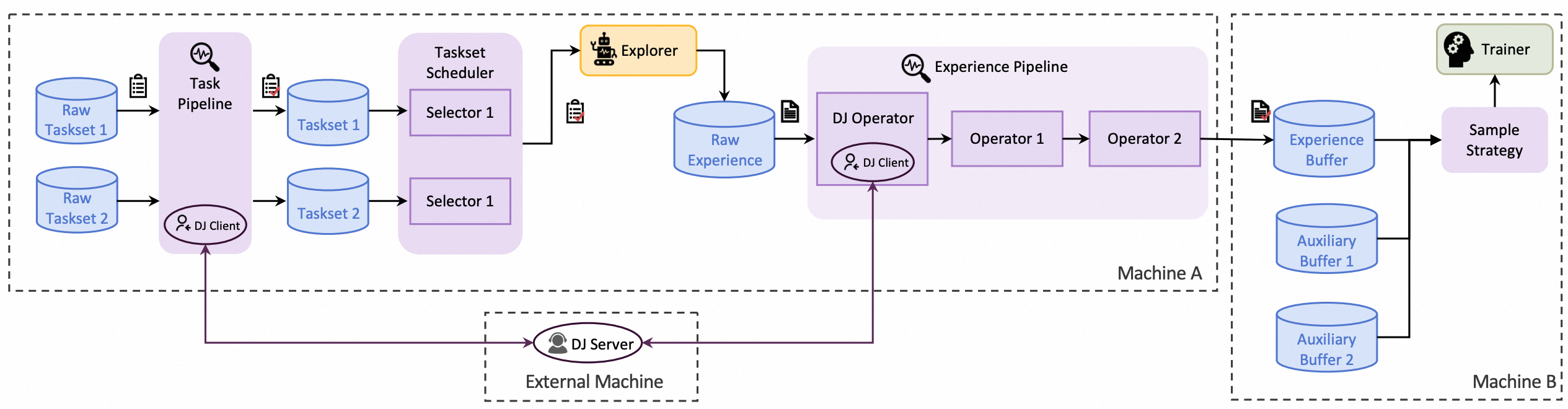

 * **Full-Lifecycle Data Pipelines:**
   - Enables pipeline processing of rollout tasks and experience samples.
-  - Active data management (e.g., prioritization, cleaning, augmentation) throughout the RFT lifecycle.
-  - Native support for multi-task joint learning.
+  - Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.
+  - Native support for multi-task joint learning and online task curriculum construction.

@@ -64,24 +85,10 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

-## 🔨 Tutorials and Guidelines

-

-

-| Category | Tutorial / Guideline |

-| --- |------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| Run diverse RFT modes | + [Quick example: GRPO on GSM8k](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)<br>+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)<br>+ [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)<br>+ [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html) |
-| Multi-step agentic scenarios | + [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)<br>+ [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)<br>+ [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html) |
-| Advanced data pipelines | + [Rollout task mixing and selection](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)<br>+ [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) ([paper](https://arxiv.org/pdf/2510.26374))<br>+ [Experience replay](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) |
-| Algorithm development / research | + [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) ([paper](https://arxiv.org/pdf/2508.11408))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([paper](https://arxiv.org/abs/2509.24203)) |
-| Going deeper into Trinity-RFT | + [Full configurations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)<br>+ [Benchmark toolkit for quick verification and experimentation](./benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html) |
-
-
-> [!NOTE]
-> For more tutorials, please refer to the [Trinity-RFT documentation](https://modelscope.github.io/Trinity-RFT/).
-
 ## 🚀 News
+* [2025-11] Introducing [Learn-to-Ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask): a framework for training proactive dialogue agents from offline expert data ([paper](https://arxiv.org/pdf/2510.25441)).
 * [2025-11] Introducing [BOTS](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots): online RL task selection for efficient LLM fine-tuning ([paper](https://arxiv.org/pdf/2510.26374)).
 * [2025-11] [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.2)] Trinity-RFT v0.3.2 released: bug fixes and advanced task selection & scheduling.
 * [2025-10] [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 released: multi-stage training support, improved agentic RL examples, LoRA support, debug mode and new RL algorithms.
diff --git a/README_zh.md b/README_zh.md
index 2a6c8e03d7..bd8846f4d6 100644
--- a/README_zh.md
+++ b/README_zh.md
@@ -20,21 +20,43 @@
 ## 💡 什么是 Trinity-RFT ?
-Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RFT)框架。 其将 RFT 流程解耦为三个关键模块:**Explorer**、**Trainer** 和 **Buffer**,并面向不同背景和目标的用户提供相应功能:
+Trinity-RFT 是一个通用、灵活、用户友好的大语言模型(LLM)强化微调(RFT)框架。 其将 RFT 流程解耦为三个协同运行的关键模块:
-* 🤖 面向智能体应用开发者。[[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_workflow.html)
-  - 训练智能体应用,以增强其在指定环境中完成任务的能力
-  - 示例:[多轮交互](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_multi_turn.html),[ReAct 智能体](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_react.html)
+* **Explorer** 负责执行智能体-环境交互,并生成经验数据;
-* 🧠 面向 RL 算法研究者。[[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_algorithm.html)
-  - 在简洁、可插拔的类中设计和验证新的 RL 算法
-  - 示例:[SFT/RL 混合算法](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_mix_algo.html)
+* **Trainer** 在经验数据上最小化损失函数,以此更新模型参数;
-* 📊 面向数据工程师。[[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_operator.html)
-  - 设计针对任务定制的数据集,构建处理流水线以支持数据清洗、增强以及人类参与场景
-  - 示例:[数据处理基础](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_data_functionalities.html),[在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots)
+* **Buffer** 负责协调整个 RFT 生命周期中的数据处理流水线。
-# 🌟 核心特性
+
+Trinity-RFT 面向不同背景和目标的用户提供相应功能:
+
+* 🤖 **智能体应用开发者:** 训练智能体应用,以增强其在特定领域中完成任务的能力 [[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_workflow.html)
+
+* 🧠 **强化学习算法研究者:** 通过定制化简洁、可插拔的模块,设计、实现与验证新的强化学习算法 [[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_algorithm.html)
+
+* 📊 **数据工程师:** 设计针对任务定制的数据集,构建处理流水线以支持数据清洗、增强以及人类参与场景 [[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_operator.html)
+
+
+
+## 🔨 教程与指南
+
+
+| 类别 | 教程 / 指南 |
+| --- | ----|
+| *运行各种 RFT 模式* | + [快速开始:在 GSM8k 上运行 GRPO](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_reasoning_basic.html)<br>+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_reasoning_advanced.html)<br>+ [全异步 RFT](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_async_mode.html)<br>+ [通过 DPO 或 SFT 进行离线学习](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_dpo.html) |
+| *多轮智能体强化学习* | + [拼接多轮任务](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_multi_turn.html)<br>+ [通用多轮任务](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_step_wise.html)<br>+ [调用智能体框架中的 ReAct 工作流](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_react.html)<br>+ [例子:训练一个网络搜索智能体](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) |
+| *全生命周期的数据流水线* | + [Rollout 任务混合与选取](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_selector.html)<br>+ [在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [论文](https://arxiv.org/pdf/2510.26374))<br>+ [研究项目:learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [论文](https://arxiv.org/pdf/2510.25441))<br>+ [经验回放机制](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_data_functionalities.html) |
+| *强化学习算法开发* | + [使用 Trinity-RFT 进行 RL 算法开发](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_mix_algo.html) (📝 [论文](https://arxiv.org/pdf/2508.11408))<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [论文](https://arxiv.org/abs/2509.24203))<br>+ 不可验证的领域: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) |
+| *深入认识 Trinity-RFT* | + [完整配置指南](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/trinity_configs.html)<br>+ [用于快速验证和实验的 Benchmark 工具](./benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/synchronizer.html) |
+
+
+> [!NOTE]
+> 更多教程请参考 [Trinity-RFT 文档](https://modelscope.github.io/Trinity-RFT/)。
+
+
+
+## 🌟 核心特性
 * **灵活的 RFT 模式:**
   - 支持同步/异步、on-policy/off-policy 以及在线/离线强化学习
@@ -45,14 +67,14 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF
 * **Agentic RL 支持:**
   - 支持拼接式多轮和通用多轮交互
-  - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用
+  - 能够直接训练使用 [AgentScope](https://github.com/agentscope-ai/agentscope) 等智能体框架开发的 Agent 应用

-* **全流程的数据流水线:**
+* **全生命周期的数据流水线:**
   - 支持 rollout 任务和经验数据的流水线处理
   - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)
-  - 原生支持多任务联合训练
+  - 原生支持多任务联合训练与课程学习

@@ -64,25 +86,9 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF

-## 🔨 教程与指南

-

-

-| Category | Tutorial / Guideline |

-| --- |-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| 运行各种 RFT 模式 | + [快速开始:在 GSM8k 上运行 GRPO](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)<br>+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)<br>+ [全异步 RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)<br>+ [通过 DPO 或 SFT 进行离线学习](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html) |
-| 多轮智能体场景 | + [拼接多轮任务](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)<br>+ [通用多轮任务](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)<br>+ [调用智能体框架中的 ReAct 工作流](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html) |
-| 数据流水线进阶能力 | + [Rollout 任务混合与选取](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)<br>+ [在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) ([论文](https://arxiv.org/pdf/2510.26374))<br>+ [经验回放](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) |
-| RL 算法开发/研究 | + [使用 Trinity-RFT 进行 RL 算法开发](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) ([论文](https://arxiv.org/pdf/2508.11408))<br>+ 不可验证的领域:[RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([论文](https://arxiv.org/abs/2509.24203)) |
-| 深入认识 Trinity-RFT | + [完整配置指南](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)<br>+ [用于快速验证和实验的 Benchmark 工具](./benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html) |
-
-
-> [!NOTE]
-> 更多教程请参考 [Trinity-RFT 文档](https://modelscope.github.io/Trinity-RFT/)。
-
-
-
 ## 🚀 新闻
+* [2025-11] 推出 [Learn-to-Ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask):利用离线专家数据,训练具备主动问询能力的对话智能体([论文](https://arxiv.org/pdf/2510.25441)).
 * [2025-11] 推出 [BOTS](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots):在线 RL 任务选择,实现高效 LLM 微调([论文](https://arxiv.org/pdf/2510.26374))。
 * [2025-11] [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.2)] Trinity-RFT v0.3.2 发布:修复若干 Bug 并支持进阶的任务选择和调度。
 * [2025-10] [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 发布:多阶段训练支持、改进的智能体 RL 示例、LoRA 支持、调试模式和全新 RL 算法。
diff --git a/docs/sphinx_doc/source/main.md b/docs/sphinx_doc/source/main.md
index c44ff90401..429a6d2c4c 100644
--- a/docs/sphinx_doc/source/main.md
+++ b/docs/sphinx_doc/source/main.md
@@ -1,18 +1,39 @@
 ## 💡 What is Trinity-RFT?
-Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuning (RFT) of large language models (LLMs). It decouples the RFT process into three key components: **Explorer**, **Trainer**, and **Buffer**, and provides functionalities for users with different backgrounds and objectives:
-* 🤖 For agent application developers. [[tutorial]](/tutorial/develop_workflow.md)
-  - Train agent applications to improve their ability to complete tasks in specific environments.
-  - Examples: [Multi-Turn Interaction](/tutorial/example_multi_turn.md), [ReAct Agent](/tutorial/example_react.md)
+Trinity-RFT is a general-purpose, flexible and user-friendly framework for LLM reinforcement fine-tuning (RFT).
+It decouples RFT into three components that work in coordination:
+
+* **Explorer** generates experience data via agent-environment interaction;
+
+* **Trainer** updates model weights by minimizing losses on the data;
+
+* **Buffer** pipelines data processing throughout the RFT lifecycle.
+
+
+Trinity-RFT provides functionalities for users with different backgrounds and objectives:
+
+* 🤖 **Agent application developers:** Train LLM-powered agents and improve their capabilities in specific domains [[tutorial]](/tutorial/develop_workflow.md)
+
+* 🧠 **Reinforcement learning researchers:** Design, implement and validate new RL algorithms using compact, plug-and-play modules that allow non-invasive customization [[tutorial]](/tutorial/develop_algorithm.md)
+
+* 📊 **Data engineers:** Create RFT datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios [[tutorial]](/tutorial/develop_operator.md)
+
+
+
+
+## 🔨 Tutorials and Guidelines
+
+
+| Category | Tutorial / Guideline |
+| --- | ----|
+| *Run diverse RFT modes* | + [Quick start: GRPO on GSM8k](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [Fully asynchronous RFT](/tutorial/example_async_mode.md)<br>+ [Offline learning by DPO or SFT](/tutorial/example_dpo.md) |
+| *Multi-step agentic RL* | + [Concatenated multi-turn workflow](/tutorial/example_multi_turn.md)<br>+ [General multi-step workflow](/tutorial/example_step_wise.md)<br>+ [ReAct workflow with an agent framework](/tutorial/example_react.md)<br>+ [Example: train a web-search agent](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) |
+| *Full-lifecycle data pipelines* | + [Rollout task mixing and selection](/tutorial/develop_selector.md)<br>+ [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [paper](https://arxiv.org/pdf/2510.26374))<br>+ [Research project: learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [paper](https://arxiv.org/pdf/2510.25441))<br>+ [Experience replay with prioritization](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](/tutorial/example_data_functionalities.md) |
+| *Algorithm development* | + [RL algorithm development with Trinity-RFT](/tutorial/example_mix_algo.md) (📝 [paper](https://arxiv.org/pdf/2508.11408))<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [paper](https://arxiv.org/abs/2509.24203))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) |
+| *Going deeper into Trinity-RFT* | + [Full configurations](/tutorial/trinity_configs.md)<br>+ [Benchmark toolkit for quick verification and experimentation](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](/tutorial/synchronizer.md) |
-* 🧠 For RL algorithm researchers. [[tutorial]](/tutorial/develop_algorithm.md)
-  - Design and validate new reinforcement learning algorithms using compact, plug-and-play modules.
-  - Example: [Mixture of SFT and GRPO](/tutorial/example_mix_algo.md)
-* 📊 For data engineers. [[tutorial]](/tutorial/develop_operator.md)
-  - Create datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.
-  - Example: [Data Processing Foundations](/tutorial/example_data_functionalities.md), [Online Task Curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots)
 ## 🌟 Key Features
@@ -26,14 +47,14 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni
 * **Agentic RL Support:**
   - Supports both concatenated and general multi-step agentic workflows.
-  - Able to directly train agent applications developed using agent frameworks like AgentScope.
+  - Able to directly train agent applications developed using agent frameworks like [AgentScope](https://github.com/agentscope-ai/agentscope).

 * **Full-Lifecycle Data Pipelines:**
   - Enables pipeline processing of rollout tasks and experience samples.
-  - Active data management (e.g., prioritization, cleaning, augmentation) throughout the RFT lifecycle.
-  - Native support for multi-task joint learning.
+  - Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.
+  - Native support for multi-task joint learning and online task curriculum construction.

@@ -45,18 +66,6 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

-## 🔨 Tutorials and Guidelines

-

-

-| Category | Tutorial / Guideline |

-| --- | --- |

-| Run diverse RFT modes | + [Quick example: GRPO on GSM8k](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [Fully asynchronous RFT](/tutorial/example_async_mode.md)<br>+ [Offline learning by DPO or SFT](/tutorial/example_dpo.md) |
-| Multi-step agentic scenarios | + [Concatenated multi-turn workflow](/tutorial/example_multi_turn.md)<br>+ [General multi-step workflow](/tutorial/example_step_wise.md)<br>+ [ReAct workflow with an agent framework](/tutorial/example_react.md) |
-| Advanced data pipelines | + [Rollout task mixing and selection](/tutorial/develop_selector.md)<br>+ [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) ([paper](https://arxiv.org/pdf/2510.26374))<br>+ [Experience replay](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](/tutorial/example_data_functionalities.md) |
-| Algorithm development / research | + [RL algorithm development with Trinity-RFT](/tutorial/example_mix_algo.md) ([paper](https://arxiv.org/pdf/2508.11408))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([paper](https://arxiv.org/abs/2509.24203))|
-| Going deeper into Trinity-RFT | + [Full configurations](/tutorial/trinity_configs.md)<br>+ [Benchmark toolkit for quick verification and experimentation](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](/tutorial/synchronizer.md) |
-
-
 ## Acknowledgements
 This project is built upon many excellent open-source projects, including:
diff --git a/docs/sphinx_doc/source_zh/main.md b/docs/sphinx_doc/source_zh/main.md
index 7f1c871998..99d89a1ad2 100644
--- a/docs/sphinx_doc/source_zh/main.md
+++ b/docs/sphinx_doc/source_zh/main.md
@@ -1,20 +1,40 @@
 ## 💡 什么是 Trinity-RFT?
-Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RFT)框架。 其将 RFT 流程解耦为三个关键模块:**Explorer**、**Trainer** 和 **Buffer**,并面向不同背景和目标的用户提供相应功能:
-* 🤖 面向智能体应用开发者。[[教程]](/tutorial/develop_workflow.md)
-  - 训练智能体应用,以增强其在指定环境中完成任务的能力
-  - 示例:[多轮交互](/tutorial/example_multi_turn.md),[ReAct 智能体](/tutorial/example_react.md)
+Trinity-RFT 是一个通用、灵活、用户友好的大语言模型(LLM)强化微调(RFT)框架。 其将 RFT 流程解耦为三个协同运行的关键模块:
-* 🧠 面向 RL 算法研究者。[[教程]](/tutorial/develop_algorithm.md)
-  - 在简洁、可插拔的类中设计和验证新的 RL 算法
-  - 示例:[SFT/RL 混合算法](/tutorial/example_mix_algo.md)
+* **Explorer** 负责执行智能体-环境交互,并生成经验数据;
-* 📊 面向数据工程师。[[教程]](/tutorial/develop_operator.md)
-  - 设计针对任务定制的数据集,构建处理流水线以支持数据清洗、增强以及人类参与场景
-  - 示例:[数据处理基础](/tutorial/example_data_functionalities.md),[在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots)
+* **Trainer** 在经验数据上最小化损失函数,以此更新模型参数;
-# 🌟 核心特性
+* **Buffer** 负责协调整个 RFT 生命周期中的数据处理流水线。
+
+
+Trinity-RFT 面向不同背景和目标的用户提供相应功能:
+
+* 🤖 **智能体应用开发者:** 训练智能体应用,以增强其在特定领域中完成任务的能力 [[教程]](/tutorial/develop_workflow.md)
+
+* 🧠 **强化学习算法研究者:** 通过定制化简洁、可插拔的模块,设计、实现与验证新的强化学习算法 [[教程]](/tutorial/develop_algorithm.md)
+
+* 📊 **数据工程师:** 设计针对任务定制的数据集,构建处理流水线以支持数据清洗、增强以及人类参与场景 [[教程]](/tutorial/develop_operator.md)
+
+
+
+
+## 🔨 教程与指南
+
+
+| 类别 | 教程 / 指南 |
+| --- | ----|
+| *运行各种 RFT 模式* | + [快速开始:在 GSM8k 上运行 GRPO](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [全异步 RFT](/tutorial/example_async_mode.md)<br>+ [通过 DPO 或 SFT 进行离线学习](/tutorial/example_dpo.md) |
+| *多轮智能体强化学习* | + [拼接多轮任务](/tutorial/example_multi_turn.md)<br>+ [通用多轮任务](/tutorial/example_step_wise.md)<br>+ [调用智能体框架中的 ReAct 工作流](/tutorial/example_react.md)<br>+ [例子:训练一个网络搜索智能体](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) |
+| *全生命周期的数据流水线* | + [Rollout 任务混合与选取](/tutorial/develop_selector.md)<br>+ [在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [论文](https://arxiv.org/pdf/2510.26374))<br>+ [研究项目:learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [论文](https://arxiv.org/pdf/2510.25441))<br>+ [经验回放机制](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](/tutorial/example_data_functionalities.md) |
+| *强化学习算法开发* | + [使用 Trinity-RFT 进行 RL 算法开发](/tutorial/example_mix_algo.md) (📝 [论文](https://arxiv.org/pdf/2508.11408))<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [论文](https://arxiv.org/abs/2509.24203))<br>+ 不可验证的领域: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) |
+| *深入认识 Trinity-RFT* | + [完整配置指南](/tutorial/trinity_configs.md)<br>+ [用于快速验证和实验的 Benchmark 工具](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](/tutorial/synchronizer.md) |
+
+
+
+## 🌟 核心特性
 * **灵活的 RFT 模式:**
   - 支持同步/异步、on-policy/off-policy 以及在线/离线强化学习
@@ -25,14 +45,14 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF
 * **Agentic RL 支持:**
   - 支持拼接式多轮和通用多轮交互
-  - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用
+  - 能够直接训练使用 [AgentScope](https://github.com/agentscope-ai/agentscope) 等智能体框架开发的 Agent 应用

-* **全流程的数据流水线:**
+* **全生命周期的数据流水线:**
   - 支持 rollout 任务和经验数据的流水线处理
   - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)
-  - 原生支持多任务联合训练
+  - 原生支持多任务联合训练与课程学习

@@ -44,20 +64,6 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF

-

-## 🔨 教程与指南

-

-

-| Category | Tutorial / Guideline |

-| --- |---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| 运行各种 RFT 模式 | + [快速开始:在 GSM8k 上运行 GRPO](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [全异步 RFT](/tutorial/example_async_mode.md)<br>+ [通过 DPO 或 SFT 进行离线学习](/tutorial/example_dpo.md) |
-| 多轮智能体场景 | + [拼接多轮任务](/tutorial/example_multi_turn.md)<br>+ [通用多轮任务](/tutorial/example_step_wise.md)<br>+ [调用智能体框架中的 ReAct 工作流](/tutorial/example_react.md) |
-| 数据流水线进阶能力 | + [Rollout 任务混合与选取](/tutorial/develop_selector.md)<br>+ [在线任务选择](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) ([论文](https://arxiv.org/pdf/2510.26374))<br>+ [经验回放](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](/tutorial/example_data_functionalities.md) |
-| RL 算法开发/研究 | + [使用 Trinity-RFT 进行 RL 算法开发](/tutorial/example_mix_algo.md) ([论文](https://arxiv.org/pdf/2508.11408))<br>+ 不可验证的领域:[RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([论文](https://arxiv.org/abs/2509.24203)) |
-| 深入认识 Trinity-RFT | + [完整配置指南](/tutorial/trinity_configs.md)<br>+ [用于快速验证和实验的 Benchmark 工具](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](/tutorial/synchronizer.md) |
-
-
-
 ## 致谢
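The updated "What is Trinity-RFT?" sections describe three decoupled components: an Explorer that generates experience through agent-environment interaction, a Trainer that updates weights by minimizing a loss on that data, and a Buffer that carries data between them. The following is a minimal, hypothetical Python toy, not Trinity-RFT's API: `Experience`, `Buffer`, `Explorer`, `Trainer`, `run` and `sync_interval` are illustrative names, and the scalar "weight" and reward rule are placeholders for a real model and environment. It shows one consequence of the decoupling: if the explorer only picks up new weights every `sync_interval` trainer steps, the samples it produces lag the trainer by up to `sync_interval - 1` versions, which is the difference between on-policy and off-policy operation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Experience:
    task_id: int
    reward: float
    policy_version: int  # version of the weights that produced this sample


class Buffer:
    """Hypothetical FIFO queue between the explorer (producer) and trainer (consumer)."""

    def __init__(self) -> None:
        self._queue = deque()

    def write(self, experiences: list) -> None:
        self._queue.extend(experiences)

    def read(self, batch_size: int) -> list:
        n = min(batch_size, len(self._queue))
        return [self._queue.popleft() for _ in range(n)]


class Trainer:
    """Toy trainer: a scalar 'weight' plus a version counter bumped on every update."""

    def __init__(self, lr: float = 0.25) -> None:
        self.weight, self.version, self.lr = 0.0, 0, lr

    def train_step(self, batch: list) -> int:
        """Apply one toy update to a non-empty batch; return the batch's max staleness."""
        staleness = max(self.version - e.policy_version for e in batch)
        loss = sum(1.0 - e.reward for e in batch) / len(batch)  # toy loss: 1 - reward
        self.weight += self.lr * loss  # toy update: a larger weight yields higher toy reward later
        self.version += 1
        return staleness


class Explorer:
    """Toy explorer: generates experiences with whatever weights it last synced."""

    def __init__(self, buffer: Buffer) -> None:
        self.buffer, self.weight, self.version = buffer, 0.0, 0

    def sync_from(self, trainer: Trainer) -> None:
        self.weight, self.version = trainer.weight, trainer.version

    def explore(self, task_ids: list) -> None:
        # Stand-in for agent-environment interaction: reward grows with the weight.
        reward = min(1.0, self.weight)
        self.buffer.write([Experience(t, reward, self.version) for t in task_ids])


def run(steps: int, sync_interval: int, tasks_per_step: int = 4) -> list:
    """Alternate explore/train steps, syncing weights every `sync_interval` steps."""
    buffer = Buffer()
    explorer, trainer = Explorer(buffer), Trainer()
    staleness_log = []
    for step in range(steps):
        if step % sync_interval == 0:
            explorer.sync_from(trainer)  # hand the trainer's latest weights to the explorer
        start = step * tasks_per_step
        explorer.explore(list(range(start, start + tasks_per_step)))
        staleness_log.append(trainer.train_step(buffer.read(tasks_per_step)))
    return staleness_log


print(run(steps=6, sync_interval=1))  # [0, 0, 0, 0, 0, 0]
print(run(steps=6, sync_interval=3))  # [0, 1, 2, 0, 1, 2]
```

With `sync_interval=1` every batch comes from the trainer's current weights (staleness 0); larger intervals accept staler samples in exchange for fewer weight transfers. How Trinity-RFT itself schedules synchronization is covered by the synchronizer tutorial linked in the tables.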