+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)

+ [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)

+ [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html)

+ [RFT without local GPU (Tinker Backend)](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_tinker_backend.html) | -| *Multi-step agentic RL* | + [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)

+ [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)

+ [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html)

+ [Example: train a web-search agent](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) | -| *Full-lifecycle data pipelines* | + [Rollout task mixing and selection](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)

+ [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [paper](https://arxiv.org/pdf/2510.26374))

+ [Research project: learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [paper](https://arxiv.org/pdf/2510.25441))

+ [Experience replay with prioritization](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)

+ [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) | -| *Algorithm development* | + [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) (📝 [paper](https://arxiv.org/pdf/2508.11408))

+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [paper](https://arxiv.org/abs/2509.24203))

+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) | -| *Benchmarks* | + [Benchmark toolkit (quick verification & experimentation)](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)

+ [Guru-Math benchmark & comparison with veRL](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/guru_math.md)

+ [FrozenLake benchmark & comparison with rLLM](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/frozenlake.md)

+ [Alfworld benchmark & comparison with rLLM](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/alfworld.md) | -| *Going deeper into Trinity-RFT* | + [Full configurations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)

+ [GPU resource and training configuration guide](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_gpu_configs.html)

+ [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html)

+ [How to align configuration with veRL](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/align_with_verl.html) | - +| Category | Tutorial / Guideline | +|-----------------------------------|------------------------------------------------------------------------------------------------------------------| +| *Run diverse RFT modes* | • [Quick start: GRPO on GSM8k](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)

• [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)

• [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)

• [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html)

• [RFT without local GPU (Tinker Backend)](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_tinker_backend.html) | +| *Multi-step agentic RL* | • [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)

• [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)

• [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html)

• [Example: train a web-search agent](https://github.com/modelscope/Trinity-RFT/tree/main/examples/agentscope_websearch) | +| *Full-lifecycle data pipelines* | • [Rollout task mixing and selection](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)

• [Online task curriculum](https://github.com/modelscope/Trinity-RFT/tree/main/examples/bots) (📝 [paper](https://arxiv.org/pdf/2510.26374))

• [Research project: learn-to-ask](https://github.com/modelscope/Trinity-RFT/tree/main/examples/learn_to_ask) (📝 [paper](https://arxiv.org/pdf/2510.25441))

• [Experience replay with prioritization](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)

• [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) | +| *Algorithm development* | • [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) (📝 [paper](https://arxiv.org/pdf/2508.11408))

• [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) (📝 [paper](https://arxiv.org/abs/2509.24203))

• Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward) | +| *Benchmarks* | • [Benchmark toolkit (quick verification & experimentation)](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)

• [Guru-Math benchmark & comparison with veRL](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/guru_math.md)

• [FrozenLake benchmark & comparison with rLLM](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/frozenlake.md)

• [Alfworld benchmark & comparison with rLLM](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/reports/alfworld.md) | +| *Going deeper into Trinity-RFT* | • [Full configurations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)

• [GPU resource and training configuration guide](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_gpu_configs.html)

• [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html)

• [How to align configuration with veRL](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/align_with_verl.html) | > [!NOTE] > For more tutorials, please refer to the [Trinity-RFT documentation](https://modelscope.github.io/Trinity-RFT/). - - ## 🌟 Key Features * **Flexible RFT Modes:** - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline RL. - Rollout and training can run separately and scale independently across devices. - Boost sample and time efficiency by experience replay. -

* **Agentic RL Support:**

- Supports both concatenated and general multi-step agentic workflows.

- Able to directly train agent applications developed using agent frameworks like [AgentScope](https://github.com/agentscope-ai/agentscope).

-

* **Agentic RL Support:**

- Supports both concatenated and general multi-step agentic workflows.

- Able to directly train agent applications developed using agent frameworks like [AgentScope](https://github.com/agentscope-ai/agentscope).

-

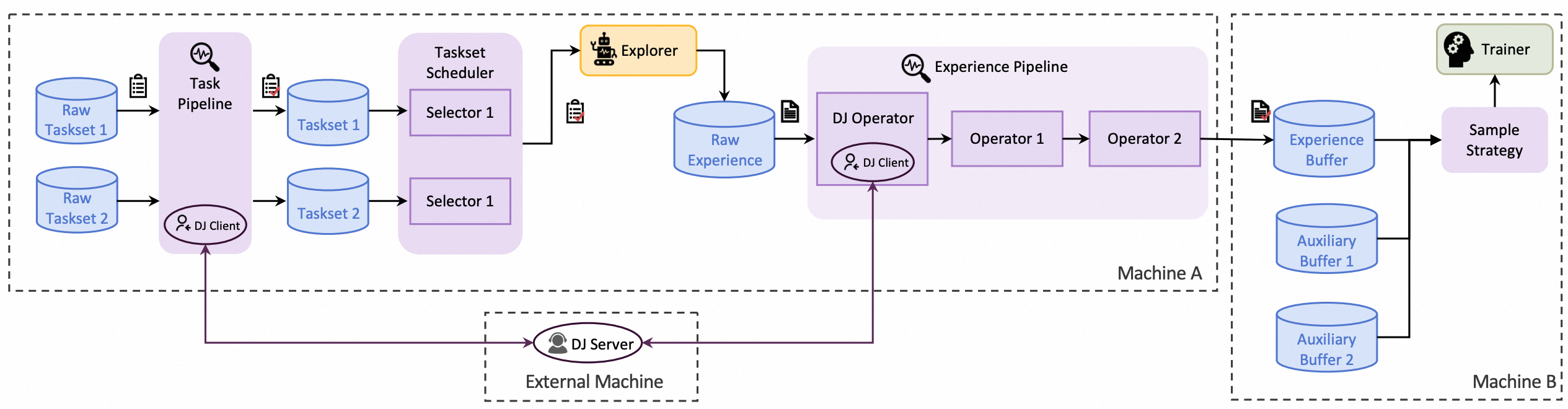

* **Full-Lifecycle Data Pipelines:**

- Enables pipeline processing of rollout tasks and experience samples.

- Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.

- Native support for multi-task joint learning and online task curriculum construction.

-

* **Full-Lifecycle Data Pipelines:**

- Enables pipeline processing of rollout tasks and experience samples.

- Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.

- Native support for multi-task joint learning and online task curriculum construction.

-

* **User-Friendly Design:**

- Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.

- Rich graphical user interfaces enable low-code usage.

-

* **User-Friendly Design:**

- Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.

- Rich graphical user interfaces enable low-code usage.

-

-

-

## 🔧 Supported Algorithms

-We list some algorithms supported by Trinity-RFT in the following table. For more details, the concrete configurations are shown in the [Algorithm module](https://github.com/modelscope/Trinity-RFT/blob/main/trinity/algorithm/algorithm.py). You can also set up new algorithms by customizing different components, see [tutorial](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_algorithm.html).

-

-| Algorithm | Doc / Example | Source Code | Key Configurations |

-|:-----------|:-----------|:---------------|:-----------|

-| PPO [[Paper](https://arxiv.org/pdf/1707.06347)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[Countdown Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/ppo_policy_loss.py)] | `algorithm_type: ppo` |

-| GRPO [[Paper](https://arxiv.org/pdf/2402.03300)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k)]| [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/grpo_advantage.py)] | `algorithm_type: grpo` |

-| CHORD 💡 [[Paper](https://arxiv.org/pdf/2508.11408)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)] [[ToolACE Example](https://github.com/modelscope/Trinity-RFT/blob/main/examples/mix_chord/mix_chord_toolace.yaml)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/chord_policy_loss.py)] | `algorithm_type: mix_chord` |

-| REC Series 💡 [[Paper](https://arxiv.org/pdf/2509.24203)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/rec_policy_loss.py)] | `algorithm_type: rec` |

-| RLOO [[Paper](https://arxiv.org/pdf/2402.14740)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/rloo_advantage.py)] | `algorithm_type: rloo` |

-| REINFORCE++ [[Paper](https://arxiv.org/pdf/2501.03262)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/reinforce_advantage.py)] | `algorithm_type: reinforceplusplus` |

-| GSPO [[Paper](https://arxiv.org/pdf/2507.18071)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/gspo_policy_loss.py)] | `algorithm_type: gspo` |

-| TOPR [[Paper](https://arxiv.org/pdf/2503.14286)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/topr_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/topr_policy_loss.py)] | `algorithm_type: topr` |

-| sPPO [[Paper](https://arxiv.org/pdf/2108.05828)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/sppo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sppo_loss_fn.py)] | `algorithm_type: sppo` |

-| AsymRE [[Paper](https://arxiv.org/pdf/2506.20520)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/asymre_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/asymre_advantage.py)] | `algorithm_type: asymre` |

-| CISPO [[Paper](https://arxiv.org/pdf/2506.13585)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/cispo_policy_loss.py)] | `algorithm_type: cispo` |

-| SAPO [[Paper](https://arxiv.org/pdf/2511.20347)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sapo_policy_loss.py)] | `algorithm_type: sapo` |

+| Algorithm | Doc / Example | Source Code | Key Configurations |

+|------------------------|-------------------------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------------|--------------------------------|

+| PPO [[Paper](https://arxiv.org/pdf/1707.06347)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[Countdown Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/ppo_policy_loss.py)] | `algorithm_type: ppo` |

+| GRPO [[Paper](https://arxiv.org/pdf/2402.03300)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/grpo_advantage.py)] | `algorithm_type: grpo` |

+| CHORD 💡 [[Paper](https://arxiv.org/pdf/2508.11408)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)] [[ToolACE Example](https://github.com/modelscope/Trinity-RFT/blob/main/examples/mix_chord/mix_chord_toolace.yaml)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/chord_policy_loss.py)] | `algorithm_type: mix_chord` |

+| REC Series 💡 [[Paper](https://arxiv.org/pdf/2509.24203)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/rec_policy_loss.py)] | `algorithm_type: rec` |

+| RLOO [[Paper](https://arxiv.org/pdf/2402.14740)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/rloo_advantage.py)] | `algorithm_type: rloo` |

+| REINFORCE++ [[Paper](https://arxiv.org/pdf/2501.03262)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/reinforce_advantage.py)] | `algorithm_type: reinforceplusplus` |

+| GSPO [[Paper](https://arxiv.org/pdf/2507.18071)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/gspo_policy_loss.py)] | `algorithm_type: gspo` |

+| TOPR [[Paper](https://arxiv.org/pdf/2503.14286)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/topr_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/topr_policy_loss.py)] | `algorithm_type: topr` |

+| sPPO [[Paper](https://arxiv.org/pdf/2108.05828)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/sppo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sppo_loss_fn.py)] | `algorithm_type: sppo` |

+| AsymRE [[Paper](https://arxiv.org/pdf/2506.20520)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/asymre_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/asymre_advantage.py)] | `algorithm_type: asymre` |

+| CISPO [[Paper](https://arxiv.org/pdf/2506.13585)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/cispo_policy_loss.py)] | `algorithm_type: cispo` |

+| SAPO [[Paper](https://arxiv.org/pdf/2511.20347)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sapo_policy_loss.py)] | `algorithm_type: sapo` |

| On-Policy Distillation [[Blog](https://thinkingmachines.ai/blog/on-policy-distillation/)] [[Paper](https://arxiv.org/pdf/2306.13649)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/on_policy_distill)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/common/workflows/on_policy_distill_workflow.py)] | `algorithm_type: on_policy_distill` |

-

-

---

## Table of Contents

- [Quick Start](#quick-start)

+ - [Minimal CPU-Only Quick Start](#minimal-cpu-only-quick-start)

- [Step 1: installation](#step-1-installation)

- [Step 2: prepare dataset and model](#step-2-prepare-dataset-and-model)

- [Step 3: configurations](#step-3-configurations)

@@ -148,46 +124,66 @@ We list some algorithms supported by Trinity-RFT in the following table. For mor

- [Acknowledgements](#acknowledgements)

- [Citation](#citation)

-

+---

## Quick Start

-

> [!NOTE]

> This project is currently under active development. Comments and suggestions are welcome!

->

-> **No GPU? No problem!** You can still try it out:

-> 1. Follow the installation steps (feel free to skip GPU-specific packages like `flash-attn`)

-> 2. Run the **[Tinker training example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/tinker)**, which is specifically designed to work on CPU-only systems.

+### Minimal CPU-Only Quick Start

+

+If you do not have access to a GPU, you can still try Trinity-RFT using the Tinker backend.

+

+```bash

+# Create and activate environment

+python3.10 -m venv .venv

+source .venv/bin/activate

+

+# Install Trinity-RFT with CPU-only backend

+pip install -e ".[tinker]"

+```

+

+Run a simple example:

+

+```bash

+trinity run --config examples/tinker/tinker.yaml

+```

+

+This example is designed to run on CPU-only machines. See the complete [Tinker training example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/tinker) for more details.

+

+To run Trinity-RFT on GPU machines instead, please follow the steps below.

-### Step 1: installation

+### Step 1: Installation

Before installing, make sure your system meets the following requirements:

-- **Python**: version 3.10 to 3.12 (inclusive)

-- **CUDA**: version >= 12.8

-- **GPUs**: at least 2 GPUs

+#### GPU Requirements

+- Python: version 3.10 to 3.12 (inclusive)

+- CUDA: version >= 12.8

+- GPUs: at least 2 GPUs

+

+**Recommended for first-time users:**

+

+* If you have no GPU → Use Tinker backend

+* If you want simple setup → Use Docker

+* If you want development & contribution → Use Conda / venv

#### From Source (Recommended)

If you plan to customize or contribute to Trinity-RFT, this is the best option.

-##### 1. Clone the Repository

+First, clone the repository:

```bash

git clone https://github.com/modelscope/Trinity-RFT

cd Trinity-RFT

```

-##### 2. Set Up Environment

-

-Choose one of the following options:

-

-##### Using Pre-built Docker Image (Recommended for Beginners)

+Then, set up environment via one of the following options:

-We provide a pre-built Docker image with GPU-related dependencies installed.

+**Using Pre-built Docker Image (Recommended for Beginners)**

```bash

docker pull ghcr.io/modelscope/trinity-rft:latest

@@ -201,10 +197,9 @@ docker run -it \

-v

-

-

## 🔧 Supported Algorithms

-We list some algorithms supported by Trinity-RFT in the following table. For more details, the concrete configurations are shown in the [Algorithm module](https://github.com/modelscope/Trinity-RFT/blob/main/trinity/algorithm/algorithm.py). You can also set up new algorithms by customizing different components, see [tutorial](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_algorithm.html).

-

-| Algorithm | Doc / Example | Source Code | Key Configurations |

-|:-----------|:-----------|:---------------|:-----------|

-| PPO [[Paper](https://arxiv.org/pdf/1707.06347)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[Countdown Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/ppo_policy_loss.py)] | `algorithm_type: ppo` |

-| GRPO [[Paper](https://arxiv.org/pdf/2402.03300)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k)]| [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/grpo_advantage.py)] | `algorithm_type: grpo` |

-| CHORD 💡 [[Paper](https://arxiv.org/pdf/2508.11408)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)] [[ToolACE Example](https://github.com/modelscope/Trinity-RFT/blob/main/examples/mix_chord/mix_chord_toolace.yaml)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/chord_policy_loss.py)] | `algorithm_type: mix_chord` |

-| REC Series 💡 [[Paper](https://arxiv.org/pdf/2509.24203)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/rec_policy_loss.py)] | `algorithm_type: rec` |

-| RLOO [[Paper](https://arxiv.org/pdf/2402.14740)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/rloo_advantage.py)] | `algorithm_type: rloo` |

-| REINFORCE++ [[Paper](https://arxiv.org/pdf/2501.03262)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/reinforce_advantage.py)] | `algorithm_type: reinforceplusplus` |

-| GSPO [[Paper](https://arxiv.org/pdf/2507.18071)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/gspo_policy_loss.py)] | `algorithm_type: gspo` |

-| TOPR [[Paper](https://arxiv.org/pdf/2503.14286)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/topr_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/topr_policy_loss.py)] | `algorithm_type: topr` |

-| sPPO [[Paper](https://arxiv.org/pdf/2108.05828)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/sppo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sppo_loss_fn.py)] | `algorithm_type: sppo` |

-| AsymRE [[Paper](https://arxiv.org/pdf/2506.20520)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/asymre_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/asymre_advantage.py)] | `algorithm_type: asymre` |

-| CISPO [[Paper](https://arxiv.org/pdf/2506.13585)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/cispo_policy_loss.py)] | `algorithm_type: cispo` |

-| SAPO [[Paper](https://arxiv.org/pdf/2511.20347)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sapo_policy_loss.py)] | `algorithm_type: sapo` |

+| Algorithm | Doc / Example | Source Code | Key Configurations |

+|------------------------|-------------------------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------------|--------------------------------|

+| PPO [[Paper](https://arxiv.org/pdf/1707.06347)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[Countdown Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/ppo_policy_loss.py)] | `algorithm_type: ppo` |

+| GRPO [[Paper](https://arxiv.org/pdf/2402.03300)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)] [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/grpo_advantage.py)] | `algorithm_type: grpo` |

+| CHORD 💡 [[Paper](https://arxiv.org/pdf/2508.11408)] | [[Doc](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)] [[ToolACE Example](https://github.com/modelscope/Trinity-RFT/blob/main/examples/mix_chord/mix_chord_toolace.yaml)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/chord_policy_loss.py)] | `algorithm_type: mix_chord` |

+| REC Series 💡 [[Paper](https://arxiv.org/pdf/2509.24203)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/rec_policy_loss.py)] | `algorithm_type: rec` |

+| RLOO [[Paper](https://arxiv.org/pdf/2402.14740)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/rloo_advantage.py)] | `algorithm_type: rloo` |

+| REINFORCE++ [[Paper](https://arxiv.org/pdf/2501.03262)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/reinforce_advantage.py)] | `algorithm_type: reinforceplusplus` |

+| GSPO [[Paper](https://arxiv.org/pdf/2507.18071)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/gspo_policy_loss.py)] | `algorithm_type: gspo` |

+| TOPR [[Paper](https://arxiv.org/pdf/2503.14286)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/topr_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/topr_policy_loss.py)] | `algorithm_type: topr` |

+| sPPO [[Paper](https://arxiv.org/pdf/2108.05828)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/sppo_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sppo_loss_fn.py)] | `algorithm_type: sppo` |

+| AsymRE [[Paper](https://arxiv.org/pdf/2506.20520)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/asymre_gsm8k)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/advantage_fn/asymre_advantage.py)] | `algorithm_type: asymre` |

+| CISPO [[Paper](https://arxiv.org/pdf/2506.13585)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/cispo_policy_loss.py)] | `algorithm_type: cispo` |

+| SAPO [[Paper](https://arxiv.org/pdf/2511.20347)] | - | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/algorithm/policy_loss_fn/sapo_policy_loss.py)] | `algorithm_type: sapo` |

| On-Policy Distillation [[Blog](https://thinkingmachines.ai/blog/on-policy-distillation/)] [[Paper](https://arxiv.org/pdf/2306.13649)] | [[GSM8K Example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/on_policy_distill)] | [[Code](https://github.com/modelscope/Trinity-RFT/tree/main/trinity/common/workflows/on_policy_distill_workflow.py)] | `algorithm_type: on_policy_distill` |

-

-

---

## Table of Contents

- [Quick Start](#quick-start)

+ - [Minimal CPU-Only Quick Start](#minimal-cpu-only-quick-start)

- [Step 1: installation](#step-1-installation)

- [Step 2: prepare dataset and model](#step-2-prepare-dataset-and-model)

- [Step 3: configurations](#step-3-configurations)

@@ -148,46 +124,66 @@ We list some algorithms supported by Trinity-RFT in the following table. For mor

- [Acknowledgements](#acknowledgements)

- [Citation](#citation)

-

+---

## Quick Start

-

> [!NOTE]

> This project is currently under active development. Comments and suggestions are welcome!

->

-> **No GPU? No problem!** You can still try it out:

-> 1. Follow the installation steps (feel free to skip GPU-specific packages like `flash-attn`)

-> 2. Run the **[Tinker training example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/tinker)**, which is specifically designed to work on CPU-only systems.

+### Minimal CPU-Only Quick Start

+

+If you do not have access to a GPU, you can still try Trinity-RFT using the Tinker backend.

+

+```bash

+# Create and activate environment

+python3.10 -m venv .venv

+source .venv/bin/activate

+

+# Install Trinity-RFT with CPU-only backend

+pip install -e ".[tinker]"

+```

+

+Run a simple example:

+

+```bash

+trinity run --config examples/tinker/tinker.yaml

+```

+

+This example is designed to run on CPU-only machines. See the complete [Tinker training example](https://github.com/modelscope/Trinity-RFT/tree/main/examples/tinker) for more details.

+

+To run Trinity-RFT on GPU machines instead, please follow the steps below.

-### Step 1: installation

+### Step 1: Installation

Before installing, make sure your system meets the following requirements:

-- **Python**: version 3.10 to 3.12 (inclusive)

-- **CUDA**: version >= 12.8

-- **GPUs**: at least 2 GPUs

+#### GPU Requirements

+- Python: version 3.10 to 3.12 (inclusive)

+- CUDA: version >= 12.8

+- GPUs: at least 2 GPUs

+

+**Recommended for first-time users:**

+

+* If you have no GPU → Use Tinker backend

+* If you want simple setup → Use Docker

+* If you want development & contribution → Use Conda / venv

#### From Source (Recommended)

If you plan to customize or contribute to Trinity-RFT, this is the best option.

-##### 1. Clone the Repository

+First, clone the repository:

```bash

git clone https://github.com/modelscope/Trinity-RFT

cd Trinity-RFT

```

-##### 2. Set Up Environment

-

-Choose one of the following options:

-

-##### Using Pre-built Docker Image (Recommended for Beginners)

+Then, set up environment via one of the following options:

-We provide a pre-built Docker image with GPU-related dependencies installed.

+**Using Pre-built Docker Image (Recommended for Beginners)**

```bash

docker pull ghcr.io/modelscope/trinity-rft:latest

@@ -201,10 +197,9 @@ docker run -it \

-v  -

-