Easier way to automatically figure out the input shape after the nn.Flatten() layer in a CNN?

#313


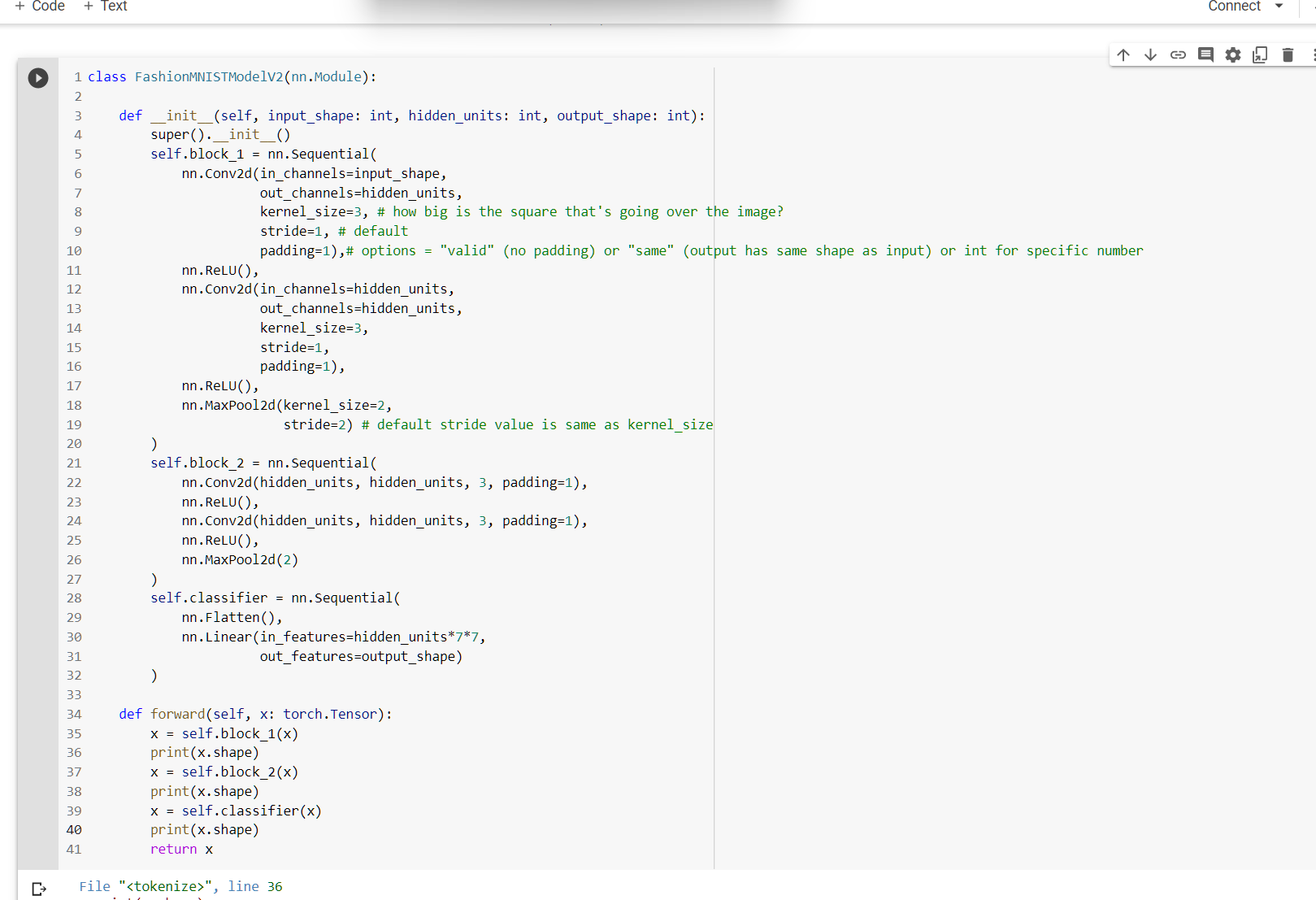

In section 2, the video "Model 2: Using a Trick to Find the Input and Output Shapes of Each of Our Layers" goes over a method of printing the shape after each convolutional layer block. The video uses this to figure out the shape to enter into the linear layer after the flatten layer. We are then walked through multiplying the hidden units by 7 twice. Is there a way around this? Or do we need to figure out the shape this way every time we build a CNN?
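To see where "hidden units × 7 × 7" comes from, here is a minimal sketch of the shape-printing trick. It assumes 1-channel 28x28 images (e.g. FashionMNIST), `hidden_units=10`, and two blocks of `Conv2d(kernel_size=3, padding=1)` + `MaxPool2d(2)`. These are assumptions for illustration, not necessarily the exact model from the video. Each `MaxPool2d(2)` halves height and width (28 → 14 → 7), so flattening gives `hidden_units * 7 * 7` features.

```python
import torch
from torch import nn

# Assumed setup (for illustration only): 1-channel 28x28 images,
# two conv blocks of Conv2d(kernel_size=3, padding=1) + MaxPool2d(2).
hidden_units = 10

conv_block_1 = nn.Sequential(
    nn.Conv2d(1, hidden_units, kernel_size=3, padding=1),  # padding=1 keeps 28x28
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),  # halves height and width: 28 -> 14
)
conv_block_2 = nn.Sequential(
    nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1),  # keeps 14x14
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),  # 14 -> 7
)

dummy = torch.randn(1, 1, 28, 28)  # [batch, channels, height, width]
x = conv_block_1(dummy)
print(f"after block 1: {x.shape}")  # torch.Size([1, 10, 14, 14])
x = conv_block_2(x)
print(f"after block 2: {x.shape}")  # torch.Size([1, 10, 7, 7])
x = nn.Flatten()(x)
print(f"after flatten: {x.shape}")  # torch.Size([1, 490]) -> 10 * 7 * 7
```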


Hey @ZachCalkins,

Good question!

And yes, there is: you can use `torch.nn.LazyLinear()`. Check out the documentation for an example.

But in essence, "Lazy" means the layer figures out the `in_features` parameter automatically (from the shape of the first input passed through it).

For example:

```python
# Original
self.classifier = nn.Sequential(
    nn.Flatten(),
    nn.Linear(in_features=hidden_units*7*7,
              out_features=output_shape)
)
```

Becomes:

```python
# New with LazyLinear
self.classifier = nn.Sequential(
    nn.Flatten(),
    nn.LazyLinear(out_features=output_shape)  # notice there's no "in_features" (this is inferred by the layer)
)
```

Try it out and see how you go!

In fact, there are many "Lazy" layers (these are quite new in PyTorch). Try searching the documentation for "lazy layers": https://pytorch.org/docs/stable/search.html?q=lazy&check_keywords=yes&area=default
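Here is a minimal, self-contained sketch of the `nn.LazyLinear` approach inside a full model. It assumes a hypothetical `TinyCNN` class with two conv blocks and 1x28x28 inputs; the class name and layer sizes are illustrative, not taken from the course. A single forward pass with a dummy batch is what sets `in_features`.

```python
import torch
from torch import nn


class TinyCNN(nn.Module):
    """Hypothetical small CNN for illustration; layer sizes are assumptions."""

    def __init__(self, input_channels: int, hidden_units: int, output_shape: int):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(input_channels, hidden_units, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            # No in_features: LazyLinear infers it from the first input it receives
            nn.LazyLinear(out_features=output_shape),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = TinyCNN(input_channels=1, hidden_units=10, output_shape=10)

# Until the first forward pass, the lazy layer's weight is uninitialized.
# A dummy batch with the same image size as the real data initializes it.
dummy = torch.randn(1, 1, 28, 28)
logits = model(dummy)

lazy_layer = model.classifier[1]
print(lazy_layer.in_features)  # 490, i.e. 10 * 7 * 7, inferred automatically
print(logits.shape)            # torch.Size([1, 10])
```

The dummy batch needs the same height and width as your real images, because the inferred `in_features` depends on the spatial size that reaches `nn.Flatten()`.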
