Replies: 11 comments 3 replies

-

Look @jordeu, a new challenge for Easter time! 😆 @wikiselev should

-

|

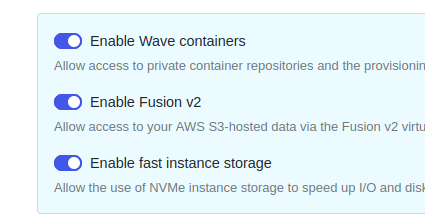

How do you setup the Compute Environment? Are you using Tower? With "fast instance storage" enabled? |

Beta Was this translation helpful? Give feedback.

-

Yes, that was my original code, but then I found some discussions about too many arguments for

-

Yes, this is exactly the setup that I call

-

Are these 20,000 files generated by 20,000 different processes and then declared as input to the "concatenate" process?

-

They are generated by 20,000 processes. In the workflow I pass

-

Actually, after answering @pditommaso I realised that the long failed run was using just

-

I'll test this use case. It's a difficult one and I don't expect to get better results, but it should be possible to at least get similar results. Fusion's performance improvements come from the fact that it can download and upload files in the background while the process is running. It is also designed to improve performance when dealing with big files. This use case is rather the opposite: a pure download and upload of small files, with nearly nothing done by the process itself. The best solution that you can build now (with or without Fusion) is to add an intermediate step that concatenates batches of 1000 files in parallel, followed by a final process that collects all of the batch outputs into a single file.
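
To make the batching idea concrete, here is a minimal Python sketch of the two-stage concatenation. It only illustrates the logic and is not the pipeline's actual code: in Nextflow the two stages would be separate processes. The function names `concat_files` and `batched_concat`, the `workers` parameter, and the assumption that every input file ends with a newline are all hypothetical.

```python
import shutil
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def concat_files(sources, dest):
    """Append the raw bytes of each source file to dest, in order (like `cat`)."""
    with open(dest, "wb") as out:
        for src in sources:
            with open(src, "rb") as handle:
                shutil.copyfileobj(handle, out)
    return dest


def batched_concat(files, output, batch_size=1000, workers=4):
    """Concatenate files in two stages: parallel batches, then one final merge.

    Assumes each input file already ends with a newline (hypothetical helper).
    """
    files = list(files)
    batches = [files[i:i + batch_size] for i in range(0, len(files), batch_size)]
    with tempfile.TemporaryDirectory() as tmp:
        partials = [Path(tmp) / f"batch_{n:05d}.csv" for n in range(len(batches))]
        # Stage 1: batches are independent of each other, so they can be merged in parallel.
        with ThreadPoolExecutor(max_workers=workers) as pool:
            list(pool.map(concat_files, batches, partials))
        # Stage 2: one merge over the much smaller set of intermediate files.
        concat_files(partials, output)
    return output
```

With 20,000 inputs and a batch size of 1000, the final step only has to read 20 intermediate files instead of 20,000 tiny ones, and the order of the rows is preserved because batches are merged in their original order.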


-

I wonder if this could just be handled via Nextflow's `collectFile`?

-

Moving this to the discussions, because it's not a Nextflow issue.

-

We found a bug that was making this use case highly inefficient. We've fixed it in the latest Fusion version, which is automatically used when running Nextflow 23.04. With the new version, when we run this pipeline with an AWS Batch + Fusion v2 + fast storage Tower compute environment, the collector process that concatenates 20k files takes 37 minutes.

-

Bug report

AWS Fusion integration is less efficient than NO Fusion when concatenating a large number of files (~20,000).

Expected behavior and actual behavior

Using Fusion is supposed to make file operations faster, but I observe the opposite.

Steps to reproduce the problem

My pipeline runs a large number of instances of the same process, which generate a large number of `csv` files. These files contain only a single row with 10 columns each (200-300 bytes in size). The files are then concatenated together using a single process with the following script:

Program output

I've tested the pipeline for different numbers of `csv` files, with and without Fusion, and got the following results:

maxRetries = 5), cancelled after waiting too long with 36GB of RAM

Environment
