@@ -278,11 +278,11 @@ Use the *Step‑by‑step* instructions in our deployment guide, [Setting Up an

Repeat the instructions on all four web servers:

- Running App 1:

- - ngx-oss-app1-1

- - ngx-oss-app1-2

+ - **ngx-oss-app1-1**

+ - **ngx-oss-app1-2**

- Running App 2:

- - ngx-oss-app2-1

- - ngx-oss-app2-2

+ - **ngx-oss-app2-1**

+ - **ngx-oss-app2-2**

#### Configure NGINX Plus on the load balancers

@@ -291,7 +291,7 @@ Configure NGINX Plus instances as load balancers. These distribute requests to

Use the *Step‑by‑step* instructions in our deployment guide, [Setting Up an NGINX Demo Environment]({{< ref "/nginx/deployment-guides/setting-up-nginx-demo-environment.md" >}}).

-Repeat the instructions on both ngx-plus-1 and ngx-plus-2.

+Repeat the instructions on both **ngx‑plus‑1** and **ngx‑plus‑2**.

### Automate instance setup with Packer and Terraform

@@ -317,7 +317,7 @@ To run the scripts, follow these instructions:

3. Set your AWS credentials in the Packer and Terraform scripts:

- - For Packer, set your credentials in the `variables` block in both packer/ngx-oss/packer.json and packer/ngx-plus/packer.json:

+ - For Packer, set your credentials in the `variables` block in both **packer/ngx‑oss/packer.json** and **packer/ngx‑plus/packer.json**:

```none

"variables": {

diff --git a/content/nginx/deployment-guides/amazon-web-services/ingress-controller-elastic-kubernetes-services.md b/content/nginx/deployment-guides/amazon-web-services/ingress-controller-elastic-kubernetes-services.md

index 3a66f745d..e1c9811b3 100644

--- a/content/nginx/deployment-guides/amazon-web-services/ingress-controller-elastic-kubernetes-services.md

+++ b/content/nginx/deployment-guides/amazon-web-services/ingress-controller-elastic-kubernetes-services.md

@@ -43,14 +43,14 @@ This guide covers the `eksctl` command as it is the simplest option.

1. Follow the instructions in the [eksctl.io documentation](https://eksctl.io/installation/) to install or update the `eksctl` command.

-2. Create an Amazon EKS cluster by following the instructions in the [AWS documentation](https://docs.aws.amazon.com/eks/latest/userguide/getting-started-eksctl.html). Select the Managed nodes – Linux option for each step. Note that the `eksctl create cluster` command in the first step can take ten minutes or more.

+2. Create an Amazon EKS cluster by following the instructions in the [AWS documentation](https://docs.aws.amazon.com/eks/latest/userguide/getting-started-eksctl.html). Select the **Managed nodes – Linux** option for each step. Note that the `eksctl create cluster` command in the first step can take ten minutes or more.

## Push the NGINX Plus Ingress Controller Image to AWS ECR

This step is only required if you do not plan to use the prebuilt NGINX Open Source image.

-1. Use the [AWS documentation](https://docs.aws.amazon.com/AmazonECR/latest/userguide/repository-create.html) to create a repository in the Amazon Elastic Container Registry (ECR). In Step 4 of the AWS instructions, name the repository nginx-plus-ic as that is what we use in this guide.

+1. Use the [AWS documentation](https://docs.aws.amazon.com/AmazonECR/latest/userguide/repository-create.html) to create a repository in the Amazon Elastic Container Registry (ECR). In Step 4 of the AWS instructions, name the repository **nginx‑plus‑ic** as that is what we use in this guide.

2. Run the following AWS CLI command. It generates an auth token for your AWS ECR registry, then pipes it into the `docker login` command. This lets AWS ECR authenticate and authorize the upcoming Docker requests. For details about the command, see the [AWS documentation](https://docs.aws.amazon.com/AmazonECR/latest/userguide/registry_auth.html).

diff --git a/content/nginx/deployment-guides/amazon-web-services/route-53-global-server-load-balancing.md b/content/nginx/deployment-guides/amazon-web-services/route-53-global-server-load-balancing.md

index 4a449de16..32038fedb 100644

--- a/content/nginx/deployment-guides/amazon-web-services/route-53-global-server-load-balancing.md

+++ b/content/nginx/deployment-guides/amazon-web-services/route-53-global-server-load-balancing.md

@@ -40,7 +40,7 @@ The setup for global server load balancing (GSLB) in this guide combines Amazon

-Route 53 is a Domain Name System (DNS) service that performs global server load balancing by routing each request to the AWS region closest to the requester's location. This guide uses two regions: US West (Oregon) and US East (N. Virginia).

+Route 53 is a Domain Name System (DNS) service that performs global server load balancing by routing each request to the AWS region closest to the requester's location. This guide uses two regions: **US West (Oregon)** and **US East (N. Virginia)**.

In each region, two or more NGINX Plus load balancers are deployed in a high‑availability (HA) configuration. In this guide, there are two NGINX Plus load balancer instances per region. You can also use NGINX Open Source for this purpose, but it lacks the [application health checks](https://docs.nginx.com/nginx/admin-guide/load-balancer/http-health-check/) that make for more precise error detection. For simplicity, we'll refer to NGINX Plus load balancers throughout this guide, noting when features specific to NGINX Plus are used.

@@ -79,7 +79,7 @@ Create a _hosted zone_, which basically involves designating a domain name to be

1. Log in to the [AWS Management Console](https://console.aws.amazon.com/) (**console.aws.amazon.com/**).

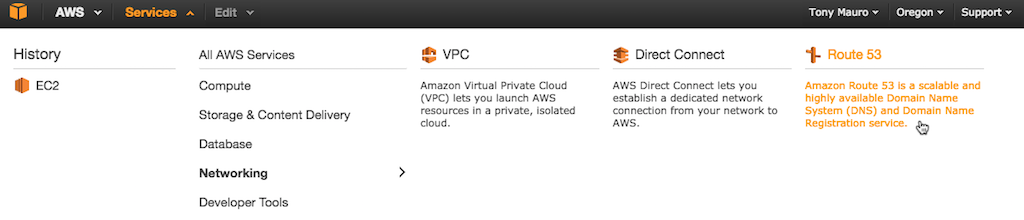

-2. Access the Route 53 dashboard page by clicking **Services** in the top AWS navigation bar, mousing over **Networking** in the All AWS Services column and then clicking **Route 53**.

+2. Access the Route 53 dashboard page by clicking **Services** in the top AWS navigation bar, mousing over **Networking** in the **All AWS Services** column and then clicking **Route 53**.

@@ -87,7 +87,7 @@ Create a _hosted zone_, which basically involves designating a domain name to be

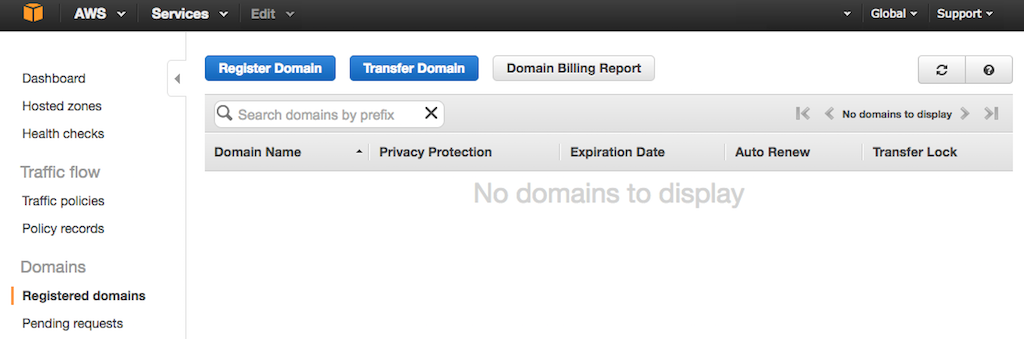

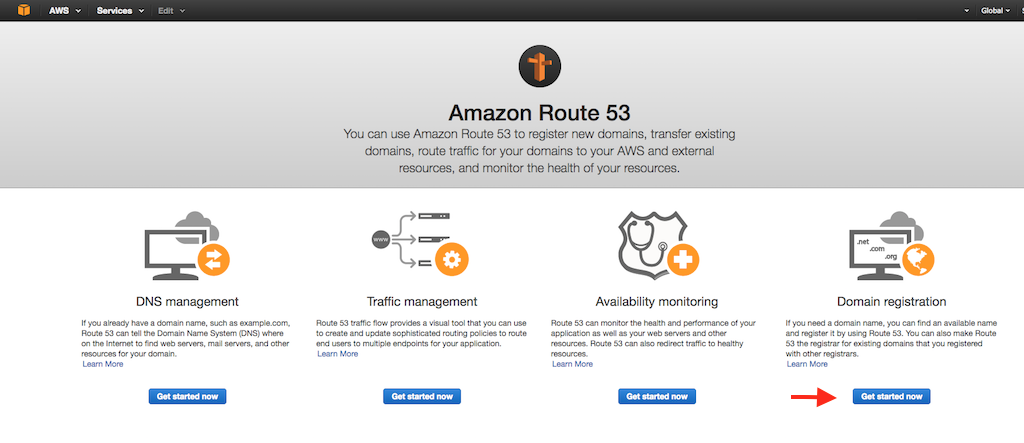

- If you see the Route 53 home page instead, access the **Registered domains** tab by clicking the Get started now button under Domain registration.

+ If you see the Route 53 home page instead, access the **Registered domains** tab by clicking the Get started now button under **Domain registration**.

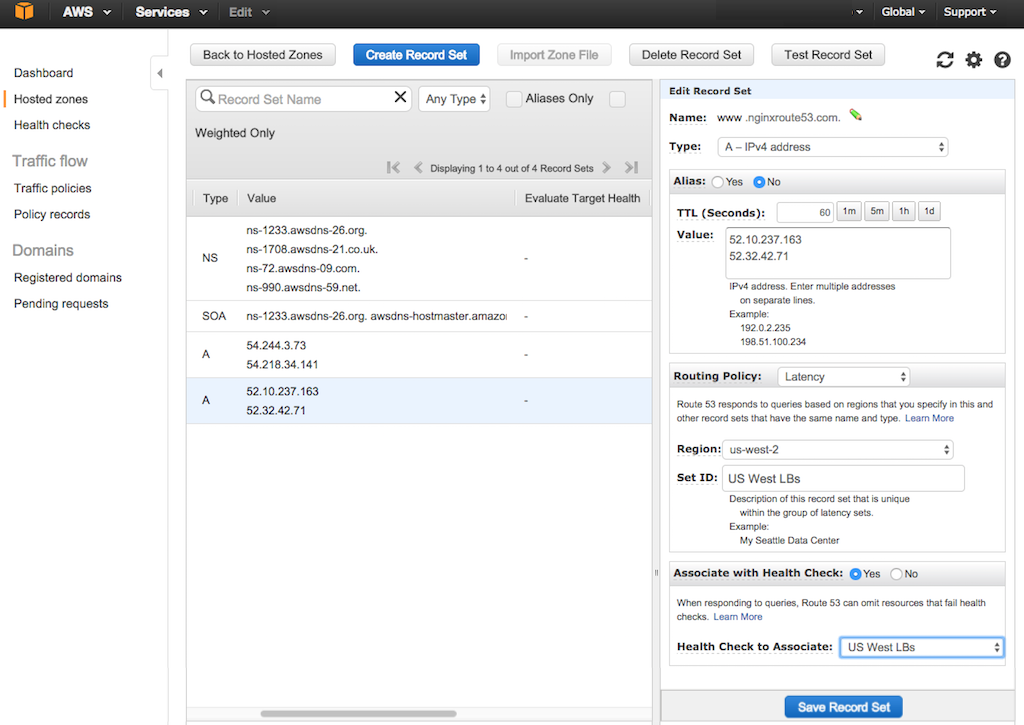

@@ -123,21 +123,21 @@ Create records sets for your domain:

4. Fill in the fields in the **Create Record Set** column:

- - **Name** – You can leave this field blank, but for this guide we are setting the name to www.nginxroute53.com.

- - **Type** – A – IPv4 address.

- - **Alias** – No.

- - **TTL (Seconds)** – 60.

+ - **Name** – You can leave this field blank, but for this guide we are setting the name to **www.nginxroute53.com**.

+ - **Type** – **A – IPv4 address**.

+ - **Alias** – **No**.

+ - **TTL (Seconds)** – **60**.

- **Note**: Reducing TTL from the default of 300 in this way can decrease the time that it takes for Route 53 to fail over when both NGINX Plus load balancers in the region are down, but there is always a delay of about two minutes regardless of the TTL setting. This is a built‑in limitation of Route 53.

+ **Note**: Reducing TTL from the default of **300** in this way can decrease the time that it takes for Route 53 to fail over when both NGINX Plus load balancers in the region are down, but there is always a delay of about two minutes regardless of the TTL setting. This is a built‑in limitation of Route 53.

- - **Value** – [Elastic IP addresses](#elastic-ip) of the NGINX Plus load balancers in the first region [in this guide, US West (Oregon)].

- - **Routing Policy** – Latency.

+ - **Value** – [Elastic IP addresses](#elastic-ip) of the NGINX Plus load balancers in the first region [in this guide, **US West (Oregon)**].

+ - **Routing Policy** – **Latency**.

-5. A new area opens when you select Latency. Fill in the fields as indicated (see the figure below):

+5. A new area opens when you select **Latency**. Fill in the fields as indicated (see the figure below):

- - **Region** – Region to which the load balancers belong (in this guide, us-west-2).

- - **Set ID** – Identifier for this group of load balancers (in this guide, US West LBs).

- - **Associate with Health Check** – No.

+ - **Region** – Region to which the load balancers belong (in this guide, **us‑west‑2**).

+ - **Set ID** – Identifier for this group of load balancers (in this guide, **US West LBs**).

+ - **Associate with Health Check** – **No**.

When you complete all fields, the tab looks like this:

@@ -145,7 +145,7 @@ Create records sets for your domain:

6. Click the Create button.

-7. Repeat Steps 3 through 6 for the load balancers in the other region [in this guide, US East (N. Virginia)].

+7. Repeat Steps 3 through 6 for the load balancers in the other region [in this guide, **US East (N. Virginia)**].

You can now test your website. Insert your domain name into a browser and see that your request is being load balanced between servers based on your location.
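The record set fields from Steps 4 and 5 map closely onto the request body that the Route 53 API accepts. As a minimal sketch, assuming you script this with Python and boto3 instead of the console, the helper below only builds the change batch and does not call AWS. The function name `latency_record_change` and the IP addresses (taken from the 203.0.113.0/24 documentation range) are hypothetical placeholders.

```python
import json


def latency_record_change(name, region, set_id, ip_addresses, ttl=60):
    """Build a Route 53 change batch for one latency-routed A record set."""
    return {
        "Changes": [
            {
                "Action": "CREATE",
                "ResourceRecordSet": {
                    "Name": name,              # Name field in the console
                    "Type": "A",               # A - IPv4 address
                    "SetIdentifier": set_id,   # Set ID field
                    "Region": region,          # setting Region selects latency routing
                    "TTL": ttl,                # TTL (Seconds)
                    "ResourceRecords": [{"Value": ip} for ip in ip_addresses],
                },
            }
        ]
    }


batch = latency_record_change(
    name="www.nginxroute53.com",
    region="us-west-2",
    set_id="US West LBs",
    ip_addresses=["203.0.113.10", "203.0.113.11"],  # placeholder Elastic IPs
)
print(json.dumps(batch, indent=2))
```

Passing the result as `ChangeBatch` to the boto3 Route 53 client's `change_resource_record_sets` call, together with your hosted zone ID, should produce a record set equivalent to the one created in the console.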

@@ -172,21 +172,21 @@ We create health checks both for each NGINX Plus load balancer individually and

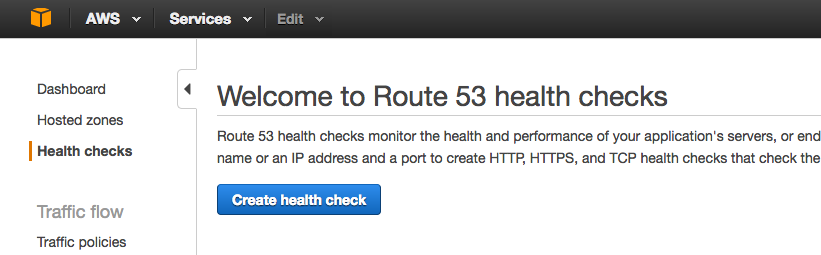

-2. Click the Create health check button. In the Configure health check form that opens, specify the following values, then click the Next button.

+2. Click the Create health check button. In the **Configure health check** form that opens, specify the following values, then click the Next button.

- - **Name** – Identifier for an NGINX Plus load balancer instance, for example US West LB 1.

- - **What to monitor** – Endpoint.

- - **Specify endpoint by** – IP address.

+ - **Name** – Identifier for an NGINX Plus load balancer instance, for example **US West LB 1**.

+ - **What to monitor** – **Endpoint**.

+ - **Specify endpoint by** – **IP address**.

- **IP address** – The [elastic IP address](#elastic-ip) of the NGINX Plus load balancer.

- - **Port** – The port advertised to clients for your domain or web service (the default is 80).

+ - **Port** – The port advertised to clients for your domain or web service (the default is **80**).

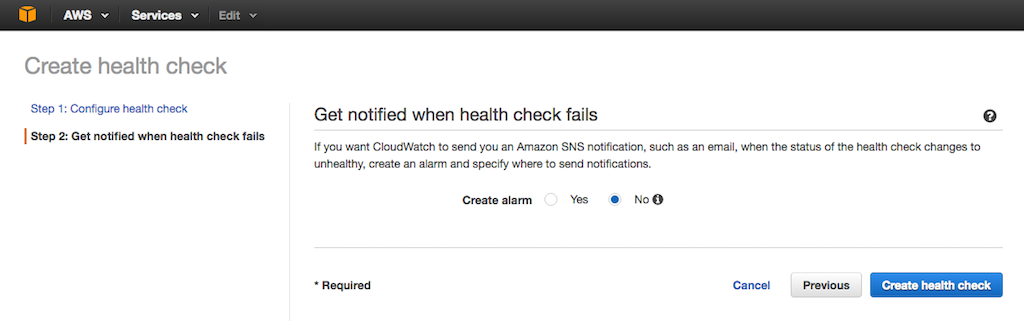

-3. On the Get notified when health check fails screen that opens, set the **Create alarm** radio button to **Yes** or **No** as appropriate, then click the Create health check button.

+3. On the **Get notified when health check fails** screen that opens, set the **Create alarm** radio button to **Yes** or **No** as appropriate, then click the Create health check button.

-4. Repeat Steps 2 and 3 for your other NGINX Plus load balancers (in this guide, US West LB 2, US East LB 1, and US East LB 2).

+4. Repeat Steps 2 and 3 for your other NGINX Plus load balancers (in this guide, **US West LB 2**, **US East LB 1**, and **US East LB 2**).

5. Proceed to the next section to configure health checks for the load balancer pairs.

@@ -195,18 +195,18 @@ We create health checks both for each NGINX Plus load balancer individually and

1. Click the Create health check button.

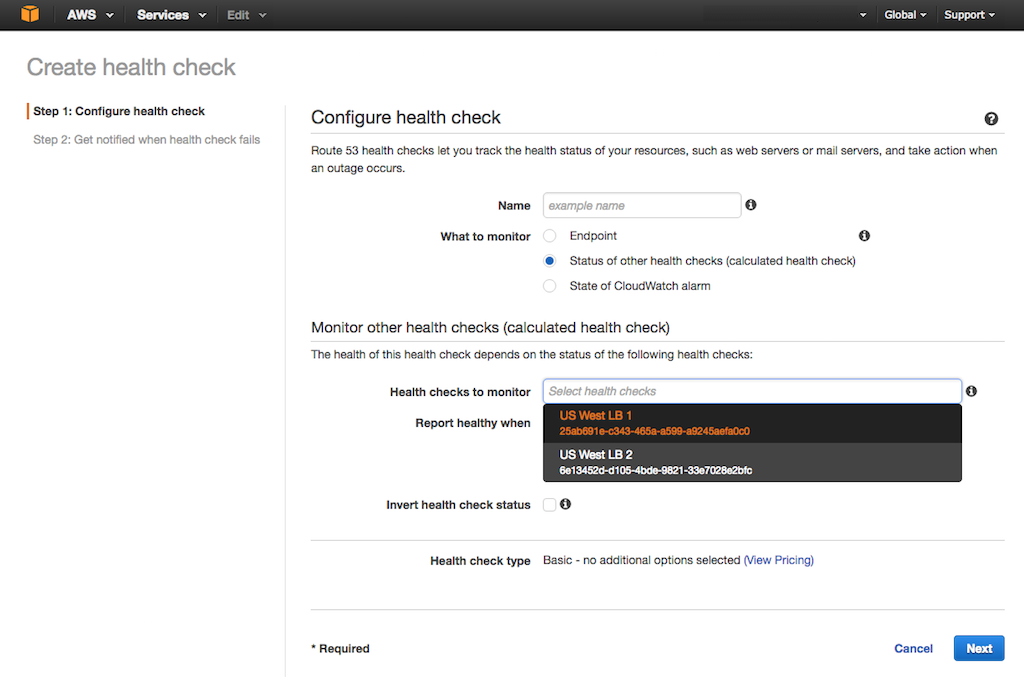

-2. In the Configure health check form that opens, specify the following values, then click the Next button.

+2. In the **Configure health check** form that opens, specify the following values, then click the Next button.

- - **Name** – Identifier for the pair of NGINX Plus load balancers in the first region, for example US West LBs.

- - **What to monitor** – Status of other health checks .

+ - **Name** – Identifier for the pair of NGINX Plus load balancers in the first region, for example **US West LBs**.

+ - **What to monitor** – **Status of other health checks**.

- **Health checks to monitor** – The health checks of the two US West load balancers (add them one after the other by clicking in the box and choosing them from the drop‑down menu as shown).

- - **Report healthy when** – at least 1 of 2 selected health checks are healthy (the choices in this field are obscured in the screenshot by the drop‑down menu).

+ - **Report healthy when** – **at least 1 of 2 selected health checks are healthy** (the choices in this field are obscured in the screenshot by the drop‑down menu).

-3. On the Get notified when health check fails screen that opens, set the **Create alarm** radio button as appropriate (see Step 5 in the previous section), then click the Create health check button.

+3. On the **Get notified when health check fails** screen that opens, set the **Create alarm** radio button as appropriate (see Step 3 in the previous section), then click the Create health check button.

-4. Repeat Steps 1 through 3 for the paired load balancers in the other region [in this guide, US East (N. Virginia)].

+4. Repeat Steps 1 through 3 for the paired load balancers in the other region [in this guide, **US East (N. Virginia)**].
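The two kinds of health check described above (one per load balancer, one per regional pair) can also be expressed as `HealthCheckConfig` structures for the Route 53 API. The following is a rough sketch, not the guide's own method: the helper names, the health check IDs, and the IP addresses are hypothetical, and the `HTTP` type is an assumption because the console steps here do not name the protocol. The console **Name** field is not part of this structure and is omitted.

```python
def endpoint_check(ip_address, port=80):
    """Config for a single load balancer, monitored by IP address."""
    return {
        "Type": "HTTP",  # assumption: plain HTTP check
        "IPAddress": ip_address,
        "Port": port,
    }


def calculated_check(child_ids, healthy_threshold=1):
    """Config that reports on the status of other health checks."""
    return {
        "Type": "CALCULATED",
        "ChildHealthChecks": list(child_ids),
        # Healthy when at least this many child checks are healthy.
        "HealthThreshold": healthy_threshold,
    }


# Two individual checks for the first region (placeholder Elastic IPs).
west_checks = [endpoint_check("203.0.113.10"), endpoint_check("203.0.113.11")]

# One paired check; the IDs stand in for the values Route 53 returns
# when the individual checks are created.
west_pair = calculated_check(["hc-west-lb1-id", "hc-west-lb2-id"])
print(west_pair)
```

Each dictionary would be passed as `HealthCheckConfig` to the boto3 `create_health_check` call along with a unique `CallerReference`. A threshold of 1 mirrors the "at least 1 of 2" choice, so the pair is reported unhealthy only when both load balancers fail.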

When you have finished configuring all six health checks, the **Health checks** tab looks like this:

@@ -223,13 +223,13 @@ When you have finished configuring all six health checks, the **Health checks**

The tab changes to display the record sets for the domain.

-3. In the list of record sets that opens, click the row for the record set belonging to your first region [in this guide, US West (Oregon)]. The Edit Record Set column opens on the right side of the tab.

+3. In the list of record sets that opens, click the row for the record set belonging to your first region [in this guide, **US West (Oregon)**]. The Edit Record Set column opens on the right side of the tab.

-4. Change the **Associate with Health Check** radio button to Yes.

+4. Change the **Associate with Health Check** radio button to **Yes**.

-5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, US West LBs).

+5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, **US West LBs**).

6. Click the Save Record Set button.
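Associating a health check with an existing record set amounts to resubmitting that record set with one extra field. The sketch below assumes a scripted workflow with Python and boto3; `with_health_check`, the health check ID, and the IP address are hypothetical, and the code only builds the request body.

```python
import copy


def with_health_check(record_set, health_check_id):
    """Return an UPSERT change batch that attaches a health check."""
    updated = copy.deepcopy(record_set)  # leave the caller's dict untouched
    updated["HealthCheckId"] = health_check_id
    return {"Changes": [{"Action": "UPSERT", "ResourceRecordSet": updated}]}


existing = {
    "Name": "www.nginxroute53.com",
    "Type": "A",
    "SetIdentifier": "US West LBs",
    "Region": "us-west-2",
    "TTL": 60,
    "ResourceRecords": [{"Value": "203.0.113.10"}],  # placeholder Elastic IP
}

change = with_health_check(existing, "hc-west-pair-id")  # placeholder ID
print(change["Changes"][0]["ResourceRecordSet"]["HealthCheckId"])
```

Using `UPSERT` here means the same request works whether or not the record set already carries a health check.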

@@ -242,7 +242,7 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

**Note:** Some commands require `root` privilege. If appropriate for your environment, prefix commands with the `sudo` command.

-1. Connect to the US West LB 1 instance. For instructions, see Connecting to an EC2 Instance.

+1. Connect to the **US West LB 1** instance. For instructions, see Connecting to an EC2 Instance.

2. Change directory to **/etc/nginx/conf.d**.

@@ -250,7 +250,7 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

cd /etc/nginx/conf.d

```

-3. Edit the west-lb1.conf file and add the **@healthcheck** location to set up health checks.

+3. Edit the **west‑lb1.conf** file and add the **@healthcheck** location to set up health checks.

```nginx

upstream backend-servers {

@@ -282,9 +282,9 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

nginx -s reload

```

-5. Repeat Steps 1 through 4 for the other three load balancers (US West LB 2, US East LB 1, and US East LB2).

+5. Repeat Steps 1 through 4 for the other three load balancers (**US West LB 2**, **US East LB 1**, and **US East LB 2**).

- In Step 3, change the filename as appropriate (west-lb2.conf, east-lb1.conf, and east-lb2.conf). In the east-lb1.conf and east-lb2.conf files, the `server` directives specify the public DNS names of Backup 3 and Backup 4.

+ In Step 3, change the filename as appropriate (**west‑lb2.conf**, **east‑lb1.conf**, and **east‑lb2.conf**). In the **east‑lb1.conf** and **east‑lb2.conf** files, the `server` directives specify the public DNS names of Backend 3 and Backend 4.

## Appendix

@@ -307,31 +307,31 @@ Step‑by‑step instructions for creating EC2 instances and installing NGINX so

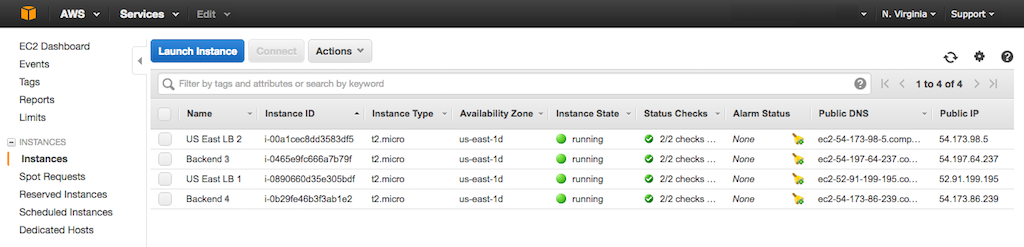

Assign the following names to the instances, and then install the indicated NGINX software.

-- In the first region, which is US West (Oregon) in this guide:

+- In the first region, which is **US West (Oregon)** in this guide:

- Two load balancer instances running NGINX Plus:

- - US West LB 1

- - US West LB 2

+ - **US West LB 1**

+ - **US West LB 2**

- Two backend instances running NGINX Open Source:

- * Backend 1

- - Backend 2

+ * **Backend 1**

+ - **Backend 2**

-- In the second region, which is US East (N. Virginia) in this guide:

+- In the second region, which is **US East (N. Virginia)** in this guide:

- Two load balancer instances running NGINX Plus:

- - US East LB 1

- - US East LB 2

+ - **US East LB 1**

+ - **US East LB 2**

- Two backend instances running NGINX Open Source:

- * Backend 3

- - Backend 4

+ * **Backend 3**

+ - **Backend 4**

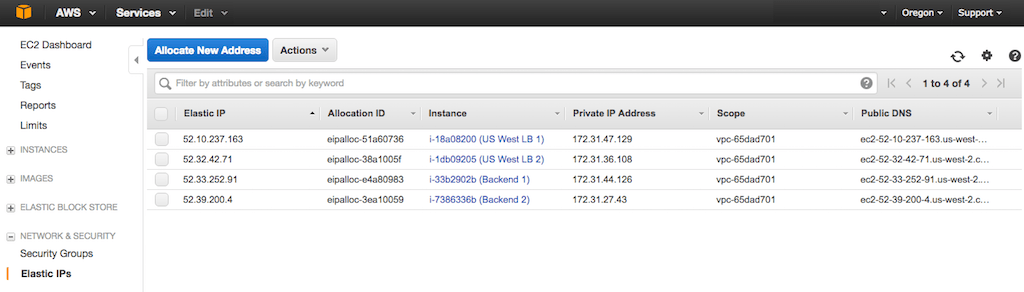

-Here's the **Instances** tab after we create the four instances in the N. Virginia region.

+Here's the **Instances** tab after we create the four instances in the **N. Virginia** region.

-4. Change the **Associate with Health Check** radio button to Yes.

+4. Change the **Associate with Health Check** radio button to **Yes**.

-5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, US West LBs).

+5. In the **Health Check to Associate** field, select the paired health check for your first region (in this guide, **US West LBs**).

6. Click the Save Record Set button.

@@ -242,7 +242,7 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

**Note:** Some commands require `root` privilege. If appropriate for your environment, prefix commands with the `sudo` command.

-1. Connect to the US West LB 1 instance. For instructions, see Connecting to an EC2 Instance.

+1. Connect to the **US West LB 1** instance. For instructions, see Connecting to an EC2 Instance.

2. Change directory to **/etc/nginx/conf.d**.

@@ -250,7 +250,7 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

cd /etc/nginx/conf.d

```

-3. Edit the west-lb1.conf file and add the **@healthcheck** location to set up health checks.

+3. Edit the **west‑lb1.conf** file and add the **@healthcheck** location to set up health checks.

```nginx

upstream backend-servers {

@@ -282,9 +282,9 @@ These instructions assume that you have configured NGINX Plus on two EC2 instan

nginx -s reload

```

-5. Repeat Steps 1 through 4 for the other three load balancers (US West LB 2, US East LB 1, and US East LB2).

+5. Repeat Steps 1 through 4 for the other three load balancers (**US West LB 2**, **US East LB 1**, and **US East LB2**).

- In Step 3, change the filename as appropriate (west-lb2.conf, east-lb1.conf, and east-lb2.conf). In the east-lb1.conf and east-lb2.conf files, the `server` directives specify the public DNS names of Backup 3 and Backup 4.

+ In Step 3, change the filename as appropriate (**west‑lb2.conf**, **east‑lb1.conf**, and **east‑lb2.conf**). In the **east‑lb1.conf** and **east‑lb2.conf** files, the `server` directives specify the public DNS names of Backup 3 and Backup 4.
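The hunk above shows only the first line of the configuration, so here is a minimal sketch of what a **@healthcheck** location in **west‑lb1.conf** might look like. It is an illustration under stated assumptions, not the guide's exact file: the two `server` hostnames are hypothetical placeholders for the public DNS names of the backend instances, and the timeout and interval values are examples only. The `health_check` directive is specific to NGINX Plus and requires a shared memory `zone` in the upstream group.

```nginx
upstream backend-servers {
    # Shared memory zone, required for active health checks
    zone backend-servers 64k;

    # Hypothetical placeholders: substitute the public DNS names
    # of the backend instances in this region
    server backend1.example.com;
    server backend2.example.com;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend-servers;
    }

    # Named location used only for active health checks
    location @healthcheck {
        proxy_pass http://backend-servers;
        proxy_connect_timeout 1s;   # example value
        proxy_read_timeout 1s;      # example value
        health_check uri=/ interval=1s;
    }
}
```

Because the health check lives in a named location, it reuses the `proxy_pass` target of the main location without being reachable by client requests.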

## Appendix

@@ -307,31 +307,31 @@ Step‑by‑step instructions for creating EC2 instances and installing NGINX so

Assign the following names to the instances, and then install the indicated NGINX software.

-- In the first region, which is US West (Oregon) in this guide:

+- In the first region, which is **US West (Oregon)** in this guide:

- Two load balancer instances running NGINX Plus:

- - US West LB 1

- - US West LB 2

+ - **US West LB 1**

+ - **US West LB 2**

- Two backend instances running NGINX Open Source:

- * Backend 1

- - Backend 2

+ * **Backend 1**

+ - **Backend 2**

-- In the second region, which is US East (N. Virginia) in this guide:

+- In the second region, which is **US East (N. Virginia)** in this guide:

- Two load balancer instances running NGINX Plus:

- - US East LB 1

- - US East LB 2

+ - **US East LB 1**

+ - **US East LB 2**

- Two backend instances running NGINX Open Source:

- * Backend 3

- - Backend 4

+ * **Backend 3**

+ - **Backend 4**

-Here's the **Instances** tab after we create the four instances in the N. Virginia region.

+Here's the **Instances** tab after we create the four instances in the **N. Virginia** region.

@@ -359,14 +359,14 @@ Perform these steps on all eight instances.

-After you complete the instructions on all instances, the list for a region (here, Oregon) looks like this:

+After you complete the instructions on all instances, the list for a region (here, **Oregon**) looks like this:

### Configuring NGINX Open Source on the Backend Servers

-Perform these steps on all four backend servers: Backend 1, Backend 2, Backend 3, and Backend 4. In Step 3, substitute the appropriate name for `Backend X` in the **index.html** file.

+Perform these steps on all four backend servers: **Backend 1**, **Backend 2**, **Backend 3**, and **Backend 4**. In Step 3, substitute the appropriate name for `Backend X` in the **index.html** file.

**Note:** Some commands require `root` privilege. If appropriate for your environment, prefix commands with the `sudo` command.

@@ -421,7 +421,7 @@ Perform these steps on all four backend servers:

### Configuring NGINX Plus on the Load Balancers

-Perform these steps on all four backend servers: US West LB 1, US West LB 2, US East LB 1, and US West LB 2.

+Perform these steps on all four backend servers: **US West LB 1**, **US West LB 2**, **US East LB 1**, and **US West LB 2**.

**Note:** Some commands require `root` privilege. If appropriate for your environment, prefix commands with the `sudo` command.

@@ -439,10 +439,10 @@ Perform these steps on all four backend servers:

- - For US West LB 1 – west-lb1.conf

- - For US West LB 2 – west-lb2.conf

- - For US East LB 1 – east-lb1.conf

- - For US West LB 2 – east-lb2.conf

+ - For **US West LB 1** – **west‑lb1.conf**

+ - For **US West LB 2** – **west‑lb2.conf**

+ - For **US East LB 1** – **east‑lb1.conf**

+ - For **US West LB 2** – **east‑lb2.conf**

In the `server` directives in the `upstream` block, substitute the public DNS names of the backend instances in the region; to learn them, see the **Instances** tab in the EC2 Dashboard.

diff --git a/content/nginx/deployment-guides/global-server-load-balancing/ns1-global-server-load-balancing.md b/content/nginx/deployment-guides/global-server-load-balancing/ns1-global-server-load-balancing.md

index d1284e106..8e425506e 100644

--- a/content/nginx/deployment-guides/global-server-load-balancing/ns1-global-server-load-balancing.md

+++ b/content/nginx/deployment-guides/global-server-load-balancing/ns1-global-server-load-balancing.md

@@ -69,9 +69,9 @@ The solution functions alongside other NS1 capabilities, such as geo‑proximal

5. The **Add Record** window pops up. Enter the following values:

- - **Record Type** – A (the default).

+ - **Record Type** – **A** (the default).

- name – Leave blank unless you are creating the ``A`` record for a subdomain.

- - **TTL** – 3600 is the default, which we are not changing.

+ - **TTL** – **3600** is the default, which we are not changing.

- **ANSWERS** – The public IP address of the first NGINX Plus instance. To add each of the other instances, click the Add Answer button. (In this guide we're using private IP addresses in the 10.0.0.0/8 range as examples.)

Click the Save All Changes button.

@@ -86,24 +86,24 @@ The solution functions alongside other NS1 capabilities, such as geo‑proximal

-8. In the **Answer Metadata** window that pops up, click Up/down in the STATUS section of the SETTING column, if it is not already selected. Click the **Select** box in the AVAILABLE column, and then select either Up or Down from the drop‑down menu. In this guide we're selecting Up to indicate that the NGINX Plus instance is operational.

+8. In the **Answer Metadata** window that pops up, click Up/down in the STATUS section of the SETTING column, if it is not already selected. Click the **Select** box in the AVAILABLE column, and then select either **Up** or **Down** from the drop‑down menu. In this guide we're selecting **Up** to indicate that the NGINX Plus instance is operational.

9. Click a value in the GEOGRAPHICAL section of the SETTING column and specify the location of the NGINX Plus instance. Begin by choosing one of the several types of codes that NS1 offers for identifying locations:

- **Canadian province(s)** – Two‑letter codes for Canadian provinces

- **Country/countries** – Two‑letter codes for nations and territories

- - **Geographic region(s)** – Identifiers like US-WEST and ASIAPAC

+ - **Geographic region(s)** – Identifiers like **US‑WEST** and **ASIAPAC**

- **ISO region code** – Identification codes for nations and territories as defined in [ISO 3166](https://www.iso.org/iso-3166-country-codes.html)

- **Latitude** – Degrees, minutes, and seconds of latitude (northern or southern hemisphere)

- **Longitude** – Degrees, minutes, and seconds of longitude (eastern or western hemisphere)

- **US State(s)** – Two‑letter codes for US states

- In this guide we're using **Country/countries** codes. For the first NGINX Plus instance, we select Americas > Northern America > United States (US) and click the Ok button.

+ In this guide we're using **Country/countries** codes. For the first NGINX Plus instance, we select **Americas > Northern America > United States (US)** and click the Ok button.

-10. Repeat Steps 7–9 for both of the other two NGINX Plus instances. For the country in Step 9, we're selecting Europe > Western Europe > Germany (DE) for NGINX Plus instance 2 and Asia > South‑Eastern Asia > Singapore (SG) for NGINX Plus instance 3.

+10. Repeat Steps 7–9 for both of the other two NGINX Plus instances. For the country in Step 9, we're selecting **Europe > Western Europe > Germany (DE)** for NGINX Plus instance 2 and **Asia > South‑Eastern Asia > Singapore (SG)** for NGINX Plus instance 3.

When finished with both instances, on the details page for the ``A`` record click the Save Record button.

@@ -113,9 +113,9 @@ The solution functions alongside other NS1 capabilities, such as geo‑proximal

12. In the **Add Filters** window that pops up, click the plus sign (+) on the button for each filter you want to apply. In this guide, we're configuring the filters in this order:

- - Up in the HEALTHCHECKS section

- - Geotarget Country in the GEOGRAPHIC section

- - Select First N in the TRAFFIC MANAGEMENT section

+ - **Up** in the **HEALTHCHECKS** section

+ - **Geotarget Country** in the **GEOGRAPHIC** section

+ - **Select First N** in the **TRAFFIC MANAGEMENT** section

Click the Save Filter Chain button.

@@ -128,17 +128,17 @@ In this section we install and configure the NS1 agent on the same hosts as our

1. Follow the instructions in the [NS1 documentation](https://help.ns1.com/hc/en-us/articles/360020474154) to set up and connect a separate data feed for each of the three NGINX Plus instances, which NS1 calls _answers_.

- On the first page (**Configure a new data source from NSONE Data Feed API v1**) specify a name for the _data source_, which is the administrative container for the data feeds you will be creating. Use the same name each of the three times you go through the instructions. We're naming the data source NGINX-GSLB.

+ On the first page (**Configure a new data source from NSONE Data Feed API v1**) specify a name for the _data source_, which is the administrative container for the data feeds you will be creating. Use the same name each of the three times you go through the instructions. We're naming the data source **NGINX‑GSLB**.

On the next page (**Create Feed from NSONE Data Feed API v1**), create a data feed for the instance. Because the **Name** field is just for internal use, any value is fine. The value in the **Label** field is used in the YAML configuration file for the instance (see Step 4 below). We're specifying labels that indicate the country (using the ISO 3166 codes) in which the instance is running:

- - us-nginxgslb-datafeed for instance 1 in the US

- - de-nginxgslb-datafeed for instance 2 in Germany

- - sg-nginxgslb-datafeed for instance 3 in Singapore

+ - **us‑nginxgslb‑datafeed** for instance 1 in the US

+ - **de‑nginxgslb‑datafeed** for instance 2 in Germany

+ - **sg‑nginxgslb‑datafeed** for instance 3 in Singapore

- After creating the three feeds, note the value in the **Feeds URL** field on the INTEGRATIONS tab. The final element of the URL is the ``

-3. The **Shed Load** filter is added as the fourth (lowest) box in the **Active Filters** section. Move it to be third by clicking and dragging it above the Select First N box.

+3. The **Shed Load** filter is added as the fourth (lowest) box in the **Active Filters** section. Move it to be third by clicking and dragging it above the **Select First N** box.

4. Click the Save Filter Chain button.

@@ -363,9 +363,9 @@ First we perform these steps to create the shed filter:

7. In the **Answer Metadata** window that opens, set values for the following metadata. In each case, click the icon in the FEED column of the metadata's row, then select or enter the indicated value in the AVAILABLE column. (For testing purposes, we're setting very small values for the watermarks so that the threshold is exceeded very quickly.)

- - **Active connections** – us-nginxgslb-datafeed

- - **High watermark** – 5

- - **Low watermark** – 2

+ - **Active connections** – **us‑nginxgslb‑datafeed**

+ - **High watermark** – **5**

+ - **Low watermark** – **2**

After setting all three, click the Ok button. (The screenshot shows the window just before this action.)

diff --git a/content/nginx/deployment-guides/google-cloud-platform/high-availability-all-active.md b/content/nginx/deployment-guides/google-cloud-platform/high-availability-all-active.md

index 2596ddbf5..cf9e705b0 100644

--- a/content/nginx/deployment-guides/google-cloud-platform/high-availability-all-active.md

+++ b/content/nginx/deployment-guides/google-cloud-platform/high-availability-all-active.md

@@ -15,7 +15,7 @@ This guide explains how to deploy F5 NGINX Plus in a high-availability configura

**Notes:**

- The GCE environment changes constantly. This could include names and arrangements of GUI elements. This guide was accurate when published. But, some GCE GUI elements might have changed over time. Use this guide as a reference and adapt to the current GCE working environment.

-- The configuration described in this guide allows anyone from a public IP address to access the NGINX Plus instances. While this works in common scenarios in a test environment, we do not recommend it in production. Block external HTTP/HTTPS access to app-1 and app-2 instances to external IP address before production deployment. Alternatively, remove the external IP addresses for all application instances, so they're accessible only on the internal GCE network.

+- The configuration described in this guide allows anyone from a public IP address to access the NGINX Plus instances. While this works in common scenarios in a test environment, we do not recommend it in production. Block external HTTP/HTTPS access to **app‑1** and **app‑2** instances to external IP address before production deployment. Alternatively, remove the external IP addresses for all application instances, so they're accessible only on the internal GCE network.

@@ -38,7 +38,7 @@ The GCE network LB assigns each new client to a specific NGINX Plus LB. This ass

NGINX Plus LB uses the round-robin algorithm to forward requests to specific app instances. It also adds a session cookie. It keeps future requests from the same client on the same app instance as long as it's running.

-This deployment guide uses two groups of app instances: – app-1 and app-2. It demonstrates [load balancing](https://www.nginx.com/products/nginx/load-balancing/) between different app types. But both groups have the same app configurations.

+This deployment guide uses two groups of app instances: – **app‑1** and **app‑2**. It demonstrates [load balancing](https://www.nginx.com/products/nginx/load-balancing/) between different app types. But both groups have the same app configurations.

You can adapt the deployment to distribute unique connections to different groups of app instances. This can be done by creating discrete upstream blocks and routing content based on the URI.
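As a rough sketch of the two ideas above (round-robin with a session cookie, and separate upstream blocks selected by URI), the load balancer configuration might look like the following. This is an assumption-laden illustration rather than the guide's actual file: the internal IP addresses, the cookie name `srv_id`, the one-hour lifetime, and the `/app-1/` and `/app-2/` URI prefixes are all hypothetical. The `sticky cookie` directive is specific to NGINX Plus.

```nginx
# One upstream group per application type; round-robin is the default method
upstream app-1 {
    zone app-1 64k;
    server 10.10.10.11:80;   # hypothetical internal address
    server 10.10.10.12:80;   # hypothetical internal address
    # Session persistence: pin a client to the instance that served it first
    sticky cookie srv_id expires=1h path=/;
}

upstream app-2 {
    zone app-2 64k;
    server 10.10.10.21:80;   # hypothetical internal address
    server 10.10.10.22:80;   # hypothetical internal address
    sticky cookie srv_id expires=1h path=/;
}

server {
    listen 80;

    # Route by URI prefix to the matching group (prefixes are examples)
    location /app-1/ {
        proxy_pass http://app-1;
    }

    location /app-2/ {
        proxy_pass http://app-2;
    }
}
```

With the cookie in place, a client stays on the same app instance for as long as that instance is running; if it goes down, NGINX Plus selects another server in the group.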

@@ -67,19 +67,19 @@ All component names, like projects and instances, are examples only. You can cha

Create a new GCE project to host the all‑active NGINX Plus deployment.

-1. Log into the [GCP Console](http://console.cloud.google.com) at console.cloud.google.com.

+1. Log into the [GCP Console](http://console.cloud.google.com) at **console.cloud.google.com**.

-2. The GCP Home > Dashboard tab opens. Its contents depend on whether you have any existing projects.

+2. The GCP **Home > Dashboard** tab opens. Its contents depend on whether you have any existing projects.

- If there are no existing projects, click the Create a project button.

- - If there are existing projects, the name of one of them appears in the upper left of the blue header bar (in the screenshot, it's My Test Project). Click the project name and select Create project from the menu that opens.

+ - If there are existing projects, the name of one of them appears in the upper left of the blue header bar (in the screenshot, it's My Test Project). Click the project name and select **Create project** from the menu that opens.

-3. Type your project name in the New Project window that pops up, then click CREATE. We're naming the project NGINX Plus All-Active-LB.

+3. Type your project name in the **New Project** window that pops up, then click CREATE. We're naming the project **NGINX Plus All‑Active‑LB**.

@@ -87,24 +87,24 @@ Create a new GCE project to host the all‑active NGINX Plus deployment.

Create firewall rules that allow access to the HTTP and HTTPS ports on your GCE instances. You'll attach the rules to all the instances you create for the deployment.

-1. Navigate to the Networking > Firewall rules tab and click + CREATE FIREWALL RULE. (The screenshot shows the default rules provided by GCE.)

+1. Navigate to the **Networking > Firewall rules** tab and click + CREATE FIREWALL RULE. (The screenshot shows the default rules provided by GCE.)

-2. Fill in the fields on the Create a firewall rule screen that opens:

+2. Fill in the fields on the **Create a firewall rule** screen that opens:

- - **Name** – nginx-plus-http-fw-rule

- - **Description** – Allow access to ports 80, 8080, and 443 on all NGINX Plus instances

- - Source filter – On the drop-down menu, select either Allow from any source (0.0.0.0/0), or IP range if you want to restrict access to users on your private network. In the second case, fill in the Source IP ranges field that opens. In the screenshot, we are allowing unrestricted access.

- - Allowed protocols and ports – tcp:80; tcp:8080; tcp:443

+ - **Name** – **nginx‑plus‑http‑fw‑rule**

+ - **Description** – **Allow access to ports 80, 8080, and 443 on all NGINX Plus instances**

+ - **Source filter** – On the drop-down menu, select either **Allow from any source (0.0.0.0/0)**, or **IP range** if you want to restrict access to users on your private network. In the second case, fill in the **Source IP ranges** field that opens. In the screenshot, we are allowing unrestricted access.

+ - **Allowed protocols and ports** – **tcp:80; tcp:8080; tcp:443**

**Note:** As noted in the introduction, allowing access from any public IP address is appropriate only in a test environment. Before deploying the architecture in production, create a firewall rule. Use this rule to block access to the external IP address for your application instances. Alternatively, you can disable external IP addresses for the instances. This limits access only to the internal GCE network.

- - Target tags – nginx-plus-http-fw-rule

+ - **Target tags** – **nginx‑plus‑http‑fw‑rule**

-3. Click the Create button. The new rule is added to the table on the Firewall rules tab.

+3. Click the Create button. The new rule is added to the table on the **Firewall rules** tab.

## Task 2: Creating Source Instances

@@ -123,96 +123,96 @@ The methods to create a source instance are different. Once you've created the s

Create three source VM instances based on a GCE VM image. We're basing our instances on the Ubuntu 16.04 LTS image.

-1. Verify that the NGINX Plus All-Active-LB project is still selected in the Google Cloud Platform header bar.

+1. Verify that the **NGINX Plus All‑Active‑LB** project is still selected in the Google Cloud Platform header bar.

-2. Navigate to the Compute Engine > VM instances tab.

+2. Navigate to the **Compute Engine > VM instances** tab.

-3. Click the Create instance button. The Create an instance page opens.

+3. Click the Create instance button. The **Create an instance** page opens.

#### Creating the First Application Instance from a VM Image

-1. On the Create an instance page, modify or verify the fields and checkboxes as indicated (a screenshot of the completed page appears in the next step):

+1. On the **Create an instance** page, modify or verify the fields and checkboxes as indicated (a screenshot of the completed page appears in the next step):

- - **Name** – nginx-plus-app-1

- - **Zone** – The GCP zone that makes sense for your location. We're using us-west1-a.

- - Machine type – The appropriate size for the level of traffic you anticipate. We're selecting micro, which is ideal for testing purposes.

- - Boot disk – Click Change. The Boot disk page opens to the OS images subtab. Perform the following steps:

+ - **Name** – **nginx‑plus‑app‑1**

+ - **Zone** – The GCP zone that makes sense for your location. We're using **us‑west1‑a**.

+ - **Machine type** – The appropriate size for the level of traffic you anticipate. We're selecting **micro**, which is ideal for testing purposes.

+ - **Boot disk** – Click **Change**. The **Boot disk** page opens to the OS images subtab. Perform the following steps:

- - Click the radio button for the Unix or Linux image of your choice (here, Ubuntu 16.04 LTS).

- - Accept the default values in the Boot disk type and Size (GB) fields (Standard persistent disk and 10 respectively).

+ - Click the radio button for the Unix or Linux image of your choice (here, **Ubuntu 16.04 LTS**).

+ - Accept the default values in the **Boot disk type** and **Size (GB)** fields (**Standard persistent disk** and **10** respectively).

- Click the Select button.

- - Identity and API access – Keep the defaults for the Service account field and Access scopes radio button. Unless you need more granular control.

- - **Firewall** – Verify that neither check box is checked (the default). The firewall rule invoked in the **Tags** field on the Management subtab (see Step 3 below) controls this type of access.

+ - **Identity and API access** – Keep the defaults for the **Service account** field and **Access scopes** radio button, unless you need more granular control.

+ - **Firewall** – Verify that neither check box is checked (the default). The firewall rule invoked in the **Tags** field on the **Management** subtab (see Step 3 below) controls this type of access.

2. Click Management, disk, networking, SSH keys to open that set of subtabs. (The screenshot shows the values entered in the previous step.)

-3. On the Management subtab, modify or verify the fields as indicated:

+3. On the **Management** subtab, modify or verify the fields as indicated:

- - **Description** – NGINX Plus app-1 Image

- - **Tags** – nginx-plus-http-fw-rule

- - **Preemptibility** – Off (recommended) (the default)

- - Automatic restart – On (recommended) (the default)

- - On host maintenance – Migrate VM instance (recommended) (the default)

+ - **Description** – **NGINX Plus app‑1 Image**

+ - **Tags** – **nginx‑plus‑http‑fw‑rule**

+ - **Preemptibility** – **Off (recommended)** (the default)

+ - **Automatic restart** – **On (recommended)** (the default)

+ - **On host maintenance** – **Migrate VM instance (recommended)** (the default)

-4. On the Disks subtab, uncheck the checkbox labeled Delete boot disk when instance is deleted.

+4. On the **Disks** subtab, uncheck the checkbox labeled **Delete boot disk when instance is deleted**.

-5. On the Networking subtab, verify the default settings, in particular Ephemeral for External IP and Off for IP Forwarding.

+5. On the **Networking** subtab, verify the default settings, in particular **Ephemeral** for **External IP** and **Off** for **IP Forwarding**.

-6. If you're using your own SSH public key instead of your default GCE keys, paste the hexadecimal key string on the SSH Keys subtab. Right into the box that reads Enter entire key data.

+6. If you're using your own SSH public key instead of your default GCE keys, paste the hexadecimal key string into the box that reads **Enter entire key data** on the **SSH Keys** subtab.

-7. Click the Create button at the bottom of the Create an instance page.

+7. Click the Create button at the bottom of the **Create an instance** page.

- The VM instances summary page opens. It can take several minutes for the instance to be created. Wait to continue until the green check mark appears.

+ The **VM instances** summary page opens. It can take several minutes for the instance to be created. Wait to continue until the green check mark appears.

#### Creating the Second Application Instance from a VM Image

-1. On the VM instances summary page, click CREATE INSTANCE.

+1. On the **VM instances** summary page, click CREATE INSTANCE.

2. Repeat the steps in Creating the First Application Instance to create the second application instance. Specify the same values as for the first application instance, except:

- - In Step 1, **Name** – nginx-plus-app-2

- - In Step 3, **Description** – NGINX Plus app-2 Image

+ - In Step 1, **Name** – **nginx‑plus‑app‑2**

+ - In Step 3, **Description** – **NGINX Plus app‑2 Image**

#### Creating the Load-Balancing Instance from a VM Image