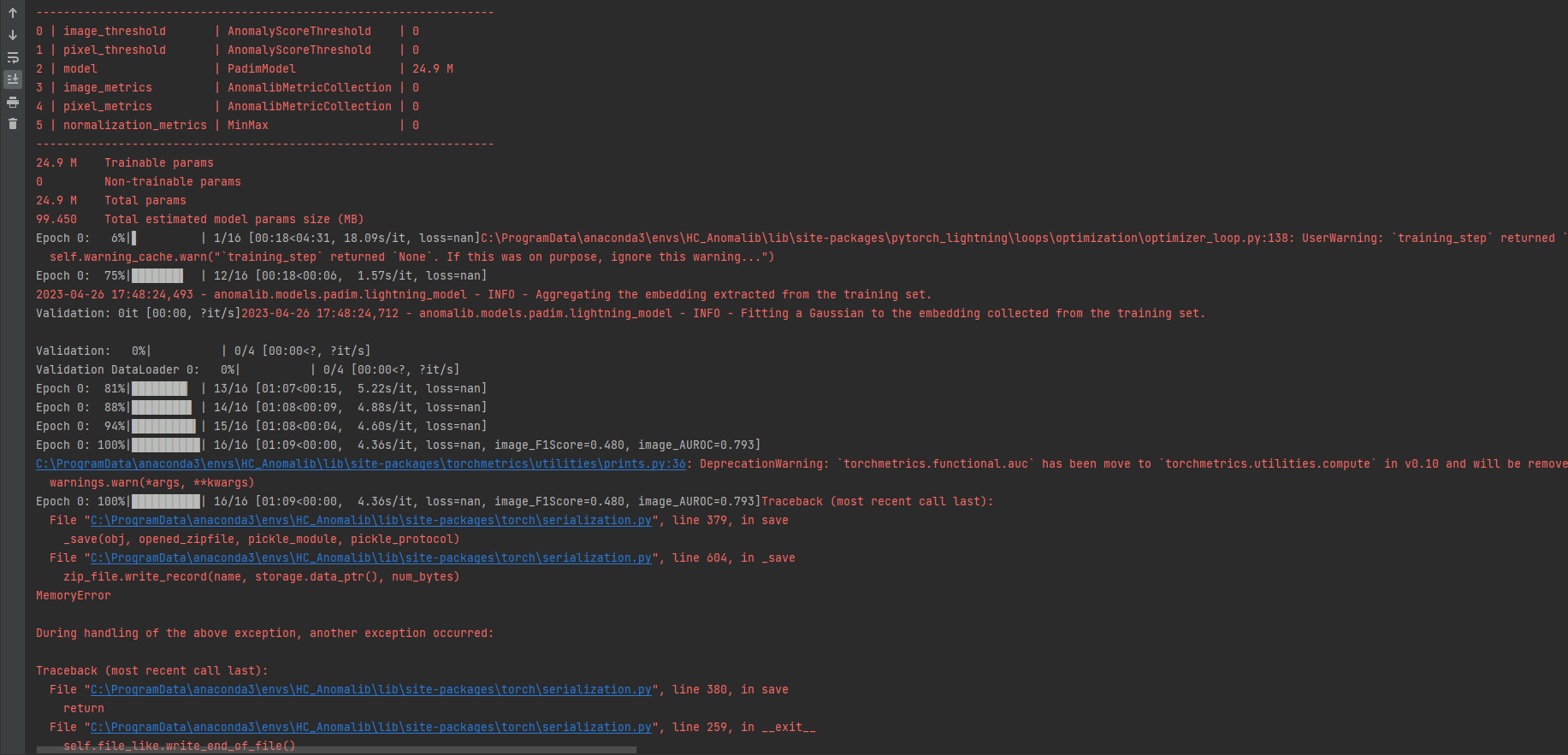

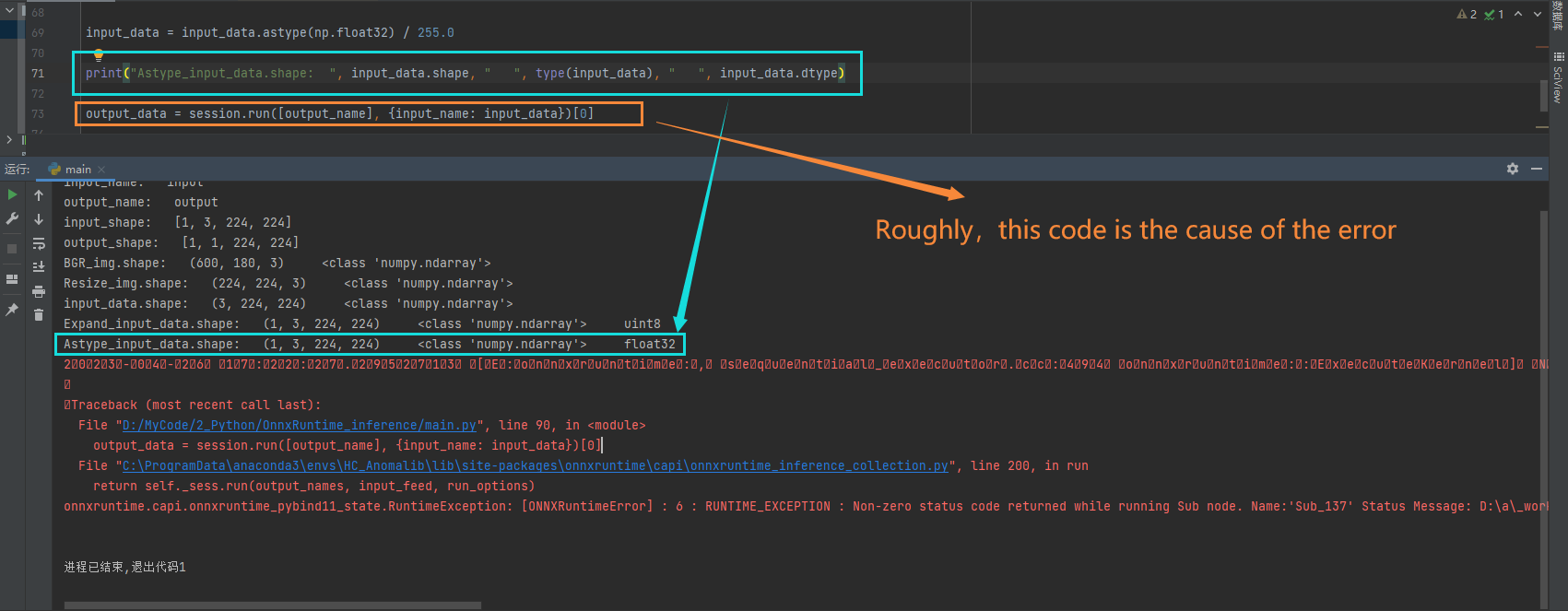

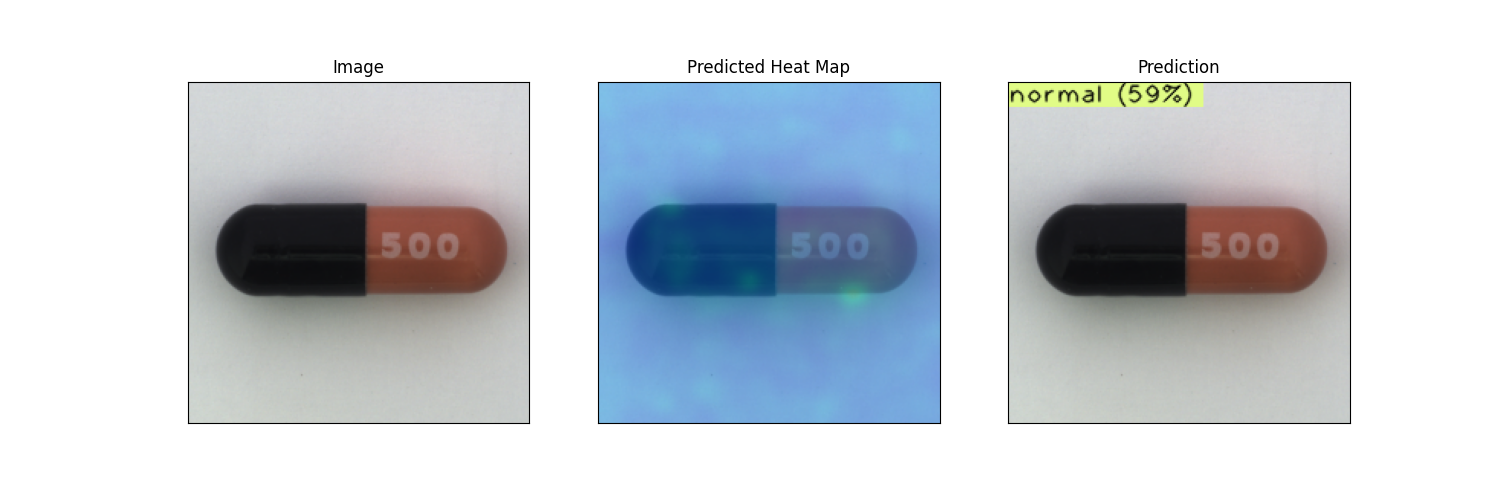

[Bug]: Some bugs about Padim and PatchCore (or maybe it's just a problem I'm having) #1264


Replies: 1 comment 1 reply


Thanks for your patience @laogonggong847. I'll try to reply to your questions one by one.

Padim Training with WideResNet-50

I'm unfortunately unable to reproduce this. Are you certain that you did not change your configuration file? When I use the default settings and change only the backbone to `wide_resnet50_2`, I can train the model. Can you share your config file? For the record, here is my output after the training:

python tools/train.py --model padim

/home/sakcay/Projects/anomalib/src/anomalib/config/config.py:280: UserWarning: config.project.unique_dir is set to False. This does not ensure that your results will be written in an empty directory and you may overwrite files.

warn(

Global seed set to 42

2023-08-11 07:15:33,911 - anomalib.data - INFO - Loading the datamodule

2023-08-11 07:15:33,911 - anomalib.data.utils.transform - INFO - No config file has been provided. Using default transforms.

2023-08-11 07:15:33,911 - anomalib.data.utils.transform - INFO - No config file has been provided. Using default transforms.

2023-08-11 07:15:33,912 - anomalib.models - INFO - Loading the model.

2023-08-11 07:15:33,912 - anomalib.models.components.base.anomaly_module - INFO - Initializing PadimLightning model.

/home/sakcay/.local/lib/python3.10/site-packages/torchmetrics/utilities/prints.py:36: UserWarning: Metric `PrecisionRecallCurve` will save all targets and predictions in buffer. For large datasets this may lead to large memory footprint.

warnings.warn(*args, **kwargs)

2023-08-11 07:15:33,916 - anomalib.models.components.feature_extractors.timm - WARNING - FeatureExtractor is deprecated. Use TimmFeatureExtractor instead. Both FeatureExtractor and TimmFeatureExtractor will be removed in a future release.

2023-08-11 07:15:35,087 - timm.models.helpers - INFO - Loading pretrained weights from url (https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/wide_resnet50_racm-8234f177.pth)

2023-08-11 07:15:35,549 - anomalib.utils.loggers - INFO - Loading the experiment logger(s)

2023-08-11 07:15:35,550 - anomalib.utils.callbacks - INFO - Loading the callbacks

/home/sakcay/Projects/anomalib/src/anomalib/utils/callbacks/__init__.py:142: UserWarning: Export option: None not found. Defaulting to no model export

warnings.warn(f"Export option: {config.optimization.export_mode} not found. Defaulting to no model export")

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - GPU available: True (cuda), used: True

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - TPU available: False, using: 0 TPU cores

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - IPU available: False, using: 0 IPUs

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - HPU available: False, using: 0 HPUs

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer(limit_train_batches=1.0)` was configured so 100% of the batches per epoch will be used..

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer(limit_val_batches=1.0)` was configured so 100% of the batches will be used..

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer(limit_test_batches=1.0)` was configured so 100% of the batches will be used..

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer(limit_predict_batches=1.0)` was configured so 100% of the batches will be used..

2023-08-11 07:15:35,561 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer(val_check_interval=1.0)` was configured so validation will run at the end of the training epoch..

2023-08-11 07:15:35,561 - anomalib - INFO - Training the model.

2023-08-11 07:15:35,664 - anomalib.data.mvtec - INFO - Found the dataset.

2023-08-11 07:15:35,685 - pytorch_lightning.utilities.rank_zero - INFO - You are using a CUDA device ('NVIDIA GeForce RTX 3090') that has Tensor Cores. To properly utilize them, you should set `torch.set_float32_matmul_precision('medium' | 'high')` which will trade-off precision for performance. For more details, read https://pytorch.org/docs/stable/generated/torch.set_float32_matmul_precision.html#torch.set_float32_matmul_precision

/home/sakcay/.local/lib/python3.10/site-packages/torchmetrics/utilities/prints.py:36: UserWarning: Metric `ROC` will save all targets and predictions in buffer. For large datasets this may lead to large memory footprint.

warnings.warn(*args, **kwargs)

/home/sakcay/.local/lib/python3.10/site-packages/pytorch_lightning/callbacks/model_checkpoint.py:613: UserWarning: Checkpoint directory /home/sakcay/Projects/anomalib/results/padim/mvtec/bottle/run/weights/lightning exists and is not empty.

rank_zero_warn(f"Checkpoint directory {dirpath} exists and is not empty.")

2023-08-11 07:15:36,684 - pytorch_lightning.accelerators.cuda - INFO - LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0,1]

/home/sakcay/.local/lib/python3.10/site-packages/pytorch_lightning/core/optimizer.py:183: UserWarning: `LightningModule.configure_optimizers` returned `None`, this fit will run with no optimizer

rank_zero_warn(

2023-08-11 07:15:36,688 - pytorch_lightning.callbacks.model_summary - INFO -

| Name | Type | Params

-------------------------------------------------------------------

0 | image_threshold | AnomalyScoreThreshold | 0

1 | pixel_threshold | AnomalyScoreThreshold | 0

2 | model | PadimModel | 24.9 M

3 | image_metrics | AnomalibMetricCollection | 0

4 | pixel_metrics | AnomalibMetricCollection | 0

5 | normalization_metrics | MinMax | 0

-------------------------------------------------------------------

24.9 M Trainable params

0 Non-trainable params

24.9 M Total params

99.450 Total estimated model params size (MB)

Epoch 0: 0%| | 0/10 [00:00<?, ?it/s]/home/sakcay/.local/lib/python3.10/site-packages/pytorch_lightning/loops/optimization/optimizer_loop.py:138: UserWarning: `training_step` returned `None`. If this was on purpose, ignore this warning...

self.warning_cache.warn("`training_step` returned `None`. If this was on purpose, ignore this warning...")

Epoch 0: 70%|████████████████2023-08-11 07:15:39,024 - anomalib.models.padim.lightning_model - INFO - Aggregating the embedding extracted from the training set.dation: 0it [00:00, ?it/s]

2023-08-11 07:15:39,176 - anomalib.models.padim.lightning_model - INFO - Fitting a Gaussian to the embedding collected from the training set.

Epoch 0: 100%|█████████████████████████████████████████████████████████████| 10/10 [00:31<00:00, 3.13s/it, loss=nan, pixel_F1Score=0.705, pixel_AUROC=0.983]2023-08-11 07:16:12,716 - pytorch_lightning.utilities.rank_zero - INFO - `Trainer.fit` stopped: `max_epochs=1` reached.

Epoch 0: 100%|█████████████████████████████████████████████████████████████| 10/10 [00:36<00:00, 3.60s/it, loss=nan, pixel_F1Score=0.705, pixel_AUROC=0.983]

2023-08-11 07:16:12,846 - anomalib.utils.callbacks.timer - INFO - Training took 36.16 seconds

2023-08-11 07:16:12,846 - anomalib - INFO - Loading the best model weights.

2023-08-11 07:16:12,846 - anomalib - INFO - Testing the model.

2023-08-11 07:16:12,850 - anomalib.data.mvtec - INFO - Found the dataset.

2023-08-11 07:16:12,850 - pytorch_lightning.utilities.rank_zero - INFO - You are using a CUDA device ('NVIDIA GeForce RTX 3090') that has Tensor Cores. To properly utilize them, you should set `torch.set_float32_matmul_precision('medium' | 'high')` which will trade-off precision for performance. For more details, read https://pytorch.org/docs/stable/generated/torch.set_float32_matmul_precision.html#torch.set_float32_matmul_precision

2023-08-11 07:16:12,851 - anomalib.utils.callbacks.model_loader - INFO - Loading the model from /home/sakcay/Projects/anomalib/results/padim/mvtec/bottle/run/weights/lightning/model-v2.ckpt

2023-08-11 07:16:14,951 - pytorch_lightning.accelerators.cuda - INFO - LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0,1]

Testing DataLoader 0: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:14<00:00, 4.85s/it]2023-08-11 07:16:30,522 - anomalib.utils.callbacks.timer - INFO - Testing took 15.568396091461182 seconds

Throughput (batch_size=32) : 5.331313483572217 FPS

Testing DataLoader 0: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:14<00:00, 4.91s/it]

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Test metric ┃ DataLoader 0 ┃

┡━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━┩

│ image_AUROC │ 0.9976190328598022 │

│ image_F1Score │ 0.9919999837875366 │

│ pixel_AUROC │ 0.9825913310050964 │

│ pixel_F1Score │ 0.7047178745269775 │

└───────────────────────────┴───────────────────────────┘

I feel the issue could be related to something else.

Patchcore ONNX Runtime

This has been fixed in one of the recent anomalib versions, so the ONNX version of the Patchcore model should work fine.

How to Calculate the Confidence Score and Label

Anomalib inferencers have

Please note that you can use the OpenVINO Inferencer to run the ONNX model. You don't need to write a separate script for ONNX.

Let us know if you have any other questions.
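On the confidence score and label question, here is a minimal sketch of how a raw anomaly score could be turned into a normalized score and a label. It assumes a min-max normalization centered on the threshold, which is what the `MinMax` and `AnomalyScoreThreshold` entries in the model summary above suggest. The `Prediction` class and both function names are hypothetical and are not anomalib's API; the threshold and min/max values would come from your own trained model.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical container for illustration; not an anomalib class."""

    score: float  # normalized anomaly score in [0, 1]
    label: bool  # True means the sample is flagged as anomalous


def normalize_min_max(raw: float, threshold: float, min_val: float, max_val: float) -> float:
    """Shift the raw score so the threshold maps to 0.5, then clip to [0, 1]."""
    if max_val <= min_val:
        raise ValueError("max_val must be greater than min_val")
    normalized = (raw - threshold) / (max_val - min_val) + 0.5
    return min(max(normalized, 0.0), 1.0)


def predict(raw: float, threshold: float, min_val: float, max_val: float) -> Prediction:
    """Label a sample anomalous when its raw score reaches the threshold."""
    score = normalize_min_max(raw, threshold, min_val, max_val)
    return Prediction(score=score, label=raw >= threshold)


if __name__ == "__main__":
    # Made-up numbers: threshold 5.0, observed score range [0.0, 10.0].
    print(predict(8.0, threshold=5.0, min_val=0.0, max_val=10.0))
    print(predict(2.0, threshold=5.0, min_val=0.0, max_val=10.0))
```

With this convention a normalized score of 0.5 sits exactly on the decision boundary, so scores above 0.5 correspond to the anomalous label.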


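For the Padim question, one quick way to check whether a config differs from the defaults is to compare the two as nested dictionaries. The sketch below is a generic helper, not part of anomalib; `diff_config` and `MISSING` are hypothetical names, and the two example dictionaries use illustrative keys and values rather than a copy of the shipped config. In practice you would load both YAML files into dictionaries first.

```python
from typing import Any

# Placeholder shown when a key exists in only one of the two configs.
MISSING = "<missing>"


def diff_config(
    default: dict[str, Any],
    current: dict[str, Any],
    prefix: str = "",
) -> dict[str, tuple[Any, Any]]:
    """Return {dotted.key: (default_value, current_value)} for every difference."""
    changes: dict[str, tuple[Any, Any]] = {}
    for key in sorted(set(default) | set(current)):
        path = f"{prefix}.{key}" if prefix else str(key)
        old = default.get(key, MISSING)
        new = current.get(key, MISSING)
        if isinstance(old, dict) and isinstance(new, dict):
            # Recurse into nested sections such as "model" or "dataset".
            changes.update(diff_config(old, new, path))
        elif old != new:
            changes[path] = (old, new)
    return changes


if __name__ == "__main__":
    # Illustrative values only.
    default_cfg = {"model": {"name": "padim", "backbone": "resnet18"}}
    current_cfg = {"model": {"name": "padim", "backbone": "wide_resnet50_2"}}
    for path, (old, new) in diff_config(default_cfg, current_cfg).items():
        print(f"{path}: {old} -> {new}")
```

If the only reported difference is the backbone, the config itself is unlikely to be the cause of the failure.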