Reason for 30s audio length #1118

Hello, I understand that Whisper can only access 30s of audio content at a time. What is the reason behind that? Is it because a window larger than 30s is harder to train? I would have assumed a larger window was feasible, since a GPU can speed up the process. Assuming one were to retrain the model from scratch, what are the benefits and drawbacks of using a smaller or a larger window?

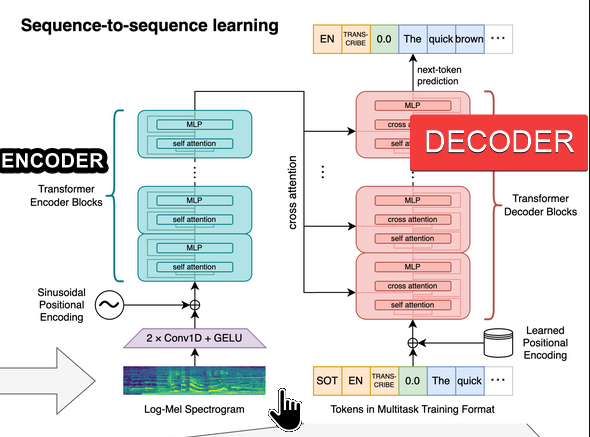

I'm not super academic about ML, so take this with a grain of salt, but speaking broadly, the Whisper model has basically the same structure as an image-to-text model that looks at a picture and comes up with a description of it. Instead of a picture, it looks at a 30s audio spectrogram.

The encoder layers turn that spectrogram into a sequence of features, and the decoder generates tokens by cross-attending to those features, keeping track of prior context (prompt) and of the parts that were already "transcribed/translated" (prefix). The model was trained on fixed 30s windows, which is why we are limited to 30s of audio. As for the window size itself: too short, and you'd lack surrounding context. You'd cut sentences more often, and a lot of them would cease to make sense. Too long, and you'd need larger and larger models to contain the complexity of the meaning you want the model to keep track of.
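To make the 30s window concrete, here is a rough sketch of the arithmetic behind it. The constants mirror the ones in the openai/whisper reference code (`whisper/audio.py`), and the stride-2 value reflects the encoder's second convolution. The helpers `pad_or_trim` and `split_into_windows` are simplified stand-ins written for this example, working on plain Python lists. In particular, the real `transcribe()` loop advances its window based on predicted timestamps rather than cutting fixed, back-to-back chunks as done here.

```python
# Constants as defined in the openai/whisper reference code (whisper/audio.py).
SAMPLE_RATE = 16_000   # samples per second
HOP_LENGTH = 160       # samples between spectrogram frames (10 ms)
CHUNK_LENGTH = 30      # seconds per window

N_SAMPLES = CHUNK_LENGTH * SAMPLE_RATE   # samples in one 30s window
N_FRAMES = N_SAMPLES // HOP_LENGTH       # mel frames fed to the encoder
ENCODER_STRIDE = 2                       # the encoder's second conv layer has stride 2
N_AUDIO_CTX = N_FRAMES // ENCODER_STRIDE # positions the decoder cross-attends to


def pad_or_trim(samples, length=N_SAMPLES):
    """Simplified stand-in: force a list of samples to exactly `length` items."""
    if len(samples) >= length:
        return samples[:length]
    return samples + [0.0] * (length - len(samples))


def split_into_windows(samples, length=N_SAMPLES):
    """Assumption for illustration: cut audio into fixed, non-overlapping windows.

    The last window is zero-padded so every window has the same size.
    """
    return [
        pad_or_trim(samples[start:start + length], length)
        for start in range(0, len(samples), length)
    ]


if __name__ == "__main__":
    seventy_seconds = [0.0] * (70 * SAMPLE_RATE)
    windows = split_into_windows(seventy_seconds)
    print(N_SAMPLES, N_FRAMES, N_AUDIO_CTX, len(windows))
```

The point of the sketch is that the encoder always sees the same number of positions, so shorter clips get padded and longer audio has to be processed window by window.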

I also have a similar question. I am wondering how audio length influences fine-tuning performance. To be specific, are there any requirements on audio length for fine-tuning in order to get a good WER, such as the proportion of data that should fall within a given range of audio lengths (say 15s to 25s)? And what if the audio used for fine-tuning is fairly short?
