-

|

Hi, great work, congrats! Would it be possible to add a new language to the model by fine-tuning it on my own dataset, or in some other way? Thanks. |


Replies: 6 comments 12 replies

-

|

We haven't tried fine-tuning, but it could be a good avenue for research evaluating Whisper models as pretrained representations for unseen languages. We have observed some transfer between linguistically adjacent languages, such as Asturian <-> Spanish (Castilian) or Cebuano <-> Filipino (Tagalog). So if your language of interest has an adjacent language that works acceptably in Whisper, you could fine-tune on your dataset using that adjacent language's token. |
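To make the "use that language token" idea concrete, here is a minimal sketch of how the decoder prefix for a fine-tuning example might be built when borrowing the token of a related language. The `PROXY_LANGUAGE` mapping and the `decoder_prefix` helper are hypothetical names invented for illustration; only the special-token layout (`<|startoftranscript|>`, a language token, a task token, and optionally `<|notimestamps|>`) follows Whisper's multitask format.

```python
# Hypothetical mapping from a language Whisper does not list to the code of a
# related language it does support (an assumption made for illustration only).
PROXY_LANGUAGE = {
    "asturian": "es",  # borrow the Spanish token
    "cebuano": "tl",   # borrow the Tagalog token
}


def decoder_prefix(language, task="transcribe", timestamps=False):
    """Return the special tokens that would precede the target transcript."""
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task}")
    code = PROXY_LANGUAGE[language.lower()]
    tokens = ["<|startoftranscript|>", f"<|{code}|>", f"<|{task}|>"]
    if not timestamps:
        # Plain-text fine-tuning data usually carries no timestamp tokens.
        tokens.append("<|notimestamps|>")
    return tokens


print(decoder_prefix("Asturian"))
```

With Hugging Face Transformers, the same effect is typically obtained by passing the related language's name when loading the tokenizer or processor (for example `language="spanish", task="transcribe"`), so that every training label is prefixed with that language's token.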


-

|

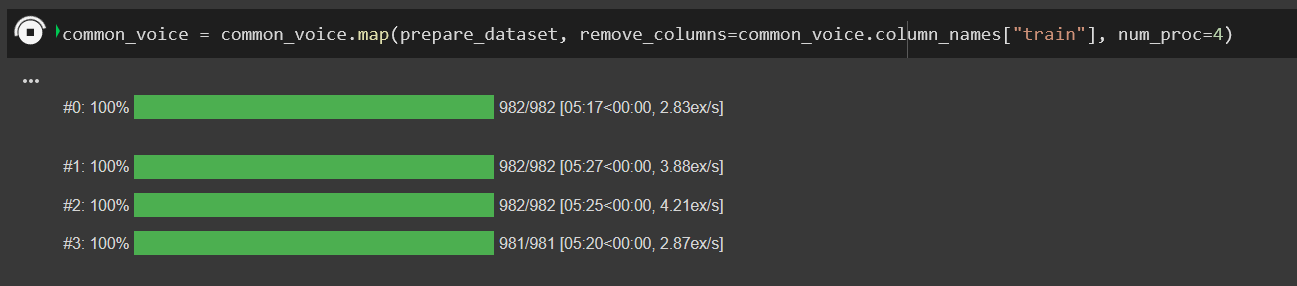

Check out this blog post on fine-tuning Whisper for multilingual ASR with Hugging Face Transformers: https://huggingface.co/blog/fine-tune-whisper. It provides a step-by-step guide to fine-tuning, from data preparation through evaluation 🤗 There's a Google Colab, so you can also run it as a notebook 😉 |


-

|

Thank you! 🙂 |


-

|

@sanchit-gandhi, can we fine-tune Whisper for the language identification task using Hugging Face? |


-

|

Hi, may I ask whether we can fine-tune Whisper on a new language for the language identification task, so that Whisper can detect the new language? |


-

|

I’ve completed a project where I fine-tuned Whisper-Tiny for translation tasks, and it worked well. You can check out my repo to see the process I followed; it may help with the problem you're encountering. |

