Accelerate the Whisper decoding with CTranslate2 #937

Replies: 18 comments 113 replies

-

Do you have any templates/examples of how you would transcribe longer than 30s and does the
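On audio longer than 30 s: a minimal sketch, assuming the `faster-whisper` package from the repository linked in the original post is installed and that its `WhisperModel.transcribe` accepts a file of any length and yields segments lazily, as its README describes. The model size, device settings, and the helper names `format_segment` and `transcribe_long` are illustrative, not part of the library.

```python
def format_segment(start, end, text):
    """Render one segment as '[start -> end] text', with times in seconds."""
    return "[%.2fs -> %.2fs] %s" % (start, end, text.strip())


def transcribe_long(path, model_size="large-v2"):
    """Transcribe an audio file of arbitrary length and return formatted lines.

    Assumes faster-whisper is installed. The import is local so this module
    can be loaded without it.
    """
    from faster_whisper import WhisperModel

    model = WhisperModel(model_size, device="cuda", compute_type="float16")
    # transcribe() returns a generator of segments plus an info object.
    # Iterating over the generator is what actually runs the model.
    segments, info = model.transcribe(path, beam_size=5)
    return [format_segment(s.start, s.end, s.text) for s in segments]
```

Calling `transcribe_long("audio.mp3")` (a hypothetical file name) would return one line per segment, each with its start and end time.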

-

Just ran into the following error trying to run this locally:

-

Hi! Is there a way to convert Whisper weights that I have fine-tuned so that they work with your code? Thanks.

-

Hi, I get this error: TypeError: WhisperModel.__init__() got an unexpected keyword argument 'num_workers'
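A likely cause of this error is an installed version that predates the `num_workers` argument, in which case upgrading the package should help. The sketch below uses only the standard library to check whether a constructor accepts a given keyword. The helper name `accepts_keyword` is hypothetical.

```python
import inspect


def accepts_keyword(callable_obj, name):
    """Return True if callable_obj can be called with the keyword `name`."""
    params = inspect.signature(callable_obj).parameters
    keyword_kinds = (
        inspect.Parameter.POSITIONAL_OR_KEYWORD,
        inspect.Parameter.KEYWORD_ONLY,
    )
    if name in params and params[name].kind in keyword_kinds:
        return True
    # A **kwargs parameter accepts any keyword.
    return any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values())
```

With the package installed, `accepts_keyword(WhisperModel, "num_workers")` returning `False` would suggest that the installed version is too old for that argument.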

-

I am using the Whisper large model to transcribe English as well as Spanish audio. What are the best beam_size, compute_type, and quantization parameters? Currently I am using beam_size=5, compute_type=float16, and quantization=float16.
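For comparing settings: a sketch, assuming `faster-whisper` is installed and that the three device/precision combinations below match those listed in its README (FP16 on GPU, INT8 on GPU, INT8 on CPU). The preset labels and the helpers `load_model` and `transcribe_text` are illustrative. Which combination is best depends on the hardware and the audio, so it is worth measuring rather than assuming.

```python
# Load-time precision options. The short labels are hypothetical names
# chosen for this example only.
PRESETS = {
    "gpu_fp16": {"device": "cuda", "compute_type": "float16"},
    "gpu_int8": {"device": "cuda", "compute_type": "int8_float16"},
    "cpu_int8": {"device": "cpu", "compute_type": "int8"},
}


def load_model(preset, model_size="large-v2"):
    """Load a model with one of the presets (requires faster-whisper)."""
    from faster_whisper import WhisperModel

    return WhisperModel(model_size, **PRESETS[preset])


def transcribe_text(model, path, language=None, beam_size=5):
    """Return (language, text). language=None lets the model detect it;
    pass "en" or "es" to force English or Spanish."""
    segments, info = model.transcribe(path, beam_size=beam_size, language=language)
    text = " ".join(segment.text.strip() for segment in segments)
    return info.language, text
```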

-

I worked on a new optimization to further reduce the memory usage (20 to 30% reduction) and slightly increase the execution speed. See the latest results in the Benchmark section of the README.

-

I encountered this error in the GPU implementation. I hope you can help me:

Could not load library libcudnn_ops_infer.so.8. Error: libcudnn_ops_infer.so.8: cannot open shared object file: No such file or directory
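This message indicates that the dynamic loader could not find the cuDNN 8 shared library. A common remedy, stated here as an assumption about the environment, is to install cuDNN 8 for the CUDA version in use and make its directory visible to the loader (for example through `LD_LIBRARY_PATH` on Linux). The sketch below checks, with the standard library only, whether a shared library can be opened. The helper name `can_load` is hypothetical.

```python
import ctypes


def can_load(library_name):
    """Return True if the dynamic loader can open the named shared library."""
    try:
        ctypes.CDLL(library_name)
    except OSError:
        # Raised when the file is missing or cannot be loaded.
        return False
    return True


if __name__ == "__main__":
    name = "libcudnn_ops_infer.so.8"
    status = "found" if can_load(name) else "NOT found"
    print("%s: %s" % (name, status))
```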

-

@guillaumekln First of all, thanks for this amazing library. It's a huge improvement and should really be the default implementation. I have a question on speaker separation. The audio files I'm working with have two speakers, one per stereo channel. How feasible would it be to process those files using faster-whisper? I can see you're mixing the audio down to mono to do the transcription, but I couldn't figure out from the code how you'd process the two channels independently.
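One possible workaround, independent of the library's internals, is to split the stereo file into two mono files first and transcribe each one separately. The sketch below does the split with Python's standard `wave` module and assumes an uncompressed PCM WAV input. The function name `split_stereo_wav` is hypothetical.

```python
import wave


def split_stereo_wav(src_path, left_path, right_path):
    """Write the left and right channels of a stereo PCM WAV to two mono files."""
    with wave.open(src_path, "rb") as src:
        if src.getnchannels() != 2:
            raise ValueError("expected a stereo (2-channel) WAV file")
        width = src.getsampwidth()  # bytes per sample
        rate = src.getframerate()
        frames = src.readframes(src.getnframes())

    # Each frame holds one left sample followed by one right sample.
    frame_size = 2 * width
    left = bytearray()
    right = bytearray()
    for i in range(0, len(frames), frame_size):
        left += frames[i:i + width]
        right += frames[i + width:i + frame_size]

    for path, data in ((left_path, left), (right_path, right)):
        with wave.open(path, "wb") as dst:
            dst.setnchannels(1)
            dst.setsampwidth(width)
            dst.setframerate(rate)
            dst.writeframes(bytes(data))
```

Each resulting mono file can then be transcribed on its own, and the two sets of segment timestamps merged afterwards to reconstruct who spoke when.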

-

Hello! Sorry for my bad English, it's not my native language. I'm a beginner in this language (Python), so some of my questions may sound basic. Today I already use How do I use your script in my native language (Portuguese)? I understood that I must run Again, I apologize for such a basic question and my bad English.

-

The repository faster-whisper has been updated to support word-level timestamps! You can find a basic usage example in the README.
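A sketch of what word-level timestamps look like in use, assuming `faster-whisper` is installed and that `transcribe` accepts `word_timestamps=True` and exposes `start`, `end`, and `word` on each entry of `segment.words`, as in the README example. The helper names are illustrative.

```python
def format_words(words):
    """Format word objects that expose .start, .end, and .word attributes."""
    return ["[%.2fs -> %.2fs] %s" % (w.start, w.end, w.word) for w in words]


def print_word_timestamps(path, model_size="large-v2"):
    """Print one line per word with its start and end time in seconds."""
    from faster_whisper import WhisperModel

    model = WhisperModel(model_size)
    segments, _ = model.transcribe(path, word_timestamps=True)
    for segment in segments:
        for line in format_words(segment.words):
            print(line)
```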

-

Works amazingly! I will switch to using this on freesubtitles.ai. Thanks for the great work!

-

I need your help with a problem. I have a dual-channel audio recording of a call between two people. How do I separate the recognition results by channel?

-

Hi @guillaumekln, because I use a custom model, my model cannot process audio longer than 25 s. Can I convert the weights from a Hugging Face model to a Whisper model and use CTranslate2 so that my model can process audio longer than 25 s? Thank you.
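On converting Hugging Face weights: a sketch, assuming the fine-tuned model is saved in Hugging Face Transformers format and that the `ct2-transformers-converter` command installed with CTranslate2 is on the `PATH`. The helper names and default values are illustrative. Whether conversion alone lifts the 25 s limit depends on how the model was fine-tuned, which this example cannot determine.

```python
import subprocess


def build_converter_command(model, output_dir, quantization="float16", copy_files=()):
    """Build the argument list for the CTranslate2 Transformers converter.

    model: a Hugging Face model name or a local directory.
    copy_files: extra files to copy next to the converted weights,
    for example ("tokenizer.json",) when that file exists.
    """
    command = [
        "ct2-transformers-converter",
        "--model", model,
        "--output_dir", output_dir,
        "--quantization", quantization,
    ]
    if copy_files:
        command += ["--copy_files", *copy_files]
    return command


def convert(model, output_dir, **options):
    """Run the converter and raise CalledProcessError if it fails."""
    subprocess.run(build_converter_command(model, output_dir, **options), check=True)
```

The resulting directory can then be passed as the model path when loading the model with faster-whisper.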

-

I am new to this... Please help me with this error. I do not understand what it means.

-

I have fine-tuned the Whisper small model for a specific use case, and I have also quantized it for faster transcription using

Is there any way to improve the transcription speed for a 10-second audio clip on CPU?
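Before tuning, it helps to measure. The sketch below times a transcription callable with the standard library and, as assumptions about what may help on CPU rather than measured results, loads the model with `compute_type="int8"`, an explicit `cpu_threads` value, and `beam_size=1`. It assumes `faster-whisper` is installed. The helper names are hypothetical. Because `transcribe` returns a generator, the segments must be fully consumed inside the timed call, otherwise almost no work is measured.

```python
import time


def time_transcription(transcribe, repeats=3):
    """Return the best wall-clock time in seconds over `repeats` runs.

    `transcribe` is a zero-argument callable that runs one full transcription.
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        transcribe()
        best = min(best, time.perf_counter() - start)
    return best


def make_cpu_runner(model_path, audio_path, cpu_threads=4, beam_size=1):
    """Build a callable that transcribes audio_path on CPU (requires faster-whisper)."""
    from faster_whisper import WhisperModel

    model = WhisperModel(
        model_path, device="cpu", compute_type="int8", cpu_threads=cpu_threads
    )

    def run():
        segments, _ = model.transcribe(audio_path, beam_size=beam_size)
        return list(segments)  # consume the generator so the model actually runs

    return run
```

Comparing `time_transcription(make_cpu_runner(...))` across a few `cpu_threads` and `beam_size` values shows which setting matters most on a given machine.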

-

Can I use it for text-to-text tasks?

-

Hello,

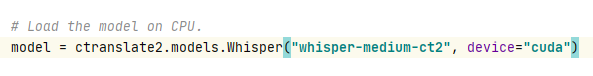

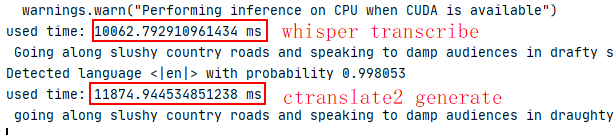

We integrated the Whisper model in CTranslate2, which is a fast inference engine for Transformer models. The project implements many useful inference features such as optimized CPU and GPU execution, asynchronous execution, multi-GPU execution, 8-bit quantization, etc.

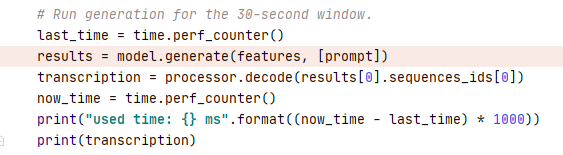

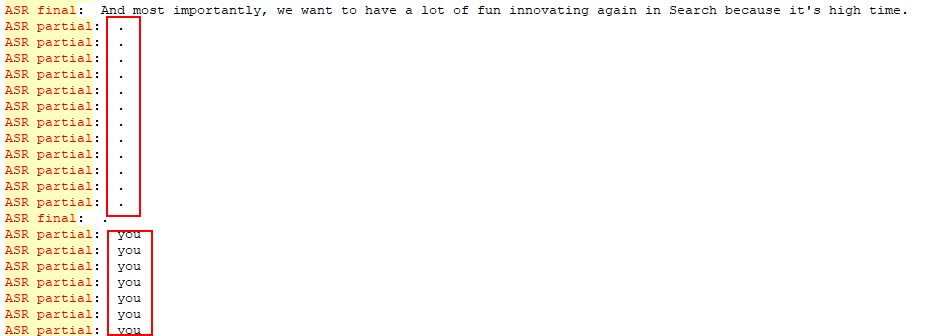

The library only implements the decoding part (equivalent to `model.decode` here), but you can find a possible implementation of the full transcription logic in this repository: https://github.com/guillaumekln/faster-whisper
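To illustrate what "only the decoding part" means, here is a sketch of decoding a single window of at most 30 s directly with CTranslate2. It follows the pattern of the Whisper example in the CTranslate2 documentation as recalled here, so treat the exact calls as assumptions to verify against the installed version. It also assumes `ctranslate2` and `transformers` are installed and that `model_dir` holds a converted model. The function names and the default `openai/whisper-tiny` processor are illustrative.

```python
def build_prompt_tokens(language_token, task="transcribe", timestamps=False):
    """Return the special tokens that start a Whisper decoding prompt."""
    tokens = ["<|startoftranscript|>", language_token, "<|%s|>" % task]
    if not timestamps:
        tokens.append("<|notimestamps|>")
    return tokens


def decode_window(audio, model_dir, hf_name="openai/whisper-tiny"):
    """Decode one window of audio (1-D float32 array sampled at 16 kHz).

    Anything longer than 30 s needs the windowing logic that the
    faster-whisper repository implements on top of this step.
    """
    import ctranslate2
    import transformers

    # Compute the log-Mel features expected by the model.
    processor = transformers.WhisperProcessor.from_pretrained(hf_name)
    inputs = processor(audio, return_tensors="np", sampling_rate=16000)
    features = ctranslate2.StorageView.from_array(inputs.input_features)

    model = ctranslate2.models.Whisper(model_dir)
    # detect_language returns, per batch item, (token, probability) pairs.
    language_token, _probability = model.detect_language(features)[0][0]

    prompt = processor.tokenizer.convert_tokens_to_ids(
        build_prompt_tokens(language_token)
    )
    result = model.generate(features, [prompt])[0]
    return processor.decode(result.sequences_ids[0])
```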

For example, here's the transcription time of 13 minutes of audio on a V100 for the same accuracy:

Hopefully this can be useful to some of you!

Feel free to ask questions here.

Best,

Guillaume
