
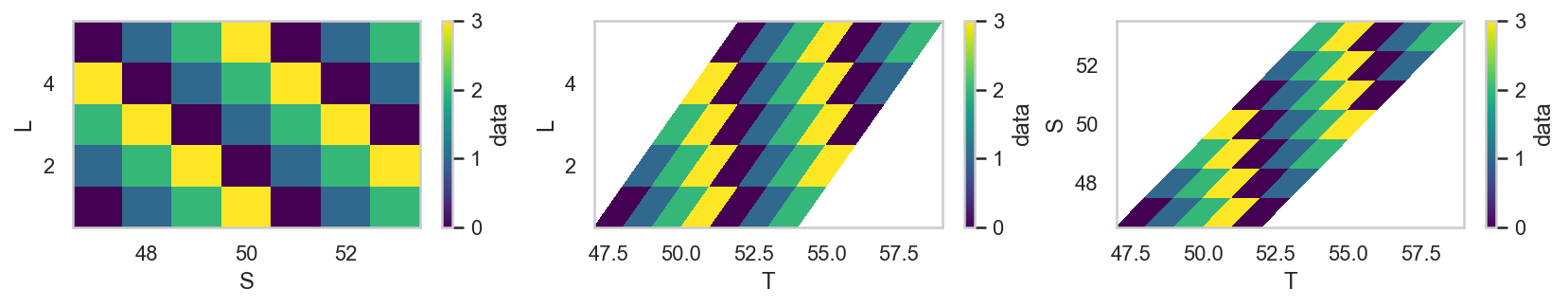

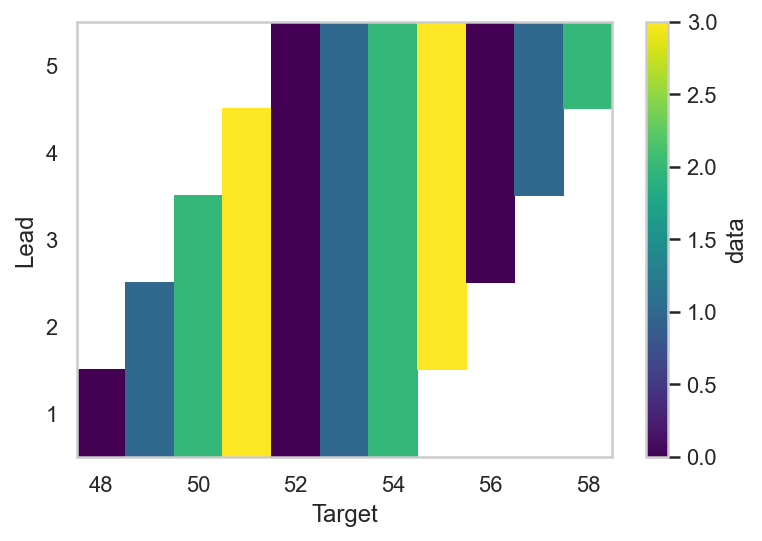

Weather/climate data often uses two time dimensions: start time (S; the initial time of the forecast integration) and lead time (L; how far the forecast integration extends beyond the initial time). There is also target time (T; the date being forecast). The three are related by T = S + L, so any two of the three time dimensions are sufficient.

My question: what is the xarray way of changing the dimensions from S & L to T & L, where T = S + L?

I have an example dataset with S & L, and the data can be plotted (sometimes called chiclet charts) using any two of the three coordinates, albeit with some slanted boxes.

To create a dataset with the same data but with T & L dimensions, I stacked S & L, replaced the values of S with T (using the idea from here), and unstacked. It seems to work.
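A minimal sketch of the stack, replace, unstack approach described above, assuming a recent xarray and a synthetic dataset (daily start dates, leads in days; the function name `sl_to_tl`, the variable name `data` and all values are hypothetical). Instead of renaming a MultiIndex level in place, it drops the (S, L) index and rebuilds one from (T, L), which avoids relying on version-specific level naming. It is meant for in-memory data; see the dask caveat in the first reply.

```python
import numpy as np
import pandas as pd
import xarray as xr

# Hypothetical example data: 3 daily start dates (S) and leads (L) of 0-2 days
S = pd.date_range("2000-01-01", periods=3, freq="D")
L = pd.to_timedelta([0, 1, 2], unit="D")
ds = xr.Dataset(
    {"data": (("S", "L"), np.arange(9.0).reshape(3, 3))},
    coords={"S": S, "L": L},
)


def sl_to_tl(ds):
    """Return a copy of ds with dimensions (T, L) instead of (S, L), where T = S + L."""
    stacked = ds.stack(SL=["S", "L"])
    target = stacked["S"].values + stacked["L"].values  # T for every (S, L) pair
    lead = stacked["L"].values
    # Drop the (S, L) MultiIndex and its level coordinates ...
    stacked = stacked.drop_vars(["SL", "S", "L"], errors="ignore")
    # ... and rebuild the MultiIndex from (T, L) instead
    stacked = stacked.assign_coords(T=("SL", target), L=("SL", lead))
    stacked = stacked.set_index(SL=["T", "L"])
    # Unstack; (T, L) pairs not reached by any start date are filled with NaN
    return stacked.unstack("SL")


ds_TL = sl_to_tl(ds)
print(ds_TL["data"].shape)  # (5, 3): 5 target dates, 3 leads
```

Each (T, L) cell holds the value from start date S = T - L, so the diagonals of the (S, L) array become the columns of the (T, L) array.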


Stacking is a reshape, which will get you in trouble if you use dask chunking along S and L. Does climpred help? cc @aaronspring


@mktippett I tried your code above and ran into the traceback below. (EDIT: works with …)

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
Input In [4], in <cell line: 3>()
      1 ds_stacked = ds.chunk({"L":1}).stack(SL=['S', 'L'])
      2 T = ds_stacked['S'] + ds_stacked['L']
----> 3 ds_stacked_TL = ds_stacked.reset_index('S', drop=True).assign_coords(S=('SL', T)).set_index(SL='S',append=True)
      4 ds_TL = ds_stacked_TL.unstack().rename({'S': 'Target', 'SL_level_0': 'Lead'})
      5 display(ds_TL.data) # 250 tasks

File ~/mambaforge/envs/xr/lib/python3.9/site-packages/xarray/core/common.py:592, in DataWithCoords.assign_coords(self, coords, **coords_kwargs)
    590 data = self.copy(deep=False)
    591 results: dict[Hashable, Any] = self._calc_assign_results(coords_combined)
--> 592 data.coords.update(results)
    593 return data

File ~/mambaforge/envs/xr/lib/python3.9/site-packages/xarray/core/coordinates.py:162, in Coordinates.update(self, other)
    160 other_vars = getattr(other, "variables", other)
    161 self._maybe_drop_multiindex_coords(set(other_vars))
--> 162 coords, indexes = merge_coords(
    163 [self.variables, other_vars], priority_arg=1, indexes=self.xindexes
    164 )
    165 self._update_coords(coords, indexes)

File ~/mambaforge/envs/xr/lib/python3.9/site-packages/xarray/core/merge.py:564, in merge_coords(objects, compat, join, priority_arg, indexes, fill_value)
    560 coerced = coerce_pandas_values(objects)
    561 aligned = deep_align(
    562 coerced, join=join, copy=False, indexes=indexes, fill_value=fill_value
    563 )
--> 564 collected = collect_variables_and_indexes(aligned)
    565 prioritized = _get_priority_vars_and_indexes(aligned, priority_arg, compat=compat)
    566 variables, out_indexes = merge_collected(collected, prioritized, compat=compat)

File ~/mambaforge/envs/xr/lib/python3.9/site-packages/xarray/core/merge.py:365, in collect_variables_and_indexes(list_of_mappings, indexes)
    362 indexes.pop(name, None)
    363 append_all(coords, indexes)
--> 365 variable = as_variable(variable, name=name)
    366 if name in indexes:
    367 append(name, variable, indexes[name])

File ~/mambaforge/envs/xr/lib/python3.9/site-packages/xarray/core/variable.py:125, in as_variable(obj, name)
    123 elif isinstance(obj, tuple):
    124 if isinstance(obj[1], DataArray):
--> 125 raise TypeError(
    126 "Using a DataArray object to construct a variable is"
    127 " ambiguous, please extract the data using the .data property."
    128 )
    129 try:
    130 obj = Variable(*obj)

TypeError: Using a DataArray object to construct a variable is ambiguous, please extract the data using the .data property.
```
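The error message itself points at the fix: when building a coordinate from a `(dims, values)` tuple, pass the underlying array (`.data` or `.values`) rather than a DataArray. A minimal, self-contained illustration with hypothetical names (`da`, `shifted`, `y`), not taken from the code above:

```python
import numpy as np
import xarray as xr

da = xr.DataArray(np.arange(3.0), dims="x")
shifted = da + 10  # a DataArray, playing the role of T in the traceback

# Passing ("x", shifted) may raise the TypeError above in some xarray versions;
# passing the raw array via .data is unambiguous.
out = da.assign_coords(y=("x", shifted.data))
print(out["y"].values)  # [10. 11. 12.]
```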


@mktippett I also found an alternative solution using a loop, which I had used previously:
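The loop code itself did not survive in this thread. As a hedged sketch of what a loop-based approach can look like (not necessarily the code referred to here): add a 2-D coordinate T(S, L) = S + L, select one lead at a time so that T becomes 1-D along S, swap S for T, and concatenate the leads back together with an outer join on T. The data and the name `sl_to_tl_loop` are hypothetical.

```python
import numpy as np
import pandas as pd
import xarray as xr

# Hypothetical example data: 3 daily start dates (S) and leads (L) of 0-2 days
S = pd.date_range("2000-01-01", periods=3, freq="D")
L = pd.to_timedelta([0, 1, 2], unit="D")
ds = xr.Dataset(
    {"data": (("S", "L"), np.arange(9.0).reshape(3, 3))},
    coords={"S": S, "L": L},
)


def sl_to_tl_loop(ds):
    """Loop over leads, swapping S for T = S + L, then concatenate along L."""
    ds = ds.assign_coords(T=ds["S"] + ds["L"])  # 2-D target-time coordinate
    pieces = []
    for lead in ds["L"].values:
        one = ds.sel(L=lead)  # T is now 1-D along S; L is a scalar coordinate
        # Make T the dimension and drop S so it is not concatenated as a 2-D coord
        pieces.append(one.swap_dims({"S": "T"}).drop_vars("S"))
    # Each lead covers a shifted range of T; the outer join fills gaps with NaN
    return xr.concat(pieces, dim="L", join="outer")


ds_TL = sl_to_tl_loop(ds)
print(ds_TL["data"].shape)  # (3, 5): 3 leads, 5 target dates
```

Because each iteration only touches one lead, this avoids the stack/unstack reshape, which matters for the dask chunking concern raised above.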


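OK, following @dcherian's chunking point: when the data is chunked, the stacking approach

```python
# Chunk along L, then stack S and L into a single MultiIndex dimension SL
ds_stacked = ds.chunk({"L": 1}).stack(SL=['S', 'L'])
# Target time for every (S, L) pair
T = ds_stacked['S'] + ds_stacked['L']
# Replace the S level by T; pass T.data, since a DataArray inside the tuple
# raises the TypeError shown above in some xarray versions
ds_stacked_TL = ds_stacked.reset_index('S', drop=True).assign_coords(S=('SL', T.data)).set_index(SL='S', append=True)
# Unstack back to 2-D and give the dimensions their new names
ds_TL = ds_stacked_TL.unstack().rename({'S': 'Target', 'SL_level_0': 'Lead'})
display(ds_TL.data)  # 250 tasks
```

produces 250 tasks, whereas the swap approach

```python
import xarray as xr

# Select one lead at a time, swap S for T, and concatenate the leads along L
swap = xr.concat([ds.chunk({"L": 1}).sel(L=l).swap_dims({"S": "T"}) for l in ds.L], "L").data
display(swap)  # 50 tasks
```

produces only 50. But overall, the skill result is much less data-heavy than the initialized inputs, so the skill calculation that comes before this step is probably much more demanding in memory and compute.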
